Connectionism

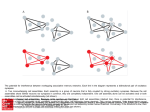

Summer 2011
Monday, 8/1

As you’re working on your paper
• Make sure to state your thesis and the structure of your argument in the very first paragraph.
• Help the reader (me!) by including signposts of where you are in the argument.
• Ask yourself what the point of each paragraph is and how it contributes to your argument.
• Give reasons for your claims! Don’t make unsupported assertions.

Neural Networks
• The brain can be thought of as a highly complex, non-linear, parallel computer whose structural constituents are neurons. There are billions of neurons in the brain.
• The computational properties of neurons are the reason we are more interested in neurons than in any of the non-neuronal cells in the brain.

Neural Networks
• Consider a simple recognition task, e.g. matching an image with a stored photograph.
• To perform the task, a computer must compare the image with thousands of stored photographs.
• At the end of all the comparisons, the computer may output the photograph that best matches the image.
• If the photograph database is as large as the one in our memory, this may take several hours.
• But our brain can do this instantly!

Neural Networks
• A silicon chip can perform a computation in nanoseconds (10^-9 seconds).
• But neuronal computations take milliseconds (10^-3 seconds), which is six orders of magnitude slower!
• Yet it seems that our computational capability (processing speed) is enormously greater than that of the typical computer.
• How is this possible?

Neural Networks
• The answer seems to lie in the massively parallel structure of the brain, which includes trillions of interconnections between neurons.

Artificial Neural Networks
• Inspired by the organization of the brain.
• Like the brain, they are composed of many simple processors linked in parallel.
• In the brain, the simple processors are neurons and the connections are axons and synapses.
• In connectionist theory, the simple processing elements (much simpler than neurons) are called units, and the connections are numerically weighted links between these units.
• Each unit takes inputs from a small group of neighboring units and passes outputs to a small group of neighbors.

NETtalk
• An artificial neural network that can be trained to pronounce English words.
• Consists of about 300 units (neurons) arranged in three layers: an input layer, which reads the words; an output layer, which generates speech sounds, or phonemes; and a middle “hidden layer,” which mediates between the other two.
• The units are joined to one another by 18,000 synapses: adjustable connections whose strengths can be turned up or down.

NETtalk
• At first, the volume controls are set at random and NETtalk is a structureless, homogenized tabula rasa. Provided with a list of words, it babbles incomprehensibly. But some of its guesses are better than others, and they are reinforced by adjusting the strengths of the synapses according to a set of learning rules.
• After a half day of training, the pronunciations become clearer and clearer until NETtalk can recognize some 1,000 words. In a week, it can learn 20,000.

NETtalk
• NETtalk is not provided with any rules for how different letters are pronounced under different circumstances. (It has been argued that “ghiti” could be pronounced “fish”: “gh” as in “enough” and “ti” as in “nation.”)
• But once the system has evolved, it acts as though it knows the rules. They become implicitly coded in the network of connections, though no one has any idea where the rules are located or what they look like. (On the surface, there’s just “numerical spaghetti.”)

Back-Propagation
• The network begins with a set of randomly selected connection weights.
• It is then exposed to a large number of input patterns.
• For each input pattern, some (initially incorrect) output is produced.
• An automatic supervisory system monitors the output, compares it to the target output, and calculates small adjustments to the connection weights.
• This is repeated until (often) the network solves the problem and yields the desired input-output profile.

Distributed Representation
• A connectionist system’s knowledge base does not consist in a body of declarative statements written out in a formal notation.
• Rather, it inheres in the set of connection weights and the unit architecture.
• The information active during the processing of a specific input may be equated with the transient activation patterns of the hidden units.
• An item of information has a distributed representation if it is expressed by the simultaneous activity of a number of units.

Superpositional Coding
• Partially overlapping use of distributed resources, where the overlap is informationally significant.
• For example, the activation pattern for a black panther may share some of the substructure of the activation pattern for a cat.
• The public language words “cat” and “panther” display no such overlap.

“Free” Generalizations
• A benefit of connectionist architecture.
• Generalizations occur because a new input pattern, if it resembles an old one in some respects, yields a response that’s rooted in that partial overlap.

Graceful Degradation
• Another benefit of connectionist architecture.
• The ability of the system to produce sensible responses given some systematic damage.
• Such damage tolerance is possible in virtue of the use of distributed, superpositional storage schemes.
• This is similar to what goes on in our brains. Compare: messing with the wiring in a computer.

Sub-symbolic Representation
• Physical symbol systems displayed semantic transparency: familiar words and ideas were rendered as simple inner symbols.
• Connectionist approaches introduce greater distance between daily talk and the contents manipulated by the computational system.
• The contentful elements in a sub-symbolic program do not reflect our ways of thinking about the task domain.
• The structure that’s represented by a large pattern of unit activity may be too rich and subtle to be captured in everyday language.

Post-training Analysis
How do we figure out what knowledge and strategies the network is actually using to solve the problems in its task domain?
1. Artificial lesions.
2. Statistical analysis, e.g. PCA, cluster analysis.

Recurrent Neural Networks
• “Second generation” neural networks.
• Geared towards producing patterns that are extended in time (e.g. commands to produce a running motion) and towards recognizing temporally extended patterns (e.g. facial motions).
• Include a feedback loop that “recycles” some aspects of the network’s activity at time t1 along with the new inputs arriving at t2.
• The traces that are preserved act as short-term memory, enabling the network to generate new responses that depend both on current input and on the previous activity of the network.

Dynamical Connectionism
• “Third generation” connectionism.
• Puts even greater stress on dynamic and time-involving properties.
• Introduces more neurobiologically realistic features, including special-purpose units, more complex connectivity, computationally salient time delays in processing, deliberate use of noise, etc.
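
The feedback loop described under Recurrent Neural Networks can be illustrated with a minimal sketch. This is not taken from the slides: the single-unit setup, the function name `run_recurrent_unit`, and the weights `w_in` and `w_rec` are hypothetical, hand-picked values chosen only to show how recycled activity acts as a short-term memory trace.

```python
import math


def run_recurrent_unit(inputs, w_in=1.0, w_rec=0.5):
    """Feed a sequence through one recurrent unit and return its activations.

    w_in and w_rec are illustrative, hand-picked weights (assumptions,
    not trained values).
    """
    h = 0.0  # activity carried over from the previous time step
    trace = []
    for x in inputs:
        # New activity depends on the current input AND the recycled activity.
        h = math.tanh(w_in * x + w_rec * h)
        trace.append(h)
    return trace


# Both sequences end with the same input (0.0) but have different histories.
after_one = run_recurrent_unit([1.0, 0.0])
after_zero = run_recurrent_unit([0.0, 0.0])
print(after_one[-1], after_zero[-1])
```

The final input is identical in both runs, yet the final activations differ, because the unit that saw 1.0 at the earlier step still carries a trace of it. A purely feed-forward unit would respond the same way both times.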