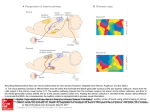

Tot 10

Auditory Brain Development in Children with Hearing Loss – Part Two

By Jace Wolfe, PhD, & Joanna Smith, MS

The Hearing Journal, November 2016

Editor’s Note: This is the conclusion of a two-part article. The first part was published in the October 2016 issue.

Dr. Wolfe, left, is the director of audiology at Hearts for Hearing and an adjunct assistant professor at the University of Oklahoma Health Sciences Center and Salus University. Ms. Smith, right, is a founder and the executive director of Hearts for Hearing in Oklahoma City.

5. The secondary auditory cortex: For sale to the highest bidder!

Although the activity observed in the primary auditory cortex was certainly interesting, the most relevant finding of the Nishimura et al. study was the activity they observed in the secondary auditory cortex (Nature. 1999;397[6715]:116). Little to no activity was observed in the secondary auditory cortex while participants listened to running speech, but robust neural activity was observed in the area when participants observed sign language (Fig. 6). This finding was one of the most prominent early reports of cross-modal reorganization in the secondary auditory cortices of people who are born with severe to profound hearing loss and deprived of access to intelligible speech during the first few years of life.

Figure 6. PET scan imaging results showing neural responses in the brain of a prelingually deafened adult with CI after auditory deprivation since birth. Responses are observed in a. the occipital lobe (in blue) in response to meaningless hand movements, b. only the secondary auditory cortex (in yellow) in response to a story told with sign language, and c. only in the primary auditory cortex contralateral to the implanted ear when a story is spoken while the participant used the cochlear implant for the left ear (Reproduced with permission: Nature. 1999;397[6715]:116). Panel labels: horizontal sections relative to the intercommissural plane at 10 mm below, 4 mm above, and 8 mm above.

Stated differently, in the absence of access to intelligible speech from the primary auditory cortex, the secondary auditory cortex is colonized by the visual system to aid in visual function. Numerous published reports have shown similar findings over the past 15 years, with some indicating activity in the secondary auditory cortex in response to tactile stimulation as well (Brain Res Rev. 2007;56[1]:259). The acquisition of the secondary auditory cortex by other sensory modalities likely explains why people who are born deaf without sufficient access to auditory stimuli develop exceptionally adept abilities in some areas that involve other sensory functions (e.g., peripheral vision is better in people who are born deaf without access to sound during the critical period) (Trends Cogn Sci. 2006;10[11]:512). Since such reorganization occurs outside of the primary auditory cortex, a functional disconnection between the primary and secondary cortices was postulated (Brain Res Rev. 2007;56[1]:259).

Recent research in Dr. Andrej Kral’s laboratory investigated activity in single neurons in the secondary auditory cortex in response to cochlear implants (CIs) (J Neurosci. 2016;36[23]:6175). They demonstrated that while some anatomical fiber tracts among cortical areas and thalamus persist in deafness and the secondary cortex preserves some auditory responsiveness, there is also an increased visual responsiveness in the area (PLoS One. 2013;8[4]:e60093). Neurons in the secondary auditory area that responded to visual stimuli did not respond to auditory stimuli, demonstrating that visual input occupied some of the auditory resources normally used by hearing.

The results of Dr. Kral’s studies (along with the research of others) suggest that when the brain does not have access to intelligible speech during the early years of life, meaningful auditory input does not coordinate activity between the primary and secondary auditory cortices. Instead, the secondary auditory cortex assists with other sensory functions such as visual processing. Additionally, auditory stimulation beyond the critical period of language development finds disordered functional connections or interactions between the primary and secondary auditory cortices, further limiting auditory learning.

4. The break-up! Starring the primary and secondary auditory cortices

At this point, a natural question to ask is, “Where does the disconnect occur when the auditory areas of the brain do not receive early access to intelligible speech?” To answer that question, we turn to Dr. Kral’s research exploring the functions within the multiple layers of the primary auditory cortex. The auditory cortex is composed of six layers of neurons (2-4 mm thick; Fig. 7). Afferent inputs from the thalamus arrive at the cortex at layer IV, and much of the processing within the cortex takes place at layers I-III (i.e., the supragranular layers). Layers V-VI (i.e., the infragranular layers) modulate activity in the supragranular layers, serve as the output layers of the cortex into the subcortical auditory structures, receive top-down projections from higher-order areas, and integrate higher-order information with the bottom-up stream of auditory input.

Figure 7. A visual representation of the six layers that make up the cortex (Cereb Cortex. 2000;10[7]:714). Figure labels: supragranular layers (1-3); layer 4, “Thalamic input arrives here”; infragranular layers (5-6), “The infragranular layers of the cortex are the output circuits of the cortex.”

Dr. Kral measured neural responses to auditory stimulation at the different layers of the auditory cortex using microelectrodes inserted to varying depths (Cereb Cortex. 2000;10[7]:714). Because such testing is too invasive to conduct in young children, Dr. Kral completed his studies with deaf white cats. He discovered activity in layers I-IV but reduced activity in layers V-VI (Fig. 8). Among other deficits, reduced infragranular layer activity interferes with the integration of bottom-up and top-down information streams. As a result, Dr. Kral and his coauthors concluded that a functional decoupling between the primary and secondary auditory cortices had occurred, particularly from the top-down information stream.

Figure 8. Single-unit neural responses to auditory stimulation recorded with a microelectrode inserted at different layers within the cortex of cats. Figure 8a provides an example of responses from normal hearing cats. Of note, robust neural responses were recorded at all six cortical layers. Figure 8b provides an example of responses obtained from a deaf white cat that received a CI after the critical period of auditory brain development. Note that robust responses are only observed in layers I through IV, a finding associated with decoupling of primary and secondary auditory cortex (Cereb Cortex. 2000;10[7]:714). Panels: a) normal hearing cat, b) naive (congenitally deaf) cat; each shows cortical field potentials and current source densities by cortical layer (II, III, IV, V/VI) against time (ms) following cochlear implant stimulation.

This “break-up” between the primary and secondary cortices has significant functional implications for auditory and spoken language. When auditory signals are not efficiently and effectively transmitted from the primary to secondary auditory cortex, the secondary cortex cannot share spoken language and other meaningful sounds with the rest of the brain. This lack of distribution of auditory stimulation to the secondary auditory cortex and then to the rest of the brain explains why a teenager who was born deaf and never had access to auditory stimulation can detect sound at whisper-soft levels with a CI but cannot understand conversational speech or even distinguish between relatively disparate words. The bacon sizzling in the pan is audible, but the vivid experience evoked in an auditory system that has been exposed to rich and robust auditory stimulation from birth is diminished or altogether absent in the brain of a child who is deprived of auditory stimulation during the first few years of life. Auditory cortex must distribute auditory stimulation to the rest of the brain for sounds to be endowed with higher-order meaning. Such a connectome model of deafness has recently been used to explain inter-individual variations in CI outcomes (Lancet Neurol. 2016;15[6]:610).

Likewise, visual stimulation during the first few years of life is not sufficient to develop, support, sustain, or lay a foundation for listening and spoken language development. Again, the underlying explanation resides in the fact that the functional connections between the primary and secondary auditory cortices are not developed and are pruned away in the absence of exposure to meaningful auditory stimulation. Indeed, visual stimulation elicits responses in the secondary cortex, but it does not promote the development of functional synaptic connections between the primary and secondary auditory cortices, which are required for auditory information to be disseminated in a neural network across the brain.

3. Kids and kittens

In deaf white kittens, Dr. Kral showed that the loss of infragranular activity developmentally occurred between four and five months of age. This period is consistent with the critical period for adaptation to chronic CI stimulation (Cereb Cortex. 2002;12[8]:797; Brain. 2013;136[Pt 1]:180-93). In other words, the critical period of auditory brain development spanned the first few months of a cat’s life, when cortical synapses appeared and were pruned. If auditory stimulation was not provided during these first few months, during the time when synaptic development happens, development of the auditory function was severely compromised. However, if these deaf kittens were provided with a CI within this time period (Fig. 8), the microelectrode recordings made by Dr. Kral and his colleagues suggest the kittens’ auditory areas of the brain developed rather typically.

How does Dr. Kral’s research with kittens translate to kids? For that answer, let’s turn to the work of Dr. Anu Sharma, who measured the latency of the P1 component of the cortical auditory evoked potential (P1-CAEP) in children with normal hearing and participants who were born deaf and received a CI at ages ranging from about 1 year old to early adulthood (Ear Hear. 2002;23[6]:532). Children who received their CIs during the first three years of life had P1 latencies that were similar to those of children with normal auditory function (Fig. 9). In contrast, children who received their CIs at 7 years of age or older had P1 latencies that were almost invariably later than those of their age-matched peers with normal hearing. Most (but not all) of the children who received their CIs between 4 and 7 years old also had delayed P1 latencies. Dr. Sharma concluded that the latency of the P1 component was a biomarker of auditory brain development, with later latencies representing a decoupling of the primary and secondary auditory cortices. In short, Dr. Sharma’s P1 latencies provided an electrophysiologic indication of the critical period of language development, which has long been considered to span the first two to three years of life.

Figure 9. Cortical auditory evoked potentials (CAEP) obtained from congenitally deafened children who received a CI prior to 3.5 years of age (red circles), at 3.6 to 6.5 years of age (blue x’s), and after 7 years of age (black triangles). The data points are plotted relative to the 95% confidence interval lines representing typical P1 CAEP latencies of children with normal hearing (Ear Hear. 2002;23[6]:532). Axes: P1 latency (ms) against age (years); legend: age of implantation (< 3.5 years, 3.6-6.5 years, > 7 years).

The functional implication of Dr. Sharma’s work is obvious. Children with hearing loss must be appropriately fitted with hearing technology (e.g., hearing aids or a CI) as early as possible to avoid auditory deprivation and provide access to a rich and robust model of intelligible speech. Early fitting of technology is necessary to feed the auditory cortex with adequate stimulation to promote synaptogenesis between the primary and secondary cortices and establish the functional neural networks between the secondary auditory cortex and the rest of the brain to make incoming sound come to life and possess higher-order meaning. To optimize listening and spoken language, the brain must be fed with a hearty diet of intelligible speech. Visual stimulation does not promote the requisite connection between the primary and secondary cortices necessary to develop spoken language skills; decades of clinical and anecdotal observations indicate poor auditory and spoken language outcomes in children who are deprived of sound during the critical period. Also, a large number of studies show better listening, spoken language, and literacy skills in children who communicate using listening and spoken language relative to their peers who use sign language or Total Communication (e.g., a combination of aural/oral and sign language) (Int J Audiol. 2013;52 Suppl 2:S65; Otol Neurotol. 2016;37[2]:e82; Ear Hear. 2011;32[1 Suppl]:84S; Ear Hear. 2003;24[1 Suppl]:121S).

We must remember that every day within the critical period is actually critical. In other words, delays (and most probably, lifetime deficits) in listening and spoken language abilities will occur if a child is deprived of sound throughout the first 30 months of life, even if cochlear implantation is provided weeks or months before the third birthday. The deprivation that occurred during the first two and a half years of the child’s life will almost certainly result in a weakening of the functional synaptic connections between the primary and secondary auditory cortices and a subsequent decline in the functional neural networks between the secondary auditory cortex and the rest of the brain.

We know that the typical child from an affluent home hears 46 million intelligible words by his or her fourth birthday (American Educator, 2003). These 46 million words serve as the bricks and mortar that lay the functional pathways between the primary and secondary auditory cortices and establish the neural networks necessary for sound to come to life and possess higher-order meaning. Admittedly, it is a daunting goal to provide access to these 46 million words by the fourth birthday. To do so, we must remind ourselves that every day within the critical period is an important opportunity to nourish the developing auditory brain with intelligible speech.

2. Upping the ante

Important work by Mortensen and colleagues has shown that the complex neural networks that arise when the secondary auditory cortex shares an auditory signal with other areas of the brain extend beyond the perspective of auditory skill development (Neuroimage. 2006;31[2]:842). Mortensen used PET scans to image the brain while high-performing and poor-performing CI users listened to running speech. The high performers showed activity in the left inferior prefrontal cortex while the poor performers did not (Fig. 10). The left inferior prefrontal cortex, a region often referred to as Broca’s area, is involved with phonological processing, phonemic awareness, speech production, and literacy aptitude. As a result, robust connections must be developed between the primary and secondary auditory cortices so that the latter may facilitate responses in the left inferior prefrontal cortex, which is imperative for several reasons. Engaging the left inferior prefrontal cortex in response to meaningful sounds is necessary so that the child may learn to produce intelligible speech. As we’ve known for quite some time, children speak as they hear, and access to intelligible words is necessary to develop intelligible speech. Furthermore, access to intelligible speech is necessary to develop phonemic awareness (e.g., The “A” says “ah.”), which serves as the foundation for reading development. To summarize, children with hearing loss need brain access to intelligible speech as early and as much as possible to develop their auditory skills as well as their speech production and literacy abilities.

Figure 10. PET scan imaging showing typical response of (a) high-performing CI users with broad activity present in primary and secondary auditory cortex and also in left inferior prefrontal cortex in response to auditory stimulation from a CI, and (b) poor-performing CI users with limited activity present in primary and secondary auditory cortex and no activity in left inferior prefrontal cortex in response to auditory stimulation from a CI (Neuroimage. 2006;31[2]:842). Figure annotations: “Activation of the LIPC requires exposure to intelligible speech.” “High performers show activity in LIPC, while poor performers do not.”

1. The auditory brain is hungry! Feed it clearly and frequently.

So what do we do to promote auditory brain development in children with hearing loss in order to promote optimization of listening, spoken language, and literacy abilities? We stick with the tried and true fundamentals of modern, evidence-based pediatric hearing health care. We seek to accurately diagnose children with hearing loss by 1 month of age so that hearing aids may be fitted using probe microphone measures and evidence-based targets (e.g., Desired Sensation Level 5.0/NAL-NL2) as soon as possible. For children with severe to profound hearing loss, we move forward with cochlear implantation between 6 and 9 months of age. For all children using hearing technology, we also ensure they are fitted with digital adaptive remote microphone (RM) systems so they have access to intelligible speech in our noisy world. We are convinced that the road to 46 million words is much more manageable to navigate with the use of a digital adaptive RM system. Research has clearly shown that RM technology is the most effective means to improve communication in noise (J Am Acad Audiol. 2013;24[8]:714; Am J Audiol. 2015;24[3]:440). Additionally, we must routinely administer audiological evaluations to ensure children are hearing well with their hearing technology.

We also must make certain that each of the child’s caregivers realizes the importance of using a hearing aid, CI, and RM technology during all waking hours from the first day when these technologies are fitted. Finally, we must assist the family with creating a robust daily conversational/language model rich in intelligible speech. Families must also be aware that their child needs to hear 46 million words by the time he/she is 4 years old and that their auditory brain development depends upon it. Families must understand that a child’s long-term listening, spoken language, literacy, academic, and social development is influenced by early brain access to intelligible speech, and they must be equipped with skills to optimize the child’s exposure to intelligible speech. Audiologists and speech-language pathologists must work hand-in-hand with families to achieve these goals.

Neuroscientists from around the world have enlightened our profession on the neurophysiologic underpinnings of listening and spoken language development. Of particular note, children must have access to intelligible speech and meaningful acoustic input to fully develop the auditory areas of the brain and optimize spoken language and literacy aptitude. Visual stimulation in the form of sign language does not promote development of the functional synaptic connections between the primary and secondary auditory cortices. These connections serve as the springboard for a neural network/connectome that fully engages the brain and is necessary for the development of typical listening and spoken language abilities. Modern hearing technology (e.g., hearing aids, CIs, digital adaptive remote microphone systems) allows almost every child with hearing loss the access needed to fully develop the auditory areas of the brain. It is our job as pediatric hearing health care professionals to provide the children we serve with the brain growth they deserve.