Download PDF - Bentham Open

Neural coding

Development of the nervous system

Donald O. Hebb

Response priming

Executive functions

Emotion and memory

Perceptual learning

Neural engineering

Psychoneuroimmunology

Artificial neural network

Nervous system network models

Biological neuron model

Machine learning

Embodied cognitive science

Feature detection (nervous system)

Neuroethology

Emotion perception

Emotional lateralization

Central pattern generator

Pattern recognition

Neural modeling fields

Metastability in the brain

Eyeblink conditioning

Convolutional neural network

Psychological behaviorism

Psychophysics

Catastrophic interference

Reinforcement

Stimulus (physiology)

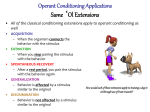

Operant conditioning