
A self-organizing model of disparity maps in the primary visual cortex

TIKESH RAMTOHUL

(S0566072)

MASTER OF SCIENCE

SCHOOL OF INFORMATICS

UNIVERSITY OF EDINBURGH

2006

ABSTRACT

Current models of primary visual cortex (V1) development show how visual features such as orientation and eye preference can emerge from spontaneous and visually evoked neural activity, but it is not yet known whether spatially organized maps for low-level visual pattern disparity are present in V1, and if so, how they develop. This report documents a computational approach, based on the LISSOM model, that was adopted to study the potential self-organization of binocular disparity selectivity. It is among the first studies to use computational modelling to investigate the topographic organization of cortical neurons based on their disparity preferences.

The simulation results show that neurons develop phase disparities as a result of the self-organizing process, but that there is no apparent orderly grouping based on disparity preferences. However, there appears to be a strong correlation between disparity selectivity and orientation preference: neurons exhibiting relatively large phase disparities tend to prefer vertical orientations. This suggests that cortical regions grouped by orientation preference might be subdivided into compartments that are in turn organised by disparity selectivity.


ACKNOWLEDGEMENTS

I would like to thank Jim Bednar for his help and support throughout this endeavour. Thank you for your insightful comments and your patience. My sincere thanks also go to Chris Ball, who contributed a great deal to my understanding of the Python language and the Topographica simulator. A big thank you also to all the friends I’ve made during my stay in Edinburgh. The camaraderie has been most soothing, especially during stressful situations. And finally, thank you Mom and Dad for always being there for your son.


DECLARATION

I declare that this thesis was composed by myself, that the work contained herein is my own except where explicitly stated otherwise in the text, and that this work has not been submitted for any other degree or professional qualification except as specified.

(Tikesh RAMTOHUL)


TABLE OF CONTENTS

CHAPTER 1 INTRODUCTION

1.1 MOTIVATION

1.1 TASK DECOMPOSITION

1.2 THESIS OUTLINE

CHAPTER 2 BACKGROUND

2.1 BASICS OF VISUAL SYSTEM

2.1.1 EYE

2.1.2 VISUAL PATHWAY

2.1.3 RETINA

2.1.4 LGN

2.1.5 PRIMARY VISUAL CORTEX

2.2 DISPARITY

2.2.1 GEOMETRY OF BINOCULAR VIEWING

2.2.2 ENCODING OF BINOCULAR DISPARITY

2.2.3 DISPARITY SENSITIVITY OF CORTICAL CELLS

2.2.4 CHRONOLOGICAL REVIEW

2.2.5 Ohzawa-DeAngelis-Freeman (ODF) ENERGY MODEL (1990)

2.2.6 ENERGY MODEL: READ et al (2002)

2.3 TOPOGRAPHY

2.3.1 TOPOGRAPHY AND DISPARITY

2.3.2 TS’O, WANG ROE and GILBERT (2001)

2.4 SELF-ORGANIZATION

2.5 COMPUTATIONAL MODELS

2.5.1 KOHONEN SOM

2.6 LISSOM

2.6.1 LISSOM ARCHITECTURE

2.6.2 SELF-ORGANIZATION IN LISSOM

2.7 TOPOGRAPHICA

2.7.1 MAP MEASUREMENT IN TOPOGRAPHICA

2.8 MODEL OF DISPARITY SELF-ORGANIZATION

2.8.1 WIEMER ET AL (2000)

CHAPTER 3 METHODOLOGY

3.1 SELF-ORGANIZATION OF DISPARITY SELECTIVITY

3.1.2 TWO-EYE MODEL FOR DISPARITY SELECTIVITY

3.1.3 DISPARITY MAP MEASUREMENT

3.1.4 TYPE OF INPUT

3.2 PHASE INVARIANCE

3.2.1 TEST CASES FOR ODF MODEL

CHAPTER 4 RESULTS

4.1 GAUSSIAN

4.1.1 DETERMINATION OF DISPARITY THRESHOLD

4.1.2 RECEPTIVE FIELD PROPERTIES AND DISPARITY MAPS

4.2 PLUS/MINUS

4.2.1 DETERMINATION OF DISPARITY THRESHOLD

4.2.2 RECEPTIVE FIELD PROPERTIES AND DISPARITY MAPS

4.3 NATURAL

4.4 PHASE INVARIANCE

CHAPTER 5 DISCUSSION

5.1 RECEPTIVE FIELD STRUCTURE

5.2 DISPARITY AND ORIENTATION PREFERENCE

5.3 ORIENTATION PREFERENCE AND PHASE DISPARITY

5.4 VALIDATION AGAINST BIOLOGICAL DATA

5.4.1 DISTRIBUTION OF PHASE DISPARITIES

5.4.2 DISPARITY AND ORIENTATION PREFERENCE

5.5 SUMMARY

CHAPTER 6 CONCLUSION AND FUTURE WORK

6.1 CONCLUSION

6.2 FUTURE WORK

6.2.1 PHASE INVARIANCE

6.2.2 DISPARITY SELECTIVITY AND OCULAR DOMINANCE

6.2.3 VERTICAL DISPARITY

6.2.4 PRENATAL AND POSTNATAL SELF-ORGANIZATION

BIBLIOGRAPHY

CHAPTER 1

INTRODUCTION

1.1 MOTIVATION

A remarkable function of the visual system is the perception of the world in three dimensions, although the images cast on the retinas are only two-dimensional. It is believed that the third dimension, depth, is inferred from visual cues in the retinal images, the most prominent one being binocular disparity (Qian, 1997). Humans and many other animals possess two eyes whose visual fields overlap. Objects within the overlapping region project slightly different images onto the two retinas. This difference is referred to as disparity, and it is believed to be one of the cues that the brain uses to assess depth.

Although recent physiological studies of binocular disparity have brought much insight into the role of stereopsis in depth perception, much experimental work remains to be done before a unified account of the underlying mechanism can emerge. Trying to unlock the mysteries of the brain by relying solely on biological data is practically impossible. This is where computational models of the brain come into the picture. They can provide concrete, testable explanations for mechanisms of long-term development that are difficult to observe directly, making it possible to link a large number of observations into a coherent theory. To help us understand brain function, such models must be grounded in real physiological data (Qian, 1997).

One such computational model is LISSOM (Laterally Interconnected Synergetically Self-Organizing Map), a self-organizing map model of the visual cortex. It models the structure, development, and function of the visual cortex at the level of maps and their connections. Current models of primary visual cortex (V1) development show how visual features such as orientation and eye preference can emerge from spontaneous and visually evoked activity; that is, across the surface of the cortex there are groups of neurons that respond selectively to particular orientations, or predominantly to input from one eye (ocular dominance). However, it is not known whether spatially organized maps for disparity are present in V1 and, if so, how they develop. The main aim of the project was to investigate whether disparity selectivity can self-organize in this way.


1.1 TASK DECOMPOSITION

The project was broken down into two main stages. The first dealt with the input-driven self-organization process when disparate retinal images are used as input. This consisted of developing an appropriate LISSOM model for disparity and implementing suitable map measurement techniques to illustrate differences in disparity selectivity. The second stage was concerned with investigating possible ways to integrate or simulate complex cells in LISSOM, so that the self-organizing process becomes phase-independent and hence better suited to disparity selectivity. Current versions of LISSOM develop phase maps when anticorrelated inputs are used, e.g. when positive and negative Gaussian inputs are fed onto the retina. This is quite a predictable outcome, since LISSOM develops behaviour reminiscent of simple cells, and these cells are sensitive to the phase of the input stimulus. But real animals do not have such maps in V1. One reason might be that most cells in real animals are complex, and hence insensitive to phase. Thus, integrating complex cells into the LISSOM architecture could lead to the formation of self-organized maps that are independent of phase.

1.2 THESIS OUTLINE

The thesis is divided into six chapters, starting with this introductory chapter. The remaining chapters are organised as follows:

• Chapter 2 gives an overview of the visual system, describes the key points of research on binocular disparity, discusses the self-organizing process and presents related work on computational modelling of disparity.

• Chapter 3 describes the methodology used for investigating the self-organizing process of disparity preferences in LISSOM.

• Chapter 4 presents the simulation results, giving a brief account of the direct observations.

• Chapter 5 provides a discussion based on the simulations, validating the computational results against biological findings where necessary.

• Chapter 6 highlights the major outcomes of the study on disparity and suggests possible extensions to this research.


CHAPTER 2

BACKGROUND

This chapter presents the background material required for a good understanding of the neural processes involved in disparity encoding. The self-organizing process is also discussed in detail, together with the importance of computational modelling in neuroscience. The main components of LISSOM are highlighted to give a clear picture of how topographic maps form in this model. The chapter also reviews related work on disparity models.

2.1 BASICS OF VISUAL SYSTEM

The human visual system is a biological masterpiece that has been shaped and refined by millions of years of evolution. Its efficiency is unmatched by any piece of apparatus ever invented. It interprets the information carried by visible light to build a three-dimensional representation of the world. This section briefly describes the main constituents of the visual system and outlines the neural mechanisms involved in visual processing.

2.1.1 EYE

Light entering the eye is refracted as it passes through the cornea. It then passes through the pupil, whose size is regulated by the dilation and constriction of the iris muscles in order to control the amount of light entering the eye. The lens focuses light onto the retina by adjusting its shape.

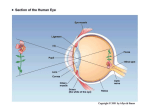

Figure 2.1: Anatomy of the human eye (reprinted from [26])


2.1.2 VISUAL PATHWAY

The main structures involved in early visual processing are the retina, the lateral geniculate nucleus of the thalamus (LGN), and the primary visual cortex (V1). The initial processing is carried out in the retina. Output from the retina of each eye is fed to the LGN, which lies at the base of each side of the brain. The processed signals from the LGN are then sent to V1, whose outputs are in turn fed to higher cortical areas where further processing takes place. Figure 2.2 gives a schematic overview of the visual pathway.

Figure 2.2: Visual Pathway (reprinted from [40])

2.1.3 RETINA

The retina is part of the central nervous system. It lines the inside of the rear surface of the eye and consists of an array of photoreceptors and other related cells that convert the incident light into neural signals. The retinal output takes the form of action potentials in retinal ganglion cells, whose axons collect in a bundle to form the optic nerve.

There are two kinds of photoreceptors, rods and cones, which are responsible for vision in dim light and bright light respectively. Rods are more numerous than cones but are conspicuously absent from the centre of the retina. This region, known as the fovea, represents the centre of fixation. It contains a high concentration of cones, making it well suited for fine-detailed vision.

As mentioned earlier, the output of the retina is represented by the activity of the retinal ganglion cells. An interesting feature of these cells, shared by other neurons higher up in the visual pathway, is that they respond selectively to stimuli at specific spots on the retina. The term ‘receptive field’ is used to describe this property.


Stephen Kuffler was the first to record the responses of retinal ganglion cells to spots of light, in the cat, in 1953 (Hubel, 1995). He observed that he could influence the firing rate of a retinal ganglion cell by focusing a spot of light on a specific region of the retina. This region was the receptive field (RF) of the cell. Levine and Shefner (1991) define a receptive field as an “area in which stimulation leads to a response of a particular sensory neuron”. For a retinal ganglion cell, or any other neuron concerned with vision, the receptive field is the part of the visual world that influences the firing of that particular cell; in other words, it is the region of the retina which, when stimulated, alters the firing rate of the cell being studied.

Most retinal ganglion cells have concentric (or centre-surround) RFs, of which there are two types: ON-centre and OFF-centre. These RFs are divided into two parts (centre and surround), one of which is excitatory ("ON") and the other inhibitory ("OFF"). For an ON-centre cell, a spot of light falling on the centre of the receptive field increases the discharge rate, while light falling on the surrounding ring suppresses firing. The opposite effect is observed for OFF-centre cells. Other cells may have receptive fields of different shapes. For example, simple cells in V1 can have a two-lobe RF, such as one favouring a 45-degree edge with dark in the upper left and light in the lower right, or a three-lobe RF, such as one favouring a 135-degree white line against a dark background (Miikkulainen et al, 2005).

Figure 2.3: Receptive Fields (reprinted from [40])
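The centre-surround organisation described above is often idealised as a difference of Gaussians (DoG): a narrow excitatory Gaussian minus a broader inhibitory one. The sketch below is a minimal illustration under that assumption; the patch size and the widths `sigma_c` and `sigma_s` are hypothetical values chosen for illustration, not parameters from this project. It shows that an ON-centre unit is excited by a central spot, suppressed by an annulus on the surround and almost unaffected by uniform light, while an OFF-centre unit is simply the sign-reversed kernel.

```python
import numpy as np

def grid(size):
    """Integer pixel coordinates centred on the middle of a size x size patch."""
    r = np.arange(size) - size // 2
    return np.meshgrid(r, r)

def dog_kernel(size=21, sigma_c=1.5, sigma_s=4.0, on_centre=True):
    """Difference-of-Gaussians RF: narrow excitatory centre minus broad inhibitory surround."""
    xx, yy = grid(size)
    d2 = xx**2 + yy**2
    centre = np.exp(-d2 / (2 * sigma_c**2))
    surround = np.exp(-d2 / (2 * sigma_s**2))
    # Normalising each Gaussian to unit sum makes the kernel sum to zero,
    # so uniform illumination produces (almost) no response.
    k = centre / centre.sum() - surround / surround.sum()
    return k if on_centre else -k

def response(kernel, image):
    """Linear response: light at each RF location weighted by the kernel and summed."""
    return float(np.sum(kernel * image))

size = 21
xx, yy = grid(size)
dist = np.hypot(xx, yy)
spot = (dist <= 2).astype(float)                    # small spot on the RF centre
annulus = ((dist > 4) & (dist <= 8)).astype(float)  # ring of light on the surround
uniform = np.ones((size, size))                     # diffuse light over the whole patch

on = dog_kernel(on_centre=True)
off = dog_kernel(on_centre=False)
for name, image in (("spot", spot), ("annulus", annulus), ("uniform", uniform)):
    print(f"{name:8s} ON-centre {response(on, image):+.3f}   OFF-centre {response(off, image):+.3f}")
```

Normalising the centre and surround separately is a design choice that makes the unit respond to local contrast rather than to overall light level.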

2.1.4 LGN

The LGN receives neural signals from the retina and sends projections directly to the primary visual cortex, thereby acting as a relay. Its role in the central nervous system is not fully understood, but it consists of neurons that are very similar to retinal ganglion cells. These neurons are arranged retinotopically. Retinotopy, or topographic representation, means that as we move along the retina from one point to another, the corresponding points in the LGN trace a continuous path (Hubel, 1995). ON-centre cells in the retina connect to ON cells in the LGN, and OFF-centre cells in the retina connect to OFF cells in the LGN. Both groups of cells share a common function, namely performing a form of edge detection (contrast enhancement) on the input signals.

2.1.5 PRIMARY VISUAL CORTEX

ARCHITECTURE

The primary visual cortex, situated at the rear of the brain, is the first cortical site of visual processing. Like the LGN, V1 exhibits retinotopy, but its neurons have altogether different characteristics and functions compared to their geniculate counterparts. To begin with, most V1 neurons are binocular, responding strongly to stimuli presented to either eye. They also respond selectively to features such as the spatial frequency, orientation and direction of movement of the stimulus. Interestingly, disparity has also been identified as one of the visual cues that drive selective discharge in V1 cells. V1 is organised such that, at a given location, a vertical section through the cortical sheet consists of cells with broadly similar feature preferences. In this columnar model, nearby columns tend to have similar preferences, while more distant columns are increasingly dissimilar. Moreover, preferences repeat at regular intervals in every direction, giving rise to a smoothly varying map for each feature (Miikkulainen et al, 2005). For example, as we move parallel to the surface of V1, we encounter alternating columns of cells, known as ocular dominance columns, which are driven predominantly by input from a single eye. Another type of feature map is the orientation map, in which the orientation preference of cells changes gradually from horizontal to vertical and back again as we move across (parallel to) the cortical surface.

TYPE OF CELLS

Another important point about V1 that is relevant to this project concerns the types of cells found there. Hubel and Wiesel (1962) subdivided cortical cells into two main groups, simple and complex, based on their RFs. Simple cells often have a two-lobe or three-lobe RF (shown in Figure 2.3). Consider a simple cell with a three-lobe RF, with an ON region flanked by OFF regions. If a correctly oriented bar of light falls on the middle region, the firing rate of the cell increases, but if it falls on the OFF regions, firing is suppressed. Conversely, if a dark bar falls on the ON region, firing is suppressed, whereas excitation occurs if it falls on the OFF regions of the RF. Thus, the response of simple cells depends on the phase of the stimulus. In contrast, the response of complex cells does not depend on the phase of the stimulus: spikes are elicited if a properly oriented bar, whether dark or bright, falls anywhere within the receptive field.
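The contrast between the two cell types can be made concrete with a one-dimensional sketch. Here a simple cell is modelled as a linear filter with a three-lobe (even-symmetric Gabor) RF followed by half-wave rectification, and a complex cell is modelled with the energy model, i.e. the sum of the squared outputs of two simple-cell RFs 90° apart in phase (the binocular form of this idea is the ODF energy model described in Section 2.2.5). This is one common idealisation, not necessarily the mechanism used later in this project, and the RF width `sigma`, frequency `freq` and bar width are hypothetical values.

```python
import numpy as np

x = np.linspace(-3, 3, 601)   # position across the RF (arbitrary units)
sigma, freq = 1.0, 0.5        # hypothetical envelope width and spatial frequency
envelope = np.exp(-x**2 / (2 * sigma**2))
even = envelope * np.cos(2 * np.pi * freq * x)  # three-lobe RF: ON centre, OFF flanks
odd = envelope * np.sin(2 * np.pi * freq * x)   # two-lobe RF, 90 degrees out of phase

def bar(centre, polarity, width=0.4):
    """1-D bar stimulus: polarity +1 for a bright bar, -1 for a dark bar."""
    return polarity * (np.abs(x - centre) <= width / 2).astype(float)

def simple_cell(stim):
    # Linear filtering followed by half-wave rectification (firing rates cannot be negative).
    return max(0.0, float(even @ stim))

def complex_cell(stim):
    # Energy model: squared outputs of a quadrature pair of linear filters, summed.
    return float((even @ stim) ** 2 + (odd @ stim) ** 2)

for centre in (0.0, 1.0):  # bar on the ON centre, then on an OFF flank
    for polarity, label in ((+1, "bright"), (-1, "dark")):
        s = bar(centre, polarity)
        print(f"{label:6s} bar at {centre}: simple={simple_cell(s):6.2f}  complex={complex_cell(s):8.2f}")
```

The simple cell responds only to a bright bar on its ON region or a dark bar on an OFF flank, whereas the complex cell gives identical responses to bright and dark bars at the same position, because squaring removes the sign of each filter output.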


2.2 DISPARITY

We are capable of three-dimensional vision despite having only a two-dimensional projection of the world on each retina. This ability might seem a mundane task for the visual system, but it has baffled researchers for decades. It is no wonder, then, that the scientific community has put much effort into understanding the processes taking place in the brain during depth perception. It is now known that the sensation of depth is based upon many visual cues (Qian, 1997), for example occlusion, relative size, perspective, motion parallax, shading, blur, and relative motion (DeAngelis, 2000; Gonzales and Perez, 1998). Such cues are monocular, but species with frontally located eyes also have access to binocular cues, an example of which is binocular disparity. Binocular disparity refers to the differences between the retinal images of a given object in space, and arises because the two eyes are laterally separated. Three-dimensional vision based on binocular disparity is commonly referred to as stereoscopic vision, or stereopsis. Although monocular cues are sufficient to provide a sensation of depth, it is the contribution of stereopsis that makes this process so effective in humans (Gonzales and Perez, 1998).

2.2.1 GEOMETRY OF BINOCULAR VIEWING

Suppose that an observer fixes his gaze on the white sphere Q (refer to Figure 2.4); by definition, fixation causes the images of the object to fall on the foveas. We say that $Q_R$ and $Q_L$ are corresponding points on the retinas. The black sphere S is closer to the observer and, as can easily be deduced from the geometry, its images fall on non-corresponding points of the retinas. Similarly, the images of a point further away than the fixation point also fall on non-corresponding points, lying closer to each other than corresponding points would. Any such lack of correspondence is known as disparity.

Figure 2.4: Geometry of stereopsis (adapted from [57])


The distance $z$ of an object from the fixation point, which represents the difference in depth, can be deduced from the retinal disparity $\delta = r - l$ (where $r$ and $l$ are the image positions on the right and left retinas) and the interocular distance $I$ (Read, 2005), provided the fixation distance is also known. Such a disparity, which is directly related to the location of an object in depth, is known as horizontal disparity.
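To make the relation between disparity and depth concrete, consider a target on the midline straight ahead. Its binocular parallax (the angle the two eyes subtend at the target) is $2\arctan(I/2d)$ at distance $d$, and its horizontal disparity can be taken as the parallax of the fixation point minus that of the target, so that points behind fixation have positive disparity, consistent with the convention used in this thesis. The sketch below assumes this midline geometry and a known fixation distance `D`; the function names and numerical values (interocular distance `iod` of 6.5 cm, fixation at 50 cm) are illustrative assumptions. For $|z| \ll D$ the exact relation reduces to the familiar approximation $\delta \approx I z / D^2$.

```python
import math

def parallax(distance, iod):
    """Angle (radians) subtended at a midline point by two eyes iod metres apart (I in the text)."""
    return 2.0 * math.atan(iod / (2.0 * distance))

def disparity_from_depth(z, iod, D):
    """Horizontal disparity of a midline point z metres behind (z > 0) or in front of (z < 0)
    a fixation point at distance D. Assumed sign convention: far = positive."""
    return parallax(D, iod) - parallax(D + z, iod)

def depth_from_disparity(delta, iod, D):
    """Exact inverse of disparity_from_depth for midline points."""
    target_parallax = parallax(D, iod) - delta
    return iod / (2.0 * math.tan(target_parallax / 2.0)) - D

def depth_small_angle(delta, iod, D):
    """Small-angle approximation z ~ delta * D**2 / iod, valid when |z| << D."""
    return delta * D**2 / iod

iod, D = 0.065, 0.5                         # hypothetical: 6.5 cm eye separation, fixation at 50 cm
delta = disparity_from_depth(0.01, iod, D)  # target 1 cm behind fixation
print(f"disparity    = {math.degrees(delta) * 60:.2f} arcmin")
print(f"exact depth  = {depth_from_disparity(delta, iod, D):.4f} m")
print(f"approx depth = {depth_small_angle(delta, iod, D):.4f} m")
```

With these values the target produces a disparity of under 10 arcmin, and the small-angle estimate recovers its depth to within a few per cent. The same disparity would correspond to a much larger depth at a longer fixation distance, which is why $I$ and $\delta$ alone do not fix $z$.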

All points that are perceived to be at the same distance as the fixation point (Q in this case) are said to lie on the horopter, a surface whose exact shape depends on our estimates of distance, and hence on our brains (Hubel, 1995). Points in front of and behind the horopter produce negative and positive disparities respectively. The projection of the horopter onto the horizontal plane through the foveas is the Vieth-Müller circle, which represents the locus of all points with zero disparity (Gonzales and Perez, 1998).

Figure 2.5: Horizontal disparity (reprinted from [14])
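The zero-disparity property of the Vieth-Müller circle follows from the inscribed angle theorem: the circle passes through the fixation point and the nodal points of both eyes, so every point on the arc in front of the observer subtends the same angle at the two eyes (the same binocular parallax) as the fixation point, and therefore has zero horizontal disparity. The sketch below checks this numerically, treating the eyes as points at $(\pm I/2, 0)$ in the horizontal plane and taking disparity as the difference in parallax from the fixation point; the coordinates, function names and numerical values are illustrative assumptions.

```python
import math

def parallax(P, iod):
    """Angle subtended at point P = (x, y) by eyes at (-iod/2, 0) and (+iod/2, 0)."""
    ax, ay = -iod / 2 - P[0], -P[1]  # vector from P to the left eye
    bx, by = iod / 2 - P[0], -P[1]   # vector from P to the right eye
    return math.atan2(abs(ax * by - ay * bx), ax * bx + ay * by)

def vieth_muller_point(t, iod, D):
    """Point on the circle through both eyes and the fixation point (0, D); t = 0 gives fixation."""
    c = (D**2 - iod**2 / 4) / (2 * D)  # y-coordinate of the circle's centre
    R = D - c                          # radius
    return (R * math.sin(t), c + R * math.cos(t))

iod, D = 0.065, 0.5  # hypothetical interocular and fixation distances (m)
fixation = (0.0, D)
for t in (0.0, 0.5, 1.0, -1.2, 2.0):
    P = vieth_muller_point(t, iod, D)
    disparity = parallax(fixation, iod) - parallax(P, iod)
    print(f"P = ({P[0]:+.3f}, {P[1]:.3f})  disparity = {disparity:+.2e} rad")

# A point straight ahead but nearer than the circle (45 cm) has negative disparity.
print(parallax(fixation, iod) - parallax((0.0, 0.45), iod))
```

Points on the circle give disparities at the level of floating-point rounding, while the nearer point gives a clearly negative value, matching the sign convention stated above.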

Another type of disparity, much less studied but generally accepted to play some role in depth perception, is vertical disparity. When an object is closer to one eye than to the other, its image is slightly larger on the retina of that eye, which gives rise to vertical disparities. Bishop (1989) points out that such disparities occur when objects are viewed at relatively near distances above or below the visual plane and do not lie on the median plane (a vertical plane through the midline of the body that divides it into right and left halves). This is best explained with an illustration, given in Figure 2.6. Suppose we have a point P which is above the visual plane and to the right of the median plane, so that it is nearer to the right eye. Simple geometrical intuition shows that the angles $\beta_1$ and $\beta_2$ subtended by P are different, with $\beta_2 > \beta_1$. The vertical disparity $v$ is given by the difference between the two vertical visual angles, $v = \beta_2 - \beta_1$ (Bishop, 1989).


Figure 2.6: Vertical disparity (reprinted from [6])
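The geometry of vertical disparity can also be checked numerically. The sketch below places the eyes at $(\pm I/2, 0, 0)$ with the horizontal visual plane at height zero, and measures each eye's vertical visual angle as the elevation of P above that plane, i.e. the arctangent of P's height over its horizontal distance from the eye. This is only one of several possible angular conventions (the size of a vertical disparity depends on the convention used), and taking $\beta_2$ as the angle at the nearer, right eye is an assumption consistent with $\beta_2 > \beta_1$ above; all names and values are illustrative.

```python
import math

def elevation(eye_x, P):
    """Vertical visual angle of P = (x, y, z) seen from an eye at (eye_x, 0, 0).

    x: rightward, y: height above the visual plane, z: straight ahead.
    """
    px, py, pz = P
    return math.atan2(py, math.hypot(px - eye_x, pz))

def vertical_disparity(P, iod=0.065):
    """v = beta_2 - beta_1, taking beta_2 at the right eye and beta_1 at the left (assumed)."""
    beta_1 = elevation(-iod / 2, P)  # left eye
    beta_2 = elevation(+iod / 2, P)  # right eye
    return beta_2 - beta_1

for P in [(0.2, 0.1, 0.3),   # near, above the visual plane, right of the median plane
          (0.2, 0.1, 3.0),   # same height and lateral offset, but much further away
          (0.0, 0.1, 0.3),   # on the median plane
          (0.2, 0.0, 0.3)]:  # in the visual plane
    print(P, f"v = {math.degrees(vertical_disparity(P)):+.3f} deg")
```

Only the first point, which is near, above the visual plane and off the median plane, gives a sizeable vertical disparity; moving it further away shrinks $v$ towards zero, and points on the median plane or in the visual plane give exactly zero, as Bishop's description implies.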

2.2.2 ENCODING OF BINOCULAR DISPARITY

We have seen that disparity is the difference between the two retinal projections of an object, but how do cortical cells encode this information? Two models have been put forward: the position difference model and the phase difference model. Let us assume a Gabor-shaped RF for a binocular simple cell in V1. In the position difference model, the cell has the same RF profile in each eye but with an overall position shift between the right and left RFs; i.e. the RF profiles have identical shapes in both eyes but are centred at non-corresponding points on the two retinas. In the phase difference model, the RFs are centred at corresponding retinal points but have different shapes, or phases. Figure 2.7 illustrates the differences between position and phase encoding.

(a) Position Difference Model

(b) Phase Difference Model

Figure 2.7: Disparity encoding (reprinted from [2])
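The two encoding schemes can be compared with one-dimensional Gabor RFs. In the sketch below, a position-difference cell has identical left and right profiles with the right one shifted by 0.5 (arbitrary units), while a phase-difference cell has both profiles centred at the same point but a phase offset of $\pi/2$ in the right eye. A cell's preferred disparity is estimated here as the shift of the left RF that best matches the right RF (the peak of their cross-correlation), an illustrative choice rather than a method from this thesis; `SIGMA`, `FREQ` and the offsets are hypothetical. For the phase model, the usual rule of thumb is that the preferred disparity is $\Delta\phi / (2\pi f)$, which is 0.5 here.

```python
import numpy as np

x = np.linspace(-4, 4, 801)       # retinal position (arbitrary units)
SIGMA, FREQ = 1.0, 0.5            # hypothetical envelope width and spatial frequency
shifts = np.linspace(-2, 2, 401)  # candidate disparities, step 0.01

def gabor(centre=0.0, phase=0.0):
    """1-D Gabor RF profile sampled on x."""
    u = x - centre
    return np.exp(-u**2 / (2 * SIGMA**2)) * np.cos(2 * np.pi * FREQ * u + phase)

def preferred_disparity(left_phase, right_rf):
    """Shift of the left RF (centred at 0) that best matches right_rf (cross-correlation peak)."""
    scores = [gabor(centre=s, phase=left_phase) @ right_rf for s in shifts]
    return float(shifts[int(np.argmax(scores))])

# Position-difference model: same profile in both eyes, right RF centred 0.5 away.
position_pref = preferred_disparity(0.0, gabor(centre=0.5, phase=0.0))

# Phase-difference model: same centre, right RF phase-shifted by -pi/2.
dphi = np.pi / 2
phase_pref = preferred_disparity(0.0, gabor(centre=0.0, phase=-dphi))

print(f"position model: {position_pref:.2f}")
print(f"phase model:    {phase_pref:.2f} (rule of thumb: {dphi / (2 * np.pi * FREQ):.2f})")
```

Both cells prefer a disparity of roughly 0.5, but by different routes: the position cell's preference is set exactly by the offset between RF centres, whereas the phase cell's estimate falls slightly below $\Delta\phi/(2\pi f)$ because its two RFs share a single envelope.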


There is evidence supporting each of these two encoding schemes. Position disparity was first demonstrated by Nikara et al (1968), and later by Joshua and Bishop (1970), von der Heydt et al (1978) and Maske et al (1984). Evidence for phase disparities has also been provided by various studies (DeAngelis et al., 1991, 1995; DeValois and DeValois, 1988; Fleet et al., 1996; Freeman and Ohzawa, 1990; Nomura et al., 1990; Ohzawa et al., 1996; Qian, 1994; Qian and Zhu, 1997; Zhu and Qian, 1996).

2.2.3 DISPARITY SENSITIVITY OF CORTICAL CELLS

The two main groups of cortical cells, simple and complex, differ in the complexity of their behaviour. Hubel and Wiesel (1962) proposed a hierarchical organisation in which complex cells receive input from simple cells, which in turn receive input from the LGN. In this model, the simple cells feeding a complex cell share the same orientation preference, and their RFs overlap so as to cover the entire RF of the complex cell (Hubel, 1995). They suggested that the complex cell would fire only if the connections from the simple cells are excitatory and the input stimuli fall on specific regions of the RF. This model is not universally accepted among neurophysiologists, since some studies have shown that some complex cells receive direct input from the LGN (Qian, 1997). Research in this area has been very active, and the properties of these cells, especially the role they might play in disparity encoding, are much better understood nowadays. It is generally accepted that most simple and complex cells are binocular, i.e. they have receptive fields in both retinas and respond strongly when either eye is stimulated. Furthermore, researchers agree that these cells are disparity selective: they respond differently to stimuli with different disparities. These two properties are essential for disparity computation.

It is therefore tempting to conclude that both types of cell are suitable disparity detectors, but this is not the case, since they have quite distinct characteristics. The receptive fields of simple cells consist of excitatory (ON) and inhibitory (OFF) subregions that respond to light and dark stimuli respectively. Complex cells, on the other hand, respond to both bright and dark bars anywhere within their RFs, because their receptive fields lack separate excitatory and inhibitory subregions (Skottun et al, 1991). Figure 2.8 illustrates typical RF types for complex and simple cells. Complex cells generally have larger RFs and respond to targets even if the contrast polarity is reversed (for example, if the black dots are replaced with white dots and vice versa). Simple cells have discrete RF subregions and respond only when the correct input configuration falls on their RFs.


Figure 2.8: 1-D RF for simple and complex cells (reprinted from [47])

Ohzawa and collaborators (1990) describe simple cells as “sensors for a multitude of

stimulus parameters” because besides responding to disparity, they also respond

selectively to stimulus position, contrast polarity, spatial frequency, and orientation. In contrast, disparity encoding in complex cells is independent of stimulus position and contrast polarity; changes in these irrelevant parameters therefore do not affect the disparity-encoding properties of such cells (Ohzawa et al, 1990). Figure 2.9 shows how the binocular RF of a simple cell changes with lateral displacement of the stimulus, whereas the complex cell has an elongated RF along the position axis, making

it a better disparity detector. Note that a binocular RF is generated by plotting the

response of the cell as a function of the position of the stimulus in each eye. The

stimulus used is typically a long bar at the preferred orientation of the cell.

Figure 2.9: Binocular RF for simple and complex cells (reprinted from [57])
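To make the notion of a binocular RF concrete, the sketch below computes a response map for every combination of left-eye and right-eye bar positions, assuming 1-D Gabor receptive fields. As an assumption, the simple cell is modelled as a half-wave rectified and squared sum of its left- and right-eye Gabor responses, and the complex cell as the sum over a quadrature pair of such binocular sums, squared. The Gabor parameters (`sigma`, `freq`) are arbitrary illustrative values, not taken from [57].

```python
import numpy as np

def gabor(x, sigma=2.0, freq=0.25, phase=0.0):
    """1-D Gabor receptive-field profile centred at 0 (illustrative parameters)."""
    return np.exp(-x ** 2 / (2 * sigma ** 2)) * np.cos(2 * np.pi * freq * x + phase)

positions = np.arange(-6, 7, dtype=float)
# rows: bar position in the left eye, columns: bar position in the right eye
XL, XR = np.meshgrid(positions, positions, indexing="ij")

# simple cell (assumed): half-wave rectified, squared binocular sum
simple_rf = np.maximum(gabor(XL) + gabor(XR), 0.0) ** 2

# complex cell (assumed): quadrature pair (phases 0 and pi/2); a push-pull pair of
# half-squared units adds up to the full square of the binocular sum
complex_rf = sum((gabor(XL, phase=p) + gabor(XR, phase=p)) ** 2
                 for p in (0.0, np.pi / 2))
```

Along the diagonal (bar at the same position in both eyes), the simple-cell map drops to zero wherever the bar falls on an OFF region, whereas the complex-cell map stays positive and varies only with the Gaussian envelope, giving the elongated profile along the position axis.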


2.2.4 CHRONOLOGICAL REVIEW

The first major contribution to the study of neural mechanisms in binocular vision came from Barlow et al in the 1960s. They found that neurons in the cat striate cortex fire selectively to objects placed at different stereoscopic depths (Barlow et al, 1967). A

few years later, Poggio and Fischer (1977) confirmed these findings when they

investigated awake behaving macaque monkeys. The visual system of these animals

resembles that of humans (Cowey and Ellis, 1967; Farrer and Graham, 1967; Devalois

and Jacobs, 1968; Harweth et al., 1995), and several studies have led to the conclusion

that they have characteristics of stereopsis similar to humans (Gonzales and Perez,

1998). Using solid bars as stimuli, Poggio and Fischer identified four basic types of neurons, namely tuned-excitatory, tuned-inhibitory, near and far. Tuned-excitatory neurons discharge best to objects at or very near the horopter, i.e. zero disparity; tuned-inhibitory cells respond at all disparities except those near zero; near cells are more responsive to objects that lie in front of the horopter, i.e. negative disparities; and finally far cells prefer objects that are behind the horopter, i.e. positive disparities (DeAngelis, 2000).

The invention of the random dot stereogram (RDS) by Julesz (1971) also contributed greatly to this field. A random dot stereogram is a pair of images of randomly distributed dots that are identical except that a portion of one image is displaced horizontally (figure 2.10).

Figure 2.10: RDS (reprinted from [57])
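The construction of an RDS described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: binary dots with 50% density, a square central region, and the strip uncovered by the shift filled with fresh random dots (a common way to build such stimuli). The function name and parameter values are hypothetical.

```python
import numpy as np

def random_dot_stereogram(size=64, square=24, shift=4, density=0.5, seed=0):
    """Return (left, right) binary images; the central square is shifted right by `shift`."""
    rng = np.random.default_rng(seed)
    left = (rng.random((size, size)) < density).astype(float)
    right = left.copy()
    r0 = (size - square) // 2          # first row/column of the central square
    r1 = r0 + square                   # one past its last row/column
    # displace the central square horizontally in the right image
    right[r0:r1, r0 + shift:r1 + shift] = left[r0:r1, r0:r1]
    # fill the strip uncovered by the shift with new random dots
    right[r0:r1, r0:r0 + shift] = (rng.random((square, shift)) < density).astype(float)
    return left, right

left, right = random_dot_stereogram()
```

Outside the central rows the two images are identical, so neither image on its own reveals the square; only the horizontal offset between the eyes distinguishes it.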

Each image looks unstructured when viewed on its own, but under binocular fusion the shifted region jumps out vividly at a different depth (Qian, 1997). Several experiments based on solid bars and RDS provided results that strengthened the notion that four basic categories of disparity-selective neurons are present (Gonzales and Perez, 1998). A few reports also mention two additional categories, namely tuned near and tuned far (Poggio et al, 1988; Gonzales et al, 1993a), which combine the properties of tuned-excitatory cells with those of near and far cells respectively. Figure 2.11 illustrates the disparity tuning curves for the six categories of cells, obtained from experiments on the visual cortex of monkeys carried out by Poggio et al (1988). TN refers to tuned near, TE to tuned excitatory, TF to tuned far, NE to near, TI to tuned inhibitory, and FA to far.

Figure 2.11: Disparity Tuning Curves (reprinted from [24])

Amid all these findings, a proper mathematical approach to simulating the disparity selectivity of cortical cells failed to emerge (Qian, 1997). In 1990, however, Ohzawa, DeAngelis and Freeman proposed the disparity energy model to simulate the response of complex cells. This model has been widely accepted as a very good description of the behaviour of disparity-selective cells in V1, and the next section describes it in depth. However, results obtained from some physiological studies have not agreed with certain of its predictions. In an attempt to account for these discrepancies, Read et al (2002) proposed a modified version of the original energy model. The basic features of Read’s model are also highlighted in this chapter.


2.2.5 Ohzawa-DeAngelis-Freeman (ODF) ENERGY MODEL (1990)

An earlier section highlighted certain properties of complex cells that make them well suited for disparity encoding. To recap, these include selectivity for different stimulus disparities, an indiscriminate response to contrast polarity and an apparent insensitivity to stimulus position. Ohzawa and colleagues argue that these properties are not enough to create a suitable disparity detector, and outline three additional properties that need to be taken into account to develop a suitable algorithm. These are:

1. The disparity selectivity of complex cells must be much finer than that predicted by the size of their RFs, as reported by Nikara et al (1968).
2. The preferred disparity must be constant for all stimulus positions within the RF.
3. Incorrect contrast polarity combinations should be ineffective if presented at the optimal disparity for the matched polarity pair, i.e. a bright bar to one eye and a dark bar to the other should not give rise to a response at the preferred disparity.

The authors explain the purpose of the first two requirements using the illustration in figure 2.12. Figure 2.12(a) shows the RF of a cortical neuron in image space on the left and right retinas. The hatched diamond-shaped zone represents the region viewed by both eyes. Any stimulus within this zone should elicit a response from the neuron in question. But this implies that the neuron is limited to crude disparity selectivity, since this region encompasses many different disparities (Ohzawa et al, 1990). A disparity detector should be more specific, i.e. it should respond to a restricted region of visual space. In this example, if we assume that the two eyes are fixated on an object (meaning zero disparity), then the dark-shaded oval region represents a suitable zone. A graphical representation of this region is shown in figure 2.12(b). The diagonal represents the locus of zero disparity, and the two axes represent stimulus positions in the left and right eyes. For nonzero disparity, the sensitive region of a detector must lie parallel to, and off, the diagonal.

The third requirement deals with “mismatched contrast polarity” (Ohzawa et al, 1990). Recall that complex cells exhibit phase independence, since they are insensitive to contrast polarity. The question that arises is whether a disparity detector should respond if anticorrelated stimuli are presented to the eyes at the correct disparity (e.g. a bright bar to one eye and a dark one to the other). This scenario does not arise in natural viewing, because the images on the two retinas come from the same object, so a bright spot on one retina cannot correspond to a dark spot in the corresponding part of the other retina (Ohzawa et al, 1990). In a computational framework, however, it is very likely to happen, especially with RDS as inputs. Ohzawa and colleagues classify this requirement as a “non-trivial” one and suggest that it is “counterintuitive to expect the detector to reject anti-correlated stimuli at the correct disparity on the basis of mismatched contrast polarity”.

Figure 2.12: Desired characteristics of a disparity detector (reprinted from [45])

Taking into account all the aforementioned requirements, Ohzawa and collaborators devised the disparity energy model. It consists of four binocular simple-cell subunits whose outputs are combined to produce the output of a complex cell. The inputs from the two eyes are combined to give the output of each subunit. The resulting signal is then subjected to half-wave rectification followed by a squaring non-linearity, and the response of the complex cell is obtained by summing the contributions of the subunits.

The authors postulate that the simple-cell subunits must meet certain requirements to produce a smooth binocular profile. These are as follows:

• They must have similar monocular properties, such as spatial frequency, orientation, and the size and position of the RF envelopes.
• They must share a common preferred disparity.
• The phases of the four simple cells must differ from each other by multiples of 90° (quadrature phase).
• The RFs of the simple cells must be Gabor-shaped.
• The simple cells must be organised into “push-pull” pairs, i.e. the RFs of one simple cell are the inverses of those of the other; in other words, an ON region of one cell corresponds to an OFF region in the other.

The schematic diagram in figure 2.13 shows the ODF model for a tuned-excitatory neuron. S1 and S2 form one push-pull pair, while S3 and S4 form the other. Members of a push-pull pair are mutually inhibitory (Ohzawa et al, 1990).

Figure 2.13: ODF Disparity Energy Model (reprinted from [47])
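The tuned-excitatory energy unit can be sketched in 1-D as follows. This is a minimal illustration, not the authors' implementation: the Gabor parameters, the 1-D retina and the bar stimuli are assumptions chosen for clarity, and both eyes use identical RFs, as for the tuned-excitatory unit of figure 2.13. The four subunits have phases 0, 90°, 180° and 270°; each push-pull partner is represented by negating its pair's linear output.

```python
import numpy as np

def gabor(x, sigma=1.0, freq=0.25, phase=0.0):
    """1-D Gabor receptive-field profile centred at 0 (illustrative parameters)."""
    return np.exp(-x ** 2 / (2 * sigma ** 2)) * np.cos(2 * np.pi * freq * x + phase)

def half_square(v):
    """Half-wave rectification followed by a squaring non-linearity."""
    return np.maximum(v, 0.0) ** 2

def energy_response(left_img, right_img, x):
    """Tuned-excitatory complex cell built from four binocular simple-cell subunits."""
    total = 0.0
    for phase in (0.0, np.pi / 2):                 # two pairs, 90 degrees apart
        rf = gabor(x, phase=phase)                 # same RF in both eyes
        s = rf @ left_img + rf @ right_img         # binocular linear sum
        # push-pull partner has ON/OFF regions swapped, so its linear output is -s
        total += half_square(s) + half_square(-s)
    return total

x = np.arange(-10.0, 11.0)                         # 1-D retina with unit spacing

def bar(position, contrast=1.0):
    """Thin bar at an integer retinal position (contrast -1 for a dark bar)."""
    return np.where(x == position, contrast, 0.0)

r_zero = energy_response(bar(0), bar(0), x)        # zero disparity
r_disp = energy_response(bar(0), bar(2), x)        # disparity of 2 units
r_anti = energy_response(bar(0), bar(0, -1.0), x)  # bright left, dark right
```

With these illustrative values, `r_zero` is about 4.0 and `r_disp` about 0.75, so the unit prefers zero disparity; `r_anti` is essentially zero because the bright and dark bars cancel in the linear binocular sum.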

2.2.6 ENERGY MODEL: READ et al (2002)

This model is very similar to the ODF model, except that half-wave rectification is performed on the inputs from each eye before they converge on a binocular cell. This thresholding is achieved by introducing monocular cells, as shown in figure 2.14, and the authors claim that this alteration gives results closer to real neuronal behaviour when anticorrelated inputs are used. The model also emphasizes the type of synapses that the monocular cells make with the binocular neurons, to account for inhibitory effects of visual stimuli observed in biological experiments.

Figure 2.14: Modified Energy Model (reprinted from [58])
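The key change in Read's variant, rectifying each eye's signal before binocular convergence, can be sketched by moving the rectification in front of the binocular sum. This is a simplified reading under stated assumptions, not Read et al.'s exact formulation: the function name `read_style_response`, the Gabor parameters and the identical left and right RFs are illustrative, and the excitatory and inhibitory synapse types emphasised by the authors are not modelled.

```python
import numpy as np

def gabor(x, sigma=1.0, freq=0.25, phase=0.0):
    """1-D Gabor receptive-field profile centred at 0 (illustrative parameters)."""
    return np.exp(-x ** 2 / (2 * sigma ** 2)) * np.cos(2 * np.pi * freq * x + phase)

def rectify(v):
    """Half-wave rectification."""
    return np.maximum(v, 0.0)

def read_style_response(left_img, right_img, x):
    """Energy-style unit with monocular rectification before binocular summation."""
    total = 0.0
    for phase in (0.0, np.pi / 2):                 # quadrature pair
        rf = gabor(x, phase=phase)
        left, right = rf @ left_img, rf @ right_img
        # monocular cells rectify each eye's signal before convergence; the sum of
        # two rectified signals is already non-negative, so it is simply squared
        total += (rectify(left) + rectify(right)) ** 2     # subunit
        total += (rectify(-left) + rectify(-right)) ** 2   # push-pull partner
    return total

x = np.arange(-10.0, 11.0)

def bar(position, contrast=1.0):
    """Thin bar at an integer retinal position (contrast -1 for a dark bar)."""
    return np.where(x == position, contrast, 0.0)

correlated = read_style_response(bar(0), bar(0), x)
anticorrelated = read_style_response(bar(0), bar(0, -1.0), x)
```

Here the correlated bar gives 4.0 and the anticorrelated bar gives 2.0. Had the two eyes' signals been summed before rectification, the anticorrelated pair would have cancelled exactly (1 + (-1) = 0); monocular rectification prevents that cancellation.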

2.3 TOPOGRAPHY

The topographic arrangement of the primary visual cortex and the presence of cortical feature maps were highlighted in a previous section. The maps are superimposed to form a hierarchical representation of the input features. This type of representation was suggested by Hubel and Wiesel (1977), who postulated that orientation and OD maps are overlaid so as to optimize the uniform representation of all possible combinations of orientation, eye preference and retinal position (Hubel and Wiesel, 1977). Subsequent studies using optical imaging techniques have supported this claim that superimposed, spatially periodic maps are present in the cortex, and have also strengthened the views formulated by Hubel and Wiesel about the geometrical relations between feature maps (Swindale, 2000).

In addition to orientation and OD columns in the cortex, maps for direction of motion and spatial frequency have also been observed (Hubener et al, 1997; Shmuel and Grinvald, 1996; Welliky et al, 1996). This raises the question: do spatially periodic maps exist for other features that influence neuronal behaviour? Cortical cells are known to respond to a multitude of visual feature attributes, so corresponding feature maps may also be present in the cortex. Only delicate biological experiments using state-of-the-art imaging techniques can provide clear-cut results.

2.3.1 TOPOGRAPHY AND DISPARITY

Studies on stereoscopic vision have so far focused more on the physiology of simple

and complex cells and their role in encoding disparity. Very few studies have

concentrated on the topographic arrangement of disparity-sensitive neurons in V1.

Blakemore (1970) initially proposed that ‘constant depth’ columns can be found in cat V1 (DeAngelis, 2000). He argued that such columns consist of neurons with similar receptive-field position disparities. However, because of the limited experimental setup and the lack of substantial data, his findings were not considered sufficient to resolve this issue conclusively.

Another study conducted by LeVay and Voigt (1988) showed that “nearby V1 neurons

have slightly more similar disparity preferences than would be expected by chance”

(DeAngelis, 2000), although, as DeAngelis (2000) points out, the clustering was not as pronounced as in the case of orientation selectivity or ocular dominance. Moreover, most of the neurons investigated were near the V1/V2 border, which weakens the claim that V1 has disparity-selective columns (DeAngelis, 2000).

Most work in this area has shifted to looking for these maps in higher cortical areas. Recent research by DeAngelis and Newsome (1999), using electrode penetrations, has provided some evidence for a map of binocular disparity in the middle temporal visual area (MT, also known as V5) of macaque monkeys. They found discrete patches of disparity-selective neurons interspersed among other patches showing no disparity sensitivity. They claim that the preferred disparity varies within the disparity-tuned patches and that disparity columns exist in MT, and possibly in V2, since MT receives input connections from V2. This is an interesting finding, but not yet conclusive enough to prove the existence of spatially periodic disparity maps. Reliable results can only be obtained if a large population of neurons can be measured at once, for example by using optical imaging techniques. Experiments using such a procedure have in fact been conducted by Ts’o et al (2001). They combined optical imaging, single-unit electrophysiology and cytochrome oxidase (CO) histology to investigate the structural organization for colour, form (orientation) and disparity in area V2 of monkeys. Because their findings may be highly relevant to the investigation of disparity maps in the brain, the prominent features of this work are described in a separate subsection.

2.3.2 TS’O, WANG ROE and GILBERT (2001)

Previous work on primates using CO histology has shown that V2 has a hierarchical

organization reminiscent of that found in V1, except that cells are compartmentalised

based on orientation-selectivity, colour preference and disparity selectivity. Two main

types of alternating CO-rich stripes have been observed, the thick and thin stripes that

are often separated by a third type commonly referred to as the pale stripe (Tootell and

Hamilton, 1989). The thick stripes are believed to contain cells selective for disparity and motion, the thin stripes appear to contain colour-selective cells, and the pale stripes are associated with orientation selectivity. Ts’o and colleagues combined optical imaging, electrode penetrations and CO histology for increased effectiveness and reliability. The first set of experiments produced results that supported the tripartite model of visual processing in V2 put forward by earlier work. They found unoriented, colour-selective cells in the thin stripes and, of greater interest to this project, cells selective for retinal disparity in the thick stripes. These cells were not colour selective and had complex, oriented RFs (Ts’o et al, 2001). They also observed that these cells showed little or no response to monocular stimulation but responded vigorously to binocular stimuli over a small range of disparities. To distinguish the colour and disparity stripes more clearly, they stimulated the animals monocularly, the idea being that disparity-selective regions would not respond. The expected clear-cut delineation between the disparity and colour stripes was not observed, leading the authors to suggest the existence of subcompartments within the V2 stripes. Based on this type of hierarchical organisation in V2, they draw a parallel with V1, which also contains subcompartments, although they do not mention the presence of disparity stripes in V1. Other relevant observations in the disparity stripes concern the orientation preference and type of the disparity-selective cells. Most of the cells had vertical or near-vertical preferred orientations. Within a disparity stripe, most columns contained cells of the tuned-excitatory type, but columns populated with the other three types of cells were also present. The authors conclude that “one key functional role of area V2 lies in the establishment of a definitive functional organisation and cortical map for retinal disparity”.


2.4 SELF-ORGANIZATION

How does the brain develop such distinctive characteristics? Researchers believe that these maps form by the self-organization of the afferent connections to the cortex and are shaped by visual experience (Miikkulainen et al, 2005), through cooperation and competition between neurons driven by correlations in the input activity (Sirosh and Miikkulainen, 1993). As research in this field intensified, it became clear that self-organization is influenced not only by the afferent connections but also by lateral connections parallel to the cortical surface (Miikkulainen et al, 2005). Based on various observations, Miikkulainen and collaborators believe that the wiring of the lateral connections is not static but rather develops “synergetically and simultaneously” with the afferent connections, based on visual experience. These researchers describe the adult visual cortex as a “continuously adapting recurrent structure in a dynamic equilibrium, capable of rapid changes in response to altered visual environments” (Miikkulainen et al, 2005). On this view, the functional role of the afferent and lateral connections is to suppress redundant information in the input stimuli while remaining able to learn correlations found in novel visual features. This type of organization, which relies heavily on visual input, is termed input-driven self-organization.

If self-organization depends heavily on the types of inputs presented, what about the influence of genetic factors on the arrangement of neurons in the brain? The previous discussion might mislead the reader into thinking that cortical maps develop solely as a result of visual activity after birth. Experimental findings suggest that this is not the case. Indeed, it is believed that both genetic and environmental factors affect the topography of the cortex. In the prenatal stage, internally generated activity such as retinal waves and Ponto-Geniculo-Occipital (PGO) waves is thought to provide genetically specified training patterns that initiate the self-organizing process. Studies have shown that animals have brain regions showing orientation selectivity even before birth (Miikkulainen et al, 2005), strengthening the hypothesis that genetically determined internal signals initiate the self-organizing process. Thus, an organism already has a basic topographic framework at birth, and this organization is constantly refined by visually evoked activity after birth.

2.5 COMPUTATIONAL MODELS

Neuroscience has been the focus of extensive research for a very long time, but researchers are still far from a unified account of ongoing neural mechanisms and processes. The sheer complexity of the brain has so far proved an overwhelming hurdle, although, to be fair, considerable headway has been made in many research areas.

Setting up biological experiments requires considerable time and effort, which makes progress in neuroscience slow and painful. For some time now, scientists have also adopted a different approach to research, namely computational modelling. This has added a new dimension to studies of the brain and has been embraced by many researchers. As Miikkulainen et al (2005) concisely put it, computational models can be used in place of biological experiments “to test ideas that are difficult to establish experimentally, and to direct experiment to areas that are not understood well”. Ohzawa and colleagues (1990) point out that these models play an important role in neuroscience because they can provide quantitative predictions that may be compared with results from biological experiments. Because of these attractive features, it is unsurprising that computational neuroscience has been used to investigate the self-organizing process.

Ever since von der Malsburg (1973) pioneered this area of research by using a two-dimensional network of neural units to model the cortex, several other models have been proposed (Miikkulainen et al, 2005). However, most of these models did not account for the dynamic nature of the lateral connections (Miikkulainen et al, 2005), and therefore might not be ideal for simulating the self-organizing process. More recent models have focused more on the dynamic nature of the visual cortex, with increased emphasis on the interaction between the afferent and lateral connections (Miikkulainen et al, 2005). The model of interest for this project is LISSOM, proposed by Miikkulainen and colleagues; section 2.6 describes its hierarchical and functional properties. LISSOM builds on the simple but effective self-organizing feature map (SOM) model proposed by Kohonen (1982b), and the next section gives a brief overview of this well-known algorithm.

2.5.1 KOHONEN SOM

SOM maps a high-dimensional input data space onto a two-dimensional array of

neurons. In the context of our study, the latter represents the cortical surface whereas

the former refers to a receptor surface such as the retina. Every unit in the neural sheet

is connected to all the units on the receptor surface, such that all the cortical units

receive the same input stimuli. So if we have a retina of N units, each neuron will have

an input vector of length N. Each connection has a positive synaptic weight. Since each

neuron is connected to N inputs, it will have a weight vector of length N associated with it. The neuron computes its initial response as a function of the Euclidean distance between the input and weight vectors. A winner-take-all process usually operates, whereby the cortical neuron with the highest activation (the one whose weight vector is closest to the input) affects the activity of nearby neurons through a neighbourhood function. Each weight vector is then moved towards the input vector by an amount proportional to their difference, scaled by the learning rate and the neighbourhood function. Initially the connection

weights are random, such that each neuron responds randomly to activity on the retina.

During learning, the weights adapt, slowly making each neuron more specific to

particular input patterns. Consequently, the weight vectors become better

approximations of the input vectors, and neighbouring weight vectors become more

similar. After many iterations, the weight vectors become an ordered map of the input

space, thereby leading to retinotopy.
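The SOM training loop described above can be sketched as follows. The text does not fix these details, so the following are assumptions: a Gaussian neighbourhood function, a linearly decaying learning rate and radius, initial weights drawn from a small random range, and 2-D input points standing in for the N-dimensional retinal input vectors. The function names are hypothetical.

```python
import numpy as np

def train_som(inputs, rows=10, cols=10, steps=2000, alpha0=0.5, radius0=5.0, seed=0):
    """Kohonen-style SOM sketch: returns weights of shape (rows, cols, input_dim)."""
    rng = np.random.default_rng(seed)
    # random initial weights, drawn here from a small region (assumption)
    weights = rng.uniform(0.4, 0.6, size=(rows, cols, inputs.shape[1]))
    # (row, col) coordinates of every unit on the 2-D sheet
    grid = np.stack(np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij"), axis=-1)
    for t in range(steps):
        x = inputs[rng.integers(len(inputs))]
        # the winner is the unit whose weight vector is closest in Euclidean distance
        dist = np.linalg.norm(weights - x, axis=-1)
        winner = np.array(np.unravel_index(np.argmin(dist), dist.shape))
        frac = t / steps
        alpha = alpha0 * (1.0 - frac)                 # decaying learning rate
        radius = max(radius0 * (1.0 - frac), 0.5)     # shrinking neighbourhood
        d2 = np.sum((grid - winner) ** 2, axis=-1)
        h = np.exp(-d2 / (2.0 * radius ** 2))         # Gaussian neighbourhood function
        # move each weight vector towards the input, scaled by the neighbourhood
        weights += alpha * h[..., None] * (x - weights)
    return weights

def quantization_error(weights, inputs):
    """Mean distance from each input to its closest weight vector."""
    flat = weights.reshape(-1, weights.shape[-1])
    d = np.linalg.norm(inputs[:, None, :] - flat[None, :, :], axis=-1)
    return d.min(axis=1).mean()

data = np.random.default_rng(1).random((500, 2))      # 2-D "retinal positions"
before = quantization_error(train_som(data, steps=0), data)
after = quantization_error(train_som(data), data)
```

As training proceeds, the weight vectors spread out to cover the input space and neighbouring units come to represent neighbouring positions, so the quantization error falls.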

2.6 LISSOM

LISSOM stands for Laterally Interconnected Synergetically Self-Organizing Map. It is

a learning algorithm designed to capture the essence of the self-organizing process in

the visual cortex by concentrating on certain processes that have been overlooked by

most computational models, more specifically the influence of the lateral connections.

Miikkulainen et al (2005) regard LISSOM as a model that can give concrete, testable answers regarding the following hypotheses:

1. input-driven self-organization is responsible for shaping cortical structures
2. self-organization is influenced by inputs that are internally generated, as a result of the genetic blueprint of an organism, as well as by visually evoked activity from the environment
3. perceptual grouping, i.e. the process of finding correlations in the input stimuli to successfully and coherently identify an object in the visual scene, is a consequence of the interaction between afferent and lateral connections

LISSOM is inspired by various studies of the self-organizing process and by data collected about the structure of the cortex. It was developed in an attempt to model neurobiological phenomena and yield results that may inspire new research directions. The salient features of LISSOM, as described by Miikkulainen and collaborators (2005), are given below:

1. the neural sheet is a two-dimensional array of computational units, each unit corresponding to a vertical column in the cortex
2. each cortical unit receives input from a local anatomical receptive field in the retina, usually with the ON-centre and OFF-centre channels of the LGN as intermediate sheets between the input and output sheets
3. interactions between afferent and lateral connections govern the input-driven self-organizing process
4. each cortical unit has a weight vector whose length is determined by the number of connections; it responds by computing a weighted sum of its input
5. learning is based on Hebbian adaptation with divisive normalization, which serves as a computational counterpart of biological learning

The following sections describe the LISSOM model in more detail. Most of the material is taken from Chapter 3 of the book ‘Computational Maps in the Visual Cortex’ (CMVC) by Miikkulainen and colleagues (2005).

2.6.1 LISSOM ARCHITECTURE

The architecture of the basic LISSOM model is shown in figure 2.15. Each V1 neuron is connected to a set of neurons in the LGN-ON and LGN-OFF sheets. In LISSOM terminology, a connection field is the region of a lower-level sheet that connects directly to a neuron in a higher-level sheet. Thus each cortical neuron is connected to specific regions of the LGN sheets. This is unlike the SOM model, wherein each cortical neuron is fully connected to the lower layer. The LGN neurons in turn have connection fields onto the retina. Each neuron

develops an initial response as a weighted sum of the activation in its afferent input

connections. The lateral connections translate the initial activation pattern into a

localized response on the map. After a settling period, the connection weights of cortical

neurons are modified through Hebbian learning. As the self-organizing process

progresses, activity bubbles are produced that become increasingly focused and

localized. The result is a self-organized structure in a dynamic equilibrium with the

input.

Figure 2.15: Architecture of the basic LISSOM model (reprinted from [40])


RETINA

The retinal sheet is an array of photoreceptors that can be activated by the presentation of input patterns. The activity χxy of photoreceptor (x, y) is calculated according to

$$\chi_{xy} = \max_k \exp\left(-\frac{(x - x_{c,k})^2 + (y - y_{c,k})^2}{\sigma_u^2}\right)$$

where (xc,k, yc,k) specifies the centre of Gaussian k and σu its width.

LGN

The connection weights of the LGN neurons are set to fixed strengths using a difference-of-Gaussians (DoG) model. There is a retinotopic mapping between the LGN and the retina. The weights are calculated from the difference of two normalized Gaussians; weights for an OFF-centre cell are the negative of the ON-centre weights, i.e. they are calculated as the surround minus the centre. The weight Lxy,ab from receptor (x, y) in the connection field of an ON-centre cell (a, b) with centre (xc, yc) is given by

$$L_{xy,ab} = \frac{\exp\left(-\frac{(x-x_c)^2 + (y-y_c)^2}{\sigma_c^2}\right)}{\sum_{uv} \exp\left(-\frac{(u-x_c)^2 + (v-y_c)^2}{\sigma_c^2}\right)} - \frac{\exp\left(-\frac{(x-x_c)^2 + (y-y_c)^2}{\sigma_s^2}\right)}{\sum_{uv} \exp\left(-\frac{(u-x_c)^2 + (v-y_c)^2}{\sigma_s^2}\right)}$$

where σc determines the width of the central Gaussian and σs the width of the surround Gaussian.

The cells in the ON and OFF channels of the LGN compute their responses as a squashed weighted sum of activity in their receptive fields. Mathematically, the response ξab of an LGN cell (a, b) is computed by

$$\xi_{ab} = \sigma\left(\gamma_L \sum_{xy} \chi_{xy} L_{xy,ab}\right)$$

where χxy is the activation of cell (x, y) in the connection field of (a, b), Lxy,ab is the afferent weight from (x, y) to (a, b), and γL is a constant scaling factor. The squashing function σ is a piecewise linear approximation of a sigmoid activation function.
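The retina and LGN stages described above can be sketched with NumPy. This is an illustrative sketch, not Topographica's implementation: the grid sizes, σ values, γL and the thresholds of the piecewise-linear sigmoid are all hypothetical choices, and coordinates are plain array indices (row = y, column = x).

```python
import numpy as np

def retina_activity(size, centres, sigma_u):
    """chi_xy = max_k exp(-((x - x_ck)^2 + (y - y_ck)^2) / sigma_u^2) on a size x size grid."""
    ys, xs = np.mgrid[0:size, 0:size]
    blobs = [np.exp(-((xs - xc) ** 2 + (ys - yc) ** 2) / sigma_u ** 2)
             for xc, yc in centres]
    return np.max(blobs, axis=0)

def dog_weights(radius, sigma_c, sigma_s):
    """ON-centre weights: normalized centre Gaussian minus normalized surround Gaussian."""
    ys, xs = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    d2 = xs ** 2 + ys ** 2
    centre = np.exp(-d2 / sigma_c ** 2)
    surround = np.exp(-d2 / sigma_s ** 2)
    return centre / centre.sum() - surround / surround.sum()

def pw_sigmoid(s, lower=0.0, upper=1.0):
    """Piecewise-linear sigmoid: 0 below `lower`, 1 above `upper`, linear in between."""
    return np.clip((s - lower) / (upper - lower), 0.0, 1.0)

def lgn_response(chi, row, col, weights, gamma_l=2.0):
    """xi = sigma(gamma_L * sum_xy chi_xy L_xy); the field must lie inside the retina."""
    r = weights.shape[0] // 2
    patch = chi[row - r:row + r + 1, col - r:col + r + 1]
    return float(pw_sigmoid(gamma_l * np.sum(patch * weights)))

on_w = dog_weights(radius=4, sigma_c=0.5, sigma_s=2.0)
off_w = -on_w                                          # OFF-centre: surround minus centre

spot = retina_activity(21, [(10, 10)], sigma_u=1.0)    # one Gaussian spot at the centre
uniform = np.ones((21, 21))                            # featureless input
```

Because the two normalized Gaussians each sum to one, the DoG weights sum to zero: a uniform retina produces no LGN response, while a small bright spot drives the ON-centre cell and leaves the OFF-centre cell silent.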


CORTEX

The total activation is obtained by taking both the afferent and lateral connections into account. First, the afferent stimulation sij of V1 neuron (i, j) is calculated as a weighted sum of activations in its connection fields on the LGN:

$$s_{ij} = \gamma_A \left( \sum_{ab \in \mathrm{ON}} \xi_{ab} A_{ab,ij} + \sum_{ab \in \mathrm{OFF}} \xi_{ab} A_{ab,ij} \right)$$

where ξab is the activation of neuron (a, b) in the receptive field of neuron (i, j) in the ON or OFF channels, Aab,ij is the corresponding afferent weight, and γA is a constant scaling factor. The afferent stimulation is squashed using the sigmoid activation function, giving the neuron’s initial response:

$$\eta_{ij}(0) = \sigma(s_{ij})$$

where σ(·) is a piecewise linear sigmoid. After the initial response, lateral connections influence cortical activity over discretized time steps. At each of these time steps, the neuron combines the afferent stimulation s with lateral excitation and inhibition:

$$\eta_{ij}(t) = \sigma\left( s_{ij} + \gamma_E \sum_{kl} \eta_{kl}(t-1)\, E_{kl,ij} - \gamma_I \sum_{kl} \eta_{kl}(t-1)\, I_{kl,ij} \right)$$

where ηkl(t-1) is the activity of another cortical neuron (k, l) during the previous time

step, Ekl,ij is the excitatory lateral connection weight on the connection from that neuron

to neuron (i, j), and Ikl,ij is the inhibitory connection weight. The scaling factors γE and γI

represent the relative strengths of excitatory and inhibitory lateral interactions.
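The settling process described above can be sketched for a 1-D row of cortical units. This is a minimal illustration under assumptions: the thresholds of the piecewise-linear sigmoid, γE, γI, the number of settling steps and the Gaussian shapes of the lateral weights are all hypothetical values, and the weight matrices are indexed as `E[k, i]` for the connection from unit k to unit i, matching Ekl,ij.

```python
import numpy as np

def pw_sigmoid(s, lower=0.1, upper=0.65):
    """Piecewise-linear sigmoid: 0 below `lower`, 1 above `upper`, linear in between."""
    return np.clip((s - lower) / (upper - lower), 0.0, 1.0)

def settle(s, E, I, gamma_e=0.9, gamma_i=0.9, steps=10, lower=0.1, upper=0.65):
    """s: afferent stimulation (n,); E, I: (n, n) lateral weights, E[k, i] from k to i."""
    eta = pw_sigmoid(s, lower, upper)                  # initial response eta(0)
    for _ in range(steps):
        # sum_k eta_k(t-1) E[k, i] for every i, likewise for inhibition
        lateral = gamma_e * (eta @ E) - gamma_i * (eta @ I)
        eta = pw_sigmoid(s + lateral, lower, upper)
    return eta

n = 20
idx = np.arange(n)
dist2 = (idx[:, None] - idx[None, :]) ** 2
E = np.exp(-dist2 / 2.0)                               # short-range excitation
E /= E.sum(axis=0)                                     # weights onto each unit sum to 1
I = np.exp(-dist2 / 50.0)                              # longer-range inhibition
I /= I.sum(axis=0)
s = 0.8 * np.exp(-(idx - 10) ** 2 / 8.0)               # afferent bump around unit 10
eta = settle(s, E, I)
```

Short-range excitation combined with broader inhibition is what lets the lateral interactions sharpen the initial response into a localized activity bubble.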

Unlike the LGN connections, connections to the cortex are not set to fixed strengths. Weight adaptation of afferent and lateral connections is based on Hebbian learning with divisive postsynaptic normalization:

$$w'_{pq,ij} = \frac{w_{pq,ij} + \alpha X_{pq}\, \eta_{ij}}{\sum_{uv} \left( w_{uv,ij} + \alpha X_{uv}\, \eta_{ij} \right)}$$

where wpq,ij is the current afferent or lateral connection weight from (p, q) to (i, j), w’pq,ij is the new weight to be used until the end of the next settling process, α is the learning rate for each type of connection, Xpq is the presynaptic activity after settling, and ηij stands for the activity of neuron (i, j) after settling.
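The weight update described above, for the incoming weights of a single neuron, can be sketched as follows. This is an illustrative sketch: the function name is hypothetical, and the normalization here runs over whatever array is passed in, whereas a full model would decide which groups of connections (for example ON and OFF afferents together) share one normalization.

```python
import numpy as np

def hebbian_update(w, x, eta, alpha):
    """w' = (w + alpha * X * eta) / sum(w + alpha * X * eta) for one neuron's weights."""
    w_new = w + alpha * x * eta        # Hebbian term: presynaptic times postsynaptic activity
    return w_new / w_new.sum()         # divisive postsynaptic normalization

w = np.full(4, 0.25)                   # four equal incoming weights
x = np.array([1.0, 0.0, 0.0, 0.0])     # only the first input was active
w2 = hebbian_update(w, x, eta=1.0, alpha=0.5)
```

The active connection grows from 0.25 to 0.5 while the inactive ones shrink to 1/6 each: normalization keeps the total fixed, so strengthening one connection necessarily weakens the others.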

2.6.2 SELF-ORGANIZATION IN LISSOM

Orientation maps have been widely investigated using computational modelling, and LISSOM studies have also focused extensively on the topographic arrangement of cortical neurons by orientation preference. Figure 2.16 shows the organization of orientation preferences before and after the self-organizing process.

Figure 2.16: Orientation map in LISSOM at iteration 0 and at iteration 10,000 (reprinted from [40])

2.7 TOPOGRAPHICA

The Topographica simulator has been developed by Miikkulainen and collaborators to investigate topographic maps in the brain. It is continually being refined and extended to make it as general as possible and to support the investigation of biological phenomena that are not well understood. This study on disparity required the addition of some new functionality to Topographica, especially for the generation of disparity feature maps. The next section highlights the map measurement techniques currently used in Topographica.


2.7.1 MAP MEASUREMENT IN TOPOGRAPHICA

There are various algorithms that can be used for feature map measurement. Most of

these techniques produce similar results, especially in cases where neurons are strongly

selective for the feature being investigated, but might yield different results for units

that are less selective (Miikkulainen et al, 2005). There are typically two types of

methods, namely direct and indirect, that can be used to compute preference maps. In

direct methods, maps can be calculated directly from the weight values of each neuron,

while indirect methods involve presenting a set of input patterns and analyzing the

responses of each neuron. The choice of map measurement technique usually implies a

tradeoff between efficiency and accuracy, since direct methods are more efficient while

indirect methods are more accurate (Miikkulainen et al, 2005). In Topographica both

methods have been used to calculate feature maps. For example, the map of preferred

position is obtained by computing the centre of gravity of each neuron’s afferent

weights, whereas orientation maps are calculated by an indirect method called the

weighted average method, introduced by Blasdel and Salama (1986). The next section

gives a detailed account of this method. It is based almost entirely from material in

Appendix G.1.3 of the CMVC book.
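The direct method used for the preferred-position map can be sketched in a few lines of Python. This is a minimal illustration, not Topographica's code: it assumes a neuron's afferent weights are available as a 2-D NumPy array and reports the centre of gravity in array (row, column) indices rather than in Topographica's sheet coordinates. The function name `preferred_position` is hypothetical.

```python
import numpy as np


def preferred_position(weights: np.ndarray) -> tuple[float, float]:
    """Centre of gravity of a neuron's 2-D afferent weight array.

    Returns (row, col) in array-index units; mapping to sheet
    coordinates is left out of this sketch.
    """
    total = weights.sum()
    if total == 0:
        raise ValueError("weights sum to zero; centre of gravity is undefined")
    rows, cols = np.indices(weights.shape)
    # Weight each grid position by its connection strength and average.
    row = float((rows * weights).sum() / total)
    col = float((cols * weights).sum() / total)
    return row, col


# Two equal weights at (1, 1) and (1, 3) put the centre of gravity between them.
w = np.zeros((5, 5))
w[1, 1] = w[1, 3] = 1.0
print(preferred_position(w))  # → (1.0, 2.0)
```

No input patterns are presented here; the preference is read straight off the weights, which is why direct methods are cheap compared with the indirect weighted average method.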

WEIGHTED AVERAGE METHOD

In the weighted average method, inputs are presented so that the whole range of combinations of parameter values is covered. For each value of the map parameter, the maximal response of the neuron is recorded. The preference of the neuron is then the average of the map parameter values, each weighted by the peak response it elicited. The method is easiest to follow when stated mathematically and then illustrated with an example of its application, as done below.

Consider the weighted average method being used to compute orientation preferences. For each orientation $\phi$, parameters such as spatial frequency and phase are varied systematically, and the peak response $\hat{\eta}_\phi$ is recorded. Each orientation $\phi$ is encoded as a vector with length $\hat{\eta}_\phi$ and orientation $2\phi$ (the angle is doubled because orientation is cyclic over 180° rather than 360°). The vector $V = (V_x, V_y)$ is formed by summing these vectors over all orientation values:

$$V_x = \sum_\phi \hat{\eta}_\phi \cos 2\phi, \qquad V_y = \sum_\phi \hat{\eta}_\phi \sin 2\phi$$

The preferred orientation $\theta$ of the neuron is estimated as half the orientation of $V$:

$$\theta = \tfrac{1}{2}\,\mathrm{atan2}(V_y, V_x)$$

where $\mathrm{atan2}(V_y, V_x)$ returns $\tan^{-1}(V_y / V_x)$ with the quadrant of the result chosen based on the signs of both arguments. Orientation selectivity is given by the magnitude of $V$, which can be normalized by the sum of the peak responses so that different neurons can be compared more easily. The normalized orientation selectivity $S$ is:

$$S = \frac{\sqrt{V_x^2 + V_y^2}}{\sum_\phi \hat{\eta}_\phi}$$

As an illustration, suppose that input patterns were presented at orientations 0°, 60° and 120°, and phases 0, π/8, …, 7π/8, for a total of 24 patterns. Assume that the peak responses of a given neuron over all phases were 0.1 for 0°, 0.4 for 60° and 0.8 for 120°. Then $V_x = 0.1 - 0.2 - 0.4 = -0.5$ and $V_y = (0.4 - 0.8)\sin 120^\circ \approx -0.346$, so the preferred orientation and selectivity of this neuron are

$$\theta = \tfrac{1}{2}\,\mathrm{atan2}(-0.346, -0.5) \approx 107.4^\circ \pmod{180^\circ}, \qquad S = \frac{\sqrt{0.5^2 + 0.346^2}}{0.1 + 0.4 + 0.8} \approx 0.47$$

The interesting point here is the selectivity value. The neuron has relatively low selectivity because it responds quite well to patterns at two different orientations, 60° and 120°. High selectivity, in contrast, indicates a strong bias towards one particular value of a feature parameter.
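The weighted average calculation above can be reproduced with a short Python sketch. It assumes the peak response for each orientation can be obtained by presenting every orientation/phase combination; `toy_response` is a hypothetical stand-in for presenting a pattern and reading a neuron's activity, returning the worked example's values regardless of phase. None of the names are Topographica's API. Angles are in degrees and the result is wrapped to [0°, 180°).

```python
import itertools
import math


def weighted_average_orientation(peaks: dict[float, float]) -> tuple[float, float]:
    """Preferred orientation (degrees, in [0, 180)) and normalized selectivity S.

    `peaks` maps each orientation phi in degrees to its peak response.
    """
    # Each orientation contributes a vector of length peak and angle 2*phi.
    vx = sum(r * math.cos(math.radians(2 * phi)) for phi, r in peaks.items())
    vy = sum(r * math.sin(math.radians(2 * phi)) for phi, r in peaks.items())
    # Halve the angle of V and wrap, since orientation repeats every 180 degrees.
    theta = (math.degrees(math.atan2(vy, vx)) / 2) % 180
    total = sum(peaks.values())
    selectivity = math.hypot(vx, vy) / total if total > 0 else 0.0
    return theta, selectivity


orientations = [0.0, 60.0, 120.0]
phases = [k * math.pi / 8 for k in range(8)]  # 0, pi/8, ..., 7pi/8

# Hypothetical stand-in for presenting one pattern and reading the neuron's
# response; it returns the worked example's values whatever the phase.
EXAMPLE_PEAKS = {0.0: 0.1, 60.0: 0.4, 120.0: 0.8}


def toy_response(orientation: float, phase: float) -> float:
    return EXAMPLE_PEAKS[orientation]


# Present all 3 x 8 = 24 combinations and keep the peak response per orientation.
peaks: dict[float, float] = {}
for phi, phase in itertools.product(orientations, phases):
    peaks[phi] = max(peaks.get(phi, float("-inf")), toy_response(phi, phase))

theta, s = weighted_average_orientation(peaks)
print(f"theta = {theta:.1f} deg, S = {s:.2f}")  # → theta = 107.4 deg, S = 0.47
```

Summing vectors at doubled angles, rather than averaging raw angles, keeps responses at 10° and 170° from averaging to 90°: their doubled angles (20° and 340°) sum to a vector pointing at 0°, correctly giving a preference near 0°/180°.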

2.8 MODEL OF DISPARITY SELF-ORGANIZATION

Although numerous models of self-organization have been put forward, very few have been tailored to investigate the topographic arrangement of neurons by disparity preference. This is surprising: because the presence of disparity maps in V1 has yet to be confirmed by biological data, computational models would be well placed to make predictions that could guide experimental research. One such study was undertaken by Wiemer et al (2000).


2.8.1 WIEMER ET AL (2000)

The aim of their research was to investigate the representation of orientation, ocular dominance and disparity in the cortex. Since orientation and OD maps have been widely studied using both computational and traditional neuroscience techniques, their work focused mainly on the potential presence of disparity maps in the cortex. They used the SOM algorithm for learning. That choice is unremarkable in itself; what made their work distinctive was the method used to present input. An earlier section made clear the importance of the input stimuli in the self-organization process. Wiemer and colleagues stress the significance of the type of binocular stimuli presented if clumps of disparity-selective neurons are to have any chance of emerging. They generate such stimuli by first creating a three-dimensional scene and then taking stereo pictures of it. According to the authors, this kind of processing preserves the correlations between stimulus features such as orientation and disparity. Further processing includes cutting the pictures into ocular stripes and aligning them in alternating order to produce a fused projection. The whole fused image is not presented as the input stimulus. Instead, chunks of it that contain features from both the left and right ocular stripes are selected based on the amount of correlation between them. The algorithm rejects any chunk in which the part from the left ocular stripe and the part from the right ocular stripe are only weakly correlated. This ensures that the left- and right-eyed parts correspond to the same three-dimensional object (Wiemer et al, 2000). The selected chunks are the binocular stimuli presented to the network. They are normalized so that differences in brightness between pairs of stereo images do not affect the final results.
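The chunk selection and normalization steps can be illustrated with a short Python sketch. The description above does not give the exact correlation measure, threshold or normalization, so several choices here are assumptions rather than Wiemer et al.'s procedure: each chunk is taken to be already split into its left-stripe and right-stripe parts, Pearson correlation is the measure, the threshold `min_corr=0.5` is hypothetical, and normalization means rescaling each part to zero mean and unit variance. The function names are illustrative only.

```python
import numpy as np


def _normalise(patch: np.ndarray) -> np.ndarray:
    """Rescale a patch to zero mean and unit variance (removes brightness offsets)."""
    patch = patch.astype(float)
    return (patch - patch.mean()) / patch.std()


def select_binocular_chunks(left_parts, right_parts, min_corr=0.5):
    """Keep (left, right) patch pairs whose pixel correlation is at least min_corr.

    Pearson correlation and the default threshold are assumptions of this sketch.
    """
    selected = []
    for left, right in zip(left_parts, right_parts):
        lv = left.astype(float).ravel()
        rv = right.astype(float).ravel()
        if lv.std() == 0 or rv.std() == 0:
            continue  # a flat patch has undefined correlation, so reject it
        corr = np.corrcoef(lv, rv)[0, 1]
        if corr < min_corr:
            continue  # weakly correlated: probably not the same 3-D object
        selected.append((_normalise(left), _normalise(right)))
    return selected


rng = np.random.default_rng(0)
base = rng.random((8, 8))
left_parts = [base, base]
# Pair 1: same content, brighter and higher-contrast in the right eye.
# Pair 2: contrast-reversed content, perfectly anti-correlated.
right_parts = [2.0 * base + 1.0, 1.0 - base]
chunks = select_binocular_chunks(left_parts, right_parts)
print(len(chunks))  # → 1
```

The first pair survives and, after normalization, its two halves become identical, which is the point of normalizing: brightness and contrast differences between the stereo images no longer matter.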

Figure 2.17 (reprinted from [69]): A and B represent the stereo images obtained from

the three-dimensional scene. These images are divided into stripes, which are arranged

in alternating order to form the image shown in C. Chunks of this image are then

selected based on the amount of correlation to yield the pool of stimuli, shown in D, to

be presented to the network.


The results obtained by Wiemer and collaborators are shown in figures 2.18 and 2.19. Figure 2.18 shows maps of left-eyed and right-eyed receptive fields. RFs of different shapes and asymmetries are present, with pools of neurons preferring similar patterns that vary gradually across the two-dimensional grid (Wiemer et al, 2000).

Figure 2.18: Maps of left-eyed and right-eyed receptive fields (reprinted from [69])

Figure 2.19 shows the resulting binocular feature representation, obtained by fusing the left- and right-eyed receptive fields, together with the corresponding orientation, ocular dominance and disparity maps. Comparing maps B and D in figure 2.19 clearly shows that disparity differences are greatest in regions of vertical and oblique orientation (Wiemer et al, 2000). White patches in D represent positive disparities, black patches negative disparities, and gray zero or very small disparities. Based on these results, the authors suggest that subcompartments corresponding to different disparities might exist within regions of constant orientation, so that the orientation, ocular dominance and disparity maps might be geometrically related.

The study by Wiemer and colleagues is an intriguing one, to say the least. Their conclusion that disparity patches lie within regions of relatively uniform orientation preference is consistent with the experiments conducted by Ts’o et al in area V2 of the macaque monkey. There are, however, certain reservations about the validity of their model. First, the authors do not state which region of the visual cortex they are simulating, which casts doubt on the specificity of their experiment. Moreover, they do not use overlapping receptive fields, a distinctive feature of cortical neurons that might be important for self-organization. In addition, the manner in which binocular stimuli are presented is quite debatable, although the algorithm used to extract such stimuli from a three-dimensional scene is impressive. They appear to use the notion of a ‘cyclopean’ eye to process the binocular stimuli, instead of two eyes with slightly disparate images incident on them. Finally, the resulting maps show serious discrepancies compared with those found in biology: the neurons lack the centre-surround, two-lobed or three-lobed RFs that are so characteristic of cortical neurons, and the orientation map is far from showing any kind of periodicity. Despite these apparent imperfections, this model is one of the first to probe the existence of disparity maps, and the effort by Wiemer et al is therefore worth some consideration.

Figure 2.19: Binocular Feature Representation (reprinted from [69])


CHAPTER 3

METHODOLOGY

This chapter is divided into two main sections. The first describes the methods used to investigate the self-organization of disparity maps. The second describes the work done in an attempt to solve the problem of the phase-dependent behaviour of current LISSOM models.

3.1 SELF-ORGANIZATION OF DISPARITY SELECTIVITY

At the onset of the project, the Topographica software did not have the functionality needed to investigate the potential self-organization of disparity selectivity. The first major task was therefore to understand the intricacies of the simulator well enough to provide an appropriate experimental setup for inspecting and measuring disparity preferences and selectivities. More specifically, the first requirement was to develop a network based on two retinas, with suitable input patterns on each one, to simulate the input-driven self-organizing process that is believed to culminate in disparity selectivity in the primary visual cortex of primates. The second major task was to develop a map measurement mechanism to compute the disparity preferences of the cortical units. The following sections give a detailed account of how these two tasks were tackled.

3.1.2 TWO-EYE MODEL FOR DISPARITY SELECTIVITY

Most studies using LISSOM have been based on a single eye, although two retinas have been used before to investigate ocular dominance. However, those experiments on eye preference were conducted with the C++ version of LISSOM, and there was no tailor-made Topographica script that dealt with two eyes. The first, and perhaps the most important, aspect of the project was therefore to come up with a model that could reliably simulate the self-organization process. The starting point and inspiration was the lissom_oo_or.ty script, which can be found in the examples folder of the topographica directory. It is used to investigate orientation selectivity based on a single retina and LGN ON/OFF channels. The model for this simulation is illustrated in figure 3.1.


Figure 3.1: Model used in lissom_oo_or.ty

As can be seen from the illustration, the model consists of an input sheet (‘Retina’), an LGN layer (‘LGNOff’ and ‘LGNOn’), and an output sheet (‘V1’). The connections from the retina to the LGN (‘Surround’ and ‘Centre’) are based on the difference-of-Gaussians (DoG) model. The afferent connections, namely ‘LGNOffAfferent’ and ‘LGNOnAfferent’, regulate the activation of neurons in the V1 sheet. Lateral connections are also included; they are represented as the dotted yellow circles in ‘V1’.
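The ‘Centre’ and ‘Surround’ connections described above together give LGN units a difference-of-Gaussians receptive field. The following NumPy sketch shows the idea for one ON/OFF pair. It is not how lissom_oo_or.ty implements its projections: the kernel size and the two Gaussian widths are hypothetical values, the two Gaussians are merged into a single kernel for brevity, and half-wave rectification of the LGN output is an assumption of the sketch.

```python
import numpy as np


def dog_kernel(size: int, sigma_centre: float, sigma_surround: float) -> np.ndarray:
    """ON-centre difference-of-Gaussians kernel.

    Each Gaussian is normalised to unit sum, so the kernel sums to zero
    and a uniform input produces no net drive.
    """
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    r2 = x**2 + y**2
    centre = np.exp(-r2 / (2 * sigma_centre**2))
    surround = np.exp(-r2 / (2 * sigma_surround**2))
    return centre / centre.sum() - surround / surround.sum()


def lgn_responses(retina: np.ndarray, kernel: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Rectified ON and OFF responses at every position where the kernel fits."""
    windows = np.lib.stride_tricks.sliding_window_view(retina, kernel.shape)
    drive = np.einsum("ijkl,kl->ij", windows, kernel)
    on = np.maximum(drive, 0.0)    # centre brighter than surround
    off = np.maximum(-drive, 0.0)  # centre darker than surround
    return on, off


kernel = dog_kernel(7, sigma_centre=0.5, sigma_surround=2.0)  # hypothetical sizes

# A uniform retina drives neither channel; a single bright dot drives ON cells.
on_u, off_u = lgn_responses(np.ones((15, 15)), kernel)
dot = np.zeros((15, 15))
dot[7, 7] = 1.0
on_d, off_d = lgn_responses(dot, kernel)
print(on_u.max() < 1e-12, on_d[4, 4] > 0, off_d[4, 4] == 0)  # → True True True
```

Because the ON and OFF channels are mirror images of the same drive, a bright dot excites ON units centred on it, while OFF units whose surround covers the dot are also excited; a uniform field leaves both silent.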

Another important point concerns the type of input pattern. This model makes use of

elongated Gaussians. They are generated randomly across the input space, and their