
The free-energy principle: a rough guide to the brain?

Karl Friston

Presented by: Gokrna Poudel

Guiding questions

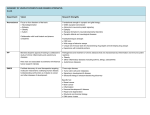

Q1: Explain the following terms: KL divergence, entropy, ergodic, free energy, Bayesian surprise, generative model, recognition density, sufficient statistics.

Q2: Explain the free-energy principle of the brain, i.e. the fact that self-organizing biological agents resist a tendency to disorder and therefore minimize the entropy of their sensory states. Give various forms of free energy.

Q3: How can action reduce free energy? How can perception reduce free energy? How can active sampling of the sensorium contribute to free-energy reduction?

Q4: Explain the neurobiological architecture for implementing the free-energy principle in Figure 1 in Box 1. Describe each of the modules in the figure and their functions, as well as the quantities that define the free energy.


Q5: Describe the sufficient statistics representing a hierarchical dynamic model of the world in the brain in Figure 1 in Box 2. How are they related to each other? How are changes in synaptic activity, connectivity and gain involved in perceptual inference, learning and attention?

Q6: Formulate and describe the neuronal architecture for the hierarchical dynamic model in Figure 1 in Box 3. How are the forward prediction errors computed? How are the backward predictions made? What are the sources of the forward and backward connections in terms of brain anatomy?

Q7: A key implementational issue is how the brain encodes the recognition density. There are two forms of probabilistic neuronal codes: free forms and fixed forms. Give examples of each form and explain them.

Q8: What kinds of optimization schemes does the brain use? Does it use deterministic search on free energy to optimize action and perception, or does it use stochastic search? What is your opinion?


KL (Kullback-Leibler) divergence:

Also called information divergence, information gain or relative entropy, the KL divergence is a non-commutative (non-symmetric) measure of the difference between two probability distributions: in general, D_KL(P || Q) ≠ D_KL(Q || P).

$$D_{KL}(P \,\|\, Q) = \int p(x) \log \frac{p(x)}{q(x)} \, dx$$
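A minimal Python sketch of the discrete form of this definition (the integral becomes a sum over outcomes). The two distributions are made up purely to show that swapping the arguments changes the value:

```python
import math

def kl_divergence(p, q):
    """Discrete D_KL(P || Q) = sum_x p(x) * log(p(x) / q(x)), in nats.

    Outcomes with p(x) == 0 contribute nothing; if q(x) == 0 where p(x) > 0,
    the divergence is infinite.
    """
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue
        if qx == 0:
            return math.inf
        total += px * math.log(px / qx)
    return total

p = [0.7, 0.2, 0.1]   # illustrative distributions over three outcomes
q = [0.4, 0.4, 0.2]
print(kl_divergence(p, q))  # about 0.184
print(kl_divergence(q, p))  # about 0.192: not symmetric
print(kl_divergence(p, p))  # 0.0: a distribution does not diverge from itself
```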


Ergodic:

A process is ergodic if its long-term time average converges to its ensemble average. Ergodic processes that evolve for a long time forget their initial states.
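As an illustration, a first-order autoregressive (AR(1)) process with |a| < 1 is used below as an example of an ergodic process; the choice of process and all numbers are assumptions for the sketch. It compares the time average of one long trajectory with the ensemble average over many independent trajectories, all started far from equilibrium:

```python
import random

def ar1_path(x0, a, noise_sd, steps, rng):
    """Simulate x[t+1] = a * x[t] + Gaussian noise; ergodic when |a| < 1."""
    xs, x = [], x0
    for _ in range(steps):
        x = a * x + rng.gauss(0.0, noise_sd)
        xs.append(x)
    return xs

rng = random.Random(0)
a, noise_sd, x0 = 0.8, 1.0, 10.0   # start far from the stationary mean (0)

# Time average: one long trajectory.
path = ar1_path(x0, a, noise_sd, 200_000, rng)
time_avg = sum(path) / len(path)

# Ensemble average: many independent trajectories, each observed at one late time.
finals = [ar1_path(x0, a, noise_sd, 100, rng)[-1] for _ in range(2_000)]
ensemble_avg = sum(finals) / len(finals)

print(time_avg, ensemble_avg)  # both near 0: the starting value 10.0 is forgotten
```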

Entropy:

The average surprise of outcomes sampled from a probability density. A density with low entropy means that, on average, the outcome is relatively predictable.

The second law of thermodynamics states that the entropy of an isolated system tends to increase with time. Entropy is a measure of disorder or, more simply, of (the logarithm of) the number of ways the elements of a system can be rearranged.
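The definition of entropy as average surprise can be checked numerically. This sketch (the two example distributions are illustrative assumptions) compares the exact entropy of a discrete density with a Monte Carlo average of the surprise, -log p(x), over sampled outcomes:

```python
import math
import random

def entropy(p):
    """Shannon entropy H(p) = -sum_x p(x) log p(x), in nats."""
    return -sum(px * math.log(px) for px in p if px > 0)

def average_surprise(p, n_samples, rng):
    """Monte Carlo estimate of E[-log p(x)] over outcomes x sampled from p."""
    outcomes = rng.choices(range(len(p)), weights=p, k=n_samples)
    return sum(-math.log(p[x]) for x in outcomes) / n_samples

rng = random.Random(1)
peaked = [0.97, 0.01, 0.01, 0.01]   # outcome nearly always the same: low entropy
flat = [0.25, 0.25, 0.25, 0.25]     # every outcome equally likely: maximal entropy

est = average_surprise(peaked, 100_000, rng)
print(entropy(peaked), est)          # exact entropy vs. sampled average surprise
print(entropy(flat), math.log(4))    # the uniform density attains log(number of outcomes)
```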


Generative model:

A forward model, i.e. a probabilistic mapping from causes to observed consequences (data). It is usually specified in terms of the likelihood of getting some data given their causes (the parameters of a model) and priors on those parameters.
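To make the "causes to consequences" direction concrete, here is a sketch of a hypothetical one-dimensional linear-Gaussian generative model (all names and numbers are assumptions for illustration). Data are produced by ancestral sampling: a cause is drawn from the prior, then a datum is drawn from the likelihood given that cause:

```python
import random

# Hypothetical parameters of a toy linear-Gaussian generative model.
PRIOR_MEAN, PRIOR_SD = 1.0, 0.5   # prior p(v) over the hidden cause v
THETA = 2.0                        # parameter mapping the cause to expected data
NOISE_SD = 0.3                     # sensory noise in the likelihood p(s | v)

def sample_generative_model(n, rng):
    """Ancestral sampling: draw a cause from the prior, then data from the likelihood."""
    samples = []
    for _ in range(n):
        v = rng.gauss(PRIOR_MEAN, PRIOR_SD)    # cause ~ p(v)
        s = rng.gauss(THETA * v, NOISE_SD)     # datum ~ p(s | v)
        samples.append((v, s))
    return samples

rng = random.Random(2)
data = sample_generative_model(50_000, rng)
mean_s = sum(s for _, s in data) / len(data)
var_s = sum((s - mean_s) ** 2 for _, s in data) / len(data)
print(mean_s, var_s)   # near THETA * PRIOR_MEAN = 2.0 and THETA**2 * PRIOR_SD**2 + NOISE_SD**2 = 1.09
```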

Recognition density:

An approximating conditional density, i.e. an approximate probability distribution over the causes of data. It is the product of inference, that is, of inverting a generative model.
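Inverting a generative model can be sketched for the same kind of hypothetical linear-Gaussian model (cause v with a Gaussian prior, data s = THETA * v plus Gaussian noise; all values are assumptions). Because this model is conjugate, the exact posterior is Gaussian, so a Gaussian recognition density can match it; a brute-force grid computation is included only as a check:

```python
import math

# Hypothetical model: v ~ N(PRIOR_MEAN, PRIOR_SD^2), s ~ N(THETA * v, NOISE_SD^2).
PRIOR_MEAN, PRIOR_SD = 1.0, 0.5
THETA, NOISE_SD = 2.0, 0.3

def recognition_density(s):
    """Return (mean, sd) of p(v | s) by combining prior and likelihood precisions."""
    prior_prec = 1.0 / PRIOR_SD**2
    lik_prec = THETA**2 / NOISE_SD**2          # precision of the likelihood as a function of v
    post_prec = prior_prec + lik_prec
    post_mean = (prior_prec * PRIOR_MEAN + (THETA / NOISE_SD**2) * s) / post_prec
    return post_mean, 1.0 / math.sqrt(post_prec)

def grid_posterior_mean(s, lo=-5.0, hi=5.0, n=20_001):
    """Check: posterior mean by evaluating prior * likelihood on a fine grid."""
    step = (hi - lo) / (n - 1)
    num = den = 0.0
    for i in range(n):
        v = lo + i * step
        w = math.exp(-0.5 * ((v - PRIOR_MEAN) / PRIOR_SD) ** 2
                     - 0.5 * ((s - THETA * v) / NOISE_SD) ** 2)
        num += v * w
        den += w
    return num / den

mean, sd = recognition_density(3.0)
print(mean, sd, grid_posterior_mean(3.0))   # analytic and numerical means agree
```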

Sufficient statistics:

Quantities that are sufficient to parameterize a probability density (e.g., the mean and covariance of a Gaussian density).
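For a one-dimensional Gaussian, the count, the sum and the sum of squares are sufficient: once they are accumulated, the raw data can be discarded and the mean and variance recovered. The class below is a hypothetical helper written for this illustration:

```python
import random
from dataclasses import dataclass

@dataclass
class GaussianStats:
    """Sufficient statistics of 1-D data under a Gaussian model:
    the count, the sum and the sum of squares."""
    n: int = 0
    sum_x: float = 0.0
    sum_x2: float = 0.0

    def add(self, x: float) -> None:
        self.n += 1
        self.sum_x += x
        self.sum_x2 += x * x

    def merge(self, other: "GaussianStats") -> "GaussianStats":
        return GaussianStats(self.n + other.n, self.sum_x + other.sum_x,
                             self.sum_x2 + other.sum_x2)

    def mean_variance(self):
        mean = self.sum_x / self.n
        return mean, self.sum_x2 / self.n - mean * mean   # maximum-likelihood variance

rng = random.Random(5)
data = [rng.gauss(5.0, 2.0) for _ in range(1_000)]

batch_a, batch_b = GaussianStats(), GaussianStats()
for x in data[:400]:
    batch_a.add(x)
for x in data[400:]:
    batch_b.add(x)

combined = batch_a.merge(batch_b)   # only three numbers per batch are needed
print(combined.mean_variance())
```

The sum-of-squares formula is the simplest to read but can lose precision when the variance is tiny relative to the squared mean; Welford's running algorithm is the usual remedy.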


Bayesian theory

In Bayesian probability theory, one of two “events” is the hypothesis, H, and the other is the data, D, and we wish to judge the relative truth of the hypothesis given the data. According to Bayes’ rule, we do this via the relation

$$P(H \mid D) = \frac{P(D \mid H)\, P(H)}{P(D)}$$
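A minimal sketch of Bayes' rule, P(H | D) = P(D | H) P(H) / P(D), over a discrete set of hypotheses. The two hypotheses and their probabilities are made up for illustration:

```python
def bayes_posterior(prior, likelihood):
    """Bayes' rule over a discrete set of hypotheses.

    prior[h] = P(H = h), likelihood[h] = P(D | H = h).
    Returns (posterior, evidence) with posterior[h] = P(D | h) P(h) / P(D).
    """
    joint = {h: likelihood[h] * prior[h] for h in prior}
    evidence = sum(joint.values())            # P(D): the model evidence
    posterior = {h: j / evidence for h, j in joint.items()}
    return posterior, evidence

prior = {"H1": 0.5, "H2": 0.5}        # hypothetical prior beliefs
likelihood = {"H1": 0.8, "H2": 0.2}   # hypothetical P(D | H) for the observed data
posterior, evidence = bayes_posterior(prior, likelihood)
print(posterior, evidence)   # H1 ≈ 0.8, H2 ≈ 0.2, P(D) ≈ 0.5
```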

Bayesian surprise:

A measure of salience based on the divergence between the recognition and prior densities. It measures the information in the data that can be recognized.
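A sketch of Bayesian surprise as the KL divergence from the prior to the recognition (posterior) density, using the closed-form KL between one-dimensional Gaussians. The toy model, in which the observation is a noisy reading of the cause itself, is an assumption chosen for brevity:

```python
import math

def gaussian_kl(m_q, sd_q, m_p, sd_p):
    """D_KL(N(m_q, sd_q^2) || N(m_p, sd_p^2)) in nats (1-D closed form)."""
    return math.log(sd_p / sd_q) + (sd_q**2 + (m_q - m_p) ** 2) / (2 * sd_p**2) - 0.5

def bayesian_surprise(prior_mean, prior_sd, obs, obs_sd):
    """Divergence between the posterior and the prior after one Gaussian observation."""
    prior_prec, obs_prec = 1 / prior_sd**2, 1 / obs_sd**2
    post_prec = prior_prec + obs_prec
    post_mean = (prior_prec * prior_mean + obs_prec * obs) / post_prec
    return gaussian_kl(post_mean, 1 / math.sqrt(post_prec), prior_mean, prior_sd)

expected = bayesian_surprise(0.0, 1.0, obs=0.0, obs_sd=1.0)     # data as anticipated
unexpected = bayesian_surprise(0.0, 1.0, obs=4.0, obs_sd=1.0)   # data far from the prior
print(expected, unexpected)   # about 0.097 vs about 2.097 nats
```

Even the anticipated observation carries some Bayesian surprise, because it still sharpens the posterior relative to the prior.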


Free energy

The free-energy principle is an attempt to explain the structure and function of the brain, starting from the fact that we exist.

Free-energy is an information-theoretic quantity that bounds the evidence for a model of data: it is greater than (or equal to) the negative log-evidence, or ‘surprise’, in sensory data, given a model of how they were generated.
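The bound can be checked numerically. This sketch assumes a hypothetical model with three discrete causes and one observed sensory outcome, and computes F = E_q[ln q(v) - ln p(s, v)] for several recognition densities q: F equals the surprise -ln p(s) only when q is the exact posterior, and exceeds it otherwise.

```python
import math

def free_energy(q, prior, lik):
    """F = E_q[ln q(v) - ln p(s, v)] for one observed s, with p(s, v) = p(s | v) p(v)."""
    return sum(qv * (math.log(qv) - math.log(pv * lv))
               for qv, pv, lv in zip(q, prior, lik) if qv > 0)

prior = [0.6, 0.3, 0.1]       # hypothetical p(v) over three causes
lik = [0.1, 0.5, 0.9]         # hypothetical p(s | v) for the observed s
evidence = sum(p * l for p, l in zip(prior, lik))
surprise = -math.log(evidence)                        # -ln p(s | m)
posterior = [p * l / evidence for p, l in zip(prior, lik)]

uniform = [1 / 3, 1 / 3, 1 / 3]
for q in (uniform, [0.8, 0.1, 0.1], posterior):
    print(round(free_energy(q, prior, lik), 4), ">=", round(surprise, 4))
```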


Action, perception and the sensorium: contributions to free-energy reduction

We are open systems in exchange with the environment; the environment acts on us to produce sensory impressions, and we act on the environment to change its states.

When we change the environment or our relationship to it, sensory input changes. Therefore, action can reduce free-energy by changing sensory input (selectively sampling what is predicted), whereas perception reduces free-energy by changing predictions.

We sample the world to ensure that our predictions become a self-fulfilling prophecy and surprises are avoided. In this view, perception is enslaved by action to provide veridical predictions that guide active sampling of the sensorium.
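The division of labour between perception and action can be sketched with a hypothetical scalar free energy F = 0.5*pi_s*(s - mu)^2 + 0.5*pi_p*(mu - eta)^2, where mu is the prediction, eta a prior expectation and s = x_env + a the sensory input, which action a can change. The model and parameter names are assumptions for illustration. Perception alone can only settle on a compromise, whereas perception plus action samples input that fulfils the prior prediction and drives F towards zero:

```python
def simulate(act, steps=4000, dt=0.05, eta=1.0, pi_s=1.0, pi_p=1.0, x_env=-1.0):
    """Gradient descent on the toy free energy above.

    mu (perception) always descends dF/dmu; a (action) descends dF/da = dF/ds
    (since ds/da = 1) only when act is True.
    """
    mu, a = 0.0, 0.0
    for _ in range(steps):
        s = x_env + a
        dF_ds = pi_s * (s - mu)
        dF_dmu = -pi_s * (s - mu) + pi_p * (mu - eta)
        mu -= dt * dF_dmu            # perception: change predictions
        if act:
            a -= dt * dF_ds          # action: change sensory input
    s = x_env + a
    F = 0.5 * pi_s * (s - mu) ** 2 + 0.5 * pi_p * (mu - eta) ** 2
    return s, mu, F

s_p, mu_p, F_p = simulate(act=False)   # perception only
s_a, mu_a, F_a = simulate(act=True)    # perception and action
print(s_p, mu_p, F_p)   # s stays at -1, mu settles halfway to eta, F stays at 1.0
print(s_a, mu_a, F_a)   # s and mu both approach eta = 1, F approaches 0
```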


No. 4

Explain the neurobiological architecture for implementing the free-energy principle in Figure 1 in Box 1. Describe each of the modules in the figure and their functions, as well as the quantities that define the free energy.


Neurobiological architecture for implementing the free-energy principle


Upper panel: schematic detailing the quantities that define free-energy.

Lower panel: alternative expressions for the free-energy that show what its minimization entails.

For action, free-energy can only be suppressed by increasing the accuracy of sensory data (i.e. selectively sampling data that are predicted by the representation).
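For reference, the alternative expressions in the lower panel can be written as follows. The notation is the one commonly used in Friston's papers (s̃: sensory data; ϑ: environmental causes; μ: internal states that parameterize the recognition density q; a: action; m: the model). This restatement is offered as a study aid, not a reproduction of the figure:

```latex
\begin{aligned}
F &= -\langle \ln p(\tilde{s}, \vartheta \mid m) \rangle_q + \langle \ln q(\vartheta \mid \mu) \rangle_q
   && \text{energy minus entropy} \\
  &= D\big(q(\vartheta \mid \mu) \,\|\, p(\vartheta \mid \tilde{s}, m)\big) - \ln p(\tilde{s} \mid m)
   && \text{divergence plus surprise} \\
  &= D\big(q(\vartheta \mid \mu) \,\|\, p(\vartheta \mid m)\big) - \langle \ln p(\tilde{s}(a) \mid \vartheta, m) \rangle_q
   && \text{complexity minus accuracy}
\end{aligned}
```

Because a divergence is never negative, the second line gives F ≥ -ln p(s̃ | m). In the third line only the accuracy term depends on the sensory data, so action can lower F only by increasing accuracy; in the second line the surprise term does not depend on μ, so perception can lower F only by reducing the divergence, which makes q an approximate posterior.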


No. 5

Describe the sufficient statistics representing a hierarchical dynamic model of the world in the brain in Figure 1 in Box 2. How are they related to each other? How are changes in synaptic activity, connectivity and gain involved in perceptual inference, learning and attention?


Hierarchical dynamics of the brain


The key architectural feature is the hierarchy. The recognition density is encoded in terms of its sufficient statistics.

The figure shows three sorts of representations, pertaining to the states {x, v}, parameters θ and precisions λ of a hierarchical dynamic model; these are encoded by neural activity, synaptic connectivity and synaptic gain, respectively. Crucially, the optimization of any one representation depends on the others.


The equations associated with this partition represent a gradient descent on free-energy and correspond to:

(i) perceptual inference on states of the world (i.e. optimizing synaptic activity);

(ii) perceptual learning of the parameters underlying causal regularities (i.e. optimizing synaptic efficacy); and

(iii) attention, or optimizing the expected precision of states in the face of random fluctuations and uncertainty (i.e. optimizing synaptic gain).
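A toy sketch of update (i), assuming a single-level linear model s ≈ theta * mu with Gaussian noise rather than the hierarchical model in the figure (the model, names and numbers are hypothetical). The state estimate mu stands in for synaptic activity, theta for connection strength and pi_s for synaptic gain (sensory precision). Only inference is shown, with theta and pi_s held fixed; learning and attention would add slower gradient steps on theta and pi_s. Raising the gain lets the sensory prediction error pull the estimate further from the prior:

```python
def infer_state(s, theta, pi_s, eta=0.0, pi_p=1.0, dt=0.01, steps=5000):
    """Perceptual inference as gradient descent on the toy free energy
        F(mu) = 0.5*pi_s*(s - theta*mu)**2 + 0.5*pi_p*(mu - eta)**2
    eta and pi_p are the prior mean and prior precision.
    """
    mu = eta
    for _ in range(steps):
        eps_s = s - theta * mu       # sensory prediction error
        eps_p = mu - eta             # prior prediction error
        mu += dt * (theta * pi_s * eps_s - pi_p * eps_p)   # mu_dot = -dF/dmu
    return mu

def analytic_state(s, theta, pi_s, eta=0.0, pi_p=1.0):
    """Closed-form minimiser of F(mu), used to check the gradient descent."""
    return (theta * pi_s * s + pi_p * eta) / (theta**2 * pi_s + pi_p)

s, theta = 2.0, 1.0
low_gain = infer_state(s, theta, pi_s=0.25)
high_gain = infer_state(s, theta, pi_s=4.0)
print(low_gain, high_gain)   # about 0.4 and 1.6: higher gain moves mu toward s / theta = 2
```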


No. 6

Formulate and describe the neuronal architecture for the hierarchical dynamic model in Figure 1 in Box 3. How are the forward prediction errors computed? How are the backward predictions made? What are the sources of the forward and backward connections in terms of brain anatomy?


Neuronal architecture for the hierarchical dynamic model


Schematic detailing the neuronal architectures that might encode a density on the states of a hierarchical dynamic model.

This shows the speculative cells of origin of forward driving connections, which convey prediction error from a lower area to a higher area, and of backward connections, which construct predictions.

These predictions try to explain away prediction error in lower levels. In this scheme, the sources of forward and backward connections are superficial and deep pyramidal cells, respectively.
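The message passing can be sketched with a hypothetical two-level linear model (parameters and names are assumptions, and the code does not model anatomy). Backward connections carry predictions down (theta1 * mu1 predicts the input, theta2 * mu2 predicts mu1); forward connections carry precision-weighted prediction errors (xi) up. Each level is driven by the error from below and restrained by the error at its own level, which is a gradient descent on F = 0.5 * sum of precision-weighted squared errors:

```python
def predictive_coding(s, theta1=2.0, theta2=0.5, eta=1.0, pi=(1.0, 1.0, 1.0),
                      dt=0.05, steps=2000):
    """Two-level linear predictive coding (hypothetical toy model).

    Level 1 cause mu1 predicts the input:   s   ~ theta1 * mu1
    Level 2 cause mu2 predicts level 1:     mu1 ~ theta2 * mu2
    A fixed prior eta predicts level 2:     mu2 ~ eta
    """
    pi0, pi1, pi2 = pi
    mu1, mu2 = 0.0, 0.0
    for _ in range(steps):
        # Backward (top-down) predictions.
        pred_s = theta1 * mu1
        pred_mu1 = theta2 * mu2
        # Forward (bottom-up) precision-weighted prediction errors.
        xi0 = pi0 * (s - pred_s)
        xi1 = pi1 * (mu1 - pred_mu1)
        xi2 = pi2 * (mu2 - eta)
        # Error from below drives each level; its own error restrains it.
        mu1 += dt * (theta1 * xi0 - xi1)
        mu2 += dt * (theta2 * xi1 - xi2)
    return mu1, mu2, (xi0, xi1, xi2)

mu1, mu2, errors = predictive_coding(s=3.0)
print(mu1, mu2, errors)   # at equilibrium, errors at each level are balanced
```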


No. 7

A key implementational issue is how the brain encodes the recognition density. There are two forms of probabilistic neuronal codes: free forms and fixed forms. Give examples of each form and explain them.


Brain encoding of the recognition density

The free-energy principle induces the recognition density, which has to be represented by its sufficient statistics. It is therefore a given that the brain represents probability distributions over sensory causes.


Probabilistic neuronal codes

Free-form and fixed-form:

o Free form: particle filtering. The recognition density is represented by the sample density of neuronal ensembles, whose activity encodes the location of particles in state-space.


o Probabilistic population code: a method of representing stimuli using the joint activity of a number of neurons. Each neuron has a distribution of responses over some set of inputs, and the responses of many neurons can be combined to estimate some value of the inputs.


o Fixed form: multinomial or Gaussian.

Multinomial forms assume the world is in one of several discrete states and are usually associated with hidden Markov models.

The Gaussian or Laplace assumption allows for continuous and correlated states.
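The contrast between free-form and fixed-form codes can be sketched on a hypothetical one-dimensional model whose exact posterior is known (prior v ~ N(0, 1), observation s = v + noise with unit variance). The free-form code is a cloud of particles produced by one importance-resampling step (a full particle filter would also propagate particles through the dynamics); the fixed-form code is a Gaussian summarized by two sufficient statistics. Population codes and multinomial codes are not shown:

```python
import math
import random

# Hypothetical toy model: v ~ N(0, 1); s = v + noise, noise ~ N(0, 1).
PRIOR_MEAN, PRIOR_SD, NOISE_SD = 0.0, 1.0, 1.0

def free_form(s, n_particles, rng):
    """Free-form code: particles whose sample density approximates p(v | s).

    Draw particles from the prior, weight them by the likelihood p(s | v),
    then resample in proportion to the weights.
    """
    particles = [rng.gauss(PRIOR_MEAN, PRIOR_SD) for _ in range(n_particles)]
    weights = [math.exp(-0.5 * ((s - v) / NOISE_SD) ** 2) for v in particles]
    return rng.choices(particles, weights=weights, k=n_particles)

def fixed_form(s):
    """Fixed-form (Gaussian) code: just a mean and a variance, in closed form."""
    prior_prec, noise_prec = 1 / PRIOR_SD**2, 1 / NOISE_SD**2
    post_prec = prior_prec + noise_prec
    mean = (prior_prec * PRIOR_MEAN + noise_prec * s) / post_prec
    return mean, 1 / post_prec

rng = random.Random(3)
cloud = free_form(s=1.0, n_particles=20_000, rng=rng)
cloud_mean = sum(cloud) / len(cloud)
cloud_var = sum((v - cloud_mean) ** 2 for v in cloud) / len(cloud)
gauss_mean, gauss_var = fixed_form(s=1.0)

print(cloud_mean, cloud_var)   # estimated from 20,000 particles
print(gauss_mean, gauss_var)   # 0.5 0.5 from just two numbers
```

The particle cloud can represent any shape of density at the cost of many samples, whereas the fixed form is compact but only exact when the true posterior has the assumed shape.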


No. 8

What kinds of optimization schemes does the brain use? Does it use deterministic search on free energy to optimize action and perception, or does it use stochastic search? What is your opinion?


Optimization schemes by the brain

According to the free-energy principle, the sufficient statistics representing the recognition density will change to minimize free-energy.

This provides a principled explanation for perception, memory and attention. It accounts for perceptual inference (optimization of synaptic activity to encode the states of the environment), perceptual learning and memory (optimization of synaptic connections that encode contingencies and causal regularities) and attention (neuromodulatory optimization of synaptic gain that encodes the precision of states).


The assumption is that the brain uses a deterministic gradient descent on free-energy to optimize action and perception.

It might also use stochastic searches, sampling the sensorium randomly for a percept with low free-energy.

There is evidence that our eye movements implement an optimal stochastic strategy. This raises interesting questions about the role of stochastic searches, from visual search to foraging, in both perception and action.
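A toy comparison of the two kinds of scheme on a hypothetical one-dimensional landscape with a shallow and a deep well. Plain gradient descent stands in for deterministic search, and a simple greedy random search (accept a random candidate only if it lowers F) stands in for stochastic search; neither is claimed to be what the brain does. Started in the shallow well, the deterministic scheme stays there, while the stochastic one can jump to the deeper well:

```python
import random

def free_energy(x):
    """Hypothetical 1-D landscape with two wells; the left-hand well is deeper."""
    return (x**2 - 1) ** 2 + 0.3 * x

def gradient(x):
    return 4 * x * (x**2 - 1) + 0.3

def gradient_descent(x, lr=0.01, steps=5000):
    """Deterministic search: always move downhill along the gradient."""
    for _ in range(steps):
        x -= lr * gradient(x)
    return x

def stochastic_search(x, rng, proposal_sd=1.0, steps=5000):
    """Stochastic search: propose random moves, keep any that lower free energy."""
    best = free_energy(x)
    for _ in range(steps):
        candidate = x + rng.gauss(0.0, proposal_sd)
        f = free_energy(candidate)
        if f < best:
            x, best = candidate, f
    return x

rng = random.Random(4)
x_det = gradient_descent(1.5)
x_sto = stochastic_search(1.5, rng)
print(x_det, free_energy(x_det))   # stays in the shallower right-hand well
print(x_sto, free_energy(x_sto))   # reaches the deeper left-hand well
```

Greedy random search is only a crude stand-in; simulated annealing or Langevin-style noisy gradient descent are more common stochastic optimizers.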


Summary

Free-energy provides a comprehensive measure of how individuals represent the world and come to sample it adaptively.

The goal is to minimize prediction error (i.e. to suppress free-energy).

Changes in synaptic activity, connectivity and gain can be understood as perceptual inference, learning and attention.


References

Friston. The free-energy principle: a unified brain theory? Nat Rev Neurosci (2010) vol. 11 (2) pp. 127-38

Friston. The free-energy principle: a rough guide to the brain? Trends Cogn Sci (2009) vol. 13 (7) pp. 293-301

Friston et al. A free energy principle for the brain. J Physiol Paris (2006) vol. 100 pp. 70-87


Thank You
