RB Buiatti

Primary transcript wikipedia , lookup

Human genetic variation wikipedia , lookup

Minimal genome wikipedia , lookup

Nutriepigenomics wikipedia , lookup

Human genome wikipedia , lookup

Cre-Lox recombination wikipedia , lookup

Extrachromosomal DNA wikipedia , lookup

Synthetic biology wikipedia , lookup

Gene expression programming wikipedia , lookup

Genome (book) wikipedia , lookup

Koinophilia wikipedia , lookup

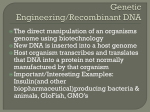

Genetic engineering wikipedia , lookup

Quantitative trait locus wikipedia , lookup

Vectors in gene therapy wikipedia , lookup

Biology and consumer behaviour wikipedia , lookup

Deoxyribozyme wikipedia , lookup

Therapeutic gene modulation wikipedia , lookup

Site-specific recombinase technology wikipedia , lookup

Designer baby wikipedia , lookup

Genome evolution wikipedia , lookup

Non-coding DNA wikipedia , lookup

Population genetics wikipedia , lookup

Genome editing wikipedia , lookup

Point mutation wikipedia , lookup

History of genetic engineering wikipedia , lookup

Helitron (biology) wikipedia , lookup