
Back-propagation: step-by-step procedure

G. Anuradha

Sources: downloaded slides and Sivanandam

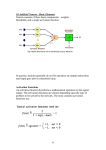

• Step 1: Normalize the inputs and outputs with respect to their maximum values. For each training pair, assume that in normalized form there are $l$ inputs given by $\{I\}$ and $n$ outputs given by $\{O\}$.
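A minimal Python sketch of Step 1, assuming that "with respect to their maximum values" means dividing each input (and each output) component by its largest absolute value over the whole training set. The function name `normalize_by_max` and the sample data are hypothetical.

```python
def normalize_by_max(rows):
    """Scale each column of `rows` by its maximum absolute value.

    Assumption: normalization is per component (column) over the whole
    training set; an all-zero column is left unchanged (scale 1.0).
    The scale factors are returned so that network outputs can later be
    mapped back to the original units.
    """
    n_cols = len(rows[0])
    scales = []
    for j in range(n_cols):
        largest = max(abs(r[j]) for r in rows)
        scales.append(largest if largest != 0 else 1.0)
    scaled = [[r[j] / scales[j] for j in range(n_cols)] for r in rows]
    return scaled, scales


# Hypothetical training set: l = 2 inputs and n = 1 output per pair.
inputs = [[2.0, 10.0], [4.0, 5.0], [1.0, 20.0]]
outputs = [[30.0], [15.0], [60.0]]

I_norm, I_scale = normalize_by_max(inputs)
O_norm, O_scale = normalize_by_max(outputs)
print(I_norm)  # [[0.5, 0.5], [1.0, 0.25], [0.25, 1.0]]
print(O_norm)  # [[0.5], [0.25], [1.0]]
```

Keeping the scale factors is a design choice: multiplying a normalized network output by `O_scale` recovers a value in the original units.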

• Step 2: Assume that the number of neurons $m$ in the hidden layer lies in the range $l < m < 2l$.
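Reading the Step 2 bound as $l < m < 2l$ (with $l$ the number of inputs from Step 1), the admissible hidden-layer sizes can be enumerated directly. This is only a worked check of the arithmetic; the helper name `hidden_size_candidates` is hypothetical.

```python
def hidden_size_candidates(l):
    """Return every integer m with l < m < 2l (both inequalities strict)."""
    return list(range(l + 1, 2 * l))


print(hidden_size_candidates(4))  # [5, 6, 7]
print(hidden_size_candidates(1))  # []  (no integer lies strictly between 1 and 2)
```

For very small $l$ the strict bounds admit no integer, so the range works best as a rule of thumb for a starting size.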

• Step 3: Let $[V]$ be the weights of the synapses connecting the input neurons to the hidden neurons, and let $[W]$ be the weights of the synapses connecting the hidden neurons to the output neurons. Initialize the weights to small random values, usually from $-1$ to $+1$:

$[V]^0 = [\text{random weights}]$

$[W]^0 = [\text{random weights}]$

Initialize the changes in the weights to zero: $[\Delta V]^0 = [\Delta W]^0 = [0]$.
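A sketch of the Step 3 initialization using Python's standard `random` module. It assumes $[V]$ is stored as an $l \times m$ matrix (rows = input neurons, columns = hidden neurons) and $[W]$ as $m \times n$, which is the orientation that makes $[V]^T \{O\}_I$ produce $m$ hidden inputs. Drawing from a uniform distribution and the `seed` parameter are illustrative assumptions; the function name is hypothetical.

```python
import random


def init_weights(l, m, n, seed=None):
    """Initialize [V] (l x m) and [W] (m x n) uniformly in [-1, +1], and the
    weight changes [dV] and [dW] as zero matrices of matching shape."""
    rng = random.Random(seed)  # private generator so runs are reproducible
    V = [[rng.uniform(-1.0, 1.0) for _ in range(m)] for _ in range(l)]
    W = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(m)]
    dV = [[0.0] * m for _ in range(l)]  # [Delta V]^0 = [0]
    dW = [[0.0] * n for _ in range(m)]  # [Delta W]^0 = [0]
    return V, W, dV, dW


V, W, dV, dW = init_weights(l=2, m=3, n=1, seed=0)
print(len(V), len(V[0]), len(W), len(W[0]))  # 2 3 3 1
```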

• Step 4: Present the pattern as inputs to $\{I\}$. The input layer uses a linear activation function, so its output equals its input: $\{O\}_I = \{I\}_I$.

• Step 5: Compute the inputs to the hidden layer by multiplying the input-layer outputs by the corresponding synaptic weights:

$\{I\}_H = [V]^T \{O\}_I$
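Steps 4 and 5 together amount to passing the pattern through the linear input layer and then forming $[V]^T \{O\}_I$. A minimal pure-Python sketch, assuming $[V]$ is stored as an $l \times m$ nested list; the function name and the weight values are hypothetical (chosen as exact binary fractions so the printed result is exact).

```python
def hidden_layer_inputs(V, I):
    """Steps 4-5: {O}_I = {I}_I (linear input layer), then {I}_H = [V]^T {O}_I.

    V: l x m nested list (rows = input neurons, columns = hidden neurons).
    I: list of l normalized inputs. Returns a list of m hidden-layer inputs.
    """
    O_I = list(I)  # linear activation: the input layer passes its input through
    l, m = len(V), len(V[0])
    # Entry j of [V]^T {O}_I is the sum over inputs i of V[i][j] * O_I[i].
    return [sum(V[i][j] * O_I[i] for i in range(l)) for j in range(m)]


V = [[0.5, 0.25, -0.5],
     [0.25, -0.5, 1.0]]  # hypothetical weights, l = 2, m = 3
I = [0.5, 1.0]           # one normalized input pattern
print(hidden_layer_inputs(V, I))  # [0.5, -0.375, 0.75]
```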

• Step 6: The hidden-layer units evaluate their outputs using either a bipolar or a unipolar continuous activation function.
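The slide does not spell out the activation functions here, so this sketch assumes the standard sigmoidal forms with a steepness parameter $\lambda$ (default 1): unipolar $f(x) = 1/(1 + e^{-\lambda x})$ with outputs in $(0, 1)$, and bipolar $f(x) = (1 - e^{-\lambda x})/(1 + e^{-\lambda x})$ with outputs in $(-1, 1)$. The function names and the `lam` parameter are hypothetical.

```python
import math


def unipolar_sigmoid(x, lam=1.0):
    """Unipolar continuous activation 1 / (1 + exp(-lam*x)), output in (0, 1).
    Branching on the sign keeps math.exp from overflowing for large |x|."""
    z = lam * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bipolar_sigmoid(x, lam=1.0):
    """Bipolar continuous activation (1 - e^-z) / (1 + e^-z) with z = lam*x,
    computed as 2*unipolar - 1; output in (-1, 1), equal to tanh(z/2)."""
    return 2.0 * unipolar_sigmoid(x, lam) - 1.0


def hidden_layer_outputs(I_H, bipolar=False, lam=1.0):
    """Step 6: apply the chosen activation to each hidden-layer input."""
    f = bipolar_sigmoid if bipolar else unipolar_sigmoid
    return [f(x, lam) for x in I_H]


I_H = [0.5, -0.375, 0.75]  # hypothetical hidden-layer inputs
print(hidden_layer_outputs(I_H))
print(hidden_layer_outputs(I_H, bipolar=True))
```

The bipolar form is written as $2 f_{\text{unipolar}} - 1$ because the two expressions are algebraically identical, and this reuses the overflow-safe unipolar code.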