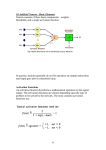

Distributed Representation, Connection-Based Learning, and Memory
PDP Class Lecture
January 26, 2011

The Concept of a Distributed Representation
• Instead of assuming that an object (concept, etc.) is represented in the mind by a single unit, we consider the possibility that it could be represented by a pattern of activation over a population of units.
• The elements of the pattern may represent (approximately) some feature or sensible combination of features, but they need not.
• What is crucial is that no units are dedicated to a single object; in general, all units participate in the representation of many different objects.
• Neurons in the monkey visual cortex appear to exemplify these properties.
• Note that neurons in some parts of the brain are more selective than others, but (in most people’s view) this is just a matter of degree.

Stimuli used by Baylis, Rolls, and Leonard (1991)

Responses of Four Neurons to Face and Non-Face Stimuli in Previous Slide

Responses to various stimuli by a neuron responding to a Tabby Cat (Tanaka et al, 1991)

Another Example Neuron
Kiani et al, J Neurophysiol 97: 4296–4309, 2007.

The Infamous ‘Jennifer Aniston’ Neuron

A ‘Halle Berry’ Neuron

A ‘Sydney Opera House’ Neuron
Figures on this and previous two slides from: Quiroga, Q. et al, 2005, Nature, 435, 1102-1107.

Computational Arguments for the use of Distributed Representations (Hinton et al, 1986)
• They use the units in a network more efficiently.
• They support generalization on the basis of similarity.
• They can support micro-inferences based on consistent relationships between participating units.
  – E.g. units activated by male facial features would activate units associated with lower-pitched voices.
• More overlap increases generalization and micro-inferences; less overlap reduces them.
• There appears to be less overlap in the hippocampus than in other cortical areas, an issue to which we will return in a later lecture.

What is a Memory?
• The trace left in the memory system by an experience?
• A representation brought back to mind of a prior event or experience?
• Note that in some theories, these things are assumed to be one and the same (although there may be some decay or corruption).

Further questions
• Do we store separate representations of items and categories?
  – Experiments suggest participants are sensitive to item information and also to the category prototype.
• Exemplar models store traces of each item encountered. But what is an item? Do items ever repeat? Is it exemplars all the way down?
• For further discussion, see the exchange of papers by Bowers (2009) and Plaut & McClelland (2010) in the readings listed for today’s lecture.

A PDP Approach to Memory
• An experience is a pattern of activation over neurons in one or more brain regions.
• The trace left in memory is the set of adjustments to the strengths of the connections.
  – Each experience leaves such a trace, but the traces are not separable or distinct.
  – Rather, they are superimposed in the same set of connection weights.
• Recall involves the recreation of a pattern of activation, using a part or associate of it as a cue.
• Every act of recall is an act of reconstruction, shaped by the traces of many other experiences.

The Hopfield Network
• A memory is a random pattern of 1’s and (effectively) -1’s over the units in a network like the one shown here (there are no self-connections).
• To learn, a pattern is clamped on the units; weights are learned using the Hebb rule.
• A set of patterns can be stored in this way.
• The network is probed by setting the units to an initial state and then updating them asynchronously (as in the cube example), using a step function, until the activations stop changing. Input is removed during settling.
• The result is the retrieved memory.
  – Noisy or incomplete patterns can be cleaned up or completed.
• The network itself makes decisions; “no complex external machinery is required”.
• If many memories are stored, there is crosstalk among them.
  – If random vectors are used, capacity is only about 0.14*N, N being the number of units.

The McClelland/Rumelhart (1985) Distributed Memory Model
• Inspired by the ‘Brain-State in a Box’ model of James Anderson, which predates the Hopfield net.
• Uses continuous units with activations between -1 and 1.
• Uses the same activation function as the iac model without a threshold.
• Net input is the sum of external plus internal inputs: $\mathrm{net}_i = e_i + i_i$
• Learning occurs according to the ‘Delta Rule’: $\delta_i = e_i - i_i$, $w_{ij} \leftarrow w_{ij} + \epsilon\,\delta_i a_j$, where $\epsilon$ is the learning rate and $a_j$ is the activation of the sending unit.
• Short vs long-lasting changes to weights:
  – As a first approximation to this, weight increments are thought to decay rapidly from initial values to smaller, more permanent values.

Basic properties of auto-associator networks
• They can learn multiple ‘memories’ in the same set of weights.
  – Recall: pattern completion
  – Recognition: strength of pattern activation
  – Facilitation of processing: how quickly and strongly settling occurs.
• With the Hebb Rule:
  – Orthogonal patterns can be stored without mutual contamination (up to n, but the memory ‘whites out’).
• With the Delta Rule:
  – Sets of non-orthogonal patterns can be learned, and some of the cross-talk can be eliminated.
• However, there is a limitation:
  – The external input to each unit must be linearly predictable from a weighted combination of the activations of all of the other units.
  – The weights learned are the solutions to a set of simultaneous linear equations.
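
The Hebb-rule storage and asynchronous settling described for the Hopfield network can be sketched in a few lines. This is only a minimal illustration, not the code used in the lecture: the function names (`hebb_weights`, `settle`), the 1/N scaling of the weights, the choice of 8 units, and the two hand-picked orthogonal patterns are all assumptions made for the example.

```python
import numpy as np

def hebb_weights(patterns):
    """Hebb-rule weights for +1/-1 patterns (1/N scaling is an assumption)."""
    patterns = np.asarray(patterns, dtype=float)
    n_units = patterns.shape[1]
    weights = patterns.T @ patterns / n_units  # sum of outer products
    np.fill_diagonal(weights, 0.0)             # no self-connections
    return weights

def settle(weights, probe, rng, max_sweeps=20):
    """Update units one at a time with a step function until nothing changes."""
    state = np.array(probe, dtype=float)
    for _ in range(max_sweeps):
        changed = False
        for i in rng.permutation(len(state)):  # asynchronous, random order
            new_value = 1.0 if weights[i] @ state >= 0 else -1.0
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state

# Two orthogonal patterns over 8 units (hypothetical example data).
pattern_a = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
pattern_b = np.array([1, 1, -1, -1, 1, 1, -1, -1], dtype=float)
weights = hebb_weights([pattern_a, pattern_b])

# Probe with a noisy copy of pattern_a (first unit flipped) and let it settle.
rng = np.random.default_rng(0)
noisy_probe = pattern_a.copy()
noisy_probe[0] *= -1
recalled = settle(weights, noisy_probe, rng)
print("recovered pattern_a:", np.array_equal(recalled, pattern_a))
```

Orthogonal patterns were chosen so that the stored memories do not contaminate each other; with random patterns, crosstalk would limit how many can be stored, as noted above.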
Issues addressed by the M&R Distributed Memory Model
• Memory for general and specific information
  – Learning a prototype
  – Learning multiple prototypes in the same network
  – Learning general as well as specific information

Weights after learning from distortions of a prototype (each with a different ‘name’)
Weights after learning Dog, Cat, and Bagel Patterns
[Figures: weight matrices; axes labeled Sending Units and Receiving Units]

Whittlesea (1983)
• Examined the effect of general and specific information on identification of letter strings after exposure to varying numbers and degrees of distortions to particular prototype strings.

Whittlesea’s Experiments
• Each experiment involved different numbers of distortions presented different numbers of times during training.
• Each test involved other distortions; Whittlesea never tested the prototype, but I did in some of my simulations.
• Performance measures are per-letter increase in identification compared to baseline (E) and increase in dot product of input with activation due to learning (S).

Example stimuli

Spared category learning with impaired exemplar learning in amnesia?
This happens in the model if we simply assume amnesia reflects a smaller value of the learning rate parameter. (Amnesia is a bit more interesting than this; see later lecture.)

Limitations of Auto-Associator Models
• Capacity is limited.
  – Different variants have different capacities.
  – The sparser the patterns, the larger the number that can be learned.
• Sets of patterns violating the linear predictability constraint cannot be learned perfectly.
• Does not capture effects indicative of representational and behavioral sharpening.
  – ‘Strength Mirror Effect’
  – Sharpening of neural representations after repetition
• We will return to these issues in a later lecture, after we have a procedure in hand for training connections into hidden units.
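
The idea of learning a prototype from distortions with the delta rule can be illustrated with a small sketch. It is a simplification under stated assumptions rather than the distributed memory model itself: activations are set directly to the external input instead of being settled with the iac-style activation function, self-connections are removed, and the names (`train_delta_rule`, `match`), the 16 units, the 20% flip probability, and the learning rate are hypothetical choices for the example.

```python
import numpy as np

def train_delta_rule(patterns, learning_rate):
    """Delta-rule training of an auto-associator, one pattern at a time."""
    n_units = patterns.shape[1]
    weights = np.zeros((n_units, n_units))
    for external in patterns:
        activation = external             # simplification: no settling dynamics
        internal = weights @ activation   # internal input from the other units
        delta = external - internal       # delta_i = e_i - i_i
        weights += learning_rate * np.outer(delta, activation)
        np.fill_diagonal(weights, 0.0)    # assumed: no self-connections
    return weights

def match(weights, pattern):
    """Dot product of a pattern with the internal input it produces."""
    return float(pattern @ (weights @ pattern))

rng = np.random.default_rng(1)
n_units = 16
prototype = np.where(np.arange(n_units) % 3 == 0, 1.0, -1.0)  # arbitrary +1/-1 pattern

# 500 distortions: each element of the prototype is flipped with probability 0.2.
flips = rng.random((500, n_units)) < 0.2
distortions = np.where(flips, -prototype, prototype)
weights = train_delta_rule(distortions, learning_rate=0.01)

# The prototype itself is never presented during training.
prototype_match = match(weights, prototype)

# Comparison pattern: half the elements flipped, so it is orthogonal to the prototype.
unrelated = prototype.copy()
unrelated[: n_units // 2] *= -1
unrelated_match = match(weights, unrelated)

print("match to prototype:", round(prototype_match, 2))
print("match to unrelated pattern:", round(unrelated_match, 2))
```

In this sketch the weights come to reflect what the distortions have in common, so the never-presented prototype evokes a stronger internal input than the unrelated pattern. Lowering `learning_rate` is one way to explore the amnesia suggestion above.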