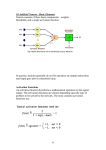

Introduction to Neural Networks
John Paxton
Montana State University
Summer 2003

Textbook
Fundamentals of Neural Networks: Architectures, Algorithms, and Applications
Laurene Fausett
Prentice-Hall, 1994

Chapter 1: Introduction

Why Neural Networks?
• Training techniques exist
• High-speed digital computers
• Specialized hardware
• Better capture of biological neural systems

Who is interested?
• Electrical Engineers – signal processing, control theory
• Computer Engineers – robotics
• Computer Scientists – artificial intelligence, pattern recognition
• Mathematicians – a modelling tool when explicit relationships are unknown

Characterizations
• Architecture – a pattern of connections between neurons
• Learning Algorithm – a method of determining the connection weights
• Activation Function – the function that computes a neuron's output signal from its net input

Problem Domains
• Storing and recalling patterns
• Classifying patterns
• Mapping inputs onto outputs
• Grouping similar patterns
• Finding solutions to constrained optimization problems

A Simple Neural Network
[Figure: inputs x1 and x2 connected to output unit y by weights w1 and w2]
• Net input: $y_{in} = x_1 w_1 + x_2 w_2$
• Activation: $y = f(y_{in})$

Biological Neuron
[Figure: a neuron with its dendrites, soma, and axon labeled]
• Dendrites receive electrical signals that are affected by chemical processes
• The soma fires at differing frequencies

Observations
• A neuron can receive many inputs
• Inputs may be modified by weights at the receiving dendrites
• A neuron sums its weighted inputs
• A neuron can transmit an output signal
• The output can go to many other neurons

Features
• Information processing is local
• Memory is distributed (short term = signals, long term = dendrite weights)
• The dendrite weights learn through experience
• The weights may be inhibitory or excitatory
• Neurons can generalize to novel input stimuli
• Neurons are fault tolerant and can sustain damage

Applications
• Signal processing, e.g. suppressing noise on a phone line
• Control, e.g. backing up a truck with a trailer
• Pattern recognition, e.g. handwritten characters or identifying sex from face images
• Diagnosis, e.g. arrhythmia classification or mapping symptoms to a medical case
• Speech production, e.g. NETtalk (Sejnowski and Rosenberg, 1986)
• Speech recognition
• Business, e.g. mortgage underwriting (Collins et al., 1988)
• Learning without labeled examples, e.g. TD-Gammon, which learned backgammon through self-play

Single Layer Feedforward NN
[Figure: inputs x1 … xn, each connected to every output y1 … ym; weight wij links input xi to output yj (w11 … wnm)]

Multilayer Neural Network
[Figure: inputs x1 … xn, hidden units z1 … zp, outputs y1 … ym]
• More powerful
• Harder to train

Setting the Weights
• Supervised
• Unsupervised
• Fixed-weight nets

Activation Functions
• Identity: $f(x) = x$
• Binary step: $f(x) = 1$ if $x \ge \theta$, $f(x) = 0$ otherwise
• Binary sigmoid: $f(x) = \frac{1}{1 + e^{-\sigma x}}$
• Bipolar sigmoid: $f(x) = -1 + \frac{2}{1 + e^{-\sigma x}}$
• Hyperbolic tangent: $f(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$

History
• 1943 McCulloch-Pitts neurons
• 1949 Hebb's law
• 1958 Perceptron (Rosenblatt)
• 1960 Adaline, a better learning rule (Widrow, Hoff)
• 1969 Limitations of perceptrons (Minsky, Papert)
• 1972 Kohonen nets, associative memory
• 1977 Brain State in a Box (Anderson)
• 1982 Hopfield net, constraint satisfaction
• 1985 ART (Carpenter, Grossberg)
• 1986 Backpropagation (Rumelhart, Hinton, McClelland)
• 1988 Neocognitron, character recognition (Fukushima)

McCulloch-Pitts Neuron
[Figure: inputs x1, x2, x3 connected to output unit y]
• $f(y_{in}) = 1$ if $y_{in} \ge \theta$, $f(y_{in}) = 0$ otherwise

Exercises
• 2-input AND
• 2-input OR
• 3-input OR
• 2-input XOR
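The activation functions listed in the chapter can be sketched in Python as follows. This is a minimal illustration, not code from the course. The function names are hypothetical, and the default steepness σ = 1 and threshold θ = 0 are arbitrary choices. Because the sketch uses `math.exp` directly, very large |x| can overflow.

```python
import math

# Activation functions from the slides. sigma (steepness) and theta
# (threshold) are parameters; the defaults here are arbitrary choices.
# Note: math.exp raises OverflowError for very large arguments.

def identity(x):
    return x

def binary_step(x, theta=0.0):
    # 1 once the input reaches the threshold theta, 0 otherwise
    return 1 if x >= theta else 0

def binary_sigmoid(x, sigma=1.0):
    # smooth, output range (0, 1)
    return 1.0 / (1.0 + math.exp(-sigma * x))

def bipolar_sigmoid(x, sigma=1.0):
    # smooth, output range (-1, 1); equals 2 * binary_sigmoid(x, sigma) - 1
    return -1.0 + 2.0 / (1.0 + math.exp(-sigma * x))

def hyperbolic_tangent(x):
    # (e^x - e^-x) / (e^x + e^-x), written out to mirror the slide;
    # math.tanh computes the same value
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))

for x in (-2.0, 0.0, 2.0):
    print(x, binary_step(x), round(binary_sigmoid(x), 4),
          round(bipolar_sigmoid(x), 4), round(hyperbolic_tangent(x), 4))
```

With steepness σ, the bipolar sigmoid equals tanh(σx/2). It is therefore a rescaled binary sigmoid with range (-1, 1) instead of (0, 1).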
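The single-layer feedforward net generalizes the simple two-input network: each output unit $y_j$ computes its own net input $y_{in,j} = \sum_i x_i w_{ij}$ and applies the activation to it. The sketch below assumes $w_{ij}$ connects input $x_i$ to output $y_j$, which is how the figure's w11 … wnm labels read. The helper names and the example weights are hypothetical.

```python
# Single-layer feedforward net: n inputs x_i fully connected to m outputs y_j.
# w[i][j] is the weight from input x_i to output y_j (w11 ... wnm in the figure).

def binary_step(v, theta=0.0):
    # fires (1) once the net input reaches the threshold theta
    return 1 if v >= theta else 0

def net_inputs(x, w):
    # y_in_j = x_1*w_1j + ... + x_n*w_nj, computed for every output unit j
    n, m = len(w), len(w[0])
    if len(x) != n:
        raise ValueError("need exactly one weight row per input")
    return [sum(x[i] * w[i][j] for i in range(n)) for j in range(m)]

def single_layer_forward(x, w, f):
    # each output unit applies the activation f to its own net input
    return [f(y_in) for y_in in net_inputs(x, w)]

# The two-input "simple neural network" is the case n = 2, m = 1,
# where y_in = x1*w1 + x2*w2.  These weights and theta are illustrative only.
x = [1, 0]
w = [[0.5], [0.5]]
print(net_inputs(x, w))                                                 # [0.5]
print(single_layer_forward(x, w, lambda v: binary_step(v, theta=1.0)))  # [0]
```

The output units share the inputs but not the weights, so they are independent of one another. A single-layer net with m outputs therefore behaves like m separate single-output nets.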
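A multilayer network inserts a layer of hidden units z1 … zp between the inputs and the outputs. The forward pass applies the same weighted-sum-then-activate step twice. In this sketch the weight names `v` (input to hidden) and `w` (hidden to output) are hypothetical labels, because the figure names only the units. Bias terms are omitted because the figure shows none, and the example weights are made up for illustration.

```python
import math

# Two-layer (one hidden layer) feedforward net: x_1..x_n -> z_1..z_p -> y_1..y_m.
# v[i][k]: weight from input x_i to hidden unit z_k   (hypothetical name)
# w[k][j]: weight from hidden unit z_k to output y_j  (hypothetical name)
# Bias terms are omitted because the slide's figure does not show any.

def sigmoid(s):
    # binary sigmoid with steepness 1
    return 1.0 / (1.0 + math.exp(-s))

def layer(inputs, weights, f):
    # net input of unit k is sum_i inputs[i] * weights[i][k]; then apply f
    return [f(sum(a * weights[i][k] for i, a in enumerate(inputs)))
            for k in range(len(weights[0]))]

def two_layer_forward(x, v, w, f=sigmoid):
    z = layer(x, v, f)  # hidden-layer activations z_1..z_p
    y = layer(z, w, f)  # output activations y_1..y_m
    return z, y

# Illustrative weights: n = 2 inputs, p = 3 hidden units, m = 1 output.
v = [[0.5, -0.5, 1.0],
     [0.25, 0.75, -1.0]]
w = [[1.0], [-1.0], [0.5]]
z, y = two_layer_forward([1.0, 0.0], v, w)
print(len(z), len(y))  # 3 1
```

The hidden layer is what makes such a network "more powerful". For example, two layers of threshold units can compute XOR, which no single threshold unit can. The hidden layer is also why training is harder: hidden units have no target values of their own, and backpropagation was developed to address that problem.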
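The exercises ask for McCulloch-Pitts neurons: binary inputs, fixed weights, and a threshold θ. The sketch below gives one possible set of answers. The weights and thresholds are our own choices, not taken from the slides, and other values also work. XOR cannot be computed by a single threshold unit because its true and false cases are not linearly separable, which is the kind of limitation Minsky and Papert analyzed. Instead, the sketch builds XOR from two layers of neurons as (x1 AND NOT x2) OR (x2 AND NOT x1).

```python
from itertools import product

# A McCulloch-Pitts neuron: binary inputs, fixed weights, threshold theta.
# It fires (outputs 1) when y_in = sum(x_i * w_i) >= theta, else outputs 0.
def mp_neuron(x, w, theta):
    y_in = sum(xi * wi for xi, wi in zip(x, w))
    return 1 if y_in >= theta else 0

# One possible choice of weights and thresholds per exercise (not unique).
def and2(x1, x2):
    return mp_neuron((x1, x2), (1, 1), theta=2)

def or2(x1, x2):
    return mp_neuron((x1, x2), (1, 1), theta=1)

def or3(x1, x2, x3):
    return mp_neuron((x1, x2, x3), (1, 1, 1), theta=1)

def and_not(x1, x2):
    # fires only for x1 = 1, x2 = 0; the negative weight is inhibitory
    return mp_neuron((x1, x2), (2, -1), theta=2)

def xor2(x1, x2):
    # no single threshold unit computes XOR, so use two layers:
    # hidden units (x1 AND NOT x2) and (x2 AND NOT x1), then an OR unit
    return or2(and_not(x1, x2), and_not(x2, x1))

print("x1 x2 AND OR XOR")
for x1, x2 in product((0, 1), repeat=2):
    print(x1, x2, and2(x1, x2), or2(x1, x2), xor2(x1, x2))
```

The weights stay fixed and are chosen by hand, so these are examples of the "fixed-weight nets" category rather than networks that learn.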