Detection and Attribution of External Influences on the Climate System

German Climate Action Plan 2050

Mitigation of global warming in Australia

2009 United Nations Climate Change Conference

Atmospheric model

Heaven and Earth (book)

ExxonMobil climate change controversy

Climate resilience

Climatic Research Unit email controversy

Michael E. Mann

Climate change denial

Soon and Baliunas controversy

Effects of global warming on human health

Climate change adaptation

Economics of global warming

Fred Singer

Citizens' Climate Lobby

Climate engineering

Climate governance

Global warming controversy

Politics of global warming

Climate change in Saskatchewan

Climate change in Tuvalu

Climate change and agriculture

Physical impacts of climate change

Media coverage of global warming

Climatic Research Unit documents

Effects of global warming

Global warming

Climate change in the United States

Climate change and poverty

Global warming hiatus

Scientific opinion on climate change

Effects of global warming on humans

Climate change feedback

North Report

Public opinion on global warming

Global Energy and Water Cycle Experiment

Solar radiation management

Climate sensitivity

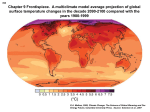

General circulation model

Climate change, industry and society

Surveys of scientists' views on climate change

Attribution of recent climate change