2017

Subventricular zone wikipedia , lookup

Psychoneuroimmunology wikipedia , lookup

Neuroanatomy wikipedia , lookup

Multielectrode array wikipedia , lookup

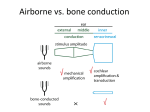

Sound localization wikipedia , lookup

Electrophysiology wikipedia , lookup

Psychophysics wikipedia , lookup

Time perception wikipedia , lookup

Animal echolocation wikipedia , lookup

Eyeblink conditioning wikipedia , lookup

Neuropsychopharmacology wikipedia , lookup

Cognitive neuroscience of music wikipedia , lookup

Development of the nervous system wikipedia , lookup

Sensory cue wikipedia , lookup

Evoked potential wikipedia , lookup

Optogenetics wikipedia , lookup

Stimulus (physiology) wikipedia , lookup

Channelrhodopsin wikipedia , lookup