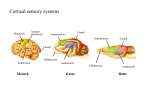

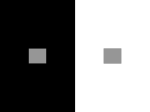

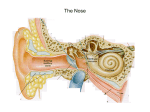

Chapter 2

Outline
• Linear filters
• Visual system (retina, LGN, V1)
• Spatial receptive fields
  – V1
  – LGN, retina
• Temporal receptive fields in V1
  – Direction selectivity

Linear filter model
• Given s(t) and r(t), what is D?

White noise stimulus

Fourier transform

H1 neuron in visual system of blowfly
• A: Stimulus is velocity profile
• B: Response of H1 neuron of the fly visual system
• C: r_est(t) using the linear kernel D(t) (solid line) and actual neural rate r(t) agree when rates vary slowly.
• D(t) is constructed using white noise

Deviation from linearity

Early visual system: Retina
• 5 types of cells:
  – Rods and cones: phototransduction into electrical signal
  – Lateral interaction of Bipolar cells through Horizontal cells. No action potentials for local computation
  – Action potentials in retinal ganglion cells coupled by Amacrine cells.
Note
• G_1 off response
• G_2 on response

Pathway from retina via LGN to V1
• Lateral geniculate nucleus (LGN) cells receive input from Retinal ganglion cells from both eyes.
• Both LGNs represent both eyes but different parts of the world
• Neurons in retina, LGN and visual cortex have receptive fields:
  – Neurons fire only in response to higher/lower illumination within receptive field
  – Neural response depends (indirectly) on illumination outside receptive field

Simple and complex cells
• Cells in retina, LGN, V1 are simple or complex
• Simple cells:
  – Model as linear filter
• Complex cells
  – Show invariance to spatial position within the receptive field
  – Poorly described by linear model

Retinotopic map
• Neighboring image points are mapped onto neighboring neurons in V1
• Visual world is centered on fixation point.
• The left/right visual world maps to the right/left V1
• Eccentricity (distance on the display from the fixation point) is measured in degrees of visual angle: for small angles, divide the distance on the display by the distance to the eye and convert from radians to degrees

Visual stimuli

Nyquist Frequency

Spatial receptive fields

V1 spatial receptive fields

Gabor functions

Response to grating

Temporal receptive fields
• Space-time evolution of V1 cat receptive field
• ON/OFF boundary changes to OFF/ON boundary over time.
• Extrema locations do not change with time: separable kernel D(x,y,t) = Ds(x,y) Dt(t)

Space-time receptive fields

Direction selective cells

Complex cells

Example of non-separable receptive fields: LGN X cell

Comparison model and data

Constructing V1 receptive fields
• Oriented V1 spatial receptive fields can be constructed from LGN center surround neurons

Stochastic neural networks
• The top two layers form an associative memory whose energy landscape models the low dimensional manifolds of the digits. The energy valleys have names.
• The model learns to generate combinations of labels and images. To perform recognition we start with a neutral state of the label units and do an up-pass from the image followed by a few iterations of the top-level associative memory.
• Figure labels, from bottom to top: 28 x 28 pixel image, 500 neurons, 500 neurons, 2000 top-level neurons, 10 label neurons (Hinton)

Samples generated by letting the associative memory run with one label clamped using Gibbs sampling (Hinton)

Examples of correctly recognized handwritten digits that the neural network had never seen before (Hinton)

How well does it discriminate on MNIST test set with no extra information about geometric distortions?
• Generative model based on RBMs: 1.25%
• Support Vector Machine (Decoste et al.): 1.4%
• Backprop with 1000 hiddens (Platt): ~1.6%
• Backprop with 500 --> 300 hiddens: ~1.6%
• K-Nearest Neighbor: ~3.3%
• See Le Cun et al. 1998 for more results
• It's better than backprop and much more neurally plausible because the neurons only need to send one kind of signal, and the teacher can be another sensory input. (Hinton)

Summary
• Linear filters
  – White noise stimulus for optimal estimation
• Visual system (retina, LGN, V1)
• Visual stimuli
• V1
  – Spatial receptive fields
  – Temporal receptive fields
  – Space-time receptive fields
  – Non-separable receptive fields, Direction selectivity
• LGN and Retina
  – Non-separable ON center OFF surround cells
  – V1 direction selective simple cells as sum of LGN simple cells

Exercise 2.3
• Is based on Kara, Reinagel, Reid (Neuron, 2000).
  – Simultaneous single unit recordings of retinal ganglion cells, LGN relay cells and simple cells from primary visual cortex
  – Spike count variability (Fano factor) less than Poisson, doubling from RGC to LGN and from LGN to cortex.
  – Data explained by Poisson with refractory period
  – Fig. 1, 2, 3
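
As a starting point for Exercise 2.3, the following is a minimal sketch of why a Poisson process with a refractory period gives a Fano factor below 1. It is not the analysis from Kara, Reinagel and Reid: the firing rate, refractory period, counting window and trial count are illustrative assumptions, and the helper names `spike_counts` and `fano_factor` are hypothetical. Each inter-spike interval is modeled as an absolute refractory period plus an exponentially distributed waiting time, and every trial is assumed to start just after a spike.

```python
import numpy as np

def spike_counts(rate_hz, refractory_s, window_s, n_trials, rng):
    """Spike counts per trial for a renewal process whose inter-spike
    intervals are refractory_s plus an exponential with mean 1/rate_hz."""
    # Draw many more intervals than a window can plausibly hold, so that
    # running out of spikes before the window ends is vanishingly unlikely.
    n_max = int(3 * rate_hz * window_s) + 50
    isis = refractory_s + rng.exponential(1.0 / rate_hz, size=(n_trials, n_max))
    spike_times = np.cumsum(isis, axis=1)
    # Count the spikes that fall inside the counting window.
    return (spike_times <= window_s).sum(axis=1)

def fano_factor(counts):
    """Variance of the spike count divided by its mean."""
    return counts.var(ddof=1) / counts.mean()

rng = np.random.default_rng(0)
# Assumed values, chosen only for illustration.
params = dict(rate_hz=100.0, window_s=0.5, n_trials=5000, rng=rng)

fano_poisson = fano_factor(spike_counts(refractory_s=0.0, **params))
fano_refractory = fano_factor(spike_counts(refractory_s=0.005, **params))

print(f"Fano factor, pure Poisson:         {fano_poisson:.2f}")
print(f"Fano factor, 5 ms refractory time: {fano_refractory:.2f}")
```

With no refractory period the counts are Poisson and the Fano factor should come out close to 1. Adding the refractory period makes the intervals more regular, which lowers the count variance relative to the mean.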
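
The question from the linear filter slides (given s(t) and r(t), what is D?) can also be sketched numerically. The example below assumes the standard white-noise result that the kernel is the stimulus-response cross-correlation divided by the stimulus power, and it recovers a known kernel from simulated data. The kernel shape, time step, background rate r0 and noise level are all made-up illustration values, not taken from the slides.

```python
import numpy as np

rng = np.random.default_rng(0)
dt = 0.002          # time step in seconds (assumed)
n = 200_000         # number of time steps
n_lags = 100        # kernel length in time steps
tau = np.arange(n_lags) * dt

# Hypothetical "true" kernel: a damped oscillation.
D_true = 50.0 * np.exp(-tau / 0.02) * np.sin(2 * np.pi * tau / 0.05)

# Discrete white noise: variance 1/dt per bin gives unit power per unit time.
s = rng.normal(0.0, 1.0 / np.sqrt(dt), size=n)

# Linear response r(t) = r0 + integral of D(tau) s(t - tau) dtau, plus noise.
r0 = 50.0
r = r0 + dt * np.convolve(s, D_true)[:n] + rng.normal(0.0, 1.0, size=n)

# Estimate D(tau) as the average of r(t) s(t - tau), divided by the
# stimulus power var(s) * dt.
power = s.var() * dt
r_centered = r - r.mean()
D_est = np.array([
    np.mean(r_centered[k:] * s[:n - k]) / power
    for k in range(n_lags)
])

print("correlation between true and estimated kernel:",
      np.corrcoef(D_true, D_est)[0, 1])
```

Because the simulated response is exactly linear, the estimate matches the true kernel up to sampling noise. For a real neuron, rectification and saturation would produce the deviations from linearity noted in the slides.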