* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download NEURAL NETWORKS

Neural coding wikipedia , lookup

Neural oscillation wikipedia , lookup

Optogenetics wikipedia , lookup

Biological neuron model wikipedia , lookup

Neural modeling fields wikipedia , lookup

Neuroeconomics wikipedia , lookup

Holonomic brain theory wikipedia , lookup

Neuropsychopharmacology wikipedia , lookup

Synaptic gating wikipedia , lookup

Pattern recognition wikipedia , lookup

Central pattern generator wikipedia , lookup

Neural binding wikipedia , lookup

Metastability in the brain wikipedia , lookup

Neural engineering wikipedia , lookup

Development of the nervous system wikipedia , lookup

Artificial neural network wikipedia , lookup

Nervous system network models wikipedia , lookup

Catastrophic interference wikipedia , lookup

Convolutional neural network wikipedia , lookup

NEURAL NETWORKS

Vedat Tavşanoğlu

What Is a Neural Network?

Work on artificial neural networks,

commonly referred to as "neural networks,"

has been motivated right from its inception

by the recognition that the brain computes

in an entirely different way from the

conventional digital computer.

What Is a Neural Network?

The struggle to understand the brain owes much

to the pioneering work of Ramon y Cajal (1911),

who introduced the idea of neurons as structural

constituents of the brain.

Typically, neurons are five to six orders of

magnitude slower than silicon logic gates; events

in a silicon chip happen in the nanosecond (10-9 s)

range, whereas neural events happen in the

millisecond (10-3 s) range.

What Is a Neural Network?

However, the brain makes up for the

relatively slow rate of operation of a neuron

by having a truly staggering number of

neurons (nerve cells) with massive

interconnections between them.

What Is a Neural Network?

It is estimated that there must be on the order of

10 billion neurons in the human cortex, and 60

trillion synapses or connections (Shepherd and

Koch, 1990). The net result is that the brain is an

enormously efficient structure. Specifically, the

energetic efficiency of the brain is approximately

10-16 joules (J) per operation per second.

The corresponding value for the best computers

in use today is about 10-6 joules per operation per

second (Faggin, 1991).

What Is a Neural Network?

The brain is a highly complex, nonlinear,

and parallel computer (informationprocessing system). It has the capability of

organizing neurons so as to perform certain

computations (e.g., pattern recognition,

perception, and motor control) many times

faster than the fastest digital computer in

existence today.

What Is a Neural Network?

Consider, for example, human vision, which is an

information-processing task (Churchland and

Sejnowski, 1992; Levine, 1985; Marr, 1982).

It is the function of the visual system to provide a

representation of the environment around us and,

more important, to supply the information we

need to interact with the environment.

What Is a Neural Network?

The brain routinely accomplishes perceptual

recognition tasks (e.g., recognizing a

familiar face embedded in an unfamiliar

scene) in something of the order of 100-200

ms, whereas tasks of much lesser complexity

will take hours on conventional computers.

What Is a Neural Network?

For another example, consider the sonar of a bat.

Sonar is an active echo-location system.

In addition to providing information about how far

away a target (e.g., a flying insect) is, a bat sonar

conveys information about the relative velocity of

the target, the size of the target, the size of various

features of the target, and the azimuth and

elevation of the target (Suga, 1990a, b).

What Is a Neural Network?

The complex neural computations needed to

extract all this information from the target

echo occur within a brain the size of a plum.

Indeed, an echo-locating bat can pursue and

capture its target with a facility and success

rate that would be the envy of a radar or

sonar engineer.

What Is a Neural Network?

How, then, does a human brain or the brain

of a bat do it?

At birth, a brain has great structure and the

ability to build up its own rules through

what we usually refer to as "experience."

What Is a Neural Network?

Indeed, experience is built up over the years,

with the most dramatic development (i.e.,'·

hard-wiring) of the human brain taking place

in the first two years from birth; but the

development continues well beyond that stage.

During this early stage of development, about 1

million synapses are formed per second.

What Is a Neural Network?

Synapses are elementary structural and

functional units that mediate the

interactions between neurons. The most

common kind of synapse is a chemical

synapse, which operates as follows:

What Is a Neural Network?

A presynaptic process liberates a transmitter

substance that diffuses across the synaptic

junction between neurons and then acts on a

postsynaptic process.

Thus a synapse converts a presynaptic

electrical signal into a chemical signal and

then back into a postsynaptic electrical

signal (Shepherd and Koch, 1990).

What Is a Neural Network?

In electrical terminology, such an element is

said to be a nonreciprocal two-port device.

In traditional descriptions of neural

organization, it is assumed that a synapse is

a simple connection that can impose

excitation or inhibition, but not both on the

receptive neuron.

What Is a Neural Network?

A developing neuron is synonymous with a plastic

brain: Plasticity ([Latin plasticus, from Greek

plastikos, from plastos, molded, from plassein, to

mold; see pelə-2 in Indo-European roots.] )permits

the developing nervous system to adapt to its

surrounding environment (Churchland and

Sejnowski, 1992; Eggermont, 1990). In an adult

brain, plasticity may be accounted for by two

mechanisms: the creation of new synaptic

connections between neurons, and the

modification of existing synapses.

What Is a Neural Network?

Axons, the transmission lines, and dendrites, the

receptive zones, constitute two types of cell

filaments that are distinguished on morphological

grounds; an axon has a smoother surface, fewer

branches, and greater length, whereas a dendrite

(so called because of its resemblance to a tree)

has an irregular surface and more branches

(Freeman, 1975).

What Is a Neural Network?

Neurons come in a wide variety of shapes

and sizes in different parts of the brain. The

figure illustrates the shape of a pyramidal

cell, which is one of the most common types

of cortical neurons.

Like many other types of neurons, it receives

most of its inputs through dendritic spines.

The pyramidal cell can receive 10,000 or

more synaptic contacts and it can project

onto thousands of target cells.

What Is a Neural Network?

Just as plasticity appears to be essential to the

functioning of neurons as informationprocessing

units in the human brain, so it is with neural

networks made up of artificial neurons.

What Is a Neural Network?

In its most general form, a neural network is

a machine that is designed to model the way

in which the brain performs a particular task

or function of interest; the network is usually

implemented using electronic components

or simulated in software on a digital

computer.

What Is a Neural Network?

In most cases the interest is confined largely

to an important class of neural networks that

perform useful computations through a

process of learning.

What Is a Neural Network?

To achieve good performance, neural

networks employ a massive interconnection

of simple computing cells referred to as

"neurons" or "processing units." We may

thus offer the following definition of a neural

network viewed as an adaptive machine:

What Is a Neural Network?

A neural network is a massively parallel distributed

processor that has a natural propensity for storing

experiential knowledge and making it available for use.

It resembles the brain in two respects:

1 Knowledge is acquired by the network

through a learning process .

2

Interneuron connection strengths known as

synaptic weights are used to store the knowledge.

What Is a Neural Network?

The procedure used to perform the learning

process is called a learning algorithm,

the function of which is to modify the synaptic

weights of the network

in an orderly fashion so as to attain a desired

design objective.

What Is a Neural Network?

The modification of synaptic weights provides the

traditional method for the design of neural

networks. Such an approach is the closest to

linear adaptive filter theory, which is already well

established and successfully applied in such

diverse fields as communications, control, radar,

sonar, seismology, and biomedical engineering

(Haykin, 1991; Widrow and Stearns, 1985).

What Is a Neural Network?

However, it is also possible for a neural

network to modify its own topology, which

is motivated by the fact that neurons in the

human brain can die and that new synaptic

connections can grow.

What Is a Neural Network?

Neural networks are also referred to in the

literature as neurocomputers, connectionist

networks, parallel distributed processors,

etc.

Benefits of Neural Networks

From the above discussion, it is apparent that a

neural network derives its computing power

through:

1.

its massively parallel distributed structure,

1.

its ability to learn and therefore generalize;

generalization refers to the neural network

producing reasonable outputs for inputs not

encountered during training (learning).

Benefits of Neural Networks

How does the following example help you to generalize ?

confer: L. conferre- con-, together, ferre, to bring

v.t. to give, to bestow (to place or put by), to talk or consult together

defer: L. differre- dis-, asunder (adv. apart, into parts, separately), ferre, to bear , to carry

v.t. to put off to another time, to delay

defer: L. deferre- de-, down, ferre, to bear

v.i. to yield (to the wishes or opinions of another, or to authority), v.t. to submit or

to or to lay before somebody

differ: L. differre- dif.( for dis-), apart, ferre, to bear

v.i. to be unlike, distinct or various

infer: L. inferre- in-, into, ferre, to bring

v.t.. to bring on, to drive as a conclusion

prefer: L. preaferre- prea-,in front of, ferre, to bear

v.t. to set in front, to put forward, offer, submit, present, for acceptance or consideration,

to promote

Benefits of Neural Networks

convene L. convenire, con- together, and venire, to come

v.i. to come together, v.i. to call together

convent v.t. to convene

convention the act of convening, :an assembly, esp. of

special delegates for some common object, an agreement

(Geneva Convention)

invent L. invenire, inventum, in-, upon, venire, to come

v.t. to find, to device or contrive

prevent L. preavenire, prea- in front of, venire, to come

v.t. to precede, to be, go, act, earlier than, to preclude, to stop,

keep, or hinder effectually, to keep from coming to pass

Benefits of Neural Networks

Synonym:1432 (but rare before 18c.), from L.

synonymum, from Gk. synonymon "word having the

same sense as another," noun use of neut. of

synonymos "having the same name as,

synonymous," from syn- "together, same" +

onyma, Aeolic dialectal form of onoma "name"

(see name). Synonymous is attested from 1610.

Benefits of Neural Networks

Antonym:1870, created to serve as opposite of

synonym, from Gk. anti- "equal to, instead of,

opposite" (see anti-) + -onym "name" (see name).

Anonymous:1601, from Gk. anonymos "without a

name," from an- "without" + onyma, Æolic

dialectal form of onoma "name" (see name).

Benefits of Neural Networks

These two information-processing

capabilities,i.e.,

(1) massively parallel distributed structure

(2) the ability to generalize

make it possible for neural networks to solve

complex (large-scale) problems that are

currently intractable. In practice, however,

neural networks cannot provide the solution

working by themselves alone. Rather, they need

to be integrated into a consistent system

engineering approach.

Benefits of Neural Networks

1.

2.

3.

Specifically, a complex problem of interest is

decomposed into a number of relatively simple tasks,

and neural networks are assigned a subset of the tasks

e.g.,

pattern recognition,

associative memory,

control,etc.

that match their inherent capabilities. It is important to

recognize, however, that we have a long way to go (if

ever) before we can build a computer architecture that

mimics the human brain.

Properties and Capabilities of Neural

Networks

1. Nonlinearity

A neuron is basically a nonlinear device.

Consequently, a neural network, made up of an

interconnection of neurons, is itself nonlinear.

Moreover, the nonlinearity is of a special kind in

the sense that it is distributed throughout the

network. Nonlinearity is a highly important

property, particularly if the underlying physical

mechanism responsible for the generation of an

input signal (e.g., speech signal) is inherently

nonlinear.

Properties and Capabilities of Neural

Networks

2. Input-Output Mapping

A popular paradigm of learning called

supervised learning involves the modification

of the synaptic weights of a neural network by

applying a set of labeled training samples or

task examples. Each example consists of a

unique input signal and the corresponding

desired response.

Properties and Capabilities of Neural

Networks

The network is presented an example picked at

random from the set,

and

the synaptic weights(free parameters) of the

network are modified so as to minimize the

difference between the desired response and the

actual response of the network

Properties and Capabilities of Neural

Networks

The training of the network is repeated for many

examples in the set until the network reaches a

steady state, where there are no further significant

changes in the synaptic weights;

The previously applied training examples may be

reapplied during the training session but in a

different order.

Properties and Capabilities of

Neural Networks

Thus the network learns from the examples by

constructing an input-output mapping for the

problem at hand.

Properties and Capabilities of Neural

Networks

Such an approach brings to mind the study of

nonparametric statistical inference which is a branch of

statistics dealing with model-free estimation, or, from a

biological viewpoint, tabula rasa learning (Geman et al.,

1992).

(tabula rasa: a smoothed or blank tablet, a mind not yet

influenced by outside impressions and experiences)

([Medieval Latin tabula rāsa : Latin tabula, tablet + Latin

rāsa, feminine of rāsus, erased.]

Properties and Capabilities of

Neural Networks

Consider, for example, a pattern classification task,

where the requirement is to assign an input signal

representing a physical object or event to one of several

prespecified categories (classes).

In a nonparametric approach to this problem, the

requirement is to "estimate" arbitrary decision

boundaries in the input signal space for the patternclassification task using a set of examples, and to do so

without invoking a probabilistic distribution model.

Properties and Capabilities of Neural

Networks

A similar point of view is implicit in the

supervised learning paradigm, which suggests

a close analogy between the input-output

mapping performed by a neural network and

nonparametric statistical inference.

paradigm:1.Grammar. a.a set of forms all of which

contain a particular element, esp. the set of all

inflected forms based on a single stem or theme.

b.a display in fixed arrangement of such a set, as

boy, boy's, boys, boys'. 2.an example serving as a

model; pattern. [Origin: 1475–85; < LL paradīgma

< Gk parádeigma pattern (verbid of paradeiknýnai

to show side by side), equiv. to para- para-1 + deik, base of deiknýnai to show (see deictic) + -ma n.

suffix ]

Properties and Capabilities of Neural

Networks

analogy: 1550, from L. analogia, from Gk.

analogia "proportion," from ana- "upon,

according to" + logos "ratio," also "word,

speech, reckoning." A mathematical term

used in a wider sense by Plato.

Properties and Capabilities of Neural

Networks

3. Adaptivity.

Neural networks have a built-in capability to

adapt their synaptic weights to changes in the

surrounding environment. In particular, a neural

network trained to operate in a specific

environment can be easily retrained to deal with

minor changes in the operating environmental

conditions.

Properties and Capabilities of Neural

Networks

Moreover, when it is operating in a nonstationary

environment (i.e., one whose statistics change

with time), a neural network can be designed to

change its synaptic weights in real time. The

natural architecture of a neural network for

pattern classification, signal processing, and

control applications, coupled with the adaptive

capability of the network, make it an ideal tool for

use in adaptive pattern classification, adaptive

signal processing, and adaptive control.

Properties and Capabilities of

Neural Networks

As a general rule, it may be said that the more

adaptive we make a system in a properly

designed fashion, assuming the adaptive system

is stable, the more robust its performance will

likely be when the system is required to operate

in a nonstationary environment.

Properties and Capabilities of

Neural Networks

It should be emphasized, however, that

adaptivity does not always lead to

robustness; indeed, it may do the very

opposite. For example, an adaptive system

with short time constants may change

rapidly and therefore tend to respond to

spurious disturbances, causing a drastic

degradation in system performance.

Properties and Capabilities of

Neural Networks

To realize the full benefits of adaptivity, the

principal time constants of the system should be

long enough for the system to ignore spurious (L.

spurius, false) disturbances and yet short enough

to respond to meaningful changes in the

environment; the problem described here is

referred to as the stability-plasticity dilema

(Grossberg, 1988). Adaptivity (or “in situ,(L.)in

the original situation” training as it is sometimes

referred to) is an open research topic.

Properties and Capabilities of

Neural Networks

4. Evidential Response

In the context of pattern classification, a neural

network can be designed to provide information

not only about which particular pattern to select,

but also about the confidence in the decision

made. This latter information may be used to

reject ambiguous patterns, should they arise, and

thereby improve the classification performance of

the network.

Properties and Capabilities of

Neural Networks

5.

Contextual Information

(L. contextus,contexere-con-, texere, textum, to weave)

Knowledge is represented by the very structure and

activation state of a neural network.

Every neuron in the network is potentially affected by the

global activity of all other neurons in the network.

Consequently, contextual information is dealt with

naturally by a neural network.

Properties and Capabilities of

Neural Networks

6. Fault Tolerance

A neural network, implemented in hardware form, has

the potential to be inherently fault tolerant in the sense

that its performance is degraded gracefully under adverse

operating conditions (Bolt, 1992).

For example, if a neuron or its connecting links are

damaged, recall of a stored pattern is impaired in quality.

However, owing to the distributed nature of information

in the network, the damage has to be extensive before

the overall response of the network is degraded seriously.

Thus, in principle, a neural network exhibits a graceful

degradation in performance rather than catastrophic

failure.

Properties and Capabilities of

Neural Networks

7. VLSI Implementability

The massively parallel nature of a neural network makes it

potentially fast for the computation of certain tasks. This same

feature makes a neural network ideally suited for implementation

using very-Iarge-scale-integrated (VLSI) technology.

The particular virtue of VLSI is that it provides a means of

capturing truly complex behavior in a highly hierarchical fashion

(Mead and Conway, 1980), which makes it possible to use a neural

network as a tool for real-time applications involving pattern

recognition, signal processing, and control.

Properties and Capabilities of

Neural Networks

8. Uniformity of Analysis and Design. Basically, neural

networks enjoy universality as information processors.

We say this in the sense that the same notation is used in

all the domains involving the application of neural

networks. This feature manifests itself in different ways:

Neurons, in one form or another, represent an ingredient

common to all neural networks.

This commonality makes it possible to share theories

and learning algorithms in different applications of

neural networks.

Modular networks can be built through a seamless

integration of modules.

Properties and Capabilities of Neural

Networks

analysis: [Medieval Latin, from Greek analusis, a dissolving, from analūein, to

undo : ana-, throughout; see ana- + lūein, to loosen; see leu- in Indo-European

roots.]

(Download Now or Buy the Book) The American Heritage® Dictionary of the English

Language, Fourth Edition

Copyright © 2006 by Houghton Mifflin Company.

Published by Houghton Mifflin Company. All rights reserved.Online Etymology

Dictionary - Cite This Source - Share This

analysis

1581, "resolution of anything complex into simple elements" (opposite of

synthesis), from M.L. analysis, from Gk. analysis "a breaking up," from analyein

"unloose," from ana- "up, throughout" + lysis "a loosening" (see lose).

Psychological sense is from 1890. Phrase in the final (or last) analysis (1844),

translates Fr. en dernière analyse.

Properties and Capabilities of Neural

Networks

Design: 1548, from L. designare "mark out,

devise," from de- "out" + signare "to mark," from

signum "a mark, sign." Originally in Eng. with the

meaning now attached to designate (1646, from L.

designatus, pp. of designare); many modern uses

of design are metaphoric extensions. Designer

(adj.) in the fashion sense of "prestigious" is first

recorded 1966; designer drug is from 1983.

Designing "scheming" is from 1671. Designated

hitter introduced in American League baseball in

1973, soon giving wide figurative extension to

designated.

Properties and Capabilities of

Neural Networks

9. Neurobiological Analogy

The design of a neural network is motivated by

analogy with the brain, which is a living proof

that fault-tolerant parallel processing is not only

physically possible but also fast and powerful.

Neurobiologists look to (artificial) neural

networks as a research tool for the interpretation

of neurobiological phenomena.

Properties and Capabilities of

Neural Networks

For example, neural networks have been used to provide insight

on the development of premotor (relating to, or being the area of

the cortex of the frontal lobe lying immediately in front of the

motor area of the precentral gyrus(Any of the prominent, rounded,

elevated convolutions on the surfaces of the cerebral hemispheres.

[Latin gȳrus, circle; see gyre.] ) )circuits in the oculomotor

(1.Of or relating to movements of the eyeball: an oculomotor

muscle.

2.Of or relating to the oculomotor nerve.

[Latin oculus, eye; see okw- in Indo-European roots + motor.]

system (responsible for eye movements) and the manner in which

they process signals (Robinson, 1992). On the other hand,

engineers look to neurobiology for new ideas to solve problems

more complex than those based on conventional hard-wired

design techniques.

Properties and Capabilities of

Neural Networks

Here, for example, we may mention the development of

a model sonar receiver based on the bat (Simmons et aI.,

1992). The batinspired model consists of three stages:

(1) a front end that mimics the inner ear of the bat in

order to encode waveforms;

(2) a subsystem of delay lines that computes echo delays;

(3) a subsystem that computes the spectrum of echoes,

which is in turn used to estimate the time separation of

echoes from multiple target glints.

Properties and Capabilities of

Neural Networks

The motivation is to develop a new sonar receiver

that is superior to one designed by conventional

methods. The neurobiological analogy is also

useful in another important way: It provides a

hope and belief (and, to a certain extent, an

existence proof) that physical understanding of

neurobiological structures could indeed influence

the art of electronics and thus VLSI (Andreou,

1992).

Properties and Capabilities of

Neural Networks

With inspiration from neurobiological analogy in

mind, it seems appropriate that we take a brief

look at the structural levels of organization in the

brain.

Properties and Capabilities of

Neural Networks

1.2 Structural Levels of Organization in the

Brain

The human nervous system may be viewed

as a three-stage system,(Arbib, 1987).

Block-diagram representation of nervous system

Properties and Capabilities of

Neural Networks

Central to the system is the brain, represented by the

neural (nerve) net in this figure, which continually

receives information, perceives it, and makes

appropriate decisions. Two sets of arrows are shown in

this figure:

1 Those pointing from left to right indicate the forward

transmission of information-bearing signals through

the system.

2 The arrows pointing from right to left signify the

presence of feedback in the system.

Properties and Capabilities of

Neural Networks

The receptors in the figure convert stimuli from

the human body or the external environment into

electrical impulses that convey information to the

neural net (brain). The effectors, on the other

hand, convert electrical impulses generated by the

neural net into discernible responses as system

outputs. . In the brain there are both small-scale

and large-scale anatomical organizations, and

different functions take place at lower and higher

levels.

Properties and Capabilities of

Neural Networks

This figure shows a

hierarchy of interwoven

levels of organization that

has emerged from the

extensive work done on

the analysis of local

regions in the brain

(Churchland and

Sejnowski, 1992; Shepherd

and Koch, 1990).

Properties and Capabilities of

Neural Networks

Proceeding upward from synapses that represent

the most fundamental level and that depend on

molecules and ions for their action, we have

neural microcircuits dendritic trees, and then

neurons.

Properties and Capabilities of

Neural Networks

A neural microcircuit refers to an assembly

of synapses organized into patterns of

connectivity so as to produce a functional

operation of interest. A neural microcircuit

may be likened to a silicon chip made up of

an assembly of transistors.

Properties and Capabilities of

Neural Networks

The smallest size of microcircuits is measured in

micrometers (m), and their fastest speed of

operation is measured in milliseconds. The neural

microcircuits are grouped to form dendritic

subunits within the dendritic trees of individual

neurons. The whole neuron, about 100 m in size,

contains several dendritic subunits.

Properties and Capabilities of

Neural Networks

At the next level of complexity, we have local

circuits (about 1 mm in size) made up of

neurons with similar or different properties;

these neural assemblies perform operations

characteristic of a localized region in the

brain.

Properties and Capabilities of

Neural Networks

This is followed by interregional circuits made up

of pathways, columns, and topographic maps,

which involve multiple regions located in different

parts of the brain.

Properties and Capabilities of

Neural Networks

Topographic maps are organized to respond to

incoming sensory information. These maps are

often arranged in sheets, as in the superior

colliculus, where the visual, auditory, and

somatosensory maps are stacked in adjacent

layers in such a way that stimuli from

corresponding points in space lie above each

other. Finally, the topographic maps, and other

interregional circuits mediate specific types of

behavior in the central nervous system .

Properties and Capabilities of

Neural Networks

It is important to recognize that the

structural levels of organization described

herein are a unique characteristic of the

brain. They are nowhere to be found in a

digital computer, and we are nowhere close

to realizing them with artificial neural

networks. Nevertheless, we are inching our

way toward a hierarchy of computational

levels similar to that described in the last

figure.

Properties and Capabilities of

Neural Networks

The artificial neurons we use to build our

neural networks are truly primitive in

comparison to those found in the brain.

The neural networks we are presently able to

design are just as primitive compared to the

local circuits and the interregional circuits in

the brain.

Properties and Capabilities of

Neural Networks

What is really satisfying, however, is the

remarkable progress that we have made on

so many fronts during the past 20 years.

With the neurobiological analogy as the

source of inspiration, and the wealth of

theoretical and technological tools that we

are bringing together, it is for certain that in

another 10 years our understanding of

artificial neural networks will be much more

sophisticated than it is today.

Properties and Capabilities of

Neural Networks

Our primary interest here is confined to the study

of artificial neural networks from an engineering

perspective, to which we refer simply as neural

networks. We begin the study by describing the

models of (artificial) neurons

that form the basis of the neural networks

considered in these lectures.

Models of a Neuron

Models of a Neuron

A neuron is an information-processing unit that is

fundamental to the operation of a neural network.

The figure on the next slide shows the model for a

neuron.

Models of a Neuron

Nonlinear model of a neuron

Models of a Neuron

1. A set of synapses or connecting links, each

of which is characterized by a weight or

strength of its own. Specifically, a signal xj

at the input of synapse j connected to

neuron k is multiplied by the synaptic

weight wkj. It is important to make a note

of the manner in which the subscripts of

the synaptic weight wkj are written.

Models of a Neuron

The first subscript refers to the neuron in

question and the second subscript refers to

the input end of the synapse to which the

weight refers; the reverse of this notation is

also used in the literature.

The weight wkj is positive if the associated

synapse is excitatory; it is negative if the

synapse is inhibitory (Middle English

inhibiten, to forbid, from Latin inhibēre, inhibit, to restrain, forbid : in-, in; see in-2 + habēre, to

hold; see ghabh- in Indo-European roots.] )

Models of a Neuron

2.An adder for summing the input signals,

weighted by the respective synapses of

the neuron; the operations described

here constitute a linear combiner.

Models of a Neuron

3.An activation function for limiting the amplitude

of the output of a neuron. The activation function,

is also referred to in the literature as a squashing

function in that it squashes (limits) the permissible

amplitude range of the output signal to some finite

value. Typically, the normalized amplitude range

of the output of a neuron is written as the closed

unit interval [0,1] or alternatively [-1,1].

Models of a Neuron

4. The model of a neuron also includes an

externally applied threshold k that has the

effect of lowering the net input of the

activation function.

On the other hand, the net input of the

activation function may be increased by

employing a bias term rather than a

threshold; the bias is the negative of the

threshold.

Models of a Neuron

In mathematical terms, we may describe neuron k by

writing the following pair of equations:

p

Mathematical Model

of a Neuron

uk wkj x j

j 1

yk ( uk k )

where xj’s are the input signals; wkj’s are the synaptic

weights of neuron k; uk is the linear combiner output;

k is the threshold; is the activation function;

and yk is the output signal of the neuron.

Models of a Neuron

Block-Diagram Representation of a Neuron

p

uk wkj x j

j 1

yk ( u k k )

Models of a Neuron

The use of threshold k

has the effect of

applying an affine transformation to the

output uk of the linear combiner in the

model of the figure, as shown by

vk u k k

Models of a Neuron

In particular, depending on

whether the threshold k is

positive or negative, the

relationship between the

effective internal activity level or

activation potential vk of neuron

k and the linear combiner

output uk is modified in the

manner illustrated in the figure.

Note that as a result of this

affine transformation, the graph

of vk versus uk no longer passes

through the origin.

Models of a Neuron

The threshold k is an external parameter

of artificial neuron k. We may account for its

presence as in the above equation.

Equivalently, we may formulate the

combination of the two equations as follows:

p

vk wkj x j

j 0

yk (vk )

Models of a Neuron

Here we have added a new synapse, whose input

is

x0 1

and whose weight is

wk 0 k

Models of a Neuron

We may therefore

reformulate the model

of neuron k as in the

figure, where the effect

of the threshold is

represented by doing

two things:

Models of a Neuron

(1) adding a new input signal fixed at -1, and

(2) adding a new synaptic weight equal to the

threshold k .

Alternatively, we may model the neuron as in

the following slide:

Y1

Models

of

a

Neuron

where the

combination of

fixed input

xo = + 1 and

weight wkO = bk

accounts for the

bias bk•

Although the

models of the

two figures are

different in

appearance,

they are

mathematically

equivalent.

Slayt 92

Y1

YTU; 15.03.2005

Models of a Neuron

Signal-Flow Graph Representation of a Neuron

uk

p

w

j 1

kj

xj

yk ( uk k )

Models of a Neuron

Signal-Flow Graph Representation of a Neuron

Two different types of links may be distinguished:

(a) Synaptic links, defined by a linear input-output

relation. Specifically, the node signal xj is

multiplied by the synaptic weight wkj to produce

the node signal vk .

(b) Activation links, defined in general by a

nonlinear input-output relation. This form of

relationship is the nonlinear activation function

given as

(.)

Models of a Neuron

The Activation Function

The activation function, denoted by

y (v )

defines the output y of a neuron in terms of the

activity level at its input v.

Models of a Neuron

We may identify three basic types of activation

functions:

Threshold Function

2. Piecewise-linear Function

3. Sigmoid Function

1.

Models of a Neuron

Threshold (hard limiter or binary activation )

Function (leading to discrete perceptron)

1.

(v )

1 1

(v) sgn(v)

2 2

1

0

(a) Unipolar

v

Models of a Neuron

(v )

(v) sgn(v)

1

v

00

0

-1

(b) Bipolar

Models of a Neuron

2. Piecewise-linear Function

(v )

1 1

1

1

(v ) v v

2 2

2

2

1

0.5

-0.5

0

0

0

(a) Unipolar

v

y ij (t ) f ( xij )

1

xij (t ) 1 x ij (t ) 1

2

Models of a Neuron

(v )

1

(v ) v 1 v 1

2

1

-1

0

1

-1

(b) Bipolar

v

Models of a Neuron

3. Sigmoid Function

(v )

1

0.5

(a) Unipolar

1

(v )

;a 0

av

1

e

v

Models of a Neuron

(v )

1

v

-1

(b) Bipolar

1 e av

2

=

-1 ; a 0

(v )

av

av

1 e

1 e

Models of Artificial Neural Networks

DEFINITION OF Neural Network

(Jacek M. Zurada, ARTIFICIAL NEURAL SYSTEMS, 1992, West Publishing Company)

A Neural Network is an interconnection of

neurons such that neuron outputs are

connected, through weights, to all other

neurons including themselves; both lagfree

and delay connections are allowed.

Models of Artificial Neural Networks

Neural Networks Viewed as Directed Graphs

1.

2.

Block-Diagram Representation (BDR)

Signal-Flow Graph Representation (SFGR)

These are obtained when BDR and SFGR for

the neurons are used.

Models of Artificial Neural Networks

An alternative definition of Neural Network

(Simon Haykin, NEURAL NETWORKS, 1994, Macmillan College Publishing Company)

A neural network is a directed graph (SFG) consisting of

nodes with interconnecting synaptic and activation

links, and which is characterized by four properties:

Each neuron is represented by a set of linear synaptic

links, an externally applied threshold, and a nonlinear

activation link. The threshold is represented by a

synaptic link with an input signal fixed at a value of -1.

Models of Artificial Neural Networks

2. The synaptic links of a neuron weight their

respective input signals.

3. The weighted sum of the input signals

defines the total internal activity level of

the neuron in question.

4. The activation link squashes the internal

activity level of the neuron to produce an

output that represents the output of the

neuron.

Network Architectures

In general, we may identify four different

classes of network architectures:

1. Single-Layer Feedforward Networks

2. Multilayer Feedforward Networks

3. Recurrent Networks

4. Lattice Structures

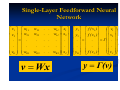

Network Architectures

1.

Single-Layer Feedforward Networks

A layered neural network is a network of

neurons organized in the form of layers. In

the simplest form of a layered network, we

just have an input layer of source nodes that

projects onto an output layer of neurons

(computation nodes), but not vice versa.

Network Architectures

In other words, this network is strictly of a

feedforward type. It is illustrated on the

following slide for the case of four nodes in

both the input and output layers. Such a

network is called a single-layer network,

with the designation "single layer" referring

to the output layer of computation nodes

(neurons). In other words, we do not count

the input layer of source nodes, because no

computation is performed there.

Network Architectures

Network Architectures

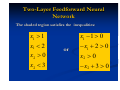

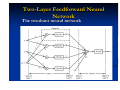

2. Multilayer Feedforward Networks

The second class of a feedforward neural

network distinguishes itself by the presence

of one or more hidden layers, whose

computation nodes are correspondingly

called hidden neurons or hidden units. The

function of the hidden neurons is to

intervene between the external input and the

network output.

Network Architectures

Network Architectures

By adding one or more hidden layers, the network is

enabled to extract higher-order statistics, for (in a rather

loose sense) the network acquires a global perspective

despite its local connectivity by virtue of:

the extra set of synaptic connections

the extra dimension of neural interactions.

The ability of hidden neurons to extract higher-order

statistics is particularly valuable when the size of the

input layer is large.

Network Architectures

The source nodes in the input layer of the

network supply respective elements of the

activation pattern (input vector), which constitute

the input signals applied to the neurons

(computation nodes) in the second layer (i.e., the

first hidden layer). The output signals of the

second layer are used as inputs to the third layer,

and so on for the rest of the network.

Network Architectures

Typically, the neurons in each layer of the

network have as their inputs the output signals of

the preceding layer only.

The set of output signals of the neurons in the

output (final) layer of the network constitutes the

overall response of the network to the activation

pattern supplied by the source nodes in the input

(first) layer.

Network Architectures

This graph illustrates the

layout of a multilayer

feedforward neural

network for the case of a

single hidden layer. For

brevity this network is

referred to as a 10-4-2

network in that it has 10

source nodes, 4 hidden

neurons, and 2 output

neurons.

Network Architectures

As another example, a feedforward

network with p source nodes, h1

neurons in the first hidden layer, h2

neurons in the second layer, and q

neurons in the output layer, say, is

referred to as a p-h1-h2-q network.

Network Architectures

The neural network of

this figure is said to

be fully connected in

the sense that every

node in each layer of

the network is

connected to every

other node in the

adjacent forward

layer.

Network Architectures

If, however, some of the communication links

(synaptic connections) are missing from the

network, we say that the network is partially

connected. A form of partially connected

multilayer feedforward network of particular

interest is a locally connected network. An

example of such a network with a single hidden

layer is presented on the next slide. Each neuron

in the hidden layer is connected to a local (partial)

set of source nodes that lies in the immediate

neighborhood.

Network Architectures

Such a set of localized

nodes feeding a neuron

is said to constitute the

receptive field of the

neuron.

Likewise, each neuron in

the output layer is

connected to a local set

Partially connected

of hidden neurons.

feedforward neural network

Network Architectures

3. Recurrent (Feedback or Dynamical)

Networks

A recurrent neural network

distinguishes itself from a feedforward

neural network in that it has at least

one feedback loop. For example, a

recurrent network may consist of a

single layer of neurons with each

neuron feeding its output signal back

to the inputs of all the other neurons,

as illustrated in the architectural

graph of the figure on the right. In the

structure depicted in this figure there

are no self-feedback loops in the

Recurrent network with no

network; self-feedback refers to a

situation where the output of a neuron self-feedback loops and no

is fedback to its own input.

hidden neurons

Network Architectures

The recurrent network

illustrated on the previous

slide also has no hidden

neurons. Here we illustrate

another class of recurrent

networks with hidden

neurons. The feedback

connections shown originate

from the hidden neurons as

well as the output neurons.

Recurrent network with

hidden neurons

Network Architectures

The presence of feedback loops, be it as in the

recurrent structure with or without hidden

neurons, has a profound impact on the learning

capability of the network, and on its

performance. Moreover, the feedback loops

involve the use of particular branches

composed of unit-delay elements (denoted by

z-1), which result in a nonlinear dynamical

behavior by virtue of the nonlinear nature of

the neurons.

Network Architectures

4. Lattice (Multicategory Perceptron)

Structures

A lattice consists of a one-dimensional,

two-dimensional, or higher-dimensional

array of neurons with a corresponding set

One dimensional lattice of 3 neurons

of source nodes that supply the input

signals to the array; the dimension of the

lattice refers to the number of the

dimensions of the space in which the

graph lies.

A lattice network is really a feedforward

network with the output neurons

arranged in rows and columns.

Two dimensional lattice of 3-by-3 neurons

The Perceptron

The perceptron is the simplest

form of a neural network used

for the classification of a special

type of patterns said to be

linearly separable (i.e., patterns

that lie on opposite sides of a

hyperplane).

Basically, it consists of a single

neuron with adjustable synaptic

weights and threshold, as shown

in the figures.

The Perceptron

The algorithm used to adjust the free parameters of this

neural network first appeared in a learning procedure

developed by Rosenblatt (1958, 1962) for his perceptron

brain model. Indeed, Rosenblatt proved that if the

patterns (vectors) used to train the perceptron are drawn

from two linearly separable classes, then the perceptron

algorithm converges and positions the decision surface in

the form of a hyperplane between the two classes. The

proof of convergence of the algorithm is known as the

perceptron convergence theorem.

The Perceptron

The single-layer perceptron depicted has a single

neuron. Such a perceptron is limited to performing

pattern classification with only two classes.

By expanding the output (computation) layer of the

perceptron to include more than one neuron, we may

correspondingly form classification with more than two

classes. However, the classes would have to be linearly

separable for the perceptron to work properly.

The Perceptron

From this model we find that

the linear combiner output

(i.e., hard limiter input) is

p

v wkj x j

j 1

The purpose of the perceptron is to classify the set of

externally applied stimuli x1 ,x2,…., xp into one of two

classes, C1 or C2, say. The decision rule for the

classification is to assign the point represented by the

inputs x1 ,x2,…., xp to class C1, if the perceptron output y is

+ 1 and to class C2 if it is -1.

The Perceptron

To develop insight into the behavior of a pattern

classifier, it is customary to plot a map of the decision

regions in the p-dimensional signal space spanned by

the p input variables x1 ,x2,…., xp. In the case of an

elementary perceptron, there are two decision regions

separated by a hyperplane defined by

p

w

j 1

kj

xj 0

The Perceptron

This is illustrated here for the case of

two input variables xl and x2, for which

the decision boundary takes the form of

a straight line called the decision line. A

point (x1,x2) that lies above the decision

line is assigned to class C1, and a point

(x1,x2) that lies below the decision line

is assigned to class C2. Note also that

the effect of the threshold is merely to

shift the decision line away from the

origin. The synaptic weights w1 w2, ..,wp

of the perceptron can be fixed or

adapted on an iteration-by-iteration

basis. For the adaptation, we may use

an error-correction rule known as the

perceptron convergence algorithm.

The Perceptron

We find it more convenient to

work with the modified

signal

flow graph given here.

In this second model, which is

equivalent to that of the previous

figure, the threshold is treated as

a synaptic weight is connected

to a fixed input equal to -1. We may thus define the

(p + 1)-by-1 (augmented) input vector and the

corresponding (augmented) weight vector as:

x [ x1

x2 ... ... x p 1]t

w [ w1

w2 ... ... wp

]t

The Perceptron

Pattern Space

Any pattern can be represented by a point in

n-dimensinal Euclidean space En called the

pattern space. Points in that space corresponding to

members of the pattern set are n-tuple vectors x.

The Perceptron

Example 1: Consider the six patterns in two dimensional

pattern space shown in the following figure.

x2

(0, 0)

(-0.5, -1)

(-1, -2)

(2,0)

x1

(1.5,-1)

(1, -2)

The Perceptron

Design a perceptron such that these are classified

according to their membership in sets as follows :

2

1.5

1

, , : class 1

1

2

0

0

0.5

1

, : class 2

,

1

2

0

The Perceptron

One possible decision line is given by x2= 2x1-2

which is drawn in the following figure.

x2

x = 2x -2

2

1

(0, 0)

(-0.5, -1)

(-1, -2)

(2,0)

x1

(1.5,-1)

(1, -2)

The Perceptron

One decision surface for this line is obtained as:

x3 2 x1 x2 2

x3 0 2 x1 x2 2 0 gives the points on the decision line

x3 0 2 x1 x2 2 0 gives the part of the surface above the decision line

x3 0 2 x1 x2 2 0 gives the part of the surface below the decision line

Such a pattern classification can be performed by

the following (discrete) perceptron (dichotomizer):

dichotomize: to divide or separate into two parts

dicha: in two; tomia: to cut

The Perceptron

x1

x2

-2

1

-2

-1

+

v

sgn(v)

y

y sgn(2 x1 x2 2)

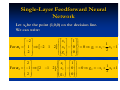

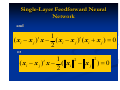

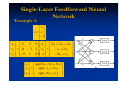

Single-Layer Feedforward Neural

Network

Example 2: Assume that a set of eight points,

P0, P1... , P7 , in three-dimensional space is available.

The set consists of all vertices of a three-dimensional

cube as follows:

{P0(-l, -1, -l), P1(-l, -1, l), P2(-1, 1, -1), P3(-1, 1, 1),

P4(1, -1, -l), P5(1, -1, 1), P6(1,1, -1), P7(1, 1, 1)}

Elements of this set need to be classified into two categories

The first category is defined as containing points with two

or more positive ones; the second category contains all the

remaining points that do not belong to the first category.

Single-Layer Feedforward Neural

Network

Classification of points P3, P5, P6, and P7 can be

based on the summation of coordinate values for

each point evaluated for category membership.

Notice that for each point Pi (x1, x2, x3) ,where

i = 0, ... , 7, the membership in the category can be

established by the following calculation:

1, then category 1

If sgn( x1 x2 x3 )

1, then category 2

Single-Layer Feedforward Neural

Network

The neural network given below implements the

above expression:

Single-Layer Feedforward Neural

Network

The network above performs the

threedimensional Cartesian space partitioning

as illustrated below :

Single-Layer Feedforward Neural

Network

Discriminant Functions

In Example 1

x3 2 x1 x2 2

can be viewed as a Discriminant Function. We

may also write

g ( x1 , x2 ) 2 x1 x2 2

or

x1

g ( x ) 2 x1 x2 2 where x =

x2

Single-Layer Feedforward Neural

Network

g ( x1 , x2 ) 2 x1 x2 2

can also be viewed as the equation of a plane in

3-D Euclidean space.

On the other hand

g ( x1 , x2 ) 0 2 x1 x2 2 0

is the intersection line of the above plane with the

xy-plane.

Single-Layer Feedforward Neural

Network

Obviously:

g ( x ) 0 2 x1 x2 2 0 gives the points on the decision line

g ( x ) 0 2 x1 x2 2 0 gives the points on the plane above the decision line

g ( x ) 0 2 x1 x2 2 0 gives the points on the plane below the decision line

Single-Layer Feedforward Neural

Network

Since on the decision line we have

g ( x1 , x2 ) 0

we can write

g ( x1 , x2 )

g ( x1 , x2 )

dg ( x1 , x2 )

dx1

dx2 0

x1

x2

where dx1 and dx2 are the increments given to

x1 and x2 on the decision line.

Single-Layer Feedforward Neural

Network

Now defining

where

g ( x1 , x2 )

x

dx1

1

and dr

g ( x1 , x2 )

g ( x1 , x2 )

dx2

x

2

g and dr

are known to be the gradient vector (or normal

vector) and the tangent vector, respectively,

Single-Layer Feedforward Neural

Network

The gradient vector points toward the positive side

of the decision line. However, there are two normal

vectors, one pointing toward the positive side, 1,

and the other toward the negative side, 2 =-1.

For the above example the gradient and normal

vectors are given by:

g ( x1 , x2 )

x

2

2

2

1

, 1 g ( x1 , x2 ) ,2

g ( x1 , x2 )

g ( x1 , x2 ) 1

1

1

x

2

Single-Layer Feedforward Neural

Network

In fact 2 is obtained from

g ( x1 , x2 ) 0

Note that 1 and 2 are the projections of the

normal vectors on the x-y plane of two intersecting

planes whose intersection line is given by

g ( x1 , x2 ) 0

Single-Layer Feedforward Neural

Network

Although 1 and 2 are unique, there are infinetely

many plane pairs whose intersection line is given

by

g ( x1 , x2 ) 0

Plane pairs can be built by appropriately

augementing the 2-D normal vectors 1 and 2

to 3-D normal vectors which will be the normal

vectors of the two intersecting planes.

Single-Layer Feedforward Neural

Network

The 2-D normal vectors are plane vectors given in

the x-y plane.

2

2

1 ,2

1

1

These can be augmented to 3-D by adding a third

component, say 2, yielding

2

2

n1 1 , n2 1

2

2

Single-Layer Feedforward Neural

Network

The details of building the augmented vectors

are shown below:

g

n1

-2

-1

-2

x2

Decision

line

n

1 2

0

1

2

x1

Single-Layer Feedforward Neural

Network

Note that 1 and 2 are the normal vectors of the

plane that is perpendicular to the x-y plane and

intersects the x-y plane at the decision line.

On the other hand the vectors n1 and n2 are the

normal vectors of the planes obtained by rotating

the above perpendicular plane around the decision

line by and , respectively.

Single-Layer Feedforward Neural

Network

We can now determine the equations for the these

planes by using the normal vector-point form of

plane equation given as:

t

n ( x x0 ) 0

where:

•n is the normal vector of the plane,

•x is the vector connecting any point on the plane

to the origin,

•x0 is the vector connecting a fixed point on the

plane to the origin.

Single-Layer Feedforward Neural

Network

This means that x-x0 represents the vector

connecting all possible points x on the plane

to fixed point x0 on the same plane. That is x-x0

is a vector that lies on the plane.

Now let us find the plane equations for the two

normal vectors found above.

Single-Layer Feedforward Neural

Network

Let x0 be the point (1,0,0) on the decision line.

We can write:

x1 1

2

1

For n1 1 2 1 2 x2 0 0 g1 x1 x2 1

2

2

g1 0

x1 1

2

1

For n2 1 2 1 2 x2 0 0 g 2 x1 x2 1

2

2

g 2 0

Single-Layer Feedforward Neural

Network

Because of the way g1(x) and g2(x) are built we can

state the following:

g1 ( x) g 2 ( x) 0 on the positive side of the decision line

g 2 ( x) g1 ( x) 0 on the negative side of the decision line

2

2

1

1

For n1 1 g1 x1 x2 1 For n2 1 g 2 x1 x2 1

2

2

2

2

Single-Layer Feedforward Neural

Network

g

n2

n1

g2

Decision

Decisio

line

g1

x1

Decision

line

x2

Single-Layer Feedforward Neural

Network

Now we can compute g1(x) and g2(x) for the selected

patterns in Example 1.

Class 1

Class 2

(2,0)

(1.5,-1)

(1,-2)

(0,0)

(-0.5,1)

(-1,-2)

g1-g2>0

g1-g2>0

g1-g2>0

g2-g1>0

g2-g1>0

g2-g1>0

Single-Layer Feedforward Neural

Network

Henceforth, such gi(x) functions will be called

Discriminant Functions. We can conclude that:

g1 ( x) g 2 ( x) for the patterns in Class 1

g 2 ( x) g1 ( x) for the patterns in Class 2

Single-Layer Feedforward Neural

Network

Minimum Distance Classification

The classification of two clusters is carried out in

such a way that the boundary of these two clusters

is drawn as a line perpendicular to and passing

through the midpoint of the line connecting the

center points of two clusters . Therefore the

boundary line is the perpendicular bisector of a

connecting line.

Single-Layer Feedforward Neural

Pi Network

Positive side

xi-xj

xi

Negative side

P0=(xi+xj)/2

Pj

xj

0

Single-Layer Feedforward Neural

Network

Now we will derive the equation of the boundary line.

Let the vector x and x0 represent any point on this and

the point P0, respectively. Then the following must hold:

( xi x j ) ( x x 0 ) 0

t

which can be written in the form

1

( xi x j ) ( x ( xi x j )) 0

2

t

Single-Layer Feedforward Neural

Network

and

1

t

( xi x j ) x ( xi x j ) ( xi x j ) 0

2

t

or

2

1

2

( xi x j ) x ( xi x j ) 0

2

t

Single-Layer Feedforward Neural

Network

Now defining

1

gij ( x ) ( xi x j ) x ( xi

2

t

2

2

xj )

We have already seen that the boundary (decision)

line can be taken as the intersection of two planes

gi and gj .

Single-Layer Feedforward Neural

Network

Therefore

gij ( x ) gi ( x ) g j ( x )

where we have called gi (x) discriminant functions

and shown that they are associated with plane

equations.

Single-Layer Feedforward Neural

Network

Now using the two equations above we obtain

2

1

2

( xi x j ) x ( xi x j ) gi ( x ) g j ( x )

2

t

which can be used to make the following

identification:

1

gi ( x ) xi x xi

2

t

2

1

g j ( x) x j x x j

2

t

2

Single-Layer Feedforward Neural

Network

gi (x) can also be expressed as:

gi ( x ) wi x wi ,n1

t

Therefore we can make the identification:

wi = x i

1

wi ,n1 xi

2

2

Single-Layer Feedforward Neural

Network

An alternative approach towards the construction

of discriminant functions may be taken as follows:

Let us assume that a minimum–distance

classification is requried to classify patterns into

R categories. Each of the classes is represented by

its center point Pi , i=1,2,…..,R. The Euclidean

distance between an input pattern x and the point

Pi is given by the norm of the vector x-xi as:

x xi ( x xi ) ( x xi )

t

Single-Layer Feedforward Neural

Network

A minimum–distance classifier computes the distance

from a pattern of unknown classification to each of the

center points Pi . Then the category number of the point

that yields the minimum distance is assigned to the

unknown pattern.

Squaring the above equation yields

x xi

2

1 t

x x - 2x x + x xi = x x - 2(x x - xi xi ) > 0

2

t

t

i

t

i

t

t

i

Single-Layer Feedforward Neural

Network

Since xxt is independent of i, this term is constant

with respect to the categories. Therefore, in order

to minimize the distance

x xi

we need to maximize

1 t

gi (x) = x x - xi xi

2

t

i

which is called a discriminant function.

Single-Layer Feedforward Neural

Network

Example 3: A linear minimum-distance classifier

will be designed for the three points given as:

10

2

5

x1 , x2 , x3

2

5

5

It is also assumed that the index of each point

(pattern)corresponds to its class number.

The three points and the connecting lines

constitute a triangle which is shown on the

next slide:

Single-Layer Feedforward Neural

Network

x2

P3(-5,5)

P1(10,2)

0

P2(2,-5)

x1

Single-Layer Feedforward Neural

Network

Now let us draw the circle passing through all three

vertices of the triangle, the circumcircle. We can

conclude that each boundary is a perpendicular

bisector of the triangle. A perpendicular bisector of a

triangle is a straight line passing through the

midpoint of a side and being perpendicular to it, i.e.

forming a right angle with it. The three

perpendicular bisectors meet at a single point, the

triangle's circumcenter; this point is the center of the

circumcircle.

Single-Layer Feedforward Neural

Network

x2

P3(-5,5)

0

P2(2,-5)

P1(10,2)

x1

Single-Layer Feedforward Neural

Network

Now using

10

2

5

x1 , x2 , x3

2

5

5

and

1

t

gij ( x ) ( xi x j ) x ( xi

2

we obtain

2

2

xj )

Single-Layer Feedforward Neural

Network

1

2

2

g12 ( x ) ( x1 x2 ) x ( x1 x2 )

2

t

t

10 2 x1 1

[(100 4) (4 25)]

2 5 x2 2

8 x1 7 x2 37.5

Single-Layer Feedforward Neural

Network

1

2

2

g13 ( x ) ( x1 x3 ) x ( x1 x3 )

2

t

t

10 5

2 5

15 x1 3 x2 27

x1 1

x 2 [(100 4) (25 25)]

2

Single-Layer Feedforward Neural

Network

1

2

2

g 23 ( x ) ( x2 x3 ) x ( x2 x3 )

2

t

t

2 5 x1 1

[(4 25) (25 25)]

5 5 x2 2

7 x1 10 x2 10.5

Single-Layer Feedforward Neural

Network

Now using

wi = x i

1

wi ,n1 xi

2

2

we obtain

10

2

5

w1 2 ; w2 5 ; w3 5 ;

-52

-14.5

-25

Single-Layer Feedforward Neural

Network

and using

gi ( x ) wi x wi ,n1

t

we obtain

g1 ( x ) 10 x1 2 x2 52

g 2 ( x ) 2 x1 5 x2 14.5

g3 ( x ) 5 x1 5 x2 25

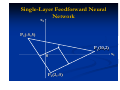

Single-Layer Feedforward Neural

Network

A block diagram producing the three discriminant

functions is shown below:

x1

x2

-1

10

2

52

2

-5

14.5

5

-5

25

10 x1 2 x2 52

2 x1 5 x2 14.5

5 x1 5 x2 25

Single-Layer Feedforward Neural

Network

The discriminant values for the three patterns

P1(10,2), P2(2,-5) and P3(-5,5) are shown in the

table below:

Input

g1(x)=10x1+2x2-52

Class 1

[10 2]t

52

Class 2

[2 -5]t

-42

Class 3

[-5 5]t

-92

g2(x)= 2x1-5x2-14.5

-4.5

14.5

-49.5

g3(x)=-5x1+5x2-25

-65

-60

25

Discriminant

Single-Layer Feedforward Neural

Network

As required by the definition of the discriminant

function, the responses on the diagonal are the

largest in each column. It will be shown later that

the same is true for any three points P1,P2 ,P3 taken

from the three decision regions H1,, H2, H3 provided

that the decision regions are determined as shown

above. Therefore using a maximum selector at the

output will provide the required function from the

network.

Single-Layer Feedforward Neural

Network

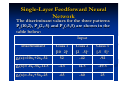

Using the same network with TLUs (bipolar

activation functions) will result in the outputs

given in the table below:

Input

Class 1

[10 2]t

sgn(g1(x)=5x1+3x2-5)

1

Class 2

[2 -5]t

-1

Class 3

[-5 5]t

-1

sgn(g2(x)= -x2-2)

-1

1

-1

sgn(g3(x)=-9x1+x2)

-1

-1

1

Single-Layer Feedforward Neural

Network

The diagonal entries=1

The offdiagonal entries=-1

However, as the next example will demonstrate

this is not true for any three points P1,P2 ,P3 taken

from the three decision regions H1, H2, H3.

Single-Layer Feedforward Neural

Network

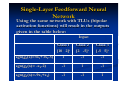

The response of the same network to the patterns

Q1(5,0), Q2(0,1) and Q3(-4,0) are shown in the table below:

Input

Discriminant

g1(x)=10x1+2x2-52

Class 1

[5 0]t

-2

Class 2

[0 1]t

-50

Class 3

[-4 0]t

-92

g2(x)= 2x1-5x2-14.5

-4.5

-19.5

-22.5

g3(x)=-5x1+5x2-25

-50

-20

-5

Single-Layer Feedforward Neural

Network

The responses on the diagonal are still the largest

in each column. However, using the same network

with TLUs (bipolar activation functions) will result

in the outputs given in the table on the next slide:

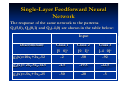

Single-Layer Feedforward Neural

Network

Input

sgn(g1(x)=10x1+2x2-52)

Class 1

[5 0]t

-1

Class 2

[0 1]t

-1

Class 3

[-4 0]t

-1

sgn(g2(x)= 2x1-5x2-14.5)

-1

-1

-1

sgn(g3(x)=-5x1+5x2-25)

-1

-1

-1

Discriminant

Single-Layer Feedforward Neural

Network

It is therefore impossible to use TLUs once the

decision lines are calculated using the

minimum-distance calssification procedure.

The only way out is using a maximum selector.

The explanation of the responses on the diagonal

being the largest in each column will now be made

in detail.

Single-Layer Feedforward Neural

Network

The discriminant functions determine the plane

equations

g1 10 x1 2 x2 52 0

g 2 2 x1 5 x2 14.5 0

g3 5 x1 5 x2 25 0

Single-Layer Feedforward Neural

Network

These planes are shown on the next slide.

It is easily seen that:

For any point in H1 : g1(x)>g2(x) and g1(x)>g3(x)

For any point in H2 : g2(x)>g1(x) and g2(x)>g3(x)

For any point in H3 : g3(x)>g1(x) and g3(x)>g2(x)

Single-Layer Feedforward Neural

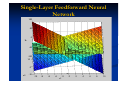

Network

100

50

0

gi

-50

-100

-150

-200

10

5

0

-5

x2

-10

-10

0

-5

x1

5

10

Single-Layer Feedforward Neural

Network

The decision regions H1,H2, H3 are projections of

the planes g1,g2 and g3, respectively, on the x1-x2

plane and the decision lines are the projections of

the intersection lines of the planes gi on the x1-x2

plane which are shown on the next slide.

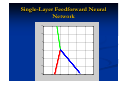

Single-Layer Feedforward Neural

Network

x

2

H3

g13 (x)=0

H1,,

g1 ( x) g 2 ( x)

P3(-5,5)

g1 ( x) g3 ( x)

g3 ( x) g1 ( x)

P123(2.337,2.686)

g3 ( x) g 2 ( x)

0

g 2 ( x) g1 ( x)

g23 (x)=0

H2

g 2 ( x) g3 ( x)

P2(2,-5)

g12 (x)=0

P1(10,2)

x1

Single-Layer Feedforward Neural

Network

A MATLAB plot of the projections of the

intersection lines of the planes gi are shown

on the next slide

Single-Layer Feedforward Neural

Network

30

20

10

0

-10

-20

-30

-30

-20

-10

0

10

20

30

Single-Layer Feedforward Neural

Network

The projections of the intersection lines of the

planes gi on the x1-x2 plane are shown to be given

by the following line equations:

g12 ( x ) 8 x1 7 x2 37.5 0

g13 ( x ) 15 x1 3 x2 27 0

g 23 ( x ) 7 x1 10 x2 10.5 0

The previous slide shows the segments that can

be seen from the top.

Single-Layer Feedforward Neural

Network

The continuation of the line g12=0 remains

underneath the plane g3.

The continuation of the line g23=0 remains

underneath the plane g1.

The continuation of the line g13=0 remains

underneath the plane g2.

Single-Layer Feedforward Neural

Network

A classifier using a maximum selector is shown on

the next slide. The maximum selector selects the

maximum discriminant and responds with the

number of the discriminant having the largest

value.

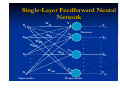

Single-Layer Feedforward Neural

Network

x1

x2

-1

10

g1(x)

2

52

2

-5

14.5

5

-5

25

1

g2(x)

Maximum i=1,2, or 3

2 selector

g3(x)

3

Classifier using the maximum selector

Single-Layer Feedforward Neural

Network

The classifier can be redrawn as follows:

10

x1

g1(x)

2

x2

1

52

-1

2

x1

g2(x) Maximum i=1,2, or 3

5

2 selector

x2

14.5

-1

-5

x1

g3(x)

x2

5

3

25

-1

Classifier using the maximum selector

Single-Layer Feedforward Neural

Network

x1

x2

-1

x1

x2

-1

10

x1

x2

-1

-5

2

g1(x)

52

2

-5

g2(x)

Maximum i=1,2, or 3

2 selector

g3(x)

3

14.5

5

25

1

Classifier using the maximum selector

Single-Layer Feedforward Neural

Network

In the above we have designed a classifier which was

based on the minimumdistance classification for known

clusters and derived the network with three perceptrons

from the discriminant functions which were interpreted

as plane equations. Instead, now let us consider the

network on the next slide which is obtained as a result of

training a network with three perceptrons using the same input

patterns P1(10,2), P2(2,-5) and P3(-5,5) as in the previous network .

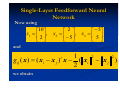

Single-Layer Feedforward Neural

Network

x1

x2

-1

5 x1 3 x2 5

5

TLU#1

3

5

0

-1

2

1

-9

0

x2 2

9x1 x2

TLU#2

TLU#3

Single-Layer Feedforward Neural

Network

In fact gi(x)=0 define the intersection of gi planes

with x1-x2 plane. Therefore the TLU divides the gi

planes into two regions:

(1)the upper-half plane which is above x1-x2 plane

and

(1)the lower-half plane which is below x1-x2 plane.

Single-Layer Feedforward Neural

Network

The decision lines are obtained by setting gi(x)=0

5 x1 3x2 5 0

x2 2 0

9 x1 x2 0

which are given on the next slide. The shaded

areas are indecision regions which will become

clear in the following discussion.

Single-Layer Feedforward Neural

Network

5 x1 3 x2 5 0

g1 (5,5) 15 1

g (5,5) 7 1

2

g 2 (5,5) 50 1

x2

Q1(0,9)

g1 (0,9) 22 1

g (0,9) 29 1

2

g 2 (0,9) 9 1

9 x1 x2 0

P3(-5,5)

P1(10,2)

x1

0

x2 2 0

g1 (1, 3) 19 1

g (1, 3) 1 1

2

g 2 (1, 3) 6 1

g1 (10, 2) 51 1

g (10, 2) 4 1

2

g 2 (10, 2) 88 1

Q2(4,-4)

Q3(-1,-3)

P2(2,-5)

g1 (2, 5) 10 1

g (2, 5) 3 1

2

g 2 (2, 5) 23 1

g1 (4, 4) 3 1

g (4, 4) 2 1

2

g 2 (4, 4) 40 1

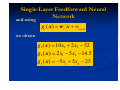

Single-Layer Feedforward Neural

Network

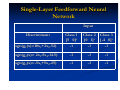

The discriminant values g1(x), g2(x), g3(x) for

the same three patterns P1(10,2), P2(2,-5) and

P3(-5,5) are shown in the table below:

Input

Class 1

[10 2]t

51

Class 2

[2 -5]t

-10

Class 3

[-5 5]t

-15

g2(x)= -x2-2

-4

3

-7

g3(x)=-9x1+x2

-88

-23

50

Discriminant

g1(x)=5x1+3x2-5

Single-Layer Feedforward Neural

Network

The outputs of the network with three discrete

perceptrons are shown in the table below:

Input

Class 1

[10 2]t

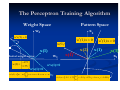

1