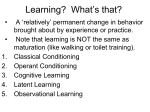

Notes for EDU301

Respondent and Operant Conditioning

A. Classical Conditioning (Respondent Learning)

Pavlov

In the early years of the 20th century, Ivan Pavlov was interested in investigating the process of digestion. In contrast to other researchers, he decided to study the digestive process of experimental animals -- dogs -- while they were awake. He performed simple surgery on the animals and inserted a tube in their salivary glands to record how much saliva they secreted to digest food. What he wanted to know was how much saliva an animal needed to secrete in order to ingest a measured amount of food placed in its mouth. Pavlov eventually won the Nobel Prize in physiology for his work on digestion.

Much to his dismay, Pavlov discovered that the animals would begin to salivate as soon as he walked into the laboratory, that is, before he could even begin the day's experiment. Apparently, the sight of a person in a lab coat was enough to cause salivation, a response Pavlov believed was somehow related to the food the dog was to be fed. He changed the course of his research to investigate these anticipatory responses.

This became his basic paradigm: He would find a neutral stimulus (e.g., a bell) which by itself had no initial effect on the dog's rate of salivation. He would then pair the sound of the bell with a stimulus, presented about 1/2 second later, known to strongly affect the dog's salivation (such as food, in the form of meat powder). Each pairing of the bell -- known as the conditioned stimulus (CS) -- and the food -- the unconditioned stimulus (UCS) -- served as a conditioning trial. Each dog participated in a number of these trials (say 50).

The major question was whether the bell would acquire the ability to evoke salivation on its own. That is, if the dog heard the bell but didn't receive any food, would the dog salivate? In other words, had the dog learned to associate the bell with food and respond by salivating? Of course, the dogs did salivate to the bell.
And because this reaction to the bell began to occur only after the conditioning trials, the salivation was termed a conditioned response (CR). The seemingly automatic, instinctive reaction to the meat was termed an unconditioned response (UCR).

Explanation

Pretrial
UCS ----------> UCR
CS ----------> no response

Posttrial
CS ----------> CR

When the CS is presented alone, without the UCS, the CR occurs. This became known as classical or respondent conditioning.

Definitions

UCS = any strong, biologically important event that elicits a predictable and reflexive reaction. (Note the emphasis on "biologically important event" and "reflexive reaction".)
UCR = the regular, measurable reaction given in response to the UCS. This reaction is unlearned, instinctive, automatic.
CS = an originally neutral cue that acquires the ability to elicit the response after being repeatedly paired or associated with the UCS.
CR = the learned behavior or learned response given to the CS alone. (Although the CR is usually nearly identical to the UCR, it is not always so. For example, while the UCR to food may be salivation and chewing, the CR may only be salivation.)

What about a more "human" example of classical conditioning? For example, is it possible that certain attitudes, values, and beliefs can be classically conditioned? More specifically, can the meaning attached to certain words be classically conditioned?

In the 1950s, Arthur Staats conducted a series of experiments to investigate these issues. In a simple experiment, he employed a group of hungry human subjects. Then he took a number of nonsense syllables, which prior to the experiment elicited no response from the subjects:

UBXM LOCTU OBNIF

He then paired the nonsense syllables with the presentation of food, or pictures of food, or food words (steak, baked potato). In other words, he paired the nonsense syllables with stimuli he knew would elicit salivation in hungry human subjects.
After a certain number of conditioning trials, he was hoping that the neutral stimuli would begin to elicit salivation all on their own. And they did.

Here's an example of a rather novel application of classical conditioning in a school setting: Two educational psychologists (Henderson and Burke) were investigating the problems of minority students and lower-SES students from a school in Michigan. They found that these students were generally reluctant to come to school, somewhat disruptive, and became more so as the school morning progressed. They were particularly a problem in science class, a period that just preceded the lunch break. What Henderson and Burke found was that many of these children were coming to school hungry, without breakfast.

How would we apply the classical conditioning model to what has occurred here?

UCS = lack of food (hunger)
UCR = discomfort, anxiety, tension
CS = school, particularly science
CR = discomfort, rowdiness, dislike of science

What did the researchers suggest as a way to modify these students' attitudes and behavior toward school and, in particular, science? Of course, a school breakfast program.

UCS = breakfast
UCR = pleasure from relief of hunger
CS = school
CR = pleasure

Principles of classical conditioning

What factors affect learning by classical conditioning?

Number of conditioning trials. The more trials, the more likely the CS will elicit a CR regularly. But the relationship levels off; that is, it is curvilinear.

The interval between presentation of the CS and the UCS. The optimal interval for many (but not all) situations seems to be about 1/2 second. The longer the interval, the less likely it is that learning will occur.

Extinction

What happens if, after the CS-CR link has been established, the CS is never paired again with the UCS (the bell with food)? Well, eventually the CR fades and may even disappear. This kind of "unlearning" is known as extinction and depends on many things, including the number of conditioning trials.
But following extinction, if we re-pair the CS and the UCS, conditioning occurs again very quickly. In addition, an extinguished CR often reappears on its own after a rest period, without any further pairing. This is called spontaneous recovery.

Higher-order conditioning

More important to human learning is an understanding of how higher-order learning or associations occur. Does the theory of classical conditioning require that all learning occur via pairing of some neutral stimulus with some "biologically important event" or UCS? No. It is possible for learning to occur in another way: through the pairing of one CS with another CS, or higher-order conditioning.

Remember the bell from Pavlov's experiment. This was the original CS. When it was paired with the UCS for 50 trials, learning occurred: the CS alone elicited the CR. Now, what would happen if we took another neutral stimulus, a light, and paired it with the bell after these 50 conditioning trials? We conduct, say, 20 trials in which the light (CS2) is followed by the bell (CS1).

CS1 (Bell) ------------> CR1 (Salivation)
CS2 (Light) ------------> CR2 (Salivation)

Important: Remember that in this higher-order conditioning experiment, NO UCS, no food, is ever presented to the dog. When CS2 (light) is presented alone, without CS1 (bell), a CR2 (salivation) will occur. Typically, however, the strength of the CR2 is weaker than that of the CR1.

Among dogs and rats and other lower-level organisms, even third-order conditioning is sometimes possible. For example, we could now pair a clicker (CS3) with the light (CS2), and it might eventually come to elicit salivation on its own (CR3).

In humans, classical conditioning theorists believe a vast network of these kinds of higher-order relationships exists. Thus, learning via classical conditioning in humans can be traced back to biological needs, but because of the process of higher-order conditioning, this tracing may be pretty far removed.

Stimulus generalization

What happens when someone develops a conditioned response to a CS? Does the CR happen with a slightly different stimulus?
(E.g., a bell rung at 60 dB vs. one rung at 90 dB.) The more similar the new stimulus is to the original CS, the more likely stimulus generalization will occur.

Discrimination

Although similar stimuli are often associated with similar consequences, this is not always the case. Sometimes, we must make very fine discriminations between stimuli. Within classical conditioning, such an ability develops when one of two similar stimuli (the 60 dB bell) is consistently followed by an unconditioned stimulus, while the other (the 90 dB bell) is not.

Research seems to indicate that humans can learn to make very fine distinctions between stimuli. But there are definite limits. What happens when the situation requires that these limits be exceeded, that is, when humans are asked to make discriminations they can't make?

First, extreme stress may be generated.
Second, such failure to make fine discriminations impairs subjects' ability to make easier discriminations. That is, their performance may get worse.

Some scientists have speculated that life in modern technological society is so stressful because of the continuing requirement that we make many fine, precise discriminations.

Limitations of classical conditioning

It became apparent to many (e.g., Watson) that, despite higher-order conditioning, classical conditioning as a theory of learning was limited to essentially involuntary reflex actions such as salivation or reacting with fear. Furthermore, successful demonstrations of (very) higher-order conditioning were difficult and rare. This was what led Watson and later Skinner to concentrate not on involuntary actions aroused by a previously neutral stimulus, but on voluntary behavior strengthened by reinforcement.

B. Operant or Instrumental Learning

B. F. Skinner -- biggest figure
Early pioneers include E. L. Thorndike (connectionism, laws of learning, and particularly the law of effect).
John B.
Watson -- "pure" behaviorist, non-mentalist, mechanistic, stimulus substitution: any response of which an organism is capable may be linked with any stimulus to which it is sensitive.
Examples of applications include: behavior modification, token economies, contingency contracting, the Personalized System of Instruction (PSI, or Keller plan), teaching machines, programmed instruction, and computer-assisted instruction (CAI).

John B. Watson

The father of behaviorism, who had quite a checkered career:

Passed his doctoral language exams by supposedly studying continuously with the help of Coca-Cola syrup, when it truly was the taste you never tire of (it contained cocaine).
Reputed to have had an affair with a student and resigned his academic position.
Ended up working for an advertising company, using some of the learning principles he had discovered to sell merchandise.

Behaviorism was a Reaction to Introspectionism:

In American psychology at the turn of the century, introspection was a common experimental method: the experimental participant was supposed to describe the mental processes which occurred as he/she participated in research. This was even extended to animal research, where speculations were made about the state of the animal's consciousness. (And we're doing this very thing again!)

Watson thought it would be far more sensible and scientific to concentrate on overt behavior, which could be observed and described objectively. Similar sentiments would later be echoed by B. F. Skinner.

Watson received reports of Pavlov's discoveries with great enthusiasm, claiming that the key to behavior manipulation had been found. Watson helped to popularize Pavlov's research in America and to expand it.

In one of the classic experiments in the psychology of learning, Watson and Rayner (1920) demonstrated that human behavior could be classically conditioned. Watson encouraged an 11-month-old boy, Albert, to play with a white rat, which Albert began to enjoy (the procedure was later repeated with a rabbit).
Then Watson would suddenly hit a steel bar with a hammer just as the child reached for the rat (the sound of the hammer striking steel really frightened the child). Eventually, after repeated pairings of the frightening sound and the rat, the child developed a real fear of the rat even when it was presented alone. Albert was also shown to generalize this fear to anything white and fuzzy. Classical conditioning had been demonstrated in humans.

Other psychologists, most notably Clark Hull, would greatly expand on this pioneering work of Pavlov and Watson in the area of classical conditioning.

Definition and basic clarifications

Operant conditioning is a form of learning in which the presentation of a positive or negative reinforcer following a response alters the rate at which responses are emitted. Note that unlike Thorndike, who studied the speed with which animals could run a maze (solution time), Skinner was more interested in the number of behaviors emitted, or rate of response.

Unlike classical conditioning, where behaviors are elicited by stimuli, in operant conditioning the behaviors are emitted and then reinforced. Thus, a far broader class of behaviors came more readily under the scrutiny of scientific psychology.

Two general principles are associated with operant conditioning:

Any response that is followed by a reinforcing stimulus tends to be repeated.
A reinforcing stimulus is anything that increases the rate with which an operant response occurs.

A famous saying attributed to radical behaviorists: "All behavior is under the control of reinforcement".

In operant conditioning, the emphasis is on behavior and its consequences; the organism must respond in such a way as to produce the reinforcing stimulus. This process also exemplifies contingent reinforcement, because getting the reinforcer is contingent (dependent) on the organism's emitting a certain response.
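The two general principles above can be illustrated with a small simulation. This is only a sketch under stated assumptions, not a model taken from these notes: the function name `simulate_operant`, its parameters, and the update rule (each reinforced response moves the response probability a fixed fraction of the way toward 1.0) are all hypothetical choices made for illustration.

```python
import random


def simulate_operant(trials, p_start, step, contingent, rng):
    """Track the probability of emitting a response across trials.

    Assumption (not from the notes): each reinforced response moves the
    response probability a fraction `step` of the way toward 1.0.
    """
    p = p_start
    history = [p]
    for _ in range(trials):
        emitted = rng.random() < p   # the organism emits the response, or not
        if emitted and contingent:   # the reinforcer follows only an emitted response
            p += step * (1.0 - p)
        history.append(p)
    return history


if __name__ == "__main__":
    reinforced = simulate_operant(200, 0.1, 0.1, True, random.Random(0))
    unreinforced = simulate_operant(200, 0.1, 0.1, False, random.Random(0))
    print("final probability with contingent reinforcement:", round(reinforced[-1], 3))
    print("final probability with no reinforcement:", round(unreinforced[-1], 3))
```

In this sketch the response is never elicited by a stimulus; it is emitted at some base rate, and only its consequences change how often it recurs. Without reinforcement the probability stays at its starting value.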
Some common questions (and equally common answers)

How does operant conditioning differ from classical conditioning?

In operant conditioning the behavior of interest initially appears spontaneously, without being elicited by any known stimuli. The behavior occurs while the organism is "operating" on the environment. It is behavior which is emitted, not elicited, and the consequence of the behavior is the crucial variable.

In CC: stimulus followed by response.
In OC: response followed by stimulus.

Why did Skinner study rats, pigeons, and other low-level animals?

Animal investigations provided an inexpensive and flexible method for conducting investigations of learning. Skinner was interested in generalizable laws of behavior applicable across all sentient beings, not just humans, much as Einstein's theory of relativity attempts to provide universal laws for physics. One of Skinner's greatest works, The Behavior of Organisms (1938), is a grand treatise which exemplifies this principle.

Why does Skinner appear to so actively avoid examining or including mental activity as part of his investigations?

Skinner wanted to avoid the fruitlessness of inquiry so apparent in Titchener's introspectionism. He wished to concentrate on examining things that were directly observable rather than try to make inferences about private, mental events or internal states. Skinner did not dispute the fact that these internal conditions existed, but he felt it was far more profitable to examine, for example, the environmental antecedents of these internal states.

How can Skinner avoid mentioning an organism's need or desire when this is so obviously important as a condition of learning?

Skinner did recognize the importance of what you or I would call "need". However, he elected not to discuss it as an internal state. Instead, he used "length of deprivation" as a way to avoid internal phenomena and concentrate on what could be directly observed and measured.
Since in operant conditioning the organism is free to emit behaviors and then get reinforced, it will not do so when it is satiated, that is, when it has not been deprived.

What are the primary, biological drives?

There are some basic drives which are indisputable: the appetitive drives such as hunger, thirst, and sex. But there may be others, and these are still disputed. For example, curiosity and exploratory behavior may be adaptive behaviors which the organism uses to learn about and control his/her/its environment. Gathering information for mastery purposes may be directly associated with the basic drive or desire to survive, the survival instinct.

Reinforcers and punishment

A positive reinforcer is something of value (food, water, praise, sex) which is added to the situation following the appropriate behavior.

A negative reinforcer is something aversive (shock, loud noise, cold, heat) which is taken away following the appropriate behavior.

In both cases -- negative and positive reinforcers -- the effect is the same: the probability of a response is increased.

Do not confuse negative reinforcement with punishment. Punishment is the addition of an aversive stimulus following an undesired response. The purpose of punishment is to extinguish undesirable behavior, but it normally functions to suppress behavior generally (i.e., its effects are not specific).

Secondary reinforcers

How does something become a reinforcer? We start with stimuli such as food and water that meet certain basic physiological needs. These are called primary reinforcers. Other stimuli become linked with primary reinforcers; for example, we learn that money buys food. These are called secondary reinforcers. They can become as powerful as primary reinforcers. Examples: money, praise, going to movies, dates on Friday, etc. (and token reinforcers, e.g., plastic chips).

Operant techniques for aiding the acquisition of new behaviors and preventing them from extinction: shaping, continuous reinforcement, and partial reinforcement.
Acquisition

The fastest way to teach someone through operant techniques is to give reinforcement continuously.

Shaping

The use of continuous reinforcement at the outset should be accompanied by shaping, or the rewarding of successive approximations to the desired response. At the beginning, anything close to the desired response is reinforced. As training proceeds, the demands for more appropriate responses increase until the organism is responding exactly as originally desired.

Example: You want to reduce your caloric intake to 1500 calories a day from a base rate of 4000. You don't just go from 4000 immediately to 1500, but proceed in successive approximations: 3500, 3000, 2500, 2000, 1500.

Fading is the opposite technique, used to extinguish a behavior by gradual approximation (e.g., systematic desensitization).

Schedules of reinforcement

The use of partial reinforcement results in behaviors that persist longer without reinforcement than behaviors trained with continuous reinforcement. Partial reinforcement may occur in either of two ways:

It may be based on the number of responses: a ratio schedule.
It may be based on the time interval between reinforcements: an interval schedule.

A further variation may be introduced by making the partial reinforcement schedule either fixed or variable, which gives four schedules:

fixed ratio
fixed interval
variable ratio
variable interval

Fixed ratio

Reinforcement occurs after a fixed number of responses, say, 1 in 5 or 1 in 100.

Fixed ratio schedules were used in CAI and programmed material: after every fifth correct response, the computer says "You're doing fine".

Fixed ratio schedules result in a stable rate and timing of responding, especially when the ratio of reinforcements to responses is low (e.g., 1/1000).

Often, the instructor starts with continuous reinforcement and switches to an intermittent schedule, eventually lowering the ratio.

Variable ratio

Here reinforcers are given on a certain average ratio, but each individual reinforcer comes after a different number of correct responses.
The ratio could be 1:5, but you could have reinforcement on trials 1, 3, 15, 17, 19, and 35, so that it averages roughly 1:5.

People on variable ratio schedules emit more responses than people on a fixed ratio schedule.

Behavior maintained on a variable ratio schedule is the hardest to extinguish. Slot machines operate on variable ratio schedules.

Fixed interval

The number of responses is not important. Reinforcement occurs after the first correct response following a fixed time period (e.g., 1 minute, 1 hour, 10 days, etc.).

After reinforcement on this schedule, there is a period of decreased responding. Just before the next reinforcement, there is increased responding. This is called the scalloping effect.

Variable interval

Reinforcers are separated by time periods that vary around an average. Responses are quite regular here, with no scalloping. Responding lasts a long time after reinforcement is withdrawn.

Stimulus control

Not all behaviors are appropriate at all times. For example, a student should only give an answer when called on by the teacher. If you use operant techniques, the cues for behavior should be clear, hence stimulus control.

Suppose a certain stimulus occurs prior to the emission of a given response, and then the response is reinforced. Imagine also that when the stimulus is not present, the response is not reinforced. Example: A flashing light tells a rat when bar pressing will lead to reinforcement.

When the behavior is emitted more frequently after the given stimulus, it is said to be under stimulus control. The controlling stimulus is like a cue for responding. In some ways, it is like a CS. However, the behavior is not elicited by the cue; rather, the stimulus sets the stage for the behavior. (It has no reinforcing properties.)

Premack principle (David Premack, 1959)

What kinds of stimuli can serve as reinforcers? Only events or activities which the subject greatly enjoys? No, Premack showed that reinforcements are relative.

Premack's principle (AKA Grandma's rule): An activity more preferred at Time X can reinforce an activity less preferred at Time X.
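The four partial reinforcement schedules described earlier can be sketched in code. This is a minimal illustration, not a standard implementation: the function names are hypothetical, and the way the variable schedules draw their requirements (uniformly around the stated average) is an assumption, since the notes say only that the requirement varies around an average.

```python
import random


def fixed_ratio(n_responses, ratio):
    """Return the response numbers (1-based) reinforced when every
    `ratio`-th response earns a reinforcer."""
    return [i for i in range(1, n_responses + 1) if i % ratio == 0]


def variable_ratio(n_responses, mean_ratio, rng):
    """Reinforce after a varying number of responses that averages mean_ratio.

    Assumption: each requirement is drawn uniformly from 1..(2*mean_ratio - 1).
    """
    reinforced = []
    needed = rng.randint(1, 2 * mean_ratio - 1)
    count = 0
    for i in range(1, n_responses + 1):
        count += 1
        if count == needed:
            reinforced.append(i)
            count = 0
            needed = rng.randint(1, 2 * mean_ratio - 1)
    return reinforced


def fixed_interval(response_times, interval):
    """Reinforce the first response made at least `interval` time units
    after the previous reinforcer (timing starts at 0)."""
    reinforced = []
    last = 0.0
    for t in response_times:
        if t - last >= interval:
            reinforced.append(t)
            last = t
    return reinforced


def variable_interval(response_times, mean_interval, rng):
    """Like fixed_interval, but the required wait varies around mean_interval.

    Assumption: each wait is drawn uniformly from 0..(2*mean_interval).
    """
    reinforced = []
    last = 0.0
    wait = rng.uniform(0, 2 * mean_interval)
    for t in response_times:
        if t - last >= wait:
            reinforced.append(t)
            last = t
            wait = rng.uniform(0, 2 * mean_interval)
    return reinforced


if __name__ == "__main__":
    rng = random.Random(0)
    times = [10, 50, 70, 90, 140, 200]  # hypothetical response times in seconds
    print("FR 1:5 :", fixed_ratio(20, 5))
    print("VR 1:5 :", variable_ratio(20, 5, rng))
    print("FI 60 s:", fixed_interval(times, 60))
    print("VI 60 s:", variable_interval(times, 60, rng))
```

With these definitions, `fixed_ratio(20, 5)` reinforces responses 5, 10, 15, and 20, and `fixed_interval([10, 50, 70, 90, 140, 200], 60)` reinforces the responses at 70, 140, and 200 seconds. Note that on the interval schedules, extra responses made before the interval has elapsed earn nothing, which is why the number of responses is not important there.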
Classroom applications of operant conditioning

On November 11, 1953, Skinner visited his daughter's classroom. He found that the teacher was violating just about every principle known about the learning process. To Skinner, these operant principles were threefold:

The information to be learned should be presented in small steps.
The learners should be given immediate feedback about whether they have learned.
Learners should proceed at their own pace.

We should also add: learners should be positively reinforced when they learn or act correctly, and not punished when they fail to learn or act incorrectly.

Applications

Programmed instruction and teaching machines
Contingency contracting
Behavior therapy (e.g., systematic desensitization)
Behavioral/learning objectives
Fred Keller's Personalized System of Instruction

Some Criticisms

Verbal behavior
Noam Chomsky criticized behaviorism for failing to account for the conditioning of much verbal behavior.

Perceptions of control
Deci & Ryan -- mastery vs. pawn orientation
Bandura -- self-efficacy beliefs
Weiner -- attribution theory

Classroom Rewards: Behaviorism and the Dark Side

Attacks on the use of operant principles for classroom instruction began with Skinner's debates with the humanist Carl Rogers:

Rogers (Freedom to learn)
Skinner (Learning to be free)

The criticism continues today (e.g., Alfie Kohn): operant techniques are attacked as undermining the natural curiosity, intrinsic interest, and creativity of children.

One of the most influential lines of research centered on the effects of extrinsic rewards on subsequent intrinsic interest (Lepper, Greene, & Nisbett). Children were allowed to play with toys they liked. Some children were told that doing so would get them rewards. Others received no rewards. Following the experimental phase, the children were observed in a free-choice situation. The rewarded group showed less interest in the toys, even when the amount of activity in the experimental period was controlled for.
Over-justification hypothesis

Recent research and the meta-analysis of Cameron & Pierce:

Informational rewards vs. controlling rewards
Task-contingent vs. performance-contingent rewards
Rewards are required when the initial interest of learners is low.

In certain teaching situations, the UCS and UCR are not truly unconditioned or instinctive but, through long association, take on UCS and UCR properties.