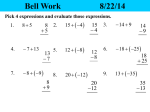

Computational Vision, CSCI 363, Fall 2012
Lecture 31: Heading Models

Motion over a Ground Plane

Image Motion for Translation plus Rotation
[Figure: flow field for translation with rotation.]

Models for Computing Observer Motion
1. Error minimization: Minimize the squared difference between the measured image velocity $(v_x, v_y)$ and the velocity predicted from the translation parameters:

$$\left(v_x - \frac{-T_X + xT_Z}{Z}\right)^2 + \left(v_y - \frac{-T_Y + yT_Z}{Z}\right)^2$$

2. Template models: Use a group of "templates" that correspond to the flow fields that would be created by a given set of translation and rotation parameters. Find the template that best matches the flow field.
3. Motion parallax models: Make use of the fact that image velocities due to translation depend on depth Z, whereas image velocities due to rotation do not.

Image Velocities (Longuet-Higgins and Prazdny, 1980)
[Figure: observer coordinate system with origin O, axes X, Y, Z, translations T_X, T_Y, T_Z and rotations R_X, R_Y, R_Z; scene point P projects to image point p = (x, y). Panels labeled Far, Near, Diff.]

The x and y components of image velocity:

$$v_x = \frac{-T_X + xT_Z}{Z} - (1 + x^2)R_Y + xyR_X + yR_Z$$

$$v_y = \frac{-T_Y + yT_Z}{Z} + (1 + y^2)R_X - xyR_Y - xR_Z$$

The first term of each equation is the translation component, which is depth dependent. The remaining terms are the rotation component, which is depth independent.

Neurons vs. Pure Math
[Figure: asymmetric surround and circularly symmetric surround; difference vectors.]

Middle Temporal Area:
1. Spatially extended receptive fields.
2. Response is tuned to speed and direction.
3. Center and surround tend to have the same best direction.

Vector Subtraction:
1. Vector subtraction at a single point.
2. Accurate velocity measurements assumed.
3. Vectors may differ substantially in direction.

Motion Subtraction by Neurons
[Figure: odd-symmetric and even-symmetric operators.]

Computing Heading
[Figure: visual field, operator group, receptive field spacing.]

Layer 2 is a Template
[Figure: translational heading template; maximally responding operators.]

Template and MST cells both:
1. Have large receptive fields.
2. Respond to expansion/contraction.
3. Are tuned to the center of expansion.

Motion toward a 3D Cloud
[Figure: observer approaching a 3D cloud of points.]

Translation + Rotation Flow Field

Operator Responses

Model Response
[Figure: model heading estimates.]

Moving Objects
Demo

Heading Bias (deg): Model vs. Experiment

Response Bias, Model vs.
Psychophysical Data
[Figure: response bias (deg) versus center position (deg) for right and left object motion; curves for psychophysics and model.]

Radial Optic Flow Field
[Figure: scene and focus of expansion (FOE).]

Lateral Flow Field

Illusory Center of Expansion (Duffy and Wurtz, 1993)
[Figure: scene focus of expansion (FOE) and perceived focus of expansion.]
Demo

Difference Vectors for Illusion
[Figure: center of difference vectors.]

Model Response to Illusion
[Figure: estimated center versus lateral dot speed; calculated and model curves.]

Model vs. Human Response
[Figure: estimated center versus lateral dot speed; average, calculated, and model curves.]

Conclusions
1. A model based on motion subtraction done by neurons in MT can accurately compute observer heading in the presence of rotations.
2. The model shows biases in the presence of moving objects that are similar to the biases shown by humans.
3. The model responds to an illusory stimulus in the same way that people do.
4. The fact that the model responds in the same way as humans to stimuli for which it was not designed provides evidence that the human brain uses a mechanism similar to that of the model to compute heading.

How does the brain process heading?
• It is not known how the brain computes observer heading, but there are numerous models and hypotheses.
• One of the simplest ideas is based on template models: neurons in the brain are tuned to patterns of velocity input that would result from certain observer motions.
• Support for this idea:
  • Tanaka, Saito and others found cells in the dorsal part of the Medial Superior Temporal area (MSTd) that respond well to radial, circular or planar motion patterns.
  • Since then, people have assumed that MSTd is involved in heading computation.
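The template idea described in this lecture can be illustrated with a small sketch. It is not the lecture's model or a model of MSTd cells: it assumes pure translation (no rotation), uses a hypothetical grid of image points and candidate headings, and scores each candidate by the summed cosine between the observed flow direction and the radial direction its template predicts. All function and variable names are made up for the illustration.

```python
import math

def radial_flow(foe, points):
    """Unit flow directions predicted for pure translation toward `foe`."""
    template = []
    for x, y in points:
        dx, dy = x - foe[0], y - foe[1]
        norm = math.hypot(dx, dy)
        # At the focus of expansion itself the direction is undefined.
        template.append((dx / norm, dy / norm) if norm > 0 else (0.0, 0.0))
    return template

def template_response(template, flow):
    """Sum of cosines between template directions and observed flow directions."""
    total = 0.0
    for (tx, ty), (vx, vy) in zip(template, flow):
        speed = math.hypot(vx, vy)
        if speed > 0:
            total += (tx * vx + ty * vy) / speed
    return total

def best_heading(flow, points, candidates):
    """Return the candidate focus of expansion whose template responds most."""
    return max(
        candidates,
        key=lambda foe: template_response(radial_flow(foe, points), flow),
    )

# Hypothetical sample locations and candidate headings (image coordinates).
points = [(x / 10.0, y / 10.0) for x in range(-5, 6) for y in range(-5, 6)]
candidates = [(cx / 10.0, cy / 10.0)
              for cx in range(-4, 5, 2) for cy in range(-4, 5, 2)]

# Synthetic expansion field around a known focus of expansion.
true_foe = (0.2, -0.2)
observed = [((x - true_foe[0]) * 0.5, (y - true_foe[1]) * 0.5)
            for x, y in points]

estimate = best_heading(observed, points, candidates)
```

Because the score uses only flow direction, it ignores speed, and therefore depth. Adding rotation to the observed field would bias the estimate unless the templates also covered rotation parameters.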
Visual Pathway

Types of Responses in MSTd
(from Duffy & Wurtz, 1991)

Combinations of Patterns
• Duffy and Wurtz (1991) tested cell responses to planar, circular and radial patterns.
• They found that some cells responded to only one type of pattern (e.g. only to circular). Others responded to two or three types of patterns (e.g. both planar and circular).

Single Component: Radial, Circular, Planar
Double Component: Plano-Radial, Plano-Circular
Triple Component: Plano-Circulo-Radial

• They did not suggest a model of how these cells might be involved in heading detection.
• They also showed that MST receptive fields are not built in any simple way from the receptive fields of their MT inputs.

Spiral Patterns
Graziano et al. (1994) showed that MSTd cells respond to spiral patterns of motion.

Does MST compute heading?
Prediction: If MST is involved in heading computation, one would expect to find cells tuned to a particular position of the center of expansion. Duffy and Wurtz (1995) tested this prediction.

Do MSTd cells use eye-movement information?
• Psychophysical experiments showed that humans can make use of eye-movement information to compute heading.
• Some MSTd cells have responses that are modulated by eye movements.
• Do eye movements affect the responses of MSTd cells to compensate for rotation?
• This was tested in an experiment by Bradley et al. (1996).
• They recorded from MSTd cells while showing flow fields that consisted of an expansion plus a rotation. The rotation was generated by real or simulated eye movements.

Real eye movement condition

Simulated eye movement condition

Results
[Figure panels: no eye movement; eye movement in preferred direction; eye movement in anti-preferred direction.]
This cell seems to take eye movements into account. The effect was not consistent among all cells tested.
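The sketch below illustrates what "compensating for rotation" means for a flow field. It is not a model of MSTd cells or of the Bradley et al. experiment. It uses the Longuet-Higgins and Prazdny image velocity equations from this lecture, and assumes the rotation is known exactly (as an eye-movement signal might provide). The grid of points, the depths, the motion parameters, and the least-squares `focus_of_expansion` helper are all hypothetical choices for the illustration.

```python
def rotational_flow(x, y, R):
    """Depth-independent rotation terms of the image velocity equations."""
    RX, RY, RZ = R
    vx = -(1 + x * x) * RY + x * y * RX + y * RZ
    vy = (1 + y * y) * RX - x * y * RY - x * RZ
    return vx, vy

def image_velocity(x, y, Z, T, R):
    """Image velocity at (x, y) for depth Z, translation T and rotation R."""
    TX, TY, TZ = T
    rx, ry = rotational_flow(x, y, R)
    return (-TX + x * TZ) / Z + rx, (-TY + y * TZ) / Z + ry

def focus_of_expansion(samples):
    """Least-squares point (x0, y0) that every flow vector points away from.

    Each sample (x, y, vx, vy) contributes the constraint
    vy * x0 - vx * y0 = vy * x - vx * y.
    """
    saa = sab = sbb = sac = sbc = 0.0
    for x, y, vx, vy in samples:
        a, b, c = vy, -vx, vy * x - vx * y
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    return (sac * sbb - sbc * sab) / det, (saa * sbc - sab * sac) / det

# Hypothetical observer motion: forward translation heading toward image
# point (0.1, 0.0), plus a rotation about the vertical (Y) axis.
T = (0.1, 0.0, 1.0)
R = (0.0, 0.05, 0.0)

raw_samples = []
compensated_samples = []
for ix in range(-5, 6):
    for iy in range(-5, 6):
        x, y = ix / 10.0, iy / 10.0
        Z = 2.0 + (ix % 3)  # depths of 2, 3 or 4 units
        vx, vy = image_velocity(x, y, Z, T, R)
        raw_samples.append((x, y, vx, vy))
        # Subtract the rotational flow predicted from the known rotation.
        rx, ry = rotational_flow(x, y, R)
        compensated_samples.append((x, y, vx - rx, vy - ry))

raw_estimate = focus_of_expansion(raw_samples)
compensated_estimate = focus_of_expansion(compensated_samples)
```

With these values, the estimate from the raw flow is displaced in x from the true heading at (0.1, 0.0), while the estimate from the compensated flow recovers it up to floating-point error.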