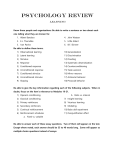

LEARNING AND INFORMATION PROCESSING
PSY 111
LECTURER: MRS. T.N. TUTURU

THIS COURSE IS COMPOSED OF TWO PARTS
• The first part looks at learning.
• The second part looks at information processing.
• The two parts are connected; they are not totally separate.

WHAT IS LEARNING?
• Santrock (2003) defines learning as: "Learning is a process by which experience produces a relatively enduring change in an organism's behaviour or capabilities."

WHAT IS INFORMATION PROCESSING?
• Information processing refers to the mental operations by which our sensory experiences are converted into knowledge. It focuses on the ways in which we receive, integrate, retain and use information.
• According to the information processing viewpoint, three stages are involved in acquiring every relatively permanent memory:
i) Encoding
ii) Storage
iii) Retrieval

NOW BACK TO LEARNING
LET'S LOOK AT THE FOUR FACTORS THAT FORM THE DEFINITION OF LEARNING
1) Learning is inferred from a change in behaviour/performance
2) Learning results in an inferred change in memory
3) Learning is the result of experience
4) Learning is relatively permanent

MORE DEFINITIONS OF LEARNING
• Wood & Wood (2000) define learning as "a relatively permanent change in behaviour, knowledge, capability or attitude that is acquired through experience and cannot be attributed to illness, injury or maturation".
• Gross (2005): "Learning therefore, normally implies a fairly permanent change in a person's behavioural performance."
• Ormrod (1995) defines learning as a relatively permanent change in mental associations due to experience.

Theories of learning
• These can be classified into three broad groups:
1. Behaviourist/Connectionist: chiefly concerned with stimulus (S) and response (R) connections.
2. Cognitivist: based on the belief that the inner functions of humans are also worthy of study.
The brain, perception, memory, personality and motivation are but a few of the internal mental structures and processes which affect human behaviour (Child, 2004).
3. Social Learning Theory: focuses on the learning that occurs within a social context. It considers that people learn from one another, and includes such concepts as observational learning, imitation and modelling.

Behaviourist Theories
• The Behavioural Learning Theory focuses on the learning of tangible, observable behaviours or responses. Through a continual process of stimulating a desired response and reinforcing that response, the learner eventually changes their behaviour to match it.
• Learning occurs in the most basic way, and it is something that we can see. As long as the desired behaviour is occurring, learning has occurred.
• That is not to say that no mental process is involved in learning the behaviour, but the behavioural theorists do not address this mental process. They limit their explanation to the rudimentary behavioural change and do not really acknowledge that the change in behaviour might require in-depth mental processes to bring it about.

Cognitive Theories
• The Cognitive Learning Theory focuses not on behavioural outcomes but on the thought processes involved in human learning.
• These theorists make a distinction between learning and memory. Learning is viewed as the acquisition of new information. Memory, on the other hand, is the ability to recall information that has previously been learned.
• Cognition is the process by which we receive information, process it, and then do something with it, either discarding it or keeping it.
• Information is everything. The weather, the time, the lighting and what is on the chalkboard are all information that could be processed concurrently.
How we take in these pieces of information and filter or encode them, so that (hopefully) only the information on the chalkboard is remembered, is part of our cognitive learning process.

The Social Learning Theory
• Social Learning Theory (SLT) describes how the environment affects a person's behaviour. SLT is not simply a combination of the behavioural and cognitive views; rather, it rejects the idea that either behaviour or cognition occurs alone, without the effect of outside stimuli.
• The general principles of SLT are that people learn by observing; that learning can occur without a change in behaviour; and that the consequences of behaviour and cognition both play a role in learning.

THE BEHAVIOURISTS
CLASSICAL CONDITIONING: I.P. PAVLOV
• One important type of learning, classical conditioning, was discovered accidentally by Ivan Pavlov (1849-1936). Pavlov was a Russian physiologist who discovered this phenomenon while doing research on digestion.
• His research was aimed at better understanding the digestive patterns of dogs. During his experiments he would put meat powder in the mouths of dogs that had tubes inserted into various organs to measure bodily responses.
• He discovered that the dogs began to salivate before the meat powder was presented to them. Later, the dogs began to salivate as soon as the person feeding them entered the room.
• He became interested in this phenomenon and abandoned his digestion research in favour of his now famous classical conditioning study.

CLASSICAL CONDITIONING
• Pavlov began pairing a bell sound with the meat powder and found that, even when the meat powder was not presented, the dog would eventually begin to salivate after hearing the bell.
• Since the meat powder naturally results in salivation, the meat powder is called the unconditioned stimulus (UCS) and the salivation it produces the unconditioned response (UCR).
• The link between the bell and salivation does not occur naturally; the dog was conditioned to respond to the bell. Therefore, the bell is considered the conditioned stimulus (CS), and the salivation to the bell the conditioned response (CR).

CLASSICAL CONDITIONING
Stage 1 (before learning): Food (unconditioned stimulus) --> Salivation (unconditioned response)
Stage 2 (during learning): Bell (conditioned stimulus) + Food (unconditioned stimulus) --> Salivation (unconditioned response)
Stage 3 (after learning): Bell (conditioned stimulus) --> Salivation (conditioned response)

Terminology in Classical Conditioning
a) Unconditioned Stimulus (US, written UCS elsewhere in these notes) - a stimulus that evokes an unconditioned response without any prior conditioning (no learning is needed for the response to occur).
b) Unconditioned Response (UR, also written UCR) - an unlearned reaction to an unconditioned stimulus that occurs without prior conditioning.
c) Conditioned Stimulus (CS) - a previously neutral stimulus that has, through conditioning, acquired the capacity to evoke a conditioned response.
d) Conditioned Response (CR) - a learned reaction to a conditioned stimulus that occurs because of prior conditioning.

Basic Principles: In Classical Conditioning
• Acquisition - the formation of a new CR tendency. When an organism learns something new, that response has been "acquired".
• Pavlov believed in contiguity - the temporal association between two events that occur closely together in time. The more closely in time two events occur, the more likely they are to become associated; as the time between them grows, association becomes less likely.
• For example, suppose you are toilet training a child and notice that the child has messed himself on the way to the bathroom. If the accident happened hours ago, it will not do any good to scold the child, because too much time has passed for the child to associate your scolding with the accident. But if you catch the child right after the accident, the scolding is more likely to become associated with it.
Four different ways conditioning can occur, according to the order in which the CS and US are presented:
• 1. Delayed (forward) conditioning - the CS is presented before the US and stays on until the US is presented. This is generally the most effective arrangement, especially when the delay is short. Example: a bell begins to ring and continues to ring until food is presented.
• 2. Trace conditioning - a discrete CS is presented and ends, then the US occurs. The shorter the interval the better, but this approach is less effective than delayed conditioning. Example: a bell begins ringing and ends just before the food is presented.
• 3. Simultaneous conditioning - the CS and US are presented together. Not very effective. Example: the bell begins to ring at the same time the food is presented. Both begin, continue and end at the same time.
• 4. Backward conditioning - the US occurs before the CS. This is not really effective. Example: the food is presented, then the bell rings.

Basic Principles: In Classical Conditioning
• Extinction - a gradual weakening and eventual disappearance of the CR tendency. Extinction results from multiple presentations of the CS without the US.
• Essentially, the organism continues to be presented with the conditioned stimulus, but without the unconditioned stimulus the CS loses its power to evoke the CR. For example, Pavlov's dogs stopped salivating when the sound kept occurring without the meat powder following.
• Spontaneous Recovery - sometimes a response that had been extinguished reappears. The recovery can occur after a period of non-exposure to the CS. It is called spontaneous because the response seems to reappear out of nowhere.
• Stimulus Generalization - a response to a specific stimulus becomes associated with other, similar stimuli and now occurs to those similar stimuli as well.
• For example, a child who gets bitten by a dog later becomes afraid of all dogs. The original fear evoked by one dog has now generalized to ALL dogs.
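The four orderings of CS and US listed above (delayed, trace, simultaneous, backward) differ only in when each stimulus starts and stops, so they can be told apart from three timestamps. The sketch below is only an illustration: the function name `classify_pairing` and the use of seconds as the time unit are assumptions, not part of the lecture.

```python
def classify_pairing(cs_on, cs_off, us_on):
    """Name the temporal arrangement of a single CS-US pairing.

    cs_on / cs_off: when the CS (e.g. the bell) starts and stops, in seconds.
    us_on: when the US (e.g. the food) is presented, in seconds.
    """
    if us_on < cs_on:
        return "backward"       # the US occurs before the CS
    if us_on == cs_on:
        return "simultaneous"   # CS and US are presented together
    if cs_off < us_on:
        return "trace"          # the CS ends, then the US occurs after a gap
    return "delayed"            # the CS starts first and stays on until the US


# Bell rings from t=0 to t=5 and the food arrives at t=5.
print(classify_pairing(cs_on=0, cs_off=5, us_on=5))  # delayed
# Bell rings from t=0 to t=3 and the food arrives at t=5.
print(classify_pairing(cs_on=0, cs_off=3, us_on=5))  # trace
```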
Little Albert and generalization
• John Watson and Rosalie Rayner conditioned a baby (Albert) to be afraid of a white rat by showing Albert the rat and then striking a steel bar with a hammer behind Albert's head. The bar produced a very loud, sudden noise that frightened Albert and made him cry.
• They did this several times (multiple trials) until Albert was afraid of the rat. Previously he had shown no fear of it and would reach out to touch it. After conditioning, the sight of the rat alone made Albert cry.
• Watson then found that Albert showed similar fear of other white, furry things, including a rabbit, a fur coat and a Santa Claus mask with a white beard. So the fear evoked by the white, furry rat had generalized to other white, furry stimuli.

Basic Principles: In Classical Conditioning
• Stimulus Discrimination - learning to respond to one stimulus and not another. Thus, an organism becomes conditioned to respond to a specific stimulus and not to other stimuli.
• For example, a puppy may initially respond to lots of different people, but over time it learns to respond to only one or a few people's commands.
• Higher Order Conditioning - an established CS can be used to condition a response to another neutral stimulus, which then becomes a CS itself. There are a couple of different orders or levels. Let's take a "Pavlovian dog-like" example to look at the different orders.

Higher Order Conditioning
• In this example, a light is paired with food. The food is a US since it produces a response without any prior learning.
Then, when the food is repeatedly paired with a neutral stimulus (the light), the light becomes a conditioned stimulus (CS). A second neutral stimulus (a tone) can then be paired with the light.
• First order:
1) light + US (food) --> UR (salivation)
2) light alone --> CR (salivation)
• Second order:
3) tone + light --> CR (salivation)
4) tone alone --> CR (salivation)

Higher Order Conditioning
• Higher order conditioning: conditioning that occurs when a neutral stimulus is paired with an existing conditioned stimulus, becomes associated with it, and gains the power to elicit the same conditioned response (Wood & Wood, 2000).

Classical Conditioning in Everyday Life
• One of the great things about conditioning is that we can see it all around us. Here are some examples of classical conditioning that you may see:
1. Conditioned fear and anxiety - many phobias that people experience are the results of conditioning.
• For example, a fear of bridges can develop from many different sources. Suppose that while a child rides in a car over a dilapidated bridge, his father makes jokes about the bridge collapsing and all of them falling into the river below. The father finds this funny and so decides to do it whenever they cross the bridge. Years later, the child has grown up and is afraid to drive over any bridge. In this case, the fear of one bridge has generalized to all bridges, which now evoke fear.
• Classical conditioning can also be used to help people reduce fears. Counter conditioning involves pairing the CS that elicits the fear with a US that elicits positive emotions (UR). For example, a person who is afraid of snakes but loves chocolate ice cream is shown a snake and then given the ice cream. Classical conditioning helps associate the snake with good feelings.
2. Classical conditioning (CC) has a great deal of survival value for the individual (Vernoy, 1995). Because of CC we jerk our hands away before they are burnt by fire.
3. Advertising - modern advertising strategies evolved from John Watson's use of conditioning. The approach is to link an attractive US with a CS (the product being sold) so that the consumer will feel positively toward the product, just as they do toward the US.
US (attractive person) --> CS (car) --> CR/UR (pleasant emotional response)
4. CC is not restricted to unpleasant emotions such as fear. Other things in our lives that produce pleasure because they have been conditioned might include the sight of a rainbow or hearing a favourite song. If you have a positive romantic experience, the location in which the experience took place can become a CS through the pairing of the place with the event (UCS).
5. CC can be involved in certain aspects of drug use. When drugs are administered in particular circumstances, at a particular time of day or in a particular location, the body reacts in anticipation of receiving the drug.

FACTORS INFLUENCING CC: Wood & Wood (2000)
1. How reliably the CS predicts the UCS: the neutral stimulus must reliably predict the occurrence of the UCS. A smoke alarm that never goes off except in response to a fire will elicit more fear when it sounds than one that occasionally gives false alarms.
2. The number of pairings of the CS and the UCS: the greater the number of pairings, the stronger the conditioned response. But one pairing is all that is needed to classically condition a taste aversion or a strong emotional response.
3. The intensity of the UCS: if a CS is paired with a very strong UCS, the CR will be stronger and will be acquired more rapidly than if it is paired with a weaker UCS.
4. The temporal relationship between the CS and the UCS: conditioning takes place faster if the CS occurs shortly before the UCS. It takes place more slowly, or not at all, when the two stimuli occur at the same time. Conditioning rarely takes place when the CS follows the UCS.
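Factors 2 and 3 above, together with the earlier description of extinction, can be made concrete with a toy simulation. The update rule used here (on each trial the CR strength moves a fixed fraction of the way toward the level the UCS supports) is a simplifying assumption chosen for illustration and is not taken from the lecture; the names `run_trials`, `ucs_intensity` and `learning_rate` are likewise hypothetical. The sketch does not capture one-trial learning or spontaneous recovery.

```python
def run_trials(strength, n_trials, ucs_intensity, learning_rate=0.3):
    """Return the CR strength after each of n_trials presentations of the CS.

    ucs_intensity > 0 models a CS-UCS pairing (acquisition);
    ucs_intensity == 0 models the CS presented alone (extinction).
    """
    history = []
    for _ in range(n_trials):
        # Assumed rule: close a fixed fraction of the gap between the
        # current strength and the level supported by the UCS.
        strength += learning_rate * (ucs_intensity - strength)
        history.append(strength)
    return history


weak = run_trials(0.0, 10, ucs_intensity=0.5)      # CS paired with a weak UCS
strong = run_trials(0.0, 10, ucs_intensity=1.0)    # CS paired with a strong UCS
extinction = run_trials(strong[-1], 10, ucs_intensity=0.0)  # CS alone

print(round(weak[-1], 2), round(strong[-1], 2))  # the stronger UCS gives the stronger CR
print(round(extinction[-1], 2))                  # the CR has faded toward zero
```

Under these assumptions, more pairings and a more intense UCS both yield a stronger CR, and repeated CS-alone trials weaken it gradually rather than all at once.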
OPERANT CONDITIONING
• In the late nineteenth century, psychologist Edward Thorndike proposed the law of effect. The law of effect states that any behaviour that has good consequences will tend to be repeated, and any behaviour that has bad consequences will tend to be avoided.
• In the 1930s, another psychologist, B. F. Skinner, extended this idea and began to study operant conditioning. Operant conditioning is a type of learning in which responses come to be controlled by their consequences. Operant responses are often new responses.
• Operant conditioning can be defined as a type of learning in which voluntary (controllable, non-reflexive) behaviour is strengthened if it is reinforced and weakened if it is punished (or not reinforced).
• The term "operant" refers to how an organism operates on the environment; hence, operant conditioning comes from how we respond to what is presented to us in our environment. It can be thought of as learning due to the natural consequences of our actions.

Skinner's views of Operant Conditioning
• a) Operant conditioning differs from classical conditioning in that the behaviours studied in classical conditioning are reflexive (for example, salivating), whereas the behaviours governed by the principles of operant conditioning are non-reflexive (for example, gambling). So, compared to classical conditioning, operant conditioning attempts to predict non-reflexive, more complex behaviours and the conditions in which they will occur. In addition, operant conditioning deals with behaviours that are performed so that the organism can obtain reinforcement.
• b) Many factors are involved in determining whether an organism will engage in a behaviour. Just because there is food does not mean an organism will eat (it depends on the time of day, the last meal, etc.). So, unlike in classical conditioning (see c):
• c) In operant conditioning, the organism has a lot of control.
Just because a stimulus is presented does not necessarily mean that an organism is going to react in any specific way. Instead, reinforcement is dependent on the organism's behaviour. In other words, in order for an organism to receive some type of reinforcement, it must behave in a specific manner. For example, you can't win at a slot machine unless several things happen; most importantly, you pull the lever. Pulling the lever is a voluntary, non-reflexive behaviour that must be exhibited before reinforcement (hopefully a jackpot) can be delivered.

Skinner's views of Operant Conditioning
• d) In classical conditioning, the controlling stimulus comes before the behaviour. In operant conditioning, the controlling stimulus comes after the behaviour. In Pavlov's meat powder example, the sound occurred (controlling stimulus), the dog salivated, and then the meat powder was delivered. With operant conditioning, the sound would occur, then the dog would have to perform some behaviour in order to get the meat powder as a reinforcement (like making a dog sit to receive a bone).
• e) Skinner Box - a chamber in which Skinner placed animals such as rats and pigeons to study them. The chamber contains either a lever or a key that can be pressed in order to receive reinforcements such as food and water.
• The Skinner Box made possible the free operant procedure: responses can be made and recorded continuously, without the need to stop the experiment for the experimenter to record the responses made by the animal.
• f) Shaping - an operant conditioning method for creating an entirely new behaviour by using rewards to guide an organism toward a desired behaviour through successive approximations. The organism is rewarded for each small advancement in the right direction.
Once one appropriate behaviour is made and rewarded, the organism is not reinforced again until it makes a further advancement, then another and another, until the organism is rewarded only once the entire behaviour is performed.

Principles of Reinforcement
• Reinforcement is the delivery of a consequence that increases the likelihood that a response will occur. Positive reinforcement is the presentation of a stimulus after a response so that the response will occur more often. Negative reinforcement is the removal of a stimulus after a response so that the response will occur more often. In this terminology, positive and negative don't mean good and bad. Instead, positive means adding a stimulus, and negative means removing a stimulus.
• Skinner identified two types of reinforcing events: those in which a reward is given, and those in which something bad is removed. In either case, the point of reinforcement is to increase the frequency or probability of a response occurring again.
1) Positive reinforcement - give an organism a pleasant stimulus when the operant response is made. For example, a rat presses the lever (operant response) and receives a treat (positive reinforcement).
2) Negative reinforcement - take away an unpleasant stimulus when the operant response is made. For example, stop shocking a rat when it presses the lever.

Two Types of Reinforcers
• 1) Primary reinforcer - a stimulus that naturally strengthens any response that precedes it, without the need for any learning on the part of the organism. Primary reinforcers, such as food, water, sex and caresses, are naturally satisfying.
• 2) Secondary/conditioned reinforcer - a previously neutral stimulus that acquires the ability to strengthen responses because the stimulus has been paired with a primary reinforcer.
For example, an organism may become conditioned to the sound of the food dispenser, which occurs after the operant response is made. Thus, the sound of the food dispenser becomes reinforcing. Notice the similarity to classical conditioning, with the exception that the behaviour is voluntary and occurs before the presentation of a reinforcer.
• Secondary reinforcers, such as money, fast cars and good grades, are satisfying because they have become associated with primary reinforcers.

Schedules of Reinforcement
• There are four main types of intermittent schedules, which fall into two categories: ratio or interval.
• In a ratio schedule, reinforcement happens after a certain number of responses. In an interval schedule, reinforcement happens after a particular time interval.
• In a fixed-ratio schedule, reinforcement happens after a set number of responses, such as when a car salesman earns a bonus after every three cars he sells.
• In a variable-ratio schedule, reinforcement happens after a particular average number of responses. For example, a person trying to win a game by getting heads on a coin toss gets heads once every two tosses, on average. Sometimes she may toss the penny just once and get heads, but other times she may have to toss it two, three, four or more times before getting heads.
• In a fixed-interval schedule, reinforcement happens after a set amount of time, such as when an attorney at a law firm gets a bonus once a year.
• Another example is waiting for a bus. The bus may run on a specific schedule, stopping at the location nearest to you every 20 minutes. After one bus has stopped and left your bus stop, the timer resets so that the next one will arrive in 20 minutes. You must wait that amount of time for the bus to arrive and stop for you to get on it.
• In a variable-interval schedule, reinforcement happens after a particular average amount of time.
For example, a boss who wants to keep her employees working productively might walk by their workstations and check on them periodically, usually about once a day, but sometimes twice a day or sometimes every other day. If an employee is slacking off, she reprimands him. Since the employees know there is a variable interval between their boss's appearances, they must stay on task to avoid a reprimand.

Reinforcement Schedules Compared: Wood & Wood

| Schedule of reinforcement | Response rate | Pattern of responses | Resistance to extinction |
| --- | --- | --- | --- |
| Fixed-ratio | Very high | Steady response with low ratio. Brief pause after each reinforcement with very high ratio. | The higher the ratio, the more resistance to extinction |
| Variable-ratio | Highest response rate | Constant response pattern, no pauses | Most resistance to extinction |
| Fixed-interval | Lowest response rate | Long pause after reinforcement, followed by gradual acceleration | The longer the interval, the more resistance to extinction |
| Variable-interval | Moderate | Stable, uniform response | More resistance to extinction than a fixed-interval schedule with the same average interval |

Punishment
• Punishment is the delivery of a consequence that decreases the likelihood that a response will occur.
• Whereas reinforcement increases the probability of a response occurring again, the premise of punishment is to decrease the frequency or probability of a response occurring again.
• There are two types of punishment:
• 1) Positive - the presentation of an aversive stimulus to decrease the probability of an operant response occurring again. For example, a child reaches for a cookie before dinner, and you slap his hand.
• 2) Negative - the removal of a pleasant stimulus to decrease the probability of an operant response occurring again. For example, each time a child says a curse word, you remove one dollar from their piggy bank.
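Looking back at the four intermittent schedules compared in the table above, each one is just a rule for deciding whether a given response earns reinforcement. The sketch below is one hypothetical way to express those rules; the function names, the `now` timestamp argument and the choice of an exponential distribution for the variable-interval waits are all assumptions made for illustration.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response (e.g. a bonus after every three cars sold)."""
    count = 0

    def respond(now=None):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reinforce each response with probability 1/mean_n (mean_n responses on average)."""
    def respond(now=None):
        return rng.random() < 1.0 / mean_n

    return respond


def fixed_interval(seconds):
    """Reinforce the first response made at least `seconds` after the last reinforcement."""
    last = 0.0

    def respond(now):
        nonlocal last
        if now - last >= seconds:
            last = now
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but the required wait varies around mean_seconds."""
    last = 0.0
    wait = rng.expovariate(1.0 / mean_seconds)  # assumed distribution of waits

    def respond(now):
        nonlocal last, wait
        if now - last >= wait:
            last = now
            wait = rng.expovariate(1.0 / mean_seconds)
            return True
        return False

    return respond


bonus = fixed_ratio(3)
print([bonus() for _ in range(6)])  # [False, False, True, False, False, True]
```

Ratio schedules count responses and ignore the clock, while interval schedules ignore how many responses were made during the wait; that difference is what the response-rate column of the table reflects.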
Applications of Operant Conditioning
• a) In the classroom: Skinner thought that our education system was ineffective. He suggested that one teacher in a classroom could not teach many students adequately when each child learns at a different rate. He proposed using teaching machines (what we now call computers) that would allow each student to move at their own pace. The teaching machine would provide self-paced learning with immediate feedback, immediate reinforcement, identification of problem areas, etc., that a teacher could not possibly provide.
• b) In the workplace: many factory workers are paid according to the number of some product they produce. A worker may get paid $50 000 for every 100 candles he makes.
• Another example is a study by Pedalino & Gamboa (1974). To help reduce the frequency of employee tardiness, the researchers implemented a game-like system for all employees who arrived on time. When an employee arrived on time, they were allowed to draw a card. Over the course of a 5-day workweek, the employee would build a full hand for poker. At the end of the week, the best hand won $20. This simple method reduced employee tardiness significantly and demonstrated the effectiveness of operant conditioning on humans.

Applications of Operant Conditioning
• c) Behaviour modification: the application of operant conditioning techniques to modify behaviour. It is used to help people with a wide variety of everyday behaviour problems, including obesity, smoking, alcoholism, delinquency and aggression.
• d) The Premack Principle: states that, of any two responses, the one that is more likely to occur can be used to reinforce the response that is less likely to occur. People prefer doing certain things more than others. For example, a child may prefer to play outside rather than do his homework. A parent could then use the playing response to reinforce, and so increase the occurrence of, doing homework by promising more play time only after the homework is finished.
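The Premack Principle amounts to a simple selection rule: compare how likely two behaviours are when the person is free to choose, then make the more likely one contingent on the less likely one. The sketch below illustrates that rule only; the function name `premack_contingency` and the likelihood values 0.8 and 0.2 are hypothetical and not from the lecture.

```python
def premack_contingency(baseline):
    """Return (reinforcer, target) given free-choice likelihoods of two behaviours.

    baseline maps each behaviour name to how likely it is to occur when the
    person is free to choose (hypothetical numbers between 0 and 1).
    """
    if len(baseline) != 2:
        raise ValueError("expected exactly two behaviours")
    (first, p_first), (second, p_second) = baseline.items()
    if p_first == p_second:
        raise ValueError("no preference, so neither behaviour can reinforce the other")
    # The more likely behaviour reinforces the less likely one.
    if p_first > p_second:
        return first, second
    return second, first


reinforcer, target = premack_contingency(
    {"playing outside": 0.8, "doing homework": 0.2}
)
print(f"Allow '{reinforcer}' only after '{target}' is finished.")
# Allow 'playing outside' only after 'doing homework' is finished.
```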