Models of Attentional Learning - Indiana University Bloomington

Donald O. Hebb wikipedia , lookup

Types of artificial neural networks wikipedia , lookup

Catastrophic interference wikipedia , lookup

Vocabulary development wikipedia , lookup

Eyeblink conditioning wikipedia , lookup

Neural modeling fields wikipedia , lookup

Psychophysics wikipedia , lookup

Sensory cue wikipedia , lookup

Perceptual learning wikipedia , lookup

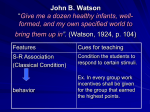

Psychological behaviorism wikipedia , lookup

Learning theory (education) wikipedia , lookup