
US005960384A

United States Patent [19]

Brash

[11] Patent Number: 5,960,384

[45] Date of Patent: Sep. 28, 1999

[54] METHOD AND DEVICE FOR PARSING NATURAL LANGUAGE SENTENCES AND OTHER SEQUENTIAL SYMBOLIC EXPRESSIONS

[76] Inventor: Douglas E. Brash, 59 Bargate Trail, Killingworth, Conn. 06419

[21] Appl. No.: 08/922,494

[22] Filed: Sep. 3, 1997

[51] Int. Cl.6: G06F 17/27

[52] U.S. Cl.: 704/9; 704/10; 704/235; 704/270

[58] Field of Search: 704/1, 8, 9, 10, 270, 271, 275, 231, 235

[56] References Cited

U.S. PATENT DOCUMENTS

4,829,423    5/1989   Tennant              704/8
4,852,170    7/1989   Bordeaux             704/277
4,864,502    9/1989   Kucera               704/8
4,868,750    9/1989   Kucera               704/8
4,887,212   12/1989   Zamora               704/8
4,914,590    4/1990   Loatman              704/8
4,914,704    4/1990   Cole et al.          704/235
4,994,966    2/1991   Hutchins             704/9
5,047,953    9/1991   Smallwood et al.     704/271
5,068,789   11/1991   van Vliembergen      704/9
5,083,268    1/1992   Hemphill et al.      706/11
5,101,349    3/1992   Tokuume              704/9
5,146,406    9/1992   Jensen               704/9
5,210,689    5/1993   Baker et al.         704/1
5,297,040    3/1994   Hu                   704/9
5,299,125    3/1994   Baker et al.         704/9
5,309,546    5/1994   Baker et al.         704/271
5,345,538    9/1994   Narayannan et al.    704/275
5,457,768   10/1995   Tsuboi et al.        704/231

OTHER PUBLICATIONS

Allen, J. Natural Language Understanding. Benjamin/Cummings, Redwood City, CA (1995) pp. 95, 295-296, 305-307, 332-334, and 341-342.

Beardon, C., et al. Natural Language and Computational Linguistics: An Introduction. Ellis Horwood, New York (1992) pp. 129-130, 133-134, 187-188.

Dowty, D. Introduction to Montague Semantics. D. Reidel, Boston (1981) pp. 180-183.

Joshi, A. Tree-adjoining grammars. In: Natural Language Parsing, D. Dowty et al. (eds). Cambridge Univ. Press, Cambridge (1985) pp. 206-209, 214-215.

Marcus, M. A Theory of Syntactic Recognition for Natural Language. MIT Press, Cambridge, MA (1980) pp. 2-6, 17-21.

Schank, R. Conceptual dependency. Cognitive Psychology 3:552-631, 1972. pp. 556-560.

Schank, R. Conceptual Information Processing, North-Holland, Amsterdam (1975) pp. 22-25, 37-39, 41-43.

Wilks, Y. Parsing English II. In: E. Charniak et al. (eds.) Computational Semantics. North-Holland, Amsterdam, pp. 155-184, 1976 pp. 169-173, 158-169.

Winograd, T. Language as a Cognitive Process: Syntax. Addison-Wesley, Reading, MA (1983) pp. 36-37, 46, 54-55, 73-76, 79-80, 139-143, 162, 196-198, 204-210, 314-317, 328-330, 334-337, 345-346, 349, 368-369, 560-562.

Primary Examiner: Joseph Thomas

[57] ABSTRACT

A parsing method and apparatus for symbolic expressions of thought such as English-language sentences is provided. The basic procedure distinguishes only between symbols for picture-like entities (such as “squirrel” or “justice”) and symbols for relations (such as “above” or “pushed”). For example, to the cognitive parser the sentences “The squirrel buried a nut” and “My aunts sent a telegram” are equivalent. The parser thus operates at a level more basic than syntactic parsing, making it simpler. The illustrative embodiment, a cognitive parser for English sentences, comprises: a microprocessor, a stored lexicon including symbols and associated entity-types (picture or relation), an input device for a symbol sequence, and a procedure executing on the microprocessor for grouping the inputted picture-designating and relation-designating symbols according to rules based on entity type. A method comprising operation of the cognitive parser is also provided.

20 Claims, 17 Drawing Sheets

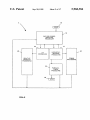


U.S. Patent    Sep. 28, 1999    Sheet 1 of 17    5,960,384

[Drawing sheet 1: block diagram with elements labeled INPUT DEVICE, SYMBOL SEQUENCE, SHIFT REGISTERS, INSTRUCTION SET, LEXICON LOOKUP, LEXICON, PARSED SYMBOL SEQUENCE, and OUTPUT DEVICE.]

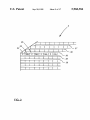

Sheet 2 of 17

[FIG. 2: flowchart with boxes labeled START, PICTURE-SYMBOL GROUPING PROCEDURE, HIERARCHY-BUILDING PROCEDURE, RELATIVE PRONOUN PROCESSING, COMMA PROCESSING, PARALLEL TRACK PROCEDURE, and STOP.]

Sheet 3 of 17

[Drawing sheet 3: a row of alternating symbols labeled P and REL.]

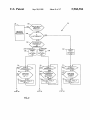

Sheet 5 of 17

[Drawing sheet 5: flowchart for COMMA PROCESSING with three branches labeled COMPLETELY, WEAKLY, and STRONGLY. Recoverable labels: decision “P REL P PRECEDING?”; condition “P1 OR P2 IS "THE" NOT IN A PICTURE”; action “BRACKET P REL P WITH ( )”; PARALLEL TRACK PROCEDURE; exits to STEP 58.]

Sheet 6 of 17

[Drawing sheet 6: labels not recoverable from the extraction.]

Sheet 7 of 17

[FIG. 7: flowchart. Recoverable labels: action “MOVE CONNECTING REL, P, CURRENT REL, AND "(" TO SUBTRACK-2”; decision “P REL P PRECEDING?”; action “BRACKET P REL P WITH ( )”; decision “ON SHALLOWEST SUBTRACK OF SIDETRACK?”; action “MOVE CONNECTING REL, P, CURRENT REL, AND "(" TO SIDETRACK-2”; exit to STEP 92.]

Sheet 8 of 17

[FIG. 8: flowchart. Recoverable labels: decision “WORD AFTER COMMA IS?” with branches PICTURE OR NON-VERB RELATION and VERB; decision “P REL P PRECEDING?”; a decision on the PREVIOUS RELATION (symbols illegible); action “BRACKET P REL P WITH ( )”; action “STORE LAST PICTURE”; decision “WORD READER ON MAIN TRACK?”; actions that move the comma and "(" to sidetracks and “READ ON SIDETRACK+2”; TRACK-FOLDING PROCEDURE; exits to STEP 58.]

Sheet 9 of 17

[Drawing sheet 9: flowchart. Recoverable labels: decision “ONE OF 2 P'S IS RELATIVE PRONOUN?” leading to RELATIVE PRONOUN PROCESSING; decision “P2 A DETERMINER?”; comparison “CATEGORY OF PRECEDING P vs. CURRENT P” with branches LESS OR EQUAL and GREATER; three “INSERT ... BETWEEN P'S” actions (inserted symbols illegible); decision “P REL P PRECEDING?”; decision “PREVIOUS RELATION IS A VERB?”; action “BRACKET P REL P WITH ( )”; exits to STEP 58.]

Sheet 10 of 17

[Drawing sheet 10: flowchart. Recoverable labels: cases “THAT / P & P UNQUANTITATED” and “RELATIVE PRONOUN / P & P QUANTITATED OR P / RELATIVE PRONOUN”; two “INSERT ... BETWEEN P'S” actions (inserted symbols illegible); decision “P REL P PRECEDING?”; decision “PREVIOUS REL IS VERB OR P1 OR P2 IS "THE" NOT IN A PICTURE?”; action “BRACKET P REL P WITH ( )”; actions “MOVE "-1" TO SUBTRACK+1”, “MOVE "(P2" TO SUBTRACK+2”, “READ ON SUBTRACK+2”; action “REPLACE RELATIVE PRONOUN WITH PREVIOUS PICTURE FROM PREVIOUS SUBTRACK (OPTIONAL)”; exits to STEP 58.]

Sheet 17 of 17

[Drawing sheet 17: labels not recoverable from the extraction.]

METHOD AND DEVICE FOR PARSING NATURAL LANGUAGE SENTENCES AND OTHER SEQUENTIAL SYMBOLIC EXPRESSIONS

FIELD OF THE INVENTION

The invention relates to a method and device for parsing natural language sentences and other symbolic expressions, and more specifically, the invention relates to a method and device for parsing natural language sentences and other symbolic expressions into cognitive units.

BACKGROUND OF THE INVENTION

A parsing device converts a linear symbol sequence into an organized structure. The diagrammed sentence encountered in grade-school grammar is one kind of parse. This organized structure can then be displayed for instructional purposes, or other devices can use it to initiate commands, store information, or make logical inferences. A parsing device is a prerequisite for using natural language to communicate with computers and, moreover, a simple parsing device is needed if natural language is to be used for controlling household devices such as kitchen appliances, videocassette recorders, lighting, and heating or air conditioning. The central problem in parsing is to achieve both accuracy and speed. For synthetic languages, such as computer languages, many effective solutions are available. However, the complexity and ambiguity of natural languages have made them resistant to efficient parsing.

Automated sentence parsing devices, usually general-purpose digital computers, have operated by matching parts of the input sentence with a very large number of stored rules (Tennant et al., 1989, U.S. Pat. No. 4,829,423). If the first rule does not apply, the next is chosen for examination. Three broad classes of devices have been used; these employ increasingly deep levels of sentence analysis. The most common approach is Syntactic Parsing, in which the rules driving the parsing device describe the organization of words in a particular language. In practice, achieving a parse also requires resolving the possible senses of ambiguous words. This is done by a semantic interpreter, which uses information about word meaning. Semantic Parsing aims at the level of meaning directly, driving the parser with rules relating ordered words to the underlying meaning of the sentence. Conceptual Analysis also aims directly for the level of meaning, but does not attempt to preserve word order. Conceptual analysis parsers identify key words as tokens for the underlying objects or actions, and the organization sought is that of the objects being discussed rather than that of the words used to discuss them. The processor reconstructs this organization by using “encyclopedia information” about the objects, rather than “dictionary information” about the words.

These three approaches to sentence parsing will now be explained in more detail. In each approach, the stored rules can be either template-patterns, which must be matched exactly, or general rules, which are applied to parts of the sentence in succession to build a tree-like parsed structure of increasingly finer detail, from a sentence to its phrases to words. The successive-rule strategy, termed “generative,” is combinatorial since rules can be used more than once in various combinations. Rules can be chosen so as to divide a sentence into smaller units (“top-down parsing”) or, less frequently, to assemble words into higher-level units (“bottom-up” parsing). By storing the result of each successful pattern-matching or rule application, the parse accumulates aspects of the sentence’s organization.

Syntactic Parsing

A Pattern-Matching parsing device accepts a sentence if each word matches part of a stored template of allowed words or parts of speech (“lexical categories,” such as “verb”). To cover a language, a large number of template-patterns are stored in advance and each compared to the input sentence. The result is a flat structure whose sentence organization is simply the before/after relation between words or lexical categories (Winograd, 1983, pp. 36-37, 46). More complex pattern-matching strategies build hierarchical structures using syntactic categories (e.g. “noun phrase”). These “head and modifier” structures include the classic Diagrammed Sentence, composed of a subject-verb pattern to which modifiers are attached, and Dependency Grammar, in which even the subject is represented as a modifier of the verb. However, the prior art does not emphasize identification of rules that would enable automated generation of such trees (Winograd, 1983, pp. 73-75). A practical pattern-matching device has been described that tags a sentence’s words with symbols denoting the word’s possible syntactic features, and then matches the pattern of tags to stored template-patterns of tags (Kucera and Carus, 1989, U.S. Pat. No. 4,864,502).

The Generative paradigm replaces the long list of template-patterns with a shorter list of rules involving mini-patterns; these rules are used combinatorially and repetitively. Flat structures are generated by Transition-Networks, in which rules about the succession of words or lexical categories are embodied as transitions allowed between states of the parser (Winograd, 1983, pp. 54-55). If the input word matches a lexical category allowed for the current parser state (e.g., “adjective”), the parser switches to the next state. There it has a new set of allowed categories and the next word must match one of these. The resulting parse is flat, indicating only which lexical categories follow each other in the sentence.

Hierarchical structures can be constructed generatively, however. Recursive Transition Networks allow transition networks to accomplish a hierarchical parse by allowing parser transitions to be triggered not only by lexical categories like “adjective,” but also by syntactic categories such as “noun phrase” (Winograd, 1983, pp. 196-198). Deciding whether the input sentence contains a noun phrase, however, requires first activating additional transition networks for describing the constituents of noun phrases. Immediate Constituent Grammar parsers build the tree-like structure by using a list of rules that relate two levels of structure (Winograd, 1983, pp. 75-76). The list includes rules linking sentences to noun-, verb-, and prepositional phrases; these to lexical categories such as noun, adjective, and determiner; and these to specific words. An example is: sentence → noun phrase + verb phrase; noun phrase → determiner + adjective + noun; verb phrase → verb + adverb + direct object. Successive application of the rules produces a tree of increasing detail: parsing a sentence is achieved by successively checking each rule in the list for fit to the input sequence of words, and choosing from the rule list those that match. One list of rules, one grammar, is capable of building many different trees. The particular tree that results depends on the subset of rules that matched the particular sentence’s words.

In Role Structures parsers, the syntactic categories are themselves in effect constituents of a pattern of syntactic roles (such as the pattern “subject-object”) (Winograd, 1983, pp. 79-80). Word tags can also be processed generatively (Hutchins, 1991, U.S. Pat. No. 4,994,966). A related strategy is to copy the dictionary entries of the words of a sentence directly into memory, and then use the grammar rules to directly remove properties from, or add to, these dictionary entries (Zamora et al., 1989, U.S. Pat. No. 4,887,212).
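As an illustration only, the immediate-constituent rules quoted above (sentence → noun phrase + verb phrase, and so on) can be sketched as a small top-down parser in Python. This is not the device of any cited reference. The toy lexicon, the function names `expand` and `parse`, and the extra rule treating a direct object as a noun phrase are assumptions introduced for this example. Because each category here has a single rule, the sketch never has to backtrack between alternatives, which a realistic grammar would require.

```python
from typing import Dict, List, Optional, Tuple

# Rules quoted in the text. The last rule ("direct object" -> "noun phrase")
# is an assumption added so that the example is complete.
RULES: Dict[str, List[List[str]]] = {
    "sentence": [["noun phrase", "verb phrase"]],
    "noun phrase": [["determiner", "adjective", "noun"]],
    "verb phrase": [["verb", "adverb", "direct object"]],
    "direct object": [["noun phrase"]],
}

# Hypothetical toy lexicon: word -> lexical category.
LEXICON: Dict[str, str] = {
    "the": "determiner", "a": "determiner",
    "gray": "adjective", "small": "adjective",
    "squirrel": "noun", "nut": "noun",
    "buried": "verb", "quickly": "adverb",
}


def expand(category: str, words: List[str], pos: int) -> Optional[Tuple[tuple, int]]:
    """Derive `category` starting at words[pos]; return (tree, next position) or None."""
    if category not in RULES:
        # Lexical category: the next word must belong to it.
        if pos < len(words) and LEXICON.get(words[pos]) == category:
            return (category, words[pos]), pos + 1
        return None
    for right_side in RULES[category]:
        # Check each rule in the list for fit to the input sequence.
        children, cursor = [], pos
        for part in right_side:
            result = expand(part, words, cursor)
            if result is None:
                break
            child, cursor = result
            children.append(child)
        else:
            return (category, children), cursor
    return None


def parse(sentence: str) -> Optional[tuple]:
    """Return a nested (category, children) tree, or None if the rules do not fit."""
    words = sentence.lower().split()
    result = expand("sentence", words, 0)
    if result is None or result[1] != len(words):
        return None
    return result[0]
```

With these three quoted rules, `parse("The gray squirrel buried quickly a small nut")` yields a tree rooted at "sentence", whereas `parse("The squirrel buried a nut")` returns `None` because the single noun-phrase rule demands an adjective. That rigidity is one reason a practical rule list grows large.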

A disadvantage of the above devices is that they use context-free grammars and are thus inadequate for sentences that have long-distance dependencies. Such dependencies arise from number/case agreement, passive-voice constructions, and questions. Transformational Grammars use a series of context-sensitive rewrite rules to transform a context-free syntactic tree structure into another tree that matches the word order found in, for example, the passive voice (Winograd, 1983, pp. 139-143). However, these processes have not been widely incorporated into devices (Winograd, 1983, p. 162).

Phrase Structure Grammar uses immediate-constituent rules, but adds “derived constituents” of the form “verb phrase but missing x.” For example, a derived constituent like VP/NP carries the information that a noun phrase is missing from the verb phrase. Long-distance dependencies are resolved by using semantic knowledge to find an NP that fills in this hole (Winograd, 1983, pp. 345-346). The strategy most frequently used in practical parsers is the Augmented Transition Network (Loatman et al., 1990, U.S. Pat. No. 4,914,590). Here, the recursive transition network’s set of allowed transitions between parser states is supplemented with registers that record a word’s features, such as number or gender, and a word’s role, such as subject, object, or determiner. Rules governing the allowed transitions are made dependent on the condition of these registers (Winograd, 1983, pp. 204-210). In this way, long-distance dependencies are incorporated. Charts are kept of the intermediate states of the parse to improve efficiency.

A completely different generative approach is Tree-Adjoining Grammar, which combines not rules but trees (Joshi, 1985). Each initial tree, actually a “mini-tree,” describes a sentence fragment. These are combined to build a tree for the complete sentence. By collecting several rules into one mini-tree, a long-distance dependency can be incorporated from the outset as a mini-tree having links between two of its branches. However, since each mini-tree represents just one of the combinations of several rules, the initial stock of mini-trees is very large. The number of tree-choice decisions is accordingly large and the parsing time is proportional to the fourth power of sentence length. A “molecular parsing” device has been described in which each word’s dictionary entry contains both phrase structure rules and template-patterns for the word’s possible successors (Hu, 1994, U.S. Pat. No. 5,297,040).

Implementing these syntactic parsing approaches has not been straightforward, for two reasons. First, a correct parse requires a single grammar rule to be chosen at each word. But the correctness of a rule is usually not evident until several words later. Therefore, the large number of rules required to capture real sentences means that the device will pursue many fruitless parsing paths, followed by backtracking when the parse becomes clearly incorrect. This rule-choice problem has fostered strategies to minimize the time spent on fruitless parsing paths, including pursuing multiple parses in parallel or retaining information about partially successful parses (Winograd, 1983, pp. 368-369).

Some parsers prune the parallel parsing paths by using syntactic rules to assess the likelihood that the parse thus far is correct (van Vliembergen, 1991, U.S. Pat. No. 5,068,789). The Deterministic Parsing conjecture asserts that, for sentences that can be understood without difficulty, a parser need not backtrack or construct the alternative parses that arise from rule-order choice (Marcus, 1980, pp. 2-6). The parsing is deterministic in that, once built, nothing can be unbuilt or ignored. The deterministic parser achieves this result by viewing not just one word at a time, but a 3- to 5-constituent window constructed from the incoming sentence (Marcus, 1980, pp. 17-21). Incoming words and already-built constituents are stored in a first-in first-out buffer, the first 3-5 positions of which are available to the parser. New constituents are built from these in a separate push-down stack. The syntactic tree is built using syntactic pattern-matching rules and semantic case rules that involve both the stack and the buffer. Few reports on this method have appeared since the original description.

The second problem in syntactic parsing has been that words in natural languages often have multiple meanings; yet, the parsing strategies described above require that the correct meaning be identified before the parse can be completed. One strategy has again been to try each of the alternative possible parses, most again fruitless. Alternatively, parsers have reduced ambiguity by using co-occurrence relations, that is, statistical information on the frequency with which words are found together (Kucera et al., 1989, U.S. Pat. No. 4,868,750).

Semantic Interpretation of a Syntactic Parse

More direct reduction of word ambiguity, and rule choice, has been sought by associating word-meaning information with each element of the syntactic parse tree after the syntactic tree is constructed. Semantic interpretation then usually uses a Pattern-Matching strategy against stored semantic template-patterns. These stored patterns can be of several types. Selection Restriction Rules specify the objects with which a given word may have a relation; these are embodied as arguments to the word’s entry in the dictionary, or “lexicon.” An example is: “green” + (physical object) → green color (Allen, 1995, pp. 295-296). Rather than denoting a meaning, these rules lead to a choice among previously-cataloged meanings. Semantic Network patterns are hierarchies of object-types or action-types, based on semantic primitives, which the designer constructs in advance. If the semantic network also includes “semantic functions,” such as “agent,” “instrument,” or “result,” it can be used to limit the possible senses of a word (Allen, 1995, pp. 305-307). In actual devices, such hierarchies are implemented as a nested list.

Case Grammar is related more to the fixed patterns in which meanings tend to occur (“semantic case” patterns) than to the meanings themselves. Examples are agent, instrument, and theme. The semantic case of a word or phrase depends on its position with respect to the verb, just as the familiar syntactic cases like subject and object do. Since verbs are restricted in the semantic cases they allow, the parser can identify semantic case patterns using syntactic patterns found in the syntactic parse (Winograd, 1983, pp. 314-317). Alternatively, semantic case patterns can be identified independently of specific verbs by having the parser derive them from syntactic case patterns; for example, the identification of a direct object in the syntactic parse implies the presence of an agent and a theme in the semantic interpretation (Beardon et al., 1992, pp. 133-134).

Other strategies are less often used in practical parsing devices. Systemic Grammar uses hierarchies of template-patterns based not only on semantic functions, but also on word and pragmatic functions. The functions are arranged into systems organized as a tree of choices: mood (question, statement, command); transitivity (actor, action, goal; agent, instrument, result); theme (focus of attention); and information (already-given, new) (Allen, 1995, p. 95; Beardon, 1992, pp. 187-188). These patterns are reflected in the sentence’s word order and lexical-category patterns. Thus, a