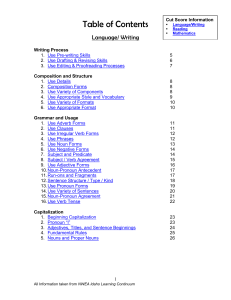

Vocabulary - For the Teachers

... and state; Edit sentence fragments; Use correct return address format; Capitalize government bodies; Use parallelism between subject and direct object; Use appositives ...

... and state; Edit sentence fragments; Use correct return address format; Capitalize government bodies; Use parallelism between subject and direct object; Use appositives ...

the cookbook as PDF

... One of the most important aspects of lemon is that senses should be unique to a given lexical entry/ontology reference pair, this means that “creature” and “animal”, should not refer to the same sense entity, but can be related using the equivalent property. If two lexical entries do share a sense, ...

... One of the most important aspects of lemon is that senses should be unique to a given lexical entry/ontology reference pair, this means that “creature” and “animal”, should not refer to the same sense entity, but can be related using the equivalent property. If two lexical entries do share a sense, ...

Copyright by Ulf Hermjakob 1997 - Information Sciences Institute

... encoded in currently 205 features describing the morphological, syntactical and semantical aspects of a given parse state. Compared with recent probabilistic systems that were trained on 40,000 sentences, our system relies on more background knowledge and a deeper analysis, but radically fewer examp ...

... encoded in currently 205 features describing the morphological, syntactical and semantical aspects of a given parse state. Compared with recent probabilistic systems that were trained on 40,000 sentences, our system relies on more background knowledge and a deeper analysis, but radically fewer examp ...

Semantic field of ANGER in Old English

... lexicon and within the broader socio-cultural context of the period. It also helps refine the interpretations of wide-ranging issues such as authorial preference, translation practices, genre, and interpretation of literary texts. The thesis contributes to diachronic lexical semantics and the histor ...

... lexicon and within the broader socio-cultural context of the period. It also helps refine the interpretations of wide-ranging issues such as authorial preference, translation practices, genre, and interpretation of literary texts. The thesis contributes to diachronic lexical semantics and the histor ...

Resúmenes - Colloque international de Linguistique de Corpus

... aprendientes de inglés., Nicolas Ballier [et al.] . . . . . . . . . . . . . . . . . . . . ...

... aprendientes de inglés., Nicolas Ballier [et al.] . . . . . . . . . . . . . . . . . . . . ...

Further and Farther

... and sometimes for being prosodically and orthographically separated from the rest of the clause (Biber et al. 1999: 876). For this reason, Huddleston & Pullum (2002: 777) classify linking further as a member of the larger category ‘pure connectives’ along with moreover, besides, and also. Third and ...

... and sometimes for being prosodically and orthographically separated from the rest of the clause (Biber et al. 1999: 876). For this reason, Huddleston & Pullum (2002: 777) classify linking further as a member of the larger category ‘pure connectives’ along with moreover, besides, and also. Third and ...

A Diachronic Study on the Complementation Patterns of the Verb

... Nevertheless, there are also some disadvantages to using corpora in research. Lindquist (2009, 8-9) brings up Chomsky's criticism of corpus linguistics: he has claimed that corpus linguists' findings are insignificant on the basis of the fact that the sentence I live in New York appears in corpora m ...

... Nevertheless, there are also some disadvantages to using corpora in research. Lindquist (2009, 8-9) brings up Chomsky's criticism of corpus linguistics: he has claimed that corpus linguists' findings are insignificant on the basis of the fact that the sentence I live in New York appears in corpora m ...

rtf - MIT Media Lab

... swimming. There are underlying meanings behind the situation that specify {\i where} a person does not want to get wet (on the clothes that they are currently wearing) and {\i why} they do not want to get wet (wearing soggy clothes is uncomfortable), which in aggregate may form the majority of the { ...

... swimming. There are underlying meanings behind the situation that specify {\i where} a person does not want to get wet (on the clothes that they are currently wearing) and {\i why} they do not want to get wet (wearing soggy clothes is uncomfortable), which in aggregate may form the majority of the { ...

对英语中歧义的初步研究

... ambiguity and studied ambiguity systematically. He also proved that ambiguity was not only negative but also positive.① From what are said above, we can know though ambiguity has been generated ...

... ambiguity and studied ambiguity systematically. He also proved that ambiguity was not only negative but also positive.① From what are said above, we can know though ambiguity has been generated ...

An Empirical Analysis of Source Context Features for Phrase

... the relation between source and target language as well as target language similarity by requiring that a phrase is at the same time a good translation of the source phrase and also leads to a good target language string. Phrase translation probabilities (and other translational features) are learne ...

... the relation between source and target language as well as target language similarity by requiring that a phrase is at the same time a good translation of the source phrase and also leads to a good target language string. Phrase translation probabilities (and other translational features) are learne ...

Lecture 12: Semantics and Pragmatics

... ➣ The language we think in makes some concepts easy to express, and some concepts hard ➣ The idea behind linguistic relativity is that this will effect how you think ➣ Do we really think in language? ➢ We can think of things we don’t have words for ➢ Language under-specifies meaning ➣ Maybe we store ...

... ➣ The language we think in makes some concepts easy to express, and some concepts hard ➣ The idea behind linguistic relativity is that this will effect how you think ➣ Do we really think in language? ➢ We can think of things we don’t have words for ➢ Language under-specifies meaning ➣ Maybe we store ...

Oxford English Dictionary

... volume (H to N) of the Supplement was in preparation, and the word hobbit came up for consideration, as the evidence in the OED’s files showed that it had achieved currency. The editor of the Supplement, Robert Burchfield, had studied under Tolkien in Oxford, and knew him well: in an appreciation pu ...

... volume (H to N) of the Supplement was in preparation, and the word hobbit came up for consideration, as the evidence in the OED’s files showed that it had achieved currency. The editor of the Supplement, Robert Burchfield, had studied under Tolkien in Oxford, and knew him well: in an appreciation pu ...

Consistency in the evaluation methods of machine translation quality

... Machine translation is the general term for the programs concerning the automatic translation with or without human assistance. It is also an interdisciplinary research area with different questions yet to be answered. One of the fundamental questions of the area is related to the quality assessment ...

... Machine translation is the general term for the programs concerning the automatic translation with or without human assistance. It is also an interdisciplinary research area with different questions yet to be answered. One of the fundamental questions of the area is related to the quality assessment ...

Applying the Constraint Grammar Parser of English to the Helsinki

... "round" V SUBJUNCTIVE VFIN @+FMAINV

"round" V IMP VFIN @+FMAINV

"round" V INF

"round" V PRES -SG3 VFIN @+FMAINV

...

... "round"

The Verb live in Dictionaries: A - TamPub

... define what constitutes as a word in a dictionary, a matter which is crucial for both dictionary compiling and this study, but is more complex than one might initially assume. The verb live has been chosen as a case study because it is a good example of a word that has multiple meanings, and similar ...

... define what constitutes as a word in a dictionary, a matter which is crucial for both dictionary compiling and this study, but is more complex than one might initially assume. The verb live has been chosen as a case study because it is a good example of a word that has multiple meanings, and similar ...

A Broad-Coverage Model of Prediction in Human Sentence

... Modeling prediction is a timely and relevant contribution to the field because recent experimental evidence suggests that humans predict upcoming structure or lexemes during sentence processing. However, none of the current sentence processing theories capture prediction explicitly. This thesis prop ...

... Modeling prediction is a timely and relevant contribution to the field because recent experimental evidence suggests that humans predict upcoming structure or lexemes during sentence processing. However, none of the current sentence processing theories capture prediction explicitly. This thesis prop ...

Morphological Processing of Compounds for Statistical Machine

... line in the other language. After training the statistical models, they can be used to translate new texts. However, one of the drawbacks of Statistical Machine Translation (SMT) is that it can only translate words which have occurred in the training texts. This applies in particular to SMT systems ...

... line in the other language. After training the statistical models, they can be used to translate new texts. However, one of the drawbacks of Statistical Machine Translation (SMT) is that it can only translate words which have occurred in the training texts. This applies in particular to SMT systems ...

Identifying Relations for Open Information Extraction

... captured by existing open extractors. Our syntactic constraint leads the extractor to include nouns in the relation phrase, solving this problem. Although the syntactic constraint significantly reduces incoherent and uninformative extractions, it allows overly-specific relation phrases such as is of ...

... captured by existing open extractors. Our syntactic constraint leads the extractor to include nouns in the relation phrase, solving this problem. Although the syntactic constraint significantly reduces incoherent and uninformative extractions, it allows overly-specific relation phrases such as is of ...

the EMNLP 2011 paper - ReVerb

... captured by existing open extractors. Our syntactic constraint leads the extractor to include nouns in the relation phrase, solving this problem. Although the syntactic constraint significantly reduces incoherent and uninformative extractions, it allows overly-specific relation phrases such as is of ...

... captured by existing open extractors. Our syntactic constraint leads the extractor to include nouns in the relation phrase, solving this problem. Although the syntactic constraint significantly reduces incoherent and uninformative extractions, it allows overly-specific relation phrases such as is of ...

Natural Language Generation

... information Developed by Princeton CogSci lab Freely distributed Widely used in NLP, ML applications Command line interface, web, data files www.princeton.cogsci.edu/~wn ...

... information Developed by Princeton CogSci lab Freely distributed Widely used in NLP, ML applications Command line interface, web, data files www.princeton.cogsci.edu/~wn ...

PALAVRAS

... Fred Karlsson presenting his Constraint Grammar formalism for context based disambiguation of morphological and syntactic ambiguities. I was fascinated both by the robustness of the English Constraint Grammar (Karlsson et. al., 1991) and its word based notational system of tags integrating both morp ...

... Fred Karlsson presenting his Constraint Grammar formalism for context based disambiguation of morphological and syntactic ambiguities. I was fascinated both by the robustness of the English Constraint Grammar (Karlsson et. al., 1991) and its word based notational system of tags integrating both morp ...

Planning at the Phonological Level during Sentence Production

... the extent of phonological planning is determined by phrase boundaries (major grammatical or phonological phrase boundaries), not by phonological word boundaries (a content word and any unstressed function word) suggesting that entire phrases are phonologically planned before articulation begins. We ...

... the extent of phonological planning is determined by phrase boundaries (major grammatical or phonological phrase boundaries), not by phonological word boundaries (a content word and any unstressed function word) suggesting that entire phrases are phonologically planned before articulation begins. We ...

Contextually-Dependent Lexical Semantics

... contextual effects, such as the structure of rhetorical relations in discourse and pragmatic constraints on co-reference. What is necessary is for research to tackle the difficult question of how other components in the natural language interpretation process interact with the lexicon to disambiguat ...

... contextual effects, such as the structure of rhetorical relations in discourse and pragmatic constraints on co-reference. What is necessary is for research to tackle the difficult question of how other components in the natural language interpretation process interact with the lexicon to disambiguat ...

The acquisition of a unification-based generalised categorial grammar

... input from a corpus of spontaneous child-directed transcribed speech annotated with logical forms and sets the parameters based on this input. This framework is used as a basis to investigate several aspects of language acquisition. In this thesis I concentrate on the acquisition of subcategorisatio ...

... input from a corpus of spontaneous child-directed transcribed speech annotated with logical forms and sets the parameters based on this input. This framework is used as a basis to investigate several aspects of language acquisition. In this thesis I concentrate on the acquisition of subcategorisatio ...

Microsyntax

... mechanisms of meaning amalgamation than in the meanings as such. For meaning representation, it uses a logical metalanguage which is less suitable for describing the spectrum of linguistically relevant meanings. On the other hand, this metalanguage is much more convenient for describing logical prop ...

... mechanisms of meaning amalgamation than in the meanings as such. For meaning representation, it uses a logical metalanguage which is less suitable for describing the spectrum of linguistically relevant meanings. On the other hand, this metalanguage is much more convenient for describing logical prop ...