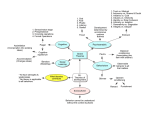

Unit V: Behaviorism & Learning/Conditioning

Overview
There are 3 core schools of thought when it comes to answering “We learn by . . . .”
1) We learn by association: we learn that certain stimuli or events are associated with other stimuli or events. Called Classical or Pavlovian Conditioning (Pavlov, Watson, Rescorla, Garcia)
2) We learn by the consequences or results of our actions. Called Operant Conditioning (Skinner, Thorndike, Tolman, Seligman)
3) We learn by watching the consequences of others’ actions. Called Modeling or Observational Conditioning (Bandura)

Conditioning: every time you see the word conditioning, immediately think of it as the word learning. Conditioning is the changing of behaviors in response to new outcomes, new consequences, or new associations.
Learning: a relatively permanent change in an organism’s behavior due to experience

Classical conditioning
Associative learning: learning that certain events occur together and responding accordingly
Classical conditioning (a.k.a. Pavlovian conditioning, a.k.a. respondent conditioning): type of learning in which an organism begins to associate two stimuli as being related. This learning eventually creates a scenario in which the appearance of one stimulus causes anticipation of its companion stimulus. If an organism has a behavior for one stimulus and learns that it and another stimulus occur together, it may start to show the behavior in anticipation of the second stimulus.
“Humans are in essence, nothing more than anticipation machines”

Pavlov’s initial discovery
Ivan Pavlov began his work studying the biology of the digestive system of dogs. Over time, he began to notice that the dogs did not just salivate at the sight of food, but also at the sight or sound of their feeder, the feedbag, the introduction of an empty food dish, etc.
Pavlov made the leap that perhaps the dog had become so experienced with its feeding procedures that it recognized clues or incidents that would precede feeding, and it salivated in anticipation when these clues (stimuli) happened. Pavlov took it a step further and suggested that dogs could perhaps learn to pair any type of normally inconsequential stimulus (like a bell or tone) with food and salivate in anticipation once they connected the stimulus to food.

Before any training or conditioning:
1) Unconditioned stimulus (US) (“unlearned stimulus”): the stimulus that causes an automatic, non-thinking response. In Pavlov’s experiment, the US was the food.
2) Unconditioned response (UR) (“unlearned response”): the automatic, non-thinking response to the unconditioned stimulus. In Pavlov’s experiment, the UR was salivating at the sight of food.
3) Neutral stimulus (NS): any stimulus that provokes no response from an organism or has no meaning to it. Before the bell or tone was paired with food, it was an NS. Pavlov could ring the bell all day long at random times, but it remained neutral because it was not yet paired with another stimulus, so it stayed inconsequential to the dog.

Once training and conditioning began:
4) Conditioned stimulus (CS) (“learned stimulus”): a stimulus that an organism learns is associated with, and will predict or precede, another stimulus. In Pavlov’s experiment, the CS was the bell or tone after it had been paired with feeding long enough that the dog learned the bell meant the arrival of food.
5) Conditioned response (CR) (“learned response”): the response to the presence of the conditioned stimulus. In Pavlov’s experiment, the CR was salivating at the sound of the bell or tone once it had become a CS. Once the CS was established, the CR could occur even without the presence of food.
Acquisition: the term Pavlov used to describe the procedure of learning by pairing a neutral stimulus (bell) with an unconditioned stimulus (food) until the organism successfully associated the NS with the US, causing the NS to become a CS and invoking the CR.

Findings of Pavlov’s initial work
Because the CS causes a CR with little to no rational thinking behind it, this response of classical conditioning is often seen as primitive and instinctual in nature.
Because acquisition takes time, Pavlov tested for the ideal timing between the NS and the US to maximize learning speed. He determined that ½ second is ideal between the bell tone and the presentation of food. While dogs could acquire the CR with a gap of 30 seconds or even 5 minutes, ½ second provided the fastest learning curve.
Pavlov argued that, except in humans, acquisition largely cannot occur when the NS and US are paired too far apart. However, studies by Garcia showed that rats associated sweetened water with radiation nausea even though they did not get sick until several hours after drinking, and they eventually refused to drink.

Pavlov’s extended experimentation
1) Just like the boy who cried wolf too many times, a CS that only sporadically precedes, or stops preceding, a US will eventually slow or stop the CR. Pavlov called this extinction: learning through lack of association that a former CS apparently is an NS once again, so the CR stops occurring. Basically, what has become a conditioned stimulus can go right back to being neutral again if the rewards stop. (dog and fake fetch)
2) Pavlov also noticed that after extinction and a rest period, the former CS can trigger the CR again on its own. Pavlov called this spontaneous recovery. If the former CS, now an NS, starts to be paired again with the US, the organism relearns the CR much faster than the first time, because it remembers the previous learning.
3) Once Pavlov’s dogs were trained to respond to the bell, he noticed that any bell tone, or anything sounding like a bell, also generated the CR. He called this generalization: treating anything similar to the CS as the CS and believing it also precedes the US. Organisms will naturally generalize until taught otherwise.
4) Through more association, Pavlov also showed that he could cause the dogs to respond only to a certain bell tone but not others, keeping one tone a CS and all others NS. He called this discrimination: distinguishing between the CS that signals the US and similar stimuli that do not.

Garcia & Koelling prove sensible discrimination of stimuli
Research conducted by Garcia and Koelling demonstrated that animals learn to associate certain conditioned stimuli with unconditioned stimuli based on how salient they are: how reasonable or obvious a connection they should have. Rats were exposed to sweetened water and to loud noises. The rats were also shocked and exposed to radiation that made them nauseous. Over time, the rats associated the loud noise with a coming shock, and the sweetened water with their nausea. The loud noise was never connected to the nausea, nor the sweetened water to the shock.

Rescorla’s model for classical conditioning
Robert Rescorla devised his own classical conditioning theory to show the cognitive logic of an animal or human picking up the conditioning. Rescorla argued that successful classical conditioning was strongly dependent on how reliably the stimulus preceded the unconditioned stimulus. A sporadic stimulus would not make a cognitive impression on the organism like a frequent one, so reliability was key. For example, if you cross a busy intersection and only occasionally encounter bad drivers, you may not classically condition fear to that intersection, but if you encounter bad drivers every time you are at the intersection, you will. It’s not just frequency of pairing as Pavlov indicated, it’s also reliability.
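Rescorla’s point about reliability can be made concrete with a small simulation. The notes do not give a formula, so the sketch below assumes the standard Rescorla-Wagner update rule in a simplified form (one learning rate for every trial); the learning rate, trial count, and "every 4th trial" pattern are illustrative values chosen for this example only.

```python
def rescorla_wagner(us_present, learning_rate=0.2, max_strength=1.0):
    """Return the CS-US associative strength V after each trial.

    us_present: sequence of booleans, True when the US follows the CS.
    learning_rate and max_strength are illustrative, not from the notes.
    """
    strength = 0.0
    history = []
    for us in us_present:
        # The outcome the animal actually experiences on this trial.
        target = max_strength if us else 0.0
        # Simplified Rescorla-Wagner step: move V toward the outcome
        # in proportion to the prediction error (target - V).
        strength += learning_rate * (target - strength)
        history.append(strength)
    return history


trials = 40
reliable = [True] * trials                      # US follows the CS every time
sporadic = [i % 4 == 0 for i in range(trials)]  # US follows the CS every 4th time

v_reliable = rescorla_wagner(reliable)
v_sporadic = rescorla_wagner(sporadic)

print(f"reliable CS: final V = {v_reliable[-1]:.2f}")
print(f"sporadic CS: final V = {v_sporadic[-1]:.2f}")
```

Under these assumptions the reliably paired CS ends up with a much stronger association than the sporadically paired one, even though both were presented the same number of times. The trials without the US also act as extinction trials, which is why the sporadic CS never builds up much strength.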
Conditioning Taste Preferences
Taste aversion (a.k.a. Garcia Effect): associating consumed food with later illness, causing an avoidance of that food.
Wolves were conditioned to stop eating sheep when ranchers baited them with poisoned sheep meat.
In humans, many of our taste aversions are explained by bad associations with food (even if that food did not cause the illness).
Taste aversion can even be generated by association with another of the five senses (fudge experiment)

John Watson’s Baby Albert Experiment
John Watson believed that human emotions and behavior were largely the result of conditioning. He believed we are born neutral to all but a few primary need stimuli. By associating stimuli with pleasurable or painful stimuli, we develop emotional responses to our world. He demonstrated this in his “Little Albert” experiments around 1920. The baby basically neither feared nor loved anything until Watson conditioned him to.
Tabula Rasa: Latin for “clean slate”. Term used by Watson to show that humans have very few natural behaviors (ex. responding to pain, intense heat/cold, loud sounds, bright lights, food and water) and that most must be conditioned (ex. learning to love money, fear snakes, like sweets, desire popularity, etc.)
Biological preparedness: while Watson showed you can classically condition a fear to anything, certain phobias develop faster than others due to biological safety: snakes, spiders, and heights over Watson’s triggers of bunnies and fur.

Classical Conditioning Strategies that Don’t Work
1) Trace conditioning: pairing the CS and the US with a large amount of time between them. (Garcia’s experiment showed it is possible, it just takes much longer.)
2) Simultaneous conditioning: presenting the CS and the US at the same time. Ineffective because the animal doesn’t need to associate much. It is getting the reward at the same time, so how can the CS be predictive?
3) Backward conditioning: presenting the US before the CS, reversing the order. Extremely ineffective: a stimulus that arrives after the US cannot predict it.

Operant Conditioning
Operant conditioning/behavior: behavior shaped by the environment through positive and negative consequences. In simpler terms: we do something, it has some kind of consequence, and based on that consequence we alter future behavior.

Edward Thorndike’s Law of Effect
Law of effect: term coined by Edward Thorndike that says if an action is followed by a positive consequence, the action is more likely to be repeated in the future, and if an action is followed by a negative consequence, it is less likely to be repeated.
Thorndike placed cats in puzzle boxes with food waiting on the outside. Through trial and error, the cats eventually got out of the box. Each time, the cats solved the puzzle faster and faster. (clip)

B.F. Skinner’s work with operant conditioning
B.F. Skinner used operant chambers (a.k.a. Skinner boxes) with rats or pigeons, where their actions were trained through the reward of food (clip)
Reinforcer: any event or reward that strengthens behavior
Punisher: any event or undesired outcome that weakens behavior
Positive reinforcement: increasing behavior by presenting a positive stimulus (such as food or money)
Negative reinforcement (negative reinforcer): increasing behavior by stopping or reducing a negative stimulus (such as headaches or stress)
Positive punishment: stopping an undesired behavior by inflicting a painful stimulus (spanking)
Negative punishment/omission training: stopping an undesired behavior by taking away something desired (taking away car keys/favorite toy)
Most psychologists agree that parents should use punishment and positive reinforcement together (spank the kid for making a mess, reward the kid for not making one next time; or time out for making a mess, reward the kid for not making one next time)

Primary vs. Conditioned reinforcers
Primary reinforcer: any stimulus that is important because it fulfills a survival need (food, water)
Conditioned reinforcer/secondary reinforcer/learned reinforcer: any stimulus that has meaningful power to an organism because of its association with a primary reinforcer (ex.-money) (monkey economics examples)

Continuous vs. Partial reinforcement
Continuous reinforcement: rewarding a desired response every time. Pro: has a fast learning rate. Con: also has a fast extinction rate.
Partial reinforcement: rewarding a desired response only part of the time. Con: has a slow learning rate. Pro: also has a slow extinction rate.

Skinner’s Four schedules of reinforcement
Reinforcement schedules are built off of two principles: Is the schedule based on time or on number of attempts? Is the schedule pattern known or unknown?
Fixed-ratio: based on a known number of attempts between successes (ex.-punch cards)
Variable-ratio: based on an unknown number of attempts between successes (ex.-slot machines)
Fixed-interval: based on a known amount of time between successes (ex.-monthly paycheck)
Variable-interval: based on an unknown amount of time between successes (ex.-the weather)
Reinforcement schedules help to explain the intensity and consistency levels of behavior.

Shaping vs. Chaining Conditioning
Shaping: operant conditioning by guiding actions through reinforcers to get closer and closer to the desired behavior until it is learned (dog training, hot-cold game)
Chaining: the process of learning a complicated behavior one piece at a time, and then putting all the pieces together at once (swimming, pitching) (don’t confuse with shaping, though shaping could get the same results, just in a different manner)

Escape vs. Avoidance Conditioning
Escape learning: behaviors attempting to stop an aversive stimulus that is already affecting you (type of negative reinforcement) (student causes a scene to get removed from a class he hates)
Avoidance learning: behaviors that attempt to keep an aversive stimulus from even getting started (type of negative reinforcement) (student skips class)

Latent Learning
Tolman showed that operant learning occurs even when it isn’t necessarily obvious immediately. In his experiment, one group of rats ran a maze twenty times and was rewarded for finishing each time. Not surprisingly, they got faster with each trial. A second group of rats ran the maze twenty times without ever being rewarded for finishing and, not surprisingly, showed little improvement in time. A third group of rats ran it ten times with no reward, but was then rewarded for the second set of ten runs. Even on the very first of the rewarded runs, the third group ran just as fast as, if not faster than, the first group, demonstrating that they had known the maze all along and just never had a reason to run it fast.
Latent learning: learning that is present but not demonstrated until there is incentive to do so.
Cognitive map: a mental, visual representation of one’s environment
Tolman showed that not all learning is done as a result of incentives or consequences, but that this learning is often not displayed until incentives or consequences arise.
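Returning to Skinner’s four schedules of reinforcement from the operant conditioning notes above, the two questions (attempts or time? known or unknown?) can be sketched as four small rules. This is a hypothetical illustration, not Skinner’s procedure: the function names, the once-per-second responding, and the way the "unknown" schedules draw their values are all assumptions made for this example.

```python
import random


def fixed_ratio(n):
    """Reward every n-th response (known number of attempts, e.g. punch card)."""
    count = 0

    def respond(_time):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """Reward after an unknown number of responses, averaging mean_n.

    Assumption: each response pays off with probability 1/mean_n,
    similar to a slot machine.
    """
    def respond(_time):
        return rng.random() < 1.0 / mean_n

    return respond


def fixed_interval(seconds):
    """Reward the first response made at least `seconds` after the last reward."""
    last_reward = 0.0

    def respond(time):
        nonlocal last_reward
        if time - last_reward >= seconds:
            last_reward = time
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but the required wait is redrawn after each reward."""
    last_reward = 0.0
    wait = rng.uniform(0, 2 * mean_seconds)

    def respond(time):
        nonlocal last_reward, wait
        if time - last_reward >= wait:
            last_reward = time
            wait = rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return respond


def count_rewards(schedule, responses=100, seconds_between=1.0):
    """Make evenly spaced responses and count how many are rewarded."""
    return sum(schedule(i * seconds_between) for i in range(1, responses + 1))


rng = random.Random(0)  # fixed seed so repeated runs give the same counts
print("fixed-ratio 5:        ", count_rewards(fixed_ratio(5)))
print("variable-ratio 5:     ", count_rewards(variable_ratio(5, rng)))
print("fixed-interval 10 s:  ", count_rewards(fixed_interval(10)))
print("variable-interval 10 s:", count_rewards(variable_interval(10, rng)))
```

In this sketch the ratio schedules ignore the clock and the interval schedules ignore how many responses were made, which is the distinction the first question in the notes is drawing. The fixed schedules are fully predictable, while the variable ones depend on random draws the responder cannot see.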
Modeling/Observational Learning
Observational learning, a.k.a. vicarious (experienced through others) learning: learning by observing others and then changing our own behavior
Modeling: the process of observing and then either imitating or disimitating behavior (don’t confuse modeling with imitation: imitation is a type of modeling, but modeling isn’t necessarily learning by imitation)
Albert Bandura’s Social Learning Theory says that many of our actions and behaviors are learned through modeling the people around us.
Mirror neurons: clusters of neurons in the cerebral cortex that activate when watching someone else perform a behavior or action. They are basically the observation “copy cat” learning regions of the brain that let you duplicate what you are witnessing. (Research shows that the same regions of the brain fire when we watch someone do something as when we do it ourselves. So someone else’s behaviors can make as strong an impression on our brain as our own actions.) (Survival purposes?)
“Do as I say, not as I do,” is a bad expression by parents. Bandura noted that almost all childhood behavior is modeled on a combination of what people say and do. (When the two conflict, kids pay more attention to behavior than words) (commercial clip)
Albert Bandura’s Bobo doll experiment demonstrated modeling of anger (clip). The experiment went on to show that children learned anger behaviors even when adults weren’t actually trying to teach them.
T.V. violence is linked to child violence. The largest detriment is that most T.V. violence is either rewarded or goes unpunished, sending the wrong message to children.
Desensitization: the process of becoming less emotional about, or less affected by, graphic pictures or ideas over time. This causes these ideas to become almost accepted and commonplace.

Conditioning terms that don’t fit anywhere
Superstitions: conditioned beliefs/behaviors with no statistical correlations to support them.
So are superstitions the result of classical, operant, or observational conditioning? Basically, yes. If you walk under a ladder and then trip and break your arm, and that causes you to avoid walking under a ladder in the future, you’ve altered your behavior. So there’s operant conditioning. If you now associate walking under ladders with bad luck, you’ve made a classical conditioning pairing. If a child observes other people intentionally avoid walking under ladders and mimics the behavior even without entirely understanding why, there’s your observational conditioning. So superstitions are influenced by all of them.
Premack principle: some reinforcers and punishers work while others won’t. A preferred activity can be used to reinforce a less preferred one. You have to know the person/animal to know what works as a reinforcer and punisher. The person/animal must see the thing as a reinforcer or punisher for it to change behavior. (Ex.- twins: one loves to play an instrument but hates to exercise, one loves to exercise but hates playing instruments. Parents could make one child exercise or else get no instrument time, and make the other child play an instrument or else get no exercise.) (Ex.- seed would be a great reinforcer for birds, but not for cats. Ham would be a great reinforcer for cats, but not birds)
Instinctive drift: the tendency for reinforcers to have no effect on animals if they are asked to go against their own genetic instincts. (Ex.- rats won’t walk backwards; they will not do it for a reward because the instinct is just too strong to overcome through conditioning)
Habituation: the gradual loss of response or feelings towards something as it occurs again and again. (Ex.- after buying a new car or home, there is a surge of happiness and excitement. Over time, it will become more and more “same old same old,” so it will cause less and less reaction on your part. A new phone, new haircut, or new clothes/shoes generate behavioral reactions, but over time, as you get accustomed to them, the newness wears off)

YouTube Channel: “SciShow Crash Course Psychology” videos relevant to this unit: #11 & 12