* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Direct3D 9

Color vision wikipedia , lookup

List of 8-bit computer hardware palettes wikipedia , lookup

Color Graphics Adapter wikipedia , lookup

Comparison of OpenGL and Direct3D wikipedia , lookup

Waveform graphics wikipedia , lookup

InfiniteReality wikipedia , lookup

Apple II graphics wikipedia , lookup

Spatial anti-aliasing wikipedia , lookup

Rendering (computer graphics) wikipedia , lookup

Original Chip Set wikipedia , lookup

Indexed color wikipedia , lookup

BSAVE (bitmap format) wikipedia , lookup

Framebuffer wikipedia , lookup

Hold-And-Modify wikipedia , lookup

Graphics processing unit wikipedia , lookup

General-purpose computing on graphics processing units wikipedia , lookup

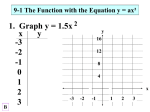

Direct3D 9
Or why programmable hardware kicks ass
Matthew M. Trentacoste

Introduction
► The Direct3D API has changed fundamentally to keep pace with changes in hardware
► The API has been adjusted to fit the paradigm shift that has occurred in real-time graphics
► It is built around the fact that someone programming real-time graphics is writing code for two asymmetric processors: the CPU and the GPU

Differences
► OpenGL is not so much bad as outdated
► OpenGL was very well designed, but that was 15 years ago
► Everything that has happened in real-time graphics since then has been stapled on
► Direct3D is more of a pain for beginners learning to program graphics
► But it is much more elegant once you are experienced enough to make full use of the functionality it provides
► It is much less of a state machine than OpenGL

Differences (2)
► Direct3D has no immediate mode: you can't just specify vertices, colors, etc. directly from code
► It is built around a stream-based model of data
► All data must be put into a buffer of elements before it is loaded onto the hardware
► The goal is to give graceful control over the flow of data between CPU and GPU while staying efficient
► The API is getting there: streamlined functionality means fewer objects are needed to accomplish every task

Direct3D 9 API Object List
► IDirect3DSwapChain9 (back buffers)
► IDirect3DTexture9 (textures)
► IDirect3DVolume9 (volume textures)
► IDirect3DVertexBuffer9 (vertex lists)
► IDirect3DIndexBuffer9 (index lists)
► IDirect3DSurface9 (render targets)
► IDirect3DStateBlock9 (render state container)
► IDirect3DVertexDeclaration9 (vertex format)
► IDirect3DVertexShader9 (vertex shader)
► IDirect3DPixelShader9 (pixel shader)

Other Reasons D3D Rocks
► D3DX!!!!
► All the math you could possibly need for graphics is already written
► Vectors, matrices, quaternions, textures, models, etc.
► Optimized code that uses the special instruction sets (3DNow!, SSE2, and so on)
► The best solution for almost anything you could want to do, short of some crazy special case

Cool Shit
► Still with me?
► High-order primitives
► Adaptive tessellation
► Displacement maps
► And pretty pictures of them

Higher-Order Primitives
► Current primitives are not ideal for representing smooth surfaces
► Direct3D 9 supports points, lines, triangles, and grid primitives
► Higher-order interpolation methods, such as cubic polynomials, allow curved shapes to be rendered more accurately
► The application need only provide a desired level of tessellation
► Data is transmitted using standard triangle syntax that includes normal vectors

Adaptive Tessellation
► A patch is tessellated adaptively, based on the depth of each control vertex in eye space
► The tessellation level is computed per vertex, from the API-supplied value scaled by 1.0 / Z_eye
► The surface is then tessellated accordingly
► The API takes triangles, defines higher-order surfaces from them, and then tessellates those surfaces as needed
► Meaning: more detail the closer you get

Demo Time #1

Displacement Mapping
► Adaptive tessellation lets us use a texture to deform a surface
► A height-field texture is spread across a higher-order surface
► The surface is tessellated until the geometry is detailed enough to represent the height field
► The shape of the surface is changed to match the displacement, as opposed to merely perturbing the surface normal so that it looks deformed
► What bump maps wish they were

Demo Time #2

DirectX Graphics Architecture
[Diagram: vector data from the vertex buffer (streams Vec0 to Vec3 carrying vertex components such as Pos, Color, TC1, TC2) flows through geometry ops (vertex shader), primitive ops, and pixel ops (pixel shader with samplers reading Tex0 to Tex2) to output pixels on the image surface]

Pipeline Overview
► Create VertexBuffer (where the model goes)
► Set up Vertex Stream (put the model there)
► Define VertexDecl (what the data means)
► Vertex Shader Object (operate on the model)
► Pixel Shader Object (render the model)
► FrameBuffer blender (add the image of the model to the scene)

Vertex Declaration Object
► New syntax for describing vertex formats to the DMA engine and for tessellator behavior
► New object: IDirect3DVertexDeclaration9
► Separately creatable: CreateVertexDeclaration()
► Separately settable: SetVertexDeclaration()
    Settable independently of the vertex shader

Default Semantics
► VertexDecl now supports a "usage" field
    Position, Normal, Tangent, Binormal, etc.
    Provided to enable default semantics
► Allows the implementation to connect shaders together without requiring a fixed register convention
► Acts as a symbol table for run-time linking of shaders to the core API, and therefore to the hardware
► No additional policy is imposed over DirectX 8
► Default semantics can be overridden
► Deals with concepts, not memory addresses

DirectX 8 Vertex Declaration
[Diagram: streams Strm0 and Strm1 feed a vertex layout whose elements map by position to input registers v0 and v1 (with a skip); the declaration is bound to the shader handle, and the vs 1.1 shader program reads the registers directly (mov r0, v0 …)]

New Vertex Declaration
[Diagram: streams feed a vertex layout of pos, norm, diff; the declaration tags each element with its usage and is no longer tied to the shader handle, while the vs 1.1 shader program declares the inputs it consumes (dcl_position v0, dcl_diffuse v1, mov r0, v0 …)]

Vertex Shader Architecture
[Diagram: input registers Vec0 to Vec15, address register A0, temporaries R0 to R11, and constants Const0 to Const95 feed the vertex ALU, which writes the outputs Hpos, TC0 to TC3, Color0, and Color1]

Vertex Shaders: Vertex Shader 2.0 Register Reference
Name | r/w | Description | Count | Port count
bn | r | Boolean | 16 | 1
in | r/w | Integer (loop control) | 16 | 1
an | * | 4-D address | 1 | 1
cn | r | Constant | 256 | 1
rn | r/w | Temporary | 12 | 3
vn | r | Vertex input | 16 | 1
* an can only be written by mova, and the result is used as an integer offset in relative addressing.
Note: port count = the number of different registers of that class that can be used in a single instruction.

Math Instructions
► Parallel (componentwise) ops: add, sub, mul, mad, frc, cmp
► Vector ops: dp3, dp4
► Scalar ops: rcp, rsq, exp2, log2
► Macros: LRP, NRM3, POW, CRS, SINCOS, SGN, ABS

Vertex Shaders: Instruction Reference
► max: maximum
► min: minimum
► sge: set if greater than or equal
► slt: set if less than
► rcp: reciprocal
► rsq: reciprocal square root
► expp: exponential, 16-bit precision
► logp: logarithm, 16-bit precision

Vertex Shader Flow Control
► DirectX 9 vertex shaders (vs_2_0) support flow control
► The result is a "structured assembly" language
► Control logic is based on constants only
► Required by ISVs to solve the "varying number of lights" problem
    Brings support on par with the nonprogrammable pipeline
► Enable/disable environment mapping, etc.
► Ideally, a better skinning approach for the "varying number of bones" problem

Instruction Counts vs. Slots
► Flow control means slots != counts
► The instruction store holds 256 instructions, but more instructions than that can be executed
► The executed instruction count limit is higher
► Recommendation: do not exceed 1024 executed instructions

Sampler State Separation
► TextureStageState (TSS) has been split
    One category for texture sampler data
    One category for texture iterator control
► Why?
    Sampler state has 16 elements, since 16 textures may be sampled in one pass
    Other state has only 8 elements
    Much of that state exists for legacy pipelines
► All enum indices remain the same
    DDI impact is minimal

Pixel Shaders
► Floating-point data precision is supported
► Enables photoreal rendering of high-dynamic-range scenes (cf. Debevec)
► The pixel shader ALU must support
    At least s10e5 precision for color data
    At least s17e6 precision for all other data
► This includes any 32-bit float input data, such as texture iterators or reads from 32-bit float texture formats
► The _pp modifier is supported on any instruction
    It highlights operations where reduced precision is acceptable for performance

Demo Time #3

Pixel Shader 2.0 Architecture
[Diagram: color inputs v0 and v1, texture coordinates t0 to t7, temporaries r0 to r11, and constants c0 to c31 feed the pixel ALU, which writes the color outputs oC0 to oC3]

Pixel Shaders: Pixel Shader 2.0 Register Reference
Name | r/w | Description | Count | Port count
vn | r | Color iterators | 2 | 1
tn | r/w | Texcoord iterators | 8 | 1
rn | r/w | Temporary | 12 | 3
cn | r | Constant | 32 | 1*
sn | r | Texture samplers | 16 | 1
Port count = the number of different registers of the same class that can be used in one instruction.

Texture Load Instructions
► Three instructions are provided in ps_2_0
► Standard texture load: texld r0, t1, s3
► Texture load with per-pixel LOD bias: texldb r0, t0, s2
    The bias value is stored in t0.w
► Projected texture load: texldp r1, t2, s0
    Does the perspective divide before the lookup

Dependent Reads
► Reads can be chained (serialized), but only to a maximum depth of 4. This sequence is legal:
    ps_2_0
    dcl t0.xy
    dcl_2d s0
    dcl_2d s1
    texld r0, t0, s0
    texld r1, r0, s1
    texld r2, r1, s1
    texld r3, r2, s1

Dependent Reads Rock
► What's so great?
► Textures become functional maps
► Any continuous function that takes up to 3 inputs and produces up to 4 outputs can be stored as a texture
► Pre-compute the results and store them in a texture
► Load the texture at the coordinates given by the input
► The texture returns the output as the value at that point

Dependent Reads Rock
► Results far too complicated to calculate in real time can be used on the GPU at minimal cost
► Stop thinking of textures as mere images; they are stores of data
► Lookup tables, noise generators, and most arbitrary functions can all be emulated quickly on current hardware

Multi-Render Target (MRT)
► A step towards rationalizing textures and vertex buffers
► Allows writing out multiple values from a single pixel shader pass
    Up to 4 color elements plus Z/depth
    Facilitates multipass algorithms
► A pixel shader can output four 4-vectors plus depth for each pixel
► That is 17 floating-point values that can be stored per pixel

MRT Example: Depth of Field
[Images: Original, Alpha of Original, Blurred, Result]
The images on the left are the original. The center is the alpha map: black is in focus, white is out of focus. We can move the focal plane anywhere we like.

MRT Example: Edge Detection
[Images: World Space Normals, Eye Space Depth, Edge Detect, Outlines. Images courtesy of ATI Technologies, Inc.]

MRT Example: Edge Detection
► Composite the outlines to get a cel-shaded effect
[Images courtesy of ATI]

High Level Shader Language
► Why?
► Because assembly sucks
► Allows all the things that make C so much better than machine code
► Pixel and vertex shader code can be separated from data
► No longer have to map elements of a stream to registers; it is done semantically

DirectX 8 Assembly
    tex t0            ; base texture
    tex t1            ; environment map
    add r0, t0, t1    ; apply reflection

DirectX 9 HLSL Syntax
    outColor = tex2D( baseTexture, baseTextureCoord ) + texCUBE( Environment, EnvironmentMapCoord );

Maybe more characters, but it makes much more sense

Datatypes
► ints, bools, floats, etc.
► All the things you know and love
► Plus things that make graphics easy, like vectors and matrices
► 1x1 up to 4x4 floating-point data as native types
► float4x4, not matrix[4][4]
► All operations are designed to operate natively on data types up to 4x4

DirectX 8 Vertex Declaration (again)
[Diagram repeated from the earlier DirectX 8 Vertex Declaration slide]

New Vertex Declaration (again)
[Diagram repeated from the earlier New Vertex Declaration slide]

Vertex Shader Input Semantics
► position[n]: untransformed position
► blendweight[n]: skinning blending weight
► blendindices[n]: skinning blending indices
► normal[n]: normal vector
► psize[n]: point size (particle systems)
► diffuse[n]: diffuse (matte) color
► specular[n]: specular (shiny) color
► texcoord[n]: texture coordinates
► tangent[n], binormal[n]: together with the normal vector, these make a 3D coordinate system

VS Output / PS Input Semantics
► Position: transformed position
► Psize: point size
► Fog: fog blending value
► color[n]: computed colors
► texcoord[n]: texture coordinates

Uses for Semantics
► A data binding protocol:
    Between vertex data and shaders
    Between pixel and vertex shaders
    Between pixel shaders and hardware
    Between shader fragments
► One smooth process for describing the flow of data in and out of the various elements of the render process

So…
► Yeah, we've got all this programmable hardware
► What does it really give us? OPTIONS!!!
► We are finally able to compute what we want
► No longer the fixed-function pipeline's bitch
► Can render Pong, even Wolfenstein, on the GPU
► Think of the GPU as a signal processor for vertex and pixel data, not merely a way to render pictures

Finally
► All graphics that use the fixed-function pipeline, i.e. the standard lighting equation, fundamentally look the same
► There are many hacks to work around this
► But you are still stuck with: ambient + diffuse + specular
► Programmability allows graphics programmers to tailor the look of their work to fit the content

Choose Your Look
► Pick a unique "look" and do it
► Toon: several methods
► Cheesy: unlit or flat shaded
► Retro: standard fixed-function pipelines
► Radiosity: soft lighting only
► Shadows: horror movie, Doom III
► Gritty: ultra realistic
► And many more

Time for Hands On

Hemisphere Model
[Diagram: a sky color and a ground color are combined by the hemisphere model into the final color]

Distributed Light Model
[Diagram: the hemisphere of possible incident light directions, at angle θ from the surface normal; the microfacet normal defines the axis of the hemisphere]

2-Hemisphere Model
[Diagram: sky color and ground color hemispheres, with angle θ]

Distributed Light Model
[Diagram: the hemisphere of possible incident light directions over a set of microfacets, angle θ]
Other facets can shadow this one: occlusion

Ray Cast Occlusion Model
[Diagram: rays cast from a microfacet]
Some rays hit this object, others miss it

Occlusion Representations
► The result can be stored in various ways
► Compute the ratio of hits to misses
    Occlusion factor: a single scalar parameter
    Should be weighted by cosine
► Use it to blend in a shadow color
► Sufficient for hemisphere lighting

Hemisphere Lighting + Occlusion
[Diagram: sky color, ground color, object color, and the occlusion factor are combined by the sphere model into the final color]

Back to Work
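The hemisphere lighting and occlusion slides can be sketched as plain arithmetic. The C++ below is a CPU-side illustration under stated assumptions, not the hands-on shader from the talk: the names `Vec3`, `RaySample`, `occlusionFactor`, and `hemisphereLight` are hypothetical, the sky/ground blend uses the common `0.5 + 0.5 * dot(N, up)` weighting, and the occlusion factor is taken as the cosine-weighted fraction of cast rays that hit geometry (hits over all rays rather than hits over misses, so the factor stays in [0, 1]). That factor is then used to blend toward a shadow color, as the slides describe.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Linear interpolation: returns a when t == 0 and b when t == 1.
Vec3 lerp(const Vec3& a, const Vec3& b, float t) {
    return {a.x + (b.x - a.x) * t, a.y + (b.y - a.y) * t, a.z + (b.z - a.z) * t};
}

// Componentwise color modulation.
Vec3 mul(const Vec3& a, const Vec3& b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }

// Hypothetical record for one ray cast from the surface point.
struct RaySample {
    Vec3 dir;   // assumed unit length
    bool hit;   // true if the ray hit other geometry
};

// Cosine-weighted occlusion factor: 0 = fully open, 1 = fully occluded.
float occlusionFactor(const Vec3& n, const std::vector<RaySample>& rays) {
    float hitWeight = 0.0f, totalWeight = 0.0f;
    for (const RaySample& r : rays) {
        float w = dot(n, r.dir);          // cosine of the angle to the normal
        if (w <= 0.0f) continue;          // ignore rays below the surface
        totalWeight += w;
        if (r.hit) hitWeight += w;
    }
    return totalWeight > 0.0f ? hitWeight / totalWeight : 0.0f;
}

// Hemisphere lighting: blend ground -> sky by how much the normal faces "up",
// modulate by the object color, then blend toward a shadow color by occlusion.
Vec3 hemisphereLight(const Vec3& n, const Vec3& up, const Vec3& sky, const Vec3& ground,
                     const Vec3& objectColor, const Vec3& shadowColor, float occlusion) {
    float t = 0.5f + 0.5f * dot(n, up);   // 1 when n == up, 0 when n == -up
    Vec3 lit = mul(objectColor, lerp(ground, sky, t));
    return lerp(lit, shadowColor, occlusion);
}
```

In a Direct3D 9 application the occlusion factor would plausibly be precomputed per vertex or baked into a texture, with `hemisphereLight` living in a shader; the CPU version only makes the arithmetic easy to check.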
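Returning to the "Adaptive Tessellation" slide, the per-vertex rule (API value scaled by 1.0 / Z_eye) amounts to a one-line formula. The sketch below is hypothetical: `adaptiveTessLevel` is not a Direct3D function, and the clamp range of [1, 64] and the treatment of vertices at or behind the eye are assumptions added so the result stays usable, not documented hardware limits.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: per-vertex tessellation level for adaptive tessellation.
//   apiLevel: the tessellation value supplied by the application
//   zEye:     depth of the control vertex in eye space
//   minLevel, maxLevel: clamp range (an assumption; real limits vary)
float adaptiveTessLevel(float apiLevel, float zEye,
                        float minLevel = 1.0f, float maxLevel = 64.0f) {
    if (zEye <= 0.0f) return maxLevel;           // assumption: at or behind the eye, use max detail
    float level = apiLevel * (1.0f / zEye);      // closer vertices (smaller zEye) get more detail
    return std::max(minLevel, std::min(level, maxLevel));
}
```

With an API value of 32, a vertex at eye depth 2 gets level 16 and one at depth 4 gets level 8, which is the "more detail the closer you get" behavior from the slide.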
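The "Dependent Reads Rock" slides describe textures as functional maps: precompute a function into a texture, then fetch it at the input coordinates. The C++ class below is a CPU stand-in for that idea, assuming a 2D single-channel float texture with texel-center sampling, clamp-to-edge addressing, and bilinear filtering. `LookupTexture2D` is a hypothetical name, real hardware filtering may differ in precision, and the sketch covers 2 inputs and 1 output, a subset of the "up to 3 inputs, up to 4 outputs" case on the slides.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

// CPU-side stand-in for a 2D float texture used as a lookup table.
// Texel (i, j) stores f evaluated at that texel's center in [0,1] x [0,1].
class LookupTexture2D {
public:
    LookupTexture2D(int width, int height, const std::function<float(float, float)>& f)
        : w_(width), h_(height), texels_(static_cast<std::size_t>(width) * height) {
        for (int j = 0; j < h_; ++j)
            for (int i = 0; i < w_; ++i)
                texels_[static_cast<std::size_t>(j) * w_ + i] = f((i + 0.5f) / w_, (j + 0.5f) / h_);
    }

    // Bilinear sample at (u, v) in [0,1], like a filtered texture fetch.
    float sample(float u, float v) const {
        float x = u * w_ - 0.5f, y = v * h_ - 0.5f;   // shift so texel centers land on integers
        int x0 = static_cast<int>(std::floor(x)), y0 = static_cast<int>(std::floor(y));
        float fx = x - x0, fy = y - y0;
        float a = fetch(x0, y0), b = fetch(x0 + 1, y0);
        float c = fetch(x0, y0 + 1), d = fetch(x0 + 1, y0 + 1);
        float top = a + (b - a) * fx;
        float bottom = c + (d - c) * fx;
        return top + (bottom - top) * fy;
    }

private:
    // Clamp-to-edge addressing.
    float fetch(int i, int j) const {
        i = std::max(0, std::min(i, w_ - 1));
        j = std::max(0, std::min(j, h_ - 1));
        return texels_[static_cast<std::size_t>(j) * w_ + i];
    }

    int w_, h_;
    std::vector<float> texels_;
};
```

For example, a table built from `[](float u, float v) { return std::pow(u, 1.0f + 127.0f * v); }` would let a shader fetch a specular power term instead of computing it; since that function is not bilinear, the lookup is only an approximation whose accuracy depends on the table resolution.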
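The "MRT Example: Depth of Field" slide describes a per-pixel alpha map (black in focus, white out of focus) used to blend the original image with a blurred copy. A minimal sketch of that arithmetic follows, assuming the blur amount grows linearly with distance from a focal depth; `blurFactor`, `composite`, `focalDepth`, and `focalRange` are hypothetical names, and the linear falloff is an assumption rather than the method used in the talk.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Color { float r, g, b; };

// First pass (hypothetical): the value written to the alpha channel.
// 0 = in focus (black in the alpha map), 1 = fully out of focus (white).
float blurFactor(float depth, float focalDepth, float focalRange) {
    if (focalRange <= 0.0f) return depth == focalDepth ? 0.0f : 1.0f;  // degenerate range
    float a = std::fabs(depth - focalDepth) / focalRange;
    return std::min(std::max(a, 0.0f), 1.0f);   // saturate to [0, 1]
}

// Second pass: per pixel, blend the sharp original toward the blurred copy by alpha.
Color composite(const Color& sharp, const Color& blurred, float alpha) {
    return {sharp.r + (blurred.r - sharp.r) * alpha,
            sharp.g + (blurred.g - sharp.g) * alpha,
            sharp.b + (blurred.b - sharp.b) * alpha};
}
```

Moving the focal plane is then just a matter of changing `focalDepth` between frames, which matches the slide's point that the focal plane can go anywhere.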