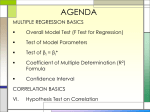

Chapter 15: Multiple Regression and Model Building

McGraw-Hill/Irwin. Copyright © 2014 by The McGraw-Hill Companies, Inc. All rights reserved.

15.1 The Multiple Regression Model and the Least Squares Point Estimate
15.2 Model Assumptions and the Standard Error
15.3 $R^2$ and Adjusted $R^2$ (this section can be read anytime after reading Section 15.1)
15.4 The Overall F Test
15.5 Testing the Significance of an Independent Variable
15.6 Confidence and Prediction Intervals
15.7 The Sales Territory Performance Case
15.8 Using Dummy Variables to Model Qualitative Independent Variables
15.9 Using Squared and Interaction Variables
15.10 Model Building and the Effects of Multicollinearity
15.11 Residual Analysis in Multiple Regression
15.12 Logistic Regression

LO15-1: Explain the multiple regression model and the related least squares point estimates.

15.1 The Multiple Regression Model and the Least Squares Point Estimate

Simple linear regression uses one independent variable to explain the dependent variable
  ◦ Some relationships are too complex to be described using a single independent variable

Multiple regression uses two or more independent variables to describe the dependent variable
  ◦ This allows multiple regression models to handle more complex situations
  ◦ There is no limit to the number of independent variables a model can use

Multiple regression has only one dependent variable

LO15-2: Explain the assumptions behind multiple regression and calculate the standard error.

15.2 Model Assumptions and the Standard Error

The model is

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_k x_k + \varepsilon$$

Assumptions for multiple regression are stated about the model error terms, the $\varepsilon$'s

LO15-3: Calculate and interpret the multiple and adjusted multiple coefficients of determination.
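As a rough illustration of Sections 15.1 and 15.2, the sketch below computes least squares point estimates $b_0, b_1, \dots, b_k$ by solving the normal equations, then the standard error $s = \sqrt{SSE / [n - (k+1)]}$. The slides do not show a computation, so everything here is an assumption made for illustration: the data are made up, and the helper names `solve`, `least_squares`, `predict`, and `standard_error` are hypothetical. Statistical software would normally do this step.

```python
import math

def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot to limit rounding error.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def least_squares(xs, y):
    """Return [b0, b1, ..., bk], the estimates that minimize the sum of squared residuals."""
    rows = [[1.0, *r] for r in xs]  # leading 1 carries the intercept b0
    p = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    return solve(xtx, xty)  # normal equations: (X'X) b = X'y

def predict(b, row):
    """Point prediction b0 + b1*x1 + ... + bk*xk for one observation."""
    return b[0] + sum(bj * xj for bj, xj in zip(b[1:], row))

def standard_error(xs, y, b):
    """s = sqrt(SSE / (n - (k + 1))), with k independent variables."""
    n, k = len(y), len(b) - 1
    sse = sum((yi - predict(b, r)) ** 2 for r, yi in zip(xs, y))
    return math.sqrt(sse / (n - (k + 1)))

# Made-up data: five observations on two independent variables (x1, x2).
xs = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 5)]
y = [10, 7, 18, 19, 26]

b = least_squares(xs, y)
s = standard_error(xs, y, b)
print("point estimates:", b)
print("standard error:", s)
```

Solving the normal equations directly keeps the example dependency-free. It is a reasonable choice for a small, well-conditioned data set like this one, but it is not how production software handles larger or nearly collinear problems.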
15.3 $R^2$ and Adjusted $R^2$

This section can be covered anytime after reading Section 15.1.

1. Total variation is given by the formula $\sum (y_i - \bar{y})^2$
2. Explained variation is given by the formula $\sum (\hat{y}_i - \bar{y})^2$
3. Unexplained variation is given by the formula $\sum (y_i - \hat{y}_i)^2$
4. Total variation is the sum of explained and unexplained variation
5. The multiple coefficient of determination is the ratio of explained variation to total variation
6. $R^2$ is the proportion of the total variation that is explained by the overall regression model
7. The multiple correlation coefficient $R$ is the square root of $R^2$

LO15-4: Test the significance of a multiple regression model by using an F test.

15.4 The Overall F Test

To test $H_0: \beta_1 = \beta_2 = \dots = \beta_k = 0$ versus $H_a$: at least one of $\beta_1, \beta_2, \dots, \beta_k \neq 0$, the test statistic is

$$F(\text{model}) = \frac{(\text{Explained variation})/k}{(\text{Unexplained variation})/[n - (k+1)]}$$

Reject $H_0$ in favor of $H_a$ if $F(\text{model}) > F_\alpha$ or p-value $< \alpha$, where $F_\alpha$ is based on $k$ numerator and $n - (k+1)$ denominator degrees of freedom

LO15-5: Test the significance of a single independent variable.

15.5 Testing the Significance of an Independent Variable

A variable in a multiple regression model is not likely to be useful unless there is a significant relationship between it and $y$

To test significance, we use the null hypothesis $H_0: \beta_j = 0$ versus the alternative hypothesis $H_a: \beta_j \neq 0$

LO15-6: Find and interpret a confidence interval for a mean value and a prediction interval for an individual value.
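The quantities in Sections 15.3 and 15.4 follow from two sums of squares plus the sample size $n$ and the number of independent variables $k$. The sketch below works through the arithmetic with made-up inputs. The function name `model_summary` is hypothetical. The adjusted $R^2$ formula is not spelled out in the slides; the code uses the usual definition $\left(R^2 - \frac{k}{n-1}\right)\frac{n-1}{n-(k+1)}$ as an assumption.

```python
import math

def model_summary(total_variation, unexplained_variation, n, k):
    """Compute R^2, adjusted R^2, R, and F(model) from the variation decomposition."""
    # Total variation = explained variation + unexplained variation.
    explained_variation = total_variation - unexplained_variation
    r2 = explained_variation / total_variation
    # Usual adjusted R^2 definition (an assumption; not stated in the slides).
    adj_r2 = (r2 - k / (n - 1)) * ((n - 1) / (n - (k + 1)))
    # Overall F statistic with k numerator and n - (k + 1) denominator df.
    f_model = (explained_variation / k) / (unexplained_variation / (n - (k + 1)))
    return {
        "explained": explained_variation,
        "r2": r2,
        "adj_r2": adj_r2,
        "r": math.sqrt(r2),
        "f_model": f_model,
    }

# Made-up inputs: n = 13 observations, k = 2 independent variables.
summary = model_summary(total_variation=100.0, unexplained_variation=20.0, n=13, k=2)
for name, value in summary.items():
    print(f"{name}: {value:.4f}")
```

With these inputs the explained variation is 80, so $R^2 = 0.8$, adjusted $R^2 = 0.76$, and $F(\text{model}) = (80/2)/(20/10) = 20$. Whether that F value leads to rejecting $H_0$ still depends on comparing it with $F_\alpha$ or on the p-value, which this sketch does not compute.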
15.6 Confidence and Prediction Intervals

The point on the regression line corresponding to particular values $x_{01}, x_{02}, \dots, x_{0k}$ of the independent variables is

$$\hat{y} = b_0 + b_1 x_{01} + b_2 x_{02} + \dots + b_k x_{0k}$$

It is unlikely that this value will equal the mean value of $y$ for these $x$ values

Therefore, we need to place bounds on how far the predicted value might be from the actual value

We can do this by calculating a confidence interval for the mean value of $y$ and a prediction interval for an individual value of $y$

LO15-7: Use dummy variables to model qualitative independent variables.

15.8 Using Dummy Variables to Model Qualitative Independent Variables

So far, we have only looked at including quantitative data in a regression model

However, we may wish to include descriptive qualitative data as well
  ◦ For example, we might want to include the gender of respondents

We can model the effects of different levels of a qualitative variable by using what are called dummy variables
  ◦ Also known as indicator variables

LO15-8: Use squared and interaction variables.

15.9 Using Squared and Interaction Variables

The quadratic regression model relating $y$ to $x$ is

$$y = \beta_0 + \beta_1 x + \beta_2 x^2 + \varepsilon$$

where:

1. $\beta_0 + \beta_1 x + \beta_2 x^2$ is the mean value of the dependent variable $y$
2. $\beta_0$, $\beta_1$, and $\beta_2$ are regression parameters relating the mean value of $y$ to $x$
3. $\varepsilon$ is an error term that describes the effects on $y$ of all factors other than $x$ and $x^2$

LO15-9: Describe multicollinearity and build a multiple regression model.
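To make the dummy variables of Section 15.8 and the squared term of Section 15.9 concrete, the sketch below builds the columns a regression routine would receive. It is illustrative only: the variable `locations`, its levels, the `x` values, and the helper `dummy_columns` are all hypothetical. The code also assumes the common convention of using $m - 1$ dummy variables for a qualitative variable with $m$ levels, with one level serving as the baseline, which the slides do not state.

```python
def dummy_columns(values, baseline):
    """Encode a qualitative variable with m levels as m - 1 dummy (0/1) columns.

    The baseline level gets all zeros; every other level gets a 1 in its own column.
    """
    levels = sorted(set(values) - {baseline})
    rows = [[1 if value == level else 0 for level in levels] for value in values]
    return levels, rows

# Hypothetical qualitative variable with three levels, observed four times.
locations = ["street", "mall", "downtown", "mall"]
levels, dummies = dummy_columns(locations, baseline="street")

# Hypothetical quantitative variable observed alongside it.
x = [2.0, 3.0, 4.0, 5.0]

# Each design row: intercept, x, x squared, then the dummy columns.
design = [[1.0, xi, xi ** 2, *d] for xi, d in zip(x, dummies)]

print("dummy columns for levels:", levels)
for row in design:
    print(row)
```

Dropping one level as the baseline avoids making the dummy columns sum to the intercept column, which would make the least squares estimates non-unique. The coefficient on each dummy is then read relative to the baseline level.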
15.10 Model Building and the Effects of Multicollinearity

Multicollinearity is the condition where the independent variables are dependent on, related to, or correlated with each other

Effects
  ◦ Hinders the ability to use t statistics and p-values to assess the relative importance of predictors
  ◦ Does not hinder the ability to predict the dependent (or response) variable

Detection
  ◦ Scatter plot matrix
  ◦ Correlation matrix
  ◦ Variance inflation factors (VIF)

LO15-10: Use residual analysis to check the assumptions of multiple regression.

15.11 Residual Analysis in Multiple Regression

For an observed value $y_i$, the residual is

$$e_i = y_i - \hat{y}_i = y_i - (b_0 + b_1 x_{i1} + \dots + b_k x_{ik})$$

If the regression assumptions hold, the residuals should look like a random sample from a normal distribution with mean 0 and variance $\sigma^2$

LO15-11: Use a logistic model to estimate probabilities and odds ratios.

15.12 Logistic Regression

Logistic regression and least squares regression are very similar
  ◦ Both produce prediction equations

The y variable is what makes logistic regression different
  ◦ With least squares regression, the y variable is a quantitative variable
  ◦ With logistic regression, it is usually a dummy 0/1 variable
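The slides do not write out the logistic prediction equation, so the sketch below assumes the standard one-predictor form $\hat{p}(x) = e^{b_0 + b_1 x} / (1 + e^{b_0 + b_1 x})$ to show how an estimated probability and an odds ratio might be read off a fitted model. The coefficient values and the function names `probability` and `odds` are hypothetical; in practice the estimates would come from statistical software.

```python
import math

def probability(b0, b1, x):
    """Estimated probability that y = 1 at x, assuming the standard logistic form."""
    z = b0 + b1 * x
    return math.exp(z) / (1.0 + math.exp(z))

def odds(p):
    """Odds of the event: probability it occurs divided by probability it does not."""
    return p / (1.0 - p)

# Hypothetical point estimates for a single independent variable x.
b0, b1 = -2.0, 0.5

p4 = probability(b0, b1, 4.0)  # here b0 + b1*x = 0
p5 = probability(b0, b1, 5.0)

# Odds ratio for a one-unit increase in x; under this model it equals exp(b1).
odds_ratio = odds(p5) / odds(p4)

print("estimated probability at x = 4:", p4)
print("estimated probability at x = 5:", p5)
print("odds ratio per unit increase in x:", odds_ratio)
```

At $x = 4$ the linear part is zero, so the estimated probability is 0.5 and the odds are 1. The odds ratio does not depend on the starting value of $x$, which is why it is a convenient single-number summary of a logistic coefficient.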