Chapter 8

PD-Method and Local Ratio

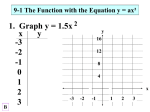

(4) Local ratio

These slides are adapted from a presentation by Reuven Bar-Yehuda.

Introduction

• The local ratio technique is a paradigm for designing approximation algorithms for NP-hard optimization problems.

• Its main attraction is its simplicity and elegance: it is very easy to understand, and it has surprisingly broad applicability.

A Vertex Cover Problem: Network Testing

• Testing a network involves placing probes on its vertices.

• A probe can determine whether each link incident to its vertex is working correctly.

• The goal is to check all the links using the minimum number of probes.

A Vertex Cover Problem: Precedence Constrained Scheduling

• Schedule a set of jobs on a single machine;

• Jobs have precedence constraints between them;

• The goal is to find a schedule which minimizes the weighted sum of completion times.

This problem can be formulated as a vertex cover problem [Ambuehl, Mastrolilli ’05].

The Local Ratio Theorem (for minimization problems)

Let $w = w_1 + w_2$. If $x$ is an r-approximate solution with respect to both $w_1$ and $w_2$, then $x$ is r-approximate with respect to $w$ as well.

Proof

Let $x_1^*$, $x_2^*$ and $x^*$ be optimal solutions for $w_1$, $w_2$ and $w$, respectively.

$w_1(x)/r \le w_1(x_1^*) \le w_1(x^*)$

$w_2(x)/r \le w_2(x_2^*) \le w_2(x^*)$

Adding the two lines gives $w(x)/r \le w(x^*)$.

Note that the theorem holds even when negative weights are allowed.

Vertex Cover example

Weight functions:

W = [41, 62, 13, 14, 35, 26, 17]

W1 = [0, 0, 0, 14, 14, 0, 0]

W2 = [41, 62, 13, 0, 21, 26, 17]

W = W1 + W2

[Figure: a graph on seven vertices labeled with the weights above.]

Vertex Cover example (step 1)

[41, 62, 13, 14, 35, 26, 17] = [0, 0, 0, 14, 14, 0, 0] + [41, 62, 13, 0, 21, 26, 17]

Note: any feasible solution is a 2-approximate solution for weight function W1.

Vertex Cover example (step 2)

[41, 62, 13, 0, 21, 26, 17] = [0, 0, 0, 0, 21, 21, 0] + [41, 62, 13, 0, 0, 5, 17]

Vertex Cover example (step 3)

[41, 62, 13, 0, 0, 5, 17] = [41, 41, 0, 0, 0, 0, 0] + [0, 21, 13, 0, 0, 5, 17]

Vertex Cover example (step 4)

[0, 21, 13, 0, 0, 5, 17] = [0, 13, 13, 0, 0, 0, 0] + [0, 8, 0, 0, 0, 5, 17]

Vertex Cover example (step 5)

[0, 8, 0, 0, 0, 5, 17] = [0, 0, 0, 0, 0, 5, 5] + [0, 8, 0, 0, 0, 0, 12]

Vertex Cover example (step 6)

Original weights: [41, 62, 13, 14, 35, 26, 17]

Residual weights: [0, 8, 0, 0, 0, 0, 12]

• With respect to the residual weights, the optimal solution value of the VC instance is zero.

• By repeated application of the Local Ratio Theorem, picking the zero-weight nodes is guaranteed to give a solution within 2 times the optimal solution value.

• Opt = 120, Approx = 129

2-Approx VC (Bar-Yehuda & Even 81)

Iterative implementation: edge by edge

1. For each edge {u,v} do:
2.     Let $\varepsilon = \min\{w(u), w(v)\}$.
3.     $w(u) \leftarrow w(u) - \varepsilon$.
4.     $w(v) \leftarrow w(v) - \varepsilon$.
5. Return {v | w(v) = 0}.
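The edge-by-edge loop can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `vertex_cover_local_ratio` is hypothetical, and the edge list is an assumption, since the original figure is lost. It contains only the five edges on which the worked example performs a decomposition, in the same order.

```python
def vertex_cover_local_ratio(weights, edges):
    """Edge-by-edge local ratio 2-approximation for weighted vertex cover.

    weights: dict mapping vertex -> non-negative weight
    edges:   iterable of (u, v) pairs
    """
    residual = dict(weights)  # work on a copy; the input stays untouched
    for u, v in edges:
        eps = min(residual[u], residual[v])
        residual[u] -= eps
        residual[v] -= eps
    # After an edge is processed one of its endpoints has residual weight
    # zero, and weights never increase, so the zero-weight vertices cover
    # every edge.
    return {v for v, r in residual.items() if r == 0}


# Weights from the worked example; the edge list is an ASSUMPTION.
w = {1: 41, 2: 62, 3: 13, 4: 14, 5: 35, 6: 26, 7: 17}
edges = [(4, 5), (5, 6), (1, 2), (2, 3), (6, 7)]
cover = vertex_cover_local_ratio(w, edges)
cost = sum(w[v] for v in cover)
print(sorted(cover), cost)  # [1, 3, 4, 5, 6] 129
```

On these assumed edges the run reproduces the residual weights and the value Approx = 129 from the example. The value Opt = 120 belongs to the original graph and is not checked here.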

Recursive implementation

The Local Ratio Theorem leads naturally to the formulation of recursive algorithms with the following general structure:

1. If a zero-cost solution can be found, return one.

2. Otherwise, find a suitable decomposition of w into two weight functions w1 and w2 = w − w1, and solve the problem recursively, using w2 as the weight function in the recursive call.

2-Approx VC (Bar-Yehuda & Even 81)

Recursive implementation: edge by edge

1. VC(V, E, w)
2.     If $E = \emptyset$ return $\emptyset$;
3.     If there exists $v$ with $w(v) = 0$ return $\{v\} \cup VC(V - \{v\}, E - E(v), w)$;
4.     Let $(x, y) \in E$;
5.     Let $\varepsilon = \min\{w(x), w(y)\}$;
6.     Define $w_1(v) = \varepsilon$ if $v = x$ or $v = y$, and $0$ otherwise;
7.     Return VC(V, E, $w - w_1$)
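The recursive formulation translates almost line by line into Python. The sketch below is illustrative only: the name `vc_recursive` and the data representation (frozensets for vertices and edges, a dict for weights) are assumptions, and the small star graph is a made-up example.

```python
def vc_recursive(vertices, edges, w):
    """Recursive local ratio for weighted vertex cover (a sketch).

    vertices: frozenset of vertices
    edges:    frozenset of frozenset({u, v}) edges, no self-loops
    w:        dict vertex -> non-negative weight
    """
    if not edges:
        return set()
    # Problem size reduction: take a zero-weight vertex, drop its edges.
    for v in vertices:
        if w[v] == 0:
            rest = frozenset(e for e in edges if v not in e)
            return {v} | vc_recursive(vertices - {v}, rest, w)
    # Weight decomposition on an arbitrary edge {x, y}.
    x, y = tuple(next(iter(edges)))
    eps = min(w[x], w[y])
    w2 = dict(w)          # w2 = w - w1, where w1 is eps on x and y
    w2[x] -= eps
    w2[y] -= eps
    return vc_recursive(vertices, edges, w2)


# Hypothetical star: a cheap centre "c" with two expensive leaves.
star_edges = frozenset({frozenset({"c", "a"}), frozenset({"c", "b"})})
result = vc_recursive(frozenset("abc"), star_edges, {"c": 1, "a": 10, "b": 10})
print(result)  # {'c'}
```

Each decomposition drives at least one endpoint to zero, and each reduction removes a vertex, so the recursion terminates.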

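Algorithm Analysis

We prove that the solution returned by the algorithm is 2-approximate by induction on the recursion and by using the Local Ratio Theorem.

1. In the base case, the algorithm returns a vertex cover of zero cost, which is optimal.

2. For the inductive step, consider the solution returned by the recursive call.

By the inductive hypothesis it is 2-approximate with respect to w2.

We claim that it is also 2-approximate with respect to w1. In fact, every feasible solution is 2-approximate with respect to w1: w1 assigns the same weight to x and to y and zero elsewhere; every vertex cover contains x or y, so it costs at least that weight, and no solution costs more than twice it.

By the Local Ratio Theorem, the solution is therefore 2-approximate with respect to w = w1 + w2.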

Generality of the analysis

• The proof that a given algorithm is an r-approximation algorithm is by induction on the recursion.

• In the base case, the solution is optimal (and, therefore, r-approximate) because it has zero cost, and in the inductive step, the solution returned by the recursive call is r-approximate with respect to w2 by the inductive hypothesis.

• Thus, different algorithms differ from one another only in the choice of w1, and in the proof that every feasible solution is r-approximate with respect to w1.

The key ingredient

Different algorithms (for different problems) differ from one another only in the decomposition of W, and this decomposition is determined completely by the choice of W1:

$W_2 = W - W_1$

The creative part… find r-effective weights

w1 is fully r-effective if there exists a number b such that

$b \le w_1 \cdot x \le r \cdot b$

for all feasible solutions x.

Framework

The analysis of algorithms in our framework boils down to proving that w1 is r-effective.

Proving this amounts to proving that:

1. b is a lower bound on the optimum value,

2. r · b is an upper bound on the cost of every feasible solution,

…and thus every feasible solution is r-approximate (all with respect to w1).
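For tiny instances the two bounds can be checked by brute force. The sketch below is an illustration under assumptions: the helper names `is_cover` and `check_fully_r_effective` are hypothetical, the problem is taken to be vertex cover, and the edge list is the same assumed five-edge list used for the worked example (the original figure is not available). It enumerates every vertex cover and tests the inequality from the definition.

```python
from itertools import combinations


def is_cover(subset, edges):
    """True if every edge has at least one endpoint in subset."""
    return all(u in subset or v in subset for u, v in edges)


def check_fully_r_effective(w1, edges, b, r):
    """Brute-force check that b <= w1(x) <= r*b for every vertex cover x.

    Exponential in the number of vertices; meant only for tiny examples.
    """
    vertices = list(w1)
    for size in range(len(vertices) + 1):
        for subset in combinations(vertices, size):
            if is_cover(set(subset), edges):
                cost = sum(w1[v] for v in subset)
                if not (b <= cost <= r * b):
                    return False
    return True


# ASSUMED edge list; w1 is the first decomposition of the worked example.
edges = [(4, 5), (5, 6), (1, 2), (2, 3), (6, 7)]
w1 = {1: 0, 2: 0, 3: 0, 4: 14, 5: 14, 6: 0, 7: 0}
print(check_fully_r_effective(w1, edges, b=14, r=2))  # True
print(check_fully_r_effective(w1, edges, b=14, r=1))  # False
```

The second call fails because a cover that contains both endpoints of the edge (4, 5) costs 28 under w1.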

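A different W1 for VC: star by star (Clarkson ’83)

Let d(x) be the degree of vertex x.

Let $x \in V$ be a vertex with minimum $\varepsilon = \frac{w(x)}{d(x)}$.

[Figure: the chosen vertex has weight 16 and degree 4. W1 assigns 16 to it, 16/4 to each of its four neighbors, and 0 elsewhere.]

W = [41, 62, 13, 16, 35, 26, 17]

W1 = [16/4, 16/4, 0, 16, 16/4, 0, 16/4]

W2 = [37, 58, 13, 0, 31, 26, 13]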

A different W1 for VC: star by star

Let $x \in V$ be a vertex with minimum $\varepsilon = \frac{w(x)}{d(x)}$.

• $b = 4 \cdot \varepsilon$ is a lower bound on the optimum value,

• $2 \cdot b$ is an upper bound on the cost of every feasible solution.

W1 is 2-effective.
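The star decomposition can be sketched as follows. The function name `star_decomposition` and the adjacency-dict representation are assumptions made for illustration, and the adjacency below contains only the star itself, because the rest of the original graph cannot be recovered from the slides. Exact fractions avoid rounding when w(x)/d(x) is not an integer.

```python
from fractions import Fraction


def star_decomposition(w, adj):
    """Star-by-star choice of w1 (a sketch).

    w:   dict vertex -> non-negative integer weight
    adj: dict vertex -> set of neighbours
    Returns (centre, eps, w1, w2) with w = w1 + w2.
    """
    # Centre = vertex minimising w(x)/d(x) among vertices with an edge.
    centre = min((v for v in adj if adj[v]),
                 key=lambda v: Fraction(w[v], len(adj[v])))
    eps = Fraction(w[centre], len(adj[centre]))
    w1 = {v: Fraction(0) for v in w}
    w1[centre] = eps * len(adj[centre])   # equals w(centre)
    for u in adj[centre]:
        w1[u] = eps                       # each neighbour pays eps
    w2 = {v: w[v] - w1[v] for v in w}
    return centre, eps, w1, w2


# Weights from the slide; the adjacency is an ASSUMPTION (star only).
w = {1: 41, 2: 62, 3: 13, 4: 16, 5: 35, 6: 26, 7: 17}
adj = {1: {4}, 2: {4}, 3: set(), 4: {1, 2, 5, 7}, 5: {4}, 6: set(), 7: {4}}
centre, eps, w1, w2 = star_decomposition(w, adj)
print(centre, eps)  # 4 4
```

A neighbour u of the centre satisfies w(u) ≥ ε · d(u) ≥ ε by the choice of the centre, so the residual weights w2 stay non-negative.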

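Another W1 for VC: homogeneous (= proportional to the potential coverage)

Let $\varepsilon = \min_{x \in V} \frac{w(x)}{d(x)}$. W1 is proportional to the degree: $w_1(x) = \varepsilon \cdot d(x)$.

• $b = |E| \cdot \varepsilon$ is a lower bound on the optimum value,

• $2 \cdot b$ is an upper bound on the cost of every feasible solution.

W1 is 2-effective.

[Figure: example graph whose vertices are labeled 4, 2, 4, 5, 3, 3, 3.]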
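A short sketch of the homogeneous decomposition, assuming w1(x) = ε · d(x) as the phrase "proportional to the potential coverage" suggests. The function name and the three-vertex path are hypothetical and are not taken from the slides.

```python
from fractions import Fraction


def homogeneous_decomposition(w, degree):
    """w1(x) = eps * d(x) with eps = min over x of w(x)/d(x) (a sketch).

    w:      dict vertex -> non-negative integer weight
    degree: dict vertex -> degree in the graph
    """
    eps = min(Fraction(w[v], degree[v]) for v in w if degree[v] > 0)
    w1 = {v: eps * degree[v] for v in w}
    w2 = {v: w[v] - w1[v] for v in w}   # non-negative by the choice of eps
    return eps, w1, w2


# Hypothetical path a - b - c with weights 3, 4, 5.
w = {"a": 3, "b": 4, "c": 5}
degree = {"a": 1, "b": 2, "c": 1}
eps, w1, w2 = homogeneous_decomposition(w, degree)
print(eps, w1, w2)
```

Here |E| = 2 and ε = 2, so b = 4. The covers {b} and {a, c} both cost 4 under w1, and taking every vertex costs 8 = 2 · b, which matches the two bounds: a cover's degrees sum to at least |E|, and all degrees together sum to 2|E|.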

Partial Vertex Cover

[Figure: the example graph with vertex weights 41, 62, 13, 14, 25, 26, 17.]

Input: a VC instance together with a fixed number k

Goal: Identify a minimum cost subset of vertices that hits at least k edges

Examples:

• if k = 1 then OPT = 13

• if k = 3 then OPT = 14

• if k = 5 then OPT = 25

• if k = 6 then OPT = 14+13

Partial Vertex Cover

Weight functions:

w = [41, 62, 13, 14, 25, 26, 17]

w1 = [0, 0, 0, 14, 14, 0, 0]

w2 = [41, 62, 13, 0, 11, 26, 17]

w = w1 + w2

Assume k < |E| (the number of edges).

Note: it is NOT true here that every feasible solution is 2-approximate with respect to the weight function w1.

In VC every edge must be hit by a vertex. In partial VC, hitting k edges is sufficient. So the optimum for w1 is 0 (for k ≤ 5), whereas a solution that takes, for example, vertex 4 has cost 14, which no constant multiple of the optimum can bound.

Positive Weight Function

• We do not know of any single subset that must contribute to all solutions.

• To prevent OPT from being equal to 0, we can assign a positive weight to every element.

Positive Weight Function

Weight functions:

w = [41, 62, 13, 14, 25, 26, 17]

w1 = [0, 0, 0, 14, 14, 0, 0]

w2 = [41, 62, 13, 0, 11, 26, 17]

w = w1 + w2

Observe that 14 is NOT a lower bound on the optimal value! For example, for k = 1 the optimal value is 13.

Positive Weight Function

Let d(x) be the degree of vertex x.

What is the amortized cost to hit one edge by using x?

$\frac{w(x)}{\min(d(x), k)}$

What is the minimal amortized cost to hit any edge?

$\varepsilon = \min_x \frac{w(x)}{\min(d(x), k)}$

Since we have to hit k edges, $\varepsilon \cdot k$ represents a valid lower bound.

Positive Weight Function W1

$w_1(x) = \varepsilon \cdot \min\{d(x), k\}$

For k = 3, $\varepsilon = 14/3$.

Weight functions (k = 3):

w = [41, 62, 13, 14, 25, 26, 17]

w1 = [14, 14, 28/3, 14, 14, 14, 14]

w2 = [27, 48, 11/3, 0, 11, 12, 3]

w = w1 + w2
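The numbers above can be reproduced with a few lines of Python. This is a sketch under assumptions: the function name is hypothetical, and the degree table is assumed, chosen only to be consistent with the w1 values on the slide (every vertex has degree at least 3 except the third, which has degree 2). The true degrees of the original graph are not recoverable.

```python
from fractions import Fraction


def partial_vc_decomposition(w, degree, k):
    """w1(x) = eps * min(d(x), k), eps = min_x w(x)/min(d(x), k) (a sketch)."""
    capped = {v: min(degree[v], k) for v in w}
    eps = min(Fraction(w[v], capped[v]) for v in w if capped[v] > 0)
    w1 = {v: eps * capped[v] for v in w}
    w2 = {v: w[v] - w1[v] for v in w}
    return eps, w1, w2


w = {1: 41, 2: 62, 3: 13, 4: 14, 5: 25, 6: 26, 7: 17}
degree = {1: 3, 2: 3, 3: 2, 4: 3, 5: 3, 6: 3, 7: 3}   # ASSUMED degrees
eps, w1, w2 = partial_vc_decomposition(w, degree, k=3)
print(eps)                         # 14/3
print([str(w1[v]) for v in w])     # ['14', '14', '28/3', '14', '14', '14', '14']
print([str(w2[v]) for v in w])     # ['27', '48', '11/3', '0', '11', '12', '3']
```

Using `Fraction` keeps values such as 28/3 and 11/3 exact, which matters because the algorithm later tests residual weights for equality with zero.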

Function W1

[Figure: the example graph labeled with the W1 weights for k = 3: 14 on every vertex except one, which has 28/3.]

• [Lower Bound] Every feasible solution costs at least $\varepsilon \cdot k = 14$.

• [Upper Bound] There are feasible solutions whose value can be arbitrarily larger than $\varepsilon \cdot k$ (e.g. take all the vertices).

• But if you take all the vertices, then not all of them are strictly necessary!

• We can focus on Minimal Solutions!

Minimal Solutions

• By minimal solution we mean a feasible solution that is minimal with respect to set inclusion, that is, a feasible solution whose proper subsets are all infeasible.

• Minimal solutions are meaningful mainly in the context of covering problems (covering problems are problems for which feasible solutions are monotone inclusion-wise, that is, if a set X is a feasible solution, then so is every superset of X; MST is not a covering problem).

Minimal Solutions: r-effective weights

w1 is r-effective if there exists a number b such that

$b \le w_1 \cdot x \le r \cdot b$

for all minimal feasible solutions x.

The creative part… again find r-effective weights

• If we can show that our algorithm uses an r-effective w1 and returns minimal solutions, we will have essentially proved that it is an r-approximation algorithm.

• Designing an algorithm to return minimal solutions is quite easy.

• Most of the creative effort is therefore expended in finding an r-effective weight function (for a small r).

2-effective weight function

$w_1(x) = \varepsilon \cdot \min(d(x), k)$

1. In terms of w1, every feasible solution costs at least $\varepsilon \cdot k$.

2. In terms of w1, every minimal feasible solution costs at most $2 \cdot \varepsilon \cdot k$.

Minimal solution = a feasible solution none of whose proper subsets is feasible

Proof of 2. (cost at most $2 \cdot \varepsilon \cdot k$)

Consider a minimal solution $C$. If $C$ contains a node $x$ such that $\min(d(x), k) = k$, then $C = \{x\}$, with cost $\varepsilon \cdot k$. Otherwise, the cost is $\varepsilon \cdot \sum_{x \in C} d(x)$. It suffices to show

$\sum_{x \in C} d(x) \le 2k.$

For $x \in C$, let $d_i(x)$ denote the number of edges that are hit by $x$ and have exactly $i$ endpoints in $C$. Then $d(x) = d_1(x) + d_2(x)$.

Choose $x^* \in C$ such that $d_1(x^*) = \min_{x \in C} d_1(x)$.

Then $d_1(x^*) \le \sum_{x \in C \setminus \{x^*\}} d_1(x)$. (Note: $C$ contains at least two nodes.)

Denote $t = \frac{1}{2} \sum_{x \in C} d_2(x)$. Since $C$ is minimal,

$t + \sum_{x \in C \setminus \{x^*\}} d_1(x) < k$ (otherwise, $x^*$ can be deleted).

Hence,

$\sum_{x \in C} d(x) = d_1(x^*) + \sum_{x \in C \setminus \{x^*\}} d_1(x) + 2t \le 2\left(t + \sum_{x \in C \setminus \{x^*\}} d_1(x)\right) < 2k.$

Proof of 2. (cont.)

[Figure: a vertex x in C with d1(x) = 2 and d2(x) = 3.]

The approximation algorithm

Let C be the set of edges and S(x) be the set of edges that are hit by x.

Algorithm from Bar-Yehuda et al. “Local Ratio: A Unified Framework for Approximation Algorithms” ACM Computing Surveys, 2004

Algorithm Framework

1. If a zero-cost minimal solution can be found, do: optimal solution.

2. Otherwise, if the problem contains a zero-cost element, do: problem size reduction.

3. Otherwise, do: weight decomposition.
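The three cases of the framework can be illustrated for partial vertex cover. The sketch below is an assumption-laden reading of the framework, not a transcription of the algorithm in the cited survey: the names `hits` and `partial_vc`, the data representation, and the way minimality is restored after the recursive call are all choices made here for illustration.

```python
from fractions import Fraction


def hits(cover, edges):
    """Number of edges with at least one endpoint in cover."""
    return sum(1 for e in edges if e & cover)


def partial_vc(edges, w, k):
    """Local ratio sketch for partial vertex cover.

    edges: set of frozenset({u, v}); w: dict vertex -> weight >= 0;
    k: number of edges that must be hit.
    """
    if k <= 0:
        return set()                       # zero-cost solution: nothing to hit
    if len(edges) < k:
        raise ValueError("fewer than k edges: no feasible solution")
    deg = {}
    for e in edges:
        for v in e:
            deg[v] = deg.get(v, 0) + 1
    # Problem size reduction: a zero-cost vertex that still hits edges.
    for v in deg:
        if w[v] == 0:
            rest = {e for e in edges if v not in e}
            sub = partial_vc(rest, w, k - deg[v])
            # Keep v only if the solution is infeasible without it,
            # so that the returned solution stays minimal.
            return sub if hits(sub, edges) >= k else sub | {v}
    # Weight decomposition: w1(x) = eps * min(d(x), k), recurse on w - w1.
    eps = min(Fraction(w[v]) / min(deg[v], k) for v in deg)
    w2 = dict(w)
    for v in deg:
        w2[v] = w[v] - eps * min(deg[v], k)
    return partial_vc(edges, w2, k)


# Hypothetical path a - b - c with weights 3, 4, 5.
path = {frozenset("ab"), frozenset("bc")}
weights = {"a": 3, "b": 4, "c": 5}
print(partial_vc(path, weights, 1))  # {'a'}
print(partial_vc(path, weights, 2))  # {'b'}
```

Every weight decomposition zeroes at least one vertex of positive degree and every reduction removes at least one edge, so the recursion terminates.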