Classical Electrodynamics - Duke Physics

Euclidean vector

Electrostatics

History of quantum field theory

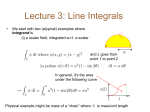

Path integral formulation

Vector space

Navier–Stokes equations

Special relativity

Work (physics)

Maxwell's equations

Noether's theorem

Aharonov–Bohm effect

Partial differential equation

Electromagnetism

Metric tensor

Photon polarization

Kaluza–Klein theory

Equations of motion

Mathematical formulation of the Standard Model

Field (physics)

Nordström's theory of gravitation

Derivation of the Navier–Stokes equations

Introduction to gauge theory

Relativistic quantum mechanics

Lorentz force

Time in physics

Theoretical and experimental justification for the Schrödinger equation