* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Lecture 1: Review of Quantum Mechanics, Introduction to Statistical

Density matrix wikipedia , lookup

Bose–Einstein statistics wikipedia , lookup

Molecular Hamiltonian wikipedia , lookup

Probability amplitude wikipedia , lookup

Path integral formulation wikipedia , lookup

Renormalization group wikipedia , lookup

Particle in a box wikipedia , lookup

Matter wave wikipedia , lookup

Wave–particle duality wikipedia , lookup

Relativistic quantum mechanics wikipedia , lookup

Canonical quantization wikipedia , lookup

Atomic theory wikipedia , lookup

Elementary particle wikipedia , lookup

Theoretical and experimental justification for the Schrödinger equation wikipedia , lookup

Identical particles wikipedia , lookup

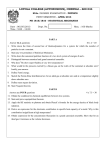

Version: November 7, 2011.

MIT 3.20, Rickard Armiento, Lecture 1

Lecture 1: Review of Quantum Mechanics, Introduction to Statistical Mechanics, Time Averages vs. State Averages, First Postulate

• October 14, 2011, McQuarrie 1.1 - 2.1

Formalities

Lectures by: Rickard Armiento, armiento@mit.edu, (617) 715-4275, room 13-5065.

• Next 4 weeks: fundamentals of statistical mechanics. This ends with a partial exam (25% of the grade).

• Book: McQuarrie “Statistical Mechanics”, (alt. “Statistical Thermodynamics”; both versions work). Chapters 1-6 + 11.

Introduction to Statistical Mechanics

Thermodynamics = very general macroscopic theory.

Statistical mechanics connects microscopic models with thermodynamics.

Statistical Mechanics = study of macroscopic systems from an atomistic point of view. “Many, many particles + statistics → the macroscopic world”.

Example: heat capacity. Why does the heat capacity of Ag depend on temperature the way it does? Solid of $N$ vibrating ions ⇒? thermodynamic quantities, $C_V$, etc. $C_V$ grows with $T$ and approaches $3Nk_B$ (the Dulong-Petit value) at high $T$.

We will find that entropy is connected to the “uncertainty” of microscopic states. Because this involves counting states, we will frequently turn to combinatorics.

Goal: understand and predict macroscopic phenomena starting from models of microscopic interactions between the atoms/particles in the system + statistics.


Statistical Mechanics

• What are entropy and internal energy in “reality”?

• Can we understand the second and third laws?

[Figure: Quantum Mechanics → Statistical Mechanics → Thermodynamics]

[Figure: Heat Capacity, Experimental vs. Model]


Short Review of Quantum Mechanics

Review of Quantum Mechanics, 1

• The state vector: $|\Psi\rangle$ represents the state of the system.

• Wave-function formulation: $\Psi(\bar q, t)$ ← wave function, where $\bar q$ = {set of necessary coordinates}, e.g. $\bar q = (\bar r, \bar\sigma) \times N$.

• Probability interpretation: the probability that the system is between $(\bar q, \bar q + d\bar q)$ is $\Psi^*(\bar q, t)\Psi(\bar q, t)\,d\bar q$.

• Probability that the system is in any state (normalized wavefunctions):

$$\int_{\text{all } \bar q} \Psi^*(\bar q, t)\,\Psi(\bar q, t)\,d\bar q = 1.$$

Review of Quantum Mechanics, 2

• Time-dependent Schrödinger equation:

$$\hat H\Psi(\bar q, t) = i\hbar\,\frac{\partial \Psi(\bar q, t)}{\partial t}$$

• Stationary state: $\Psi(\bar q)$ represents the system if the probability density does not change with $t$.

• $\Psi(\bar q)$ is a solution to the time-independent Schrödinger equation (an eigenvalue equation):

$$\hat H\Psi(\bar q) = E\Psi(\bar q).$$

E.g., one particle, 1D:

$$-\frac{\hbar^2}{2m}\frac{\partial^2 \psi(x)}{\partial x^2} + v(x)\psi(x) = \epsilon\,\psi(x)$$

Equation + boundary conditions ⇒ set of solutions $\Psi_1, \Psi_2, \dots$ and eigenvalues $E_1, E_2, \dots$


Degenerate states:

$\Omega(E)$ = # states with energy $E$ (discrete case) = degeneracy.

$\Omega(E)\,dE$ = # states between $E$ and $E + dE$ (continuous case); $\Omega(E)$ = density of states.

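Simple 1D Examples:

1. “Particle in a box”: single particle in a 1D infinite well of width $a$:

$$\hat H = -\frac{\hbar^2}{2m}\frac{\partial^2}{\partial x^2} + v(x), \qquad v(x) = \begin{cases} 0 & \text{if } 0 < x < a \\ \infty & \text{otherwise} \end{cases}$$

$$\hat H\psi = \epsilon\psi \;\Rightarrow\; \psi_n = \sqrt{\frac{2}{a}}\,\sin\!\left(\frac{n\pi x}{a}\right), \qquad \epsilon_n = \frac{h^2}{8ma^2}\,n^2, \quad n = 1, 2, 3, \dots$$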

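2. Harmonic oscillator:

$$\hat H = -\frac{\hbar^2}{2m}\frac{\partial^2}{\partial x^2} + \frac{1}{2}m\omega^2 x^2$$

$$\hat H\psi = \epsilon\psi \;\Rightarrow\; \psi_n = \sqrt{\frac{1}{2^n n!}\left(\frac{m\omega}{\hbar\pi}\right)^{1/2}}\; H_n\!\left(\sqrt{\frac{m\omega}{\hbar}}\,x\right) e^{-m\omega x^2/(2\hbar)}, \qquad \epsilon_n = \left(n + \tfrac{1}{2}\right)\hbar\omega, \quad n = 0, 1, 2, \dots$$

Here $H_n$ are the Hermite polynomials. Neighboring levels are separated by $\hbar\omega$.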

Other examples: Finite well, delta-function potential, linear potential.

A First Meeting with Degenerate Microstates

Degeneracy in a typical 3D system: how degenerate are the states? Start with one particle in a box.

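3D infinite well, $\hat H\psi = \epsilon\psi$:

$$\hat H = -\frac{\hbar^2}{2m}\left(\frac{\partial^2}{\partial x^2} + \frac{\partial^2}{\partial y^2} + \frac{\partial^2}{\partial z^2}\right) + v(x, y, z), \qquad v(x, y, z) = \begin{cases} 0 & \text{if } 0 < x, y, z < a \\ \infty & \text{otherwise} \end{cases}$$

$$\Rightarrow\; \epsilon_{n_x, n_y, n_z} = \frac{h^2}{8ma^2}\left(n_x^2 + n_y^2 + n_z^2\right) = \frac{1}{M}\left(n_x^2 + n_y^2 + n_z^2\right), \qquad M = \frac{8ma^2}{h^2}, \quad n_x, n_y, n_z = 1, 2, 3, \dots$$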

Density of energy levels: e.g., $m = 10^{-22}$ g, $a = 10$ cm ⇒ $1/M \approx 5 \times 10^{-41}$ J $\approx 3 \times 10^{-22}$ eV. Very, very dense.


Count the ways $n_x^2 + n_y^2 + n_z^2$ can give the same number. The states are lattice points in one octant of a sphere of radius $R$, with $R^2 = n_x^2 + n_y^2 + n_z^2 = M\epsilon$.

$\omega(\epsilon)$ = # states as a function of the energy,

$\phi(\epsilon)$ = # states with energy $\le \epsilon$.

[Figure: Degeneracy in 3D well. Left: grid of states in the $(n_x, n_y)$ plane. Right: States $\omega(\epsilon)$ and States $\phi(\epsilon)$ plotted against $M\epsilon$.]

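Idea: $\phi \approx$ (volume of the sphere octant)/(volume of one state). Each state occupies unit volume in $n$-space:

$$\phi(\epsilon) = \frac{1}{8}\cdot\frac{4\pi R^3}{3} = \frac{\pi}{6}(M\epsilon)^{3/2} = \frac{\pi}{6}\left(\frac{8ma^2\epsilon}{h^2}\right)^{3/2}.$$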
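The octant estimate can be checked by counting the lattice points directly. The Python sketch below counts triples of positive integers with $n_x^2+n_y^2+n_z^2 \le R^2$ and compares the count with $(\pi/6)R^3$. The function names are illustrative and not from the course. The estimate includes states that lie on the coordinate planes, where one $n$ would be zero, so it overcounts by a relative amount of order $1/R$. The ratio should therefore approach 1 as $R$ grows.

```python
import math

def count_states(R2: int) -> int:
    """Exact number of (nx, ny, nz), all >= 1, with nx^2 + ny^2 + nz^2 <= R2."""
    total = 0
    for nx in range(1, math.isqrt(R2) + 1):
        for ny in range(1, math.isqrt(R2 - nx * nx) + 1):
            # number of nz >= 1 with nz^2 <= R2 - nx^2 - ny^2
            total += math.isqrt(R2 - nx * nx - ny * ny)
    return total

def phi_estimate(R2: int) -> float:
    """Sphere-octant estimate phi = (pi/6) R^3, with R^2 = M * epsilon."""
    return math.pi / 6 * R2 ** 1.5

ratios = {}
for R in (10, 20, 50, 100):
    exact = count_states(R * R)
    ratios[R] = exact / phi_estimate(R * R)
    print(f"R = {R:3d}: exact = {exact:7d}, estimate = {phi_estimate(R * R):9.0f}, ratio = {ratios[R]:.3f}")
```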

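# states between $\epsilon$ and $\epsilon + \Delta$:

$$\omega(\epsilon, \Delta) = \phi(\epsilon + \Delta) - \phi(\epsilon) = \frac{\pi}{6}M^{3/2}\left[(\epsilon + \Delta)^{3/2} - \epsilon^{3/2}\right] \approx \frac{\pi}{4}M^{3/2}\epsilon^{1/2}\Delta + O(\Delta^2),$$

using the Taylor expansion $f(\epsilon + \Delta) \approx f(\epsilon) + f'(\epsilon)\Delta + O(\Delta^2)$.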

Same numbers as before, one gas particle: $m = 10^{-22}$ g, $a = 10$ cm, at room temperature: $\epsilon \sim k_B T$ with $T = 300$ K. The number of states in an energy interval of 1% ($\Delta = 0.01\epsilon$) is $\omega(\epsilon, \Delta) \sim 10^{28}$.
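The numbers quoted above can be reproduced with a few lines of Python. This sketch assumes CODATA values for $h$, $k_B$ and the electron-volt. It uses the leading-order result $\omega \approx (\pi/4)M^{3/2}\epsilon^{1/2}\Delta$ with $\epsilon = k_B T$.

```python
import math

h = 6.62607015e-34       # Planck constant, J s
kB = 1.380649e-23        # Boltzmann constant, J/K
eV = 1.602176634e-19     # J per eV

m = 1e-22 * 1e-3         # 10^-22 g, converted to kg
a = 0.10                 # 10 cm, converted to m
T = 300.0                # K

inv_M = h**2 / (8 * m * a**2)    # level scale 1/M = h^2 / (8 m a^2), in J
M = 1.0 / inv_M
eps = kB * T                     # typical energy, eps ~ kB T
delta = 0.01 * eps               # 1 % energy window

# leading-order count of states in [eps, eps + delta]
omega = math.pi / 4 * M**1.5 * math.sqrt(eps) * delta

print(f"1/M   = {inv_M:.2e} J = {inv_M / eV:.2e} eV")
print(f"omega = {omega:.2e}")
```

This gives $1/M$ of a few times $10^{-41}$ J and $\omega$ of a few times $10^{27}$, consistent with the order-of-magnitude estimates in the text.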

Many indistinguishable non-interacting particles (see McQuarrie 1.3): $\Omega(E, \Delta E) \sim 10^N$, $N \sim 10^{23}$.

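Many Noninteracting Indistinguishable Particles

$$\Omega(E, \Delta E) = \frac{1}{\Gamma(N + 1)\,\Gamma(3N/2)}\left(\frac{2\pi m a^2}{h^2}\right)^{3N/2} E^{3N/2 - 1}\,\Delta E \;\Rightarrow\; \Omega(E, \Delta E) \sim 10^N, \quad N \sim 10^{23}$$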
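To see that the exponent really scales with $N$, evaluate $\ln\Omega$ with the log-gamma function. Forming $\Omega$ itself would overflow any float. The sketch below is for illustration only and assumes a monatomic ideal gas with $E = \tfrac{3}{2}Nk_BT$, $\Delta E = 0.01E$, $T = 300$ K, and the same $m$ and $a$ as in the one-particle example. `log10_omega` is a hypothetical helper name.

```python
import math

h = 6.62607015e-34   # Planck constant, J s
kB = 1.380649e-23    # Boltzmann constant, J/K
m = 1e-25            # kg (10^-22 g, as in the one-particle example)
a = 0.10             # box edge, m
T = 300.0            # K

def log10_omega(N: float, rel_window: float = 0.01) -> float:
    """log10 Omega(E, dE) for N particles, assuming E = (3/2) N kB T and dE = rel_window * E."""
    E = 1.5 * N * kB * T
    x = 2 * math.pi * m * a**2 * E / h**2
    # E^(3N/2 - 1) dE = E^(3N/2) (dE/E), so
    # ln Omega = (3N/2) ln x + ln(dE/E) - ln Gamma(N + 1) - ln Gamma(3N/2)
    ln_omega = (1.5 * N * math.log(x) + math.log(rel_window)
                - math.lgamma(N + 1) - math.lgamma(1.5 * N))
    return ln_omega / math.log(10)

for N in (1e3, 1e10, 1e23):
    print(f"N = {N:.0e}: log10(Omega) / N = {log10_omega(N) / N:.2f}")
```

Across these $N$ the printed ratio $\log_{10}\Omega/N$ stays between a few and a few tens. This is the sense in which $\Omega \sim 10^N$: the exponent is proportional to the number of particles.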


Time Averages and State Averages

Many microscopic states ⇔ one macroscopic / thermodynamic state (e.g., fixed $E$, $V$, $N$). Our particles in a box can be in many, many degenerate states, $\sim 10^N$.

[Figure: Microstates vs. Macroscopic state. Many microstates map onto one macroscopic “thermo state” with given $E, V, N$.]

Starting point of statistical mechanics: many macroscopic quantities we observe (e.g., the pressure $p$) are time averages of fluctuating microscopic quantities.

The idea is valid both in classical physics and in quantum mechanics. In classical physics each particle is described by its position and momentum, $\bar q = \{\bar r_i, \bar p_i\}_{i=1}^{N}$.

Time average over classical microstates:

$$\bar E = \langle E(t)\rangle = \frac{1}{\Delta t}\int_t^{t+\Delta t} E(\bar r, \bar p)\,dt.$$

Time average over quantum mechanical states:

$$\bar E = \langle E(t)\rangle = \frac{1}{\Delta t}\int_t^{t+\Delta t} \langle\Psi(\bar q, t)|\hat H|\Psi(\bar q, t)\rangle\,dt.$$

[Figure: Time averages. The pressure $p(t)$ of the macroscopic “thermo state” ($E, V, N$) fluctuates in time as the system moves between microstates.]

Calculating these time averages is hard!

Ensemble of microstates: $\mathcal{A}$ copies of the system, all in the same thermodynamic state, with $N$, $V$ and $E$ fixed. Each of the $\Omega(E)$ degenerate states is present an equal number of times, giving an “ensemble of points in phase space”.

1st postulate: The ensemble average of a thermodynamic property is equivalent to the time-averaged macroscopic value of the property measured for the real system.


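State average over classical microstates:

$$\bar E = \langle E(t)\rangle = \int E(\bar r, \bar p)\,P(\bar r, \bar p)\,d\bar r\,d\bar p.$$

[Figure: Ensemble, a collection of points (volumes) in phase space.]

$P(\bar r, \bar p)\,d\bar r\,d\bar p$ ∼ measure of the time that the system spends in a state between $(\bar r, \bar p)$ and $(\bar r + d\bar r, \bar p + d\bar p)$.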


State average over quantum mechanical states:

$$\bar E = \langle E(t)\rangle = \sum_i E_i P_i$$

Formally: $P_i$ = probability to find the system in state $i$. (Informally: “probability of state $i$”.)

Example: sleepy students. $N = 40$ students fall asleep and wake up independently, each with probability of being asleep $P_{\text{sleep}}$. How many are asleep?

The ensemble = many copies of the lecture hall, covering all possible combinations of sleeping students: $2^N$ states.

$$\bar n_{\text{sleep}} = \langle n(t)\rangle = \frac{1}{\Delta t}\int_{\Delta t} n_{\text{sleep}}(t)\,dt \overset{\text{1st postulate}}{=} \sum_{i=1}^{2^N} n_{\text{sleep},i}\,P_i$$

Group all states with the same $n_i$. The probability that exactly $n$ students are sleeping is $P_n$:

$$\bar n_{\text{sleep}} = \sum_{n=0}^{N} n P_n = \sum_{n=0}^{N} n\,\frac{N!}{n!(N-n)!}\,P_{\text{sleep}}^{\,n}(1 - P_{\text{sleep}})^{N-n} = \dots = N P_{\text{sleep}}.$$
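The sum $\sum_n nP_n = NP_{\text{sleep}}$ and the counting behind it are easy to check numerically. The Python sketch below makes three comparisons, and its function names are illustrative:

- the ensemble average against $NP_{\text{sleep}}$;
- a crude "time average", in which each sampled instant is drawn independently (an assumption standing in for real dynamics);
- a brute-force count of the $2^N$ configurations for a smaller hall.

```python
import itertools
import math
import random
from collections import Counter

def ensemble_average(N: int, p: float) -> float:
    """Sum over n of n * P_n, with P_n = C(N, n) p^n (1 - p)^(N - n)."""
    return sum(n * math.comb(N, n) * p**n * (1 - p) ** (N - n) for n in range(N + 1))

def time_average(N: int, p: float, steps: int, seed: int = 0) -> float:
    """Crude stand-in for a time average: at each sampled instant every
    student is independently asleep with probability p."""
    rng = random.Random(seed)
    asleep = 0
    for _ in range(steps):
        asleep += sum(rng.random() < p for _ in range(N))
    return asleep / steps

def states_per_n(N: int) -> Counter:
    """Enumerate all 2^N asleep/awake configurations and count how many have n asleep."""
    return Counter(sum(cfg) for cfg in itertools.product((0, 1), repeat=N))

N, p = 40, 0.3
print(ensemble_average(N, p), N * p, time_average(N, p, steps=20_000))
print(states_per_n(10)[3], math.comb(10, 3))   # configurations with 3 of 10 asleep
```

The last line addresses the first homework question below: the number of configurations with $n$ students asleep is the binomial coefficient.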

Given Pi → we can calculate everything! This will be a central concept of statistical mechanics.

Examples (glance ahead): constant $E, V, N$ → $P_i$ constant. Constant $N, V, T$ → $P_i \sim e^{-E_i/kT}$.

Different boundary conditions → different Pi .

Homework suggestions

1. Think about the sleepy students example: why is the count of states with the same $n_i$ equal to $\frac{N!}{n!(N-n)!}$?

2. Exam problem 2001E2:3(a).

3. Exam problem 2004E2:1(a).

4. Exam problem 2007E2:3(a).

MIT 3.20, Rickard Armiento, Lecture 2

Lecture 2: Microcanonical and Canonical Ensemble

• October 17, 2011, McQuarrie 2.1 - 2.2

Previous lecture

Ensemble of microstates: a collection of microstates that are all in the same thermodynamic state.

[Figure: Time averages vs. ensemble. The macroscopic “thermo state” ($E, V, N$), the fluctuating $p(t)$, and the ensemble of microstates.]

Postulate: The ensemble average of a thermodynamic property is equivalent to the time-averaged macroscopic value of the property measured for the real system.

State average over quantum mechanical states:

$$\bar E = \langle E(t)\rangle = \sum_i E_i P_i$$

Formally: $P_i$ = probability to find the system in microstate $i$ at a specific instant in time. (Informally: “probability of state $i$”.)

The Microcanonical Ensemble

Isolated system, constant volume ⇒ E, N , and V fixed (both microscopically, i.e., in each microstate; and macroscopically).

Degeneracy: $\Omega(E)$. The microcanonical ensemble contains all these degenerate states.

Ensemble average?

$$\bar M = \langle M(t)\rangle = \sum_i M_i P_i.$$

Where do we get $P_i$?

Equal a Priori Probability:

Principle of equal a priori probability: Given an isolated system in equilibrium, it is found with equal probability in each of its accessible microstates.

Assume that all microstates are equally likely ⇒

State probability in the microcanonical ensemble:

$$P_i = 1/\Omega(E) = \text{constant}.$$

The ergodic hypothesis was one (historical) motivation for this postulate.

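The Ergodic Hypothesis

[Figure: two degenerate configurations, A and B, of isolated, noninteracting particles. In one the particles are gathered on the left side; in the other they are spread out.]

• For states degenerate in energy: over a long time, the system passes through each of them equally often.

• Equal a priori probability: $P_A = P_B$. But of all possible degenerate states, how many “look like” A? How many like B? ⇒ $P_{\text{spread out}} \gg P_{\text{on left side}}$.

• Poker analogy: each specific hand has probability 1 in 2,598,960. Hand types: one pair, odds ≈ 1.37 : 1 against; royal straight flush, odds 649,739 : 1 against.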
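The poker numbers on the slide follow from simple counting. The sketch below uses only the Python standard library and reproduces them. The point for statistical mechanics is that every individual hand is equally likely, just as every degenerate microstate is. Yet a class such as "one pair" contains vastly more hands than "royal straight flush".

```python
from math import comb

hands = comb(52, 5)     # all 5-card hands
royal = 4               # one royal straight flush per suit
# pair rank, suits of the pair, 3 other distinct ranks, and a suit for each of them
one_pair = 13 * comb(4, 2) * comb(12, 3) * 4**3

print(f"specific hand : 1 in {hands:,}")
print(f"royal flush   : {(hands - royal) // royal:,} : 1 against")
print(f"one pair      : {(hands - one_pair) / one_pair:.2f} : 1 against")
```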

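Microcanonical ensemble averages:

$$\bar E = \sum_i E_i P_i = \frac{1}{\Omega(E)}\sum_i E_i = \frac{E\,\Omega}{\Omega} = E$$

$$\bar M = \sum_i M_i P_i = \frac{1}{\Omega(E)}\sum_i M_i$$

$$\bar p = \sum_i p_i P_i = \frac{1}{\Omega(E)}\sum_i p_i$$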

Canonical Ensemble

System with constant $N$, $V$, but not thermally isolated. It is in thermal equilibrium with the environment at temperature $T$ (= “macroscopic temperature”). We do not yet know what the temperature is in terms of microstates, i.e., “in the microscopic world” where all microscopic degrees of freedom are specified.

Canonical ensemble C = all microstates corresponding to fixed N , V , T .


Ensemble average: sum the microcanonical way?

$$\bar M \overset{?}{=} \sum_i M_i P_i$$

But what are the $\{P_i\}$ now? The system is not isolated ⇒ the accessible microstates are in general not equally likely.

Cunning plan: construct a microcanonical ensemble that simulates a system in the canonical ensemble + heat bath. Then use the principle of equal a priori probabilities in the microcanonical ensemble to derive the probabilities $P_i$.

[Figure, Step 1. Left: “Abstract canonical ensemble, all entries share the same N, V, T. All states NOT equally likely.” Middle: “Simulated heat bath of 𝒜 systems: 1. Bring to thermal equilibrium at T. 2. Isolate ⇒ E = 𝓔, N = 𝒩, V = 𝒱.” Right: “All possible states of heat bath = microcanonical ensemble. Every entry has the same total E = 𝓔, N = 𝒩, V = 𝒱. All states equally likely.”]

[Figure, Step 2: “All possible states? Every microstate of the heat bath has the same total E = 𝓔, N = 𝒩, V = 𝒱. The abstract canonical ensemble = all possible subsystem states. Lay out 𝒜 subsystems in all ways that give E = 𝓔, N = 𝒩, V = 𝒱 = combinatorial problem! Each microstate = same probability → subsystem probability ∼ number of times it occurs.”]

Create a very large “heat bath” system out of $\mathcal{A}$ systems from the canonical ensemble, labeled $j = 1, 2, \dots, \mathcal{A}$. Bring the system to thermal equilibrium at $T$ (using, e.g., an external heat bath), and then isolate it. All possible microstates of this heat bath system form a microcanonical ensemble $D$. Two consequences follow:

1) All microstates in $D$ have the same energy value, $\mathcal{E}$.

2) By the principle of equal a priori probabilities, finding the system in any of the possible microstates in $D$ is equally likely.

Main idea: determine the probabilities by counting how all the subsystems within the equally likely microstates in $D$ distribute over the possible states:

$$P_i = \frac{\text{Number of subsystems in state } i \text{ within all the microstates in } D}{\text{Total number of subsystems in all the microstates in } D} = \frac{\text{Average number of subsystems in state } i \text{ over the microstates in } D}{\text{Number of subsystems in one microstate of } D}$$

The canonical ensemble $C$ contains every state a subsystem in $D$ can be in. All possible microstates of $D$ = every way systems in $C$ can be mapped onto the labeled (thus distinguishable) subsystems of $D$, under the constraint that the total energy sums to $\mathcal{E}$ ⇒ a straightforward combinatorial problem!

Categorize the entries in $D$ according to the distribution of subsystems over quantum states: there are $a_i$ subsystems in state $i$, $a = \{a_i\}$. The index $i$ counts possible quantum states and $j$ counts labeled subsystems.

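Number of subsystems: $\mathcal{A} = \sum_j 1 = \sum_i a_i$. Total energy: $\mathcal{E} = \sum_j E_j = \sum_i a_i E_i$.

$$\left(\begin{array}{c}\text{Total number of subsystems}\\ \text{in state } i\end{array}\right) = \sum_{\text{all distributions}}\left(\begin{array}{c}a_i \text{ in the}\\ \text{distribution}\end{array}\right)\times\left(\begin{array}{c}\text{Microstates in } D \text{ with}\\ \text{this distribution}\end{array}\right) = \sum_a W(a)\,a_i(a).$$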

What is $W(a)$? It is the number of ways $\mathcal{A}$ labeled subsystems can be divided with $a_1$ in one group, $a_2$ in a second group, etc.:

$$W(a) = \frac{\mathcal{A}!}{a_1!\,a_2!\cdots} = \frac{\mathcal{A}!}{\prod_i a_i!}.$$

Total number of subsystems in all systems in $D$:

$$\sum_i\left(\begin{array}{c}\text{Number of subsystems}\\ \text{in state } i\end{array}\right) = \sum_i\sum_a W(a)\,a_i(a) = \sum_a W(a)\sum_i a_i(a) = \mathcal{A}\sum_a W(a).$$

$$\Rightarrow\quad P_i = \frac{\sum_a W(a)\,a_i(a)}{\mathcal{A}\sum_a W(a)} = \text{probability to find the system in quantum state } i.$$
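For a tiny heat bath the formula for $P_i$ can be evaluated by brute force. The Python sketch below uses assumed toy numbers for illustration: four non-degenerate subsystem levels with energies 0, 1, 2, 3 in integer units, $\mathcal{A} = 20$ and $\mathcal{E} = 15$. `distributions`, `multinomial` and `state_probabilities` are hypothetical helper names. The code enumerates every distribution $a$ compatible with the constraints, weights it by $W(a)$, and returns exact fractions.

```python
from fractions import Fraction
from math import factorial

def distributions(A, E_total, levels):
    """Yield every occupation tuple (a_0, a_1, ...) with sum(a) == A and
    sum(a_i * levels[i]) == E_total. Levels must be non-negative integers."""
    def rec(i, A_left, E_left, prefix):
        if i == len(levels) - 1:
            # the last level takes all remaining subsystems
            if A_left * levels[i] == E_left:
                yield prefix + (A_left,)
            return
        for ai in range(A_left + 1):
            if ai * levels[i] > E_left:
                break
            yield from rec(i + 1, A_left - ai, E_left - ai * levels[i], prefix + (ai,))
    yield from rec(0, A, E_total, ())

def multinomial(A, a):
    """W(a) = A! / prod_i a_i!, computed exactly with integers."""
    W = factorial(A)
    for ai in a:
        W //= factorial(ai)
    return W

def state_probabilities(A, E_total, levels):
    """P_i = sum_a W(a) a_i / (A * sum_a W(a)), returned as exact Fractions."""
    dists = list(distributions(A, E_total, levels))
    weights = [multinomial(A, a) for a in dists]
    total_W = sum(weights)
    return [Fraction(sum(W * a[i] for W, a in zip(weights, dists)), A * total_W)
            for i in range(len(levels))]

levels = [0, 1, 2, 3]   # hypothetical subsystem energies (integer units)
P = state_probabilities(20, 15, levels)
print([round(float(p), 4) for p in P])
```

Two checks follow directly from the derivation. The $P_i$ sum to 1, and $\sum_i P_iE_i = \mathcal{E}/\mathcal{A}$ exactly, because every allowed distribution satisfies $\sum_i a_iE_i = \mathcal{E}$.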

Finished? In principle we could calculate averages using this expression:

$$\bar M = \sum_{i \in \text{all states}} M_i P_i$$

But dealing with $P_i$ in this form is still hard.

Most probable distribution

[Figure: Binomial distribution. $W(a)$ is sharply peaked around the most probable distribution $a^*$.]

$W(\{a_i\})$ is very peaked around a small set of $\{a_i\}$. A larger and larger heat bath ⇒ $\mathcal{A} \to \infty$ ⇒ more and more peaked (see McQuarrie 1.5).

Idea: find the most probable distribution $a^*$ that maximizes $W(\{a_i\})$ over all $\{a_i\}$. Assume it is enough to consider this distribution in the limit $\mathcal{A} \to \infty$, $a_i \to \infty$.
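How quickly the weight concentrates can be seen in the simplest case behind the binomial figure: two subsystem states and no energy constraint, where $W(a) = \binom{\mathcal{A}}{a}$. This is an illustrative assumption, not the general multi-level case. The sketch computes the fraction of all $2^{\mathcal{A}}$ configurations whose $a$ lies within 5% of $\mathcal{A}/2$.

```python
from math import comb

def weight_near_peak(A: int, rel_width: float = 0.05) -> float:
    """Fraction of all 2**A configurations of a two-state system with
    |a - A/2| <= rel_width * A, where W(a) = C(A, a)."""
    lo, hi = A / 2 - rel_width * A, A / 2 + rel_width * A
    inside = sum(comb(A, a) for a in range(A + 1) if lo <= a <= hi)
    return inside / 2**A   # exact big-integer sums, one float division at the end

for A in (10, 100, 1000, 10000):
    print(f"A = {A:5d}: fraction within 5% of the peak = {weight_near_peak(A):.4f}")
```

The window has a fixed relative width, while the peak width grows only like $\sqrt{\mathcal{A}}$. The captured fraction therefore tends to 1 as $\mathcal{A}$ grows.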

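$$P_i = \frac{1}{\mathcal{A}}\frac{\sum_a W(a)\,a_i(a)}{\sum_a W(a)} \overset{\mathcal{A}\to\infty}{=} \frac{1}{\mathcal{A}}\frac{W(a^*)\,a_i^*}{W(a^*)} = \frac{a_i^*}{\mathcal{A}}$$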

Purely mathematics: find the $\{a_i\}$ that maximizes $W(a) = \frac{\mathcal{A}!}{\prod_i a_i!}$ under the constraints $\mathcal{A} = \sum_i a_i$ and $\mathcal{E} = \sum_i a_i E_i$.

$W(a)$ contains large factorials. Maximize $\ln W(a)$ instead, so that we can use Stirling's approximation, $\ln n! \approx n\ln n - n$.
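The quality of the leading-order Stirling approximation is easy to check against the exact $\ln n!$, which Python's `math.lgamma(n + 1)` provides. A minimal sketch:

```python
import math

def stirling(n):
    """Leading-order Stirling approximation to ln n!."""
    return n * math.log(n) - n

def relative_error(n):
    exact = math.lgamma(n + 1)   # ln n! via the log-gamma function
    return (exact - stirling(n)) / exact

for n in (10, 100, 10**4, 10**6):
    print(f"n = {n:>8}: relative error = {relative_error(n):.1e}")
```

The neglected term, $\tfrac{1}{2}\ln(2\pi n)$, grows only logarithmically. The relative error therefore vanishes for the enormous occupation numbers $a_i$ assumed here.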

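Lagrange multipliers (see McQuarrie 1.5) ⇒

$$\frac{\partial}{\partial a_i}\left\{\ln W(a) - \alpha\sum_k a_k - \beta\sum_k a_k E_k\right\} = 0, \qquad i = 1, 2, \dots$$

$\alpha$ and $\beta$ are undetermined Lagrange multipliers. Insert Stirling's approximation, using $\partial(a_i\ln a_i - a_i)/\partial a_i = \ln a_i$:

$$\Rightarrow\; -\ln a_i^* - \alpha - \beta E_i = 0 \;\Rightarrow\; a_i^* = e^{-\alpha - \beta E_i} \;\Rightarrow\; P_i = \frac{a_i^*}{\mathcal{A}} = \frac{1}{\mathcal{A}}e^{-\alpha - \beta E_i}.$$

(An extra additive constant here, such as a $-1$, would only redefine $\alpha$, which is fixed by normalization anyway.)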

For a system in thermal equilibrium with its environment at thermodynamic temperature $T$, the probability to find it in a state with energy $E_i$ falls off exponentially with that energy.

Note: this is the probability per individual state. A typical system has many more states at higher energies, so it is likely to be found in some intermediate-energy state.

State averages:

$$\bar M = \sum_{i \in \text{all states}} P_i M_i = \sum_i P(E_i)\,M_i = \frac{1}{\mathcal{A}}\sum_i e^{-\alpha - \beta E_i}M_i$$

But what are $\alpha$ and $\beta$? That is the story for next time.

Suggested homework

• McQuarrie 2.2

• McQuarrie 2.1

• McQuarrie 2.8

• Exam problem: 2009E2 3(i).

MIT 3.20, Rickard Armiento, Lecture 3

Lecture 3: Identification of Beta and Entropy in the Canonical Ensemble

• October 19, 2011, McQuarrie 2.2 - 2.3

The Story So Far

Ensemble: collection of microstates that appear the same macroscopically / thermodynamically.

Ensemble averages: thermodynamic quantities are given as $\bar M = \sum_i M_i P_i$, where $P_i$ = probability of finding a system in microstate $i$ of the ensemble.

Microcanonical Ensemble: $E$, $N$, and $V$ fixed ⇒ $P_i = 1/\text{Degeneracy} = 1/\Omega(E)$ = constant.

Canonical Ensemble: $N$, $V$, and $T$ fixed ⇒ $P_i = (1/\mathcal{A})e^{-\alpha - \beta E_i}$, where $E_i$ = the energy of state $i$. $\alpha$ and $\beta$ are so far unknown Lagrange multipliers that may depend on $N, V, T$.

Identifying α

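Just part of the normalization:

$$P_i = \frac{1}{\mathcal{A}}e^{-\alpha - \beta E_i} = \frac{e^{-\alpha}}{\mathcal{A}}\,e^{-\beta E_i}$$

Normalize: the probability for the system to be in any state = 1. Solve for $\alpha$:

$$1 = \frac{1}{\mathcal{A}}e^{-\alpha}\sum_i e^{-\beta E_i} \;\Rightarrow\; e^{\alpha} = \frac{1}{\mathcal{A}}\sum_i e^{-\beta E_i} \;\Rightarrow\; P_i = \frac{e^{-\beta E_i}}{\sum_i e^{-\beta E_i}}.$$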

This denominator will occur very frequently in our expressions, so define:

The canonical partition function:

$$Q = \sum_{i \in \text{all states}} e^{-\beta E_i} = \sum_{i \in \text{all energies}} \Omega(E_i)\,e^{-\beta E_i} = \sum_E \Omega(E)\,e^{-\beta E}$$

$Q$ is a “weighted count of all microstates” (more on this later).
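Below is a small numerical check of the two ways of writing $Q$: a sum over states, and a sum over energies weighted by $\Omega(E)$. It also runs the one-energy check that follows. The level scheme and $\beta$ are arbitrary assumptions chosen for illustration.

```python
import math

def partition_function_states(states, beta):
    """Q as a sum over every individual microstate energy."""
    return sum(math.exp(-beta * E) for E in states)

def partition_function_levels(levels, beta):
    """Q as a sum over distinct energies E, each weighted by its degeneracy Omega(E)."""
    return sum(omega * math.exp(-beta * E) for E, omega in levels.items())

levels = {0.0: 1, 1.0: 3, 2.0: 5}   # hypothetical: energy -> degeneracy
states = [E for E, omega in levels.items() for _ in range(omega)]
beta = 0.7                          # arbitrary inverse-energy units

Q = partition_function_states(states, beta)
P = [math.exp(-beta * E) / Q for E in states]
print(Q, partition_function_levels(levels, beta), sum(P))

# one energy only (the microcanonical check): every P_i should equal 1 / Omega(E)
single = [5.0] * 4
Q1 = partition_function_states(single, beta)
print([math.exp(-beta * E) / Q1 for E in single])
```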

Check: a canonical ensemble with just one energy, $E_i = E$, is a microcanonical ensemble:

$$P_i = \frac{e^{-\beta E_i}}{Q} = \frac{e^{-\beta E}}{\sum_E \Omega(E)\,e^{-\beta E}} = \frac{e^{-\beta E}}{\Omega(E)\,e^{-\beta E}} = \frac{1}{\Omega(E)}.$$

The meaning and source of β

Take a system with a specific set of energy states $\{E_i\}$. The average energy is set by $\beta$:

$$\bar E = \sum_i E_i P_i = \sum_i \frac{E_i e^{-\beta E_i}}{\sum_{i'} e^{-\beta E_{i'}}} = \frac{\sum_i E_i e^{-\beta E_i}}{\sum_i e^{-\beta E_i}}$$

Why is there a free parameter in the canonical ensemble probabilities that lets us choose the average energy?

Recall the derivation (Step 1). The simulated heat bath was first in thermal equilibrium with its environment at $T$. Then the system was isolated, which resulted in a fixed total energy $\mathcal{E}$. This is the source of the freedom. $\mathcal{E}$ is directly related to the average energy (and thus to $\beta$), since $a_i = \mathcal{A}P_i$:

$$\mathcal{E} = \sum_i a_i E_i = \mathcal{A}\sum_i P_i E_i = \mathcal{A}\bar E$$

[Figure: Step 1 of the construction. An abstract canonical ensemble, and a simulated heat bath of 𝒜 systems brought to equilibrium at T and then isolated.]
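$\bar E(\beta)$ decreases monotonically with $\beta$, because its derivative is minus the energy variance. A given average energy $\bar E = \mathcal{E}/\mathcal{A}$ therefore fixes $\beta$ uniquely. The sketch below finds that $\beta$ by bisection for a hypothetical set of four non-degenerate levels. The bracket $[0, 50]$ assumes the target lies below the infinite-temperature average.

```python
import math

def mean_energy(levels, beta):
    """E_bar(beta); energies are shifted by their minimum before exponentiating (P_i unchanged)."""
    E0 = min(levels)
    w = [math.exp(-beta * (E - E0)) for E in levels]
    return sum(E * wi for E, wi in zip(levels, w)) / sum(w)

def beta_for_mean_energy(levels, target, lo=0.0, hi=50.0, tol=1e-12):
    """Bisection: E_bar decreases monotonically with beta, so bracket the target and halve."""
    if not (mean_energy(levels, hi) <= target <= mean_energy(levels, lo)):
        raise ValueError("target mean energy not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_energy(levels, mid) > target:
            lo = mid   # average energy still too high: increase beta
        else:
            hi = mid
    return 0.5 * (lo + hi)

levels = [0.0, 1.0, 2.0, 3.0]   # hypothetical energies, arbitrary units
beta = beta_for_mean_energy(levels, 0.75)
print(beta, mean_energy(levels, beta))
```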

Identifying β

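Recall the “thermodynamic equation of state”:

$$dE = T\,dS - p\,dV \;\Rightarrow\; \left(\frac{\partial E}{\partial V}\right)_{N,T} = T\left(\frac{\partial S}{\partial V}\right)_{N,T} - p\left(\frac{\partial V}{\partial V}\right)_{N,T} \;\Rightarrow\; \left(\frac{\partial E}{\partial V}\right)_{N,T} - T\left(\frac{\partial p}{\partial T}\right)_{V,N} = -p$$

The last step uses the Maxwell relation $(\partial S/\partial V)_{T,N} = (\partial p/\partial T)_{V,N}$.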

Idea: compose the same relation from ensemble averages, and identify $\beta$. But what should be held constant? Try $\beta$. That is, seek out a relation between: 1) $\bar p$, 2) $(\partial\bar E/\partial V)_{N,\beta}$, and 3) $(\partial\bar p/\partial\beta)_{V,N}$.

Energy from ensemble average (derived in the previous section):

$$\{\text{Measured energy}\} = \bar E(N, V, \beta) = \frac{\sum_i E_i(N, V)\,e^{-\beta E_i(N, V)}}{Q(N, V, \beta)}.$$

(1) Pressure from ensemble average: for a system in state $i$, $dE_i = -p_i\,dV$ is the work done on the system for a volume change $dV$. Thus we understand what pressure is for a single microstate:

$$p_i = -\left(\frac{\partial E_i}{\partial V}\right)_N$$

Macroscopic pressure:

$$\bar p = \sum_i p_i P_i = -\sum_i\left(\frac{\partial E_i}{\partial V}\right)_N\frac{e^{-\beta E_i(N, V)}}{Q(N, V, \beta)}$$

At this point it may seem logical to solve for $\beta(\mathcal{E})$ and then seek $\mathcal{E}(T)$. However, it turns out to be much better to directly seek $\beta(T)$.

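(2) The energy derivative, using the quotient rule $f(x) = g(x)/h(x) \Rightarrow f'(x) = \left[h(x)g'(x) - h'(x)g(x)\right]/h^2(x)$:

$$\left(\frac{\partial\bar E}{\partial V}\right)_{N,\beta} = \frac{1}{Q}\sum_i\left(\frac{\partial E_i}{\partial V}\right)_N e^{-\beta E_i} - \frac{\beta}{Q}\sum_i E_i\left(\frac{\partial E_i}{\partial V}\right)_N e^{-\beta E_i} + \frac{\beta}{Q^2}\left(\sum_i\left(\frac{\partial E_i}{\partial V}\right)_N e^{-\beta E_i}\right)\left(\sum_i E_i e^{-\beta E_i}\right)$$

$$= -\bar p + \beta\,\overline{Ep} - \beta\,\bar E\,\bar p,$$

where $\overline{Ep} = \sum_i E_i\,p_i\,P_i$.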

(3) The pressure derivative:

$$\left(\frac{\partial\bar p}{\partial\beta}\right)_{N,V} = \{\text{similar to the energy derivative}\} = \bar E\,\bar p - \overline{Ep}$$

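Assembling (1-3):

$$\Rightarrow\; \left(\frac{\partial\bar E}{\partial V}\right)_{N,\beta} + \beta\left(\frac{\partial\bar p}{\partial\beta}\right)_{N,V} = -\bar p$$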
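The identity just assembled holds for any set of levels $E_i(V)$, which makes it a good target for a finite-difference check. The sketch below makes the following assumptions:

- particle-in-a-cubic-box levels, $E_i \propto V^{-2/3}$, so that $p_i = \tfrac{2}{3}E_i/V$;
- only the 11 lowest states are kept;
- $V$ and $\beta$ are arbitrary.

The residual $(\partial\bar E/\partial V)_{N,\beta} + \beta(\partial\bar p/\partial\beta)_{N,V} + \bar p$ should vanish up to finite-difference error.

```python
import math

# n_x^2 + n_y^2 + n_z^2 for the 11 lowest states of a cubic box (degeneracies included);
# in units where h^2 / (8 m) = 1, E_i(V) = c_i * V**(-2/3)
c = [3, 6, 6, 6, 9, 9, 9, 11, 11, 11, 12]

def averages(V, beta):
    """Return (E_bar, p_bar) using p_i = -dE_i/dV = (2/3) E_i / V."""
    E = [ci * V ** (-2.0 / 3.0) for ci in c]
    w = [math.exp(-beta * Ei) for Ei in E]
    Q = sum(w)
    E_bar = sum(Ei * wi for Ei, wi in zip(E, w)) / Q
    p_bar = sum((2.0 / 3.0) * Ei / V * wi for Ei, wi in zip(E, w)) / Q
    return E_bar, p_bar

V, beta, step = 2.0, 0.4, 1e-5   # step: finite-difference increment
dE_dV = (averages(V + step, beta)[0] - averages(V - step, beta)[0]) / (2 * step)      # fixed beta
dp_dbeta = (averages(V, beta + step)[1] - averages(V, beta - step)[1]) / (2 * step)   # fixed V
p_bar = averages(V, beta)[1]
residual = dE_dV + beta * dp_dbeta + p_bar
print(f"p_bar = {p_bar:.6f}, residual = {residual:.2e}")
```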

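Compare ensemble averages vs. thermodynamics:

$$\left(\frac{\partial\bar E}{\partial V}\right)_{N,\beta} + \beta\left(\frac{\partial\bar p}{\partial\beta}\right)_{N,V} = -\bar p \quad\Longleftrightarrow\quad \left(\frac{\partial E}{\partial V}\right)_{N,T} - T\left(\frac{\partial p}{\partial T}\right)_{N,V} = -p$$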

Wrong sign? How do we fix this? Is there more than one solution?

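$$\beta\left(\frac{\partial\bar p}{\partial\beta}\right)_{N,V} = -T\left(\frac{\partial p}{\partial T}\right)_{V,N} \;\Rightarrow\; -\frac{dT}{T} = \frac{d\beta}{\beta} \;\Rightarrow\; -\ln T = \ln\beta + C \;\Rightarrow\; -\ln T - \ln k = \ln\beta \;\Rightarrow\; \ln\frac{1}{kT} = \ln\beta$$

Here the integration constant is written as $C = \ln k$, with $k$ a constant. Hence

$$\beta = \frac{1}{kT}, \qquad P_i = \frac{e^{-E_i/(kT)}}{\sum_i e^{-E_i/(kT)}}.$$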

What did we learn? In the derivation of the canonical ensemble, $\beta$ appeared because the total energy $\mathcal{E}$ of the simulated heat bath was left undetermined. We now have the exact connection between the variable $\beta$ and the thermodynamic temperature $T$. This defines temperature in the “microstate picture”.


What is $k$ in $\beta = 1/(kT)$?

Canonical ensemble with pairs of systems: like the canonical ensemble, but every subsystem = two separate systems, A and B, in thermal equilibrium with each other. Energy state occupations $\{a_i\}$, $\{b_j\}$ ⇒

$$W(a, b) = \frac{\mathcal{A}_A!}{\prod_i a_i!}\,\frac{\mathcal{A}_B!}{\prod_j b_j!}.$$

Most probable distribution:

$$P_{ij} = \frac{e^{-\beta E_i^A}\,e^{-\beta E_j^B}}{\sum_{i'} e^{-\beta E_{i'}^A}\,\sum_{j'} e^{-\beta E_{j'}^B}} = P_i^A P_j^B.$$

From thermodynamics ⇒ the systems have the same $T$. From above ⇒ they have the same $\beta = 1/(kT)$. ⇒ Two arbitrary systems in thermal equilibrium have the same $k$ ⇒ $k$ is a general numerical constant, $k_B$. Take the numerical value from any system, e.g., the ideal gas:

$$k_B = 1.38 \cdot 10^{-23}\ \text{J/K} = 8.6 \cdot 10^{-5}\ \text{eV/K}$$

Identifying the Entropy

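Similar strategy as for $\beta$: use the 1st and 2nd laws to express the thermodynamic entropy in terms of internal energy change + work.

1st law: $dE = \delta Q_{\text{rev}} + \delta W_{\text{rev}}$. 2nd law: $\delta Q_{\text{rev}} = T\,dS$. Together:

$$dS = \frac{1}{T}\delta Q_{\text{rev}} = \frac{1}{T}\left(dE - \delta W_{\text{rev}}\right)$$

Corresponding quantities from the canonical ensemble: let the systems in the ensemble do some reversible work. This is an infinitesimal change of state (all systems in the ensemble change by $dV$) along a nice reversible path, in contact with the heat bath so that $T$ = const.

$$\delta\bar W_{\text{rev}} = -\bar p\,dV = -\sum_i P_i\,p_i\,dV = \sum_i P_i\left(\frac{\partial E_i}{\partial V}\right)_N dV = \sum_i P_i\,dE_i.$$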

Recall: the partition function $Q = \sum_i e^{-\beta E_i} = Q(N, V, T)$ is the “weighted sum over all states the system can occupy”. Microcanonical case, all $E_i = E_0 = 0$ ⇒ $Q = \Omega(E_0)$.

What can we construct that looks like $dS$? $Q$ is a weighted count of the microstates, and entropy is somehow connected to the number of microstates. But entropy is additive, while $Q$ is multiplicative (for independent systems, $Q = Q_A Q_B$). Try $\ln Q$.

Define: $f(\beta, \{E_i\}) = \ln Q = \ln\left(\sum_i e^{-\beta E_i}\right)$.

Total differential:

$$df = \left(\frac{\partial f}{\partial\beta}\right)_{E_i} d\beta + \sum_i\left(\frac{\partial f}{\partial E_i}\right)_{\beta, E_{j\neq i}} dE_i.$$

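Note:

1. $$\left(\frac{\partial f}{\partial\beta}\right)_{E_i} = \frac{\partial f}{\partial Q}\cdot\frac{\partial Q}{\partial\beta} = \frac{-\sum_i E_i e^{-\beta E_i}}{Q} = -\bar E$$

2. $$\left(\frac{\partial f}{\partial E_i}\right)_{\beta, E_{j\neq i}} = \frac{\partial f}{\partial Q}\cdot\left(\frac{\partial Q}{\partial E_i}\right)_{E_{j\neq i}} = -\frac{\beta e^{-\beta E_i}}{Q} = -\beta P_i$$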

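This gives

$$df = -\bar E\,d\beta - \beta\sum_i P_i\,dE_i \;\Rightarrow\; d(f + \beta\bar E) = df + \bar E\,d\beta + \beta\,d\bar E = \beta\,d\bar E - \beta\sum_i P_i\,dE_i$$

$$\Rightarrow\; d(f + \beta\bar E) = \beta\left(d\bar E - \delta\bar W_{\text{rev}}\right)$$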

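Compare state averages and thermodynamics:

$$d(f + \beta\bar E) = \beta\left(d\bar E - \delta\bar W_{\text{rev}}\right) \quad\Longleftrightarrow\quad dS = \frac{1}{T}\left(dE - \delta W_{\text{rev}}\right)$$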

We have found a way to define entropy on the microstate level of the theory that is consistent with thermodynamics! With $\beta = 1/(k_B T)$:

$$dS = k_B\,d(f + \beta\bar E) = d\left(k_B(f + \beta\bar E)\right) \;\Rightarrow\; S = k_B\ln Q + \bar E/T + \text{constant}$$

Set constant = 0. (Only $\Delta S$ is relevant in thermodynamics; the constant is related to the third law.)

Entropy in the canonical ensemble:

$$S = k_B\ln Q + \frac{\bar E}{T}$$

What did we learn? We can understand what entropy is on the microstate level! Its main component is the logarithm of a weighted count of the number of microstates. $S$ is not a mechanistic entity: there is no $S_i$ to be summed up over all states. $S$ is a property of the whole probability distribution. Its meaning is roughly “how large is the space of microstates this system may be in, given its current thermodynamic properties”. (More on this next lecture.)

Entropy in the Microcanonical Ensemble

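$$Q = \sum_i e^{-\beta E_i} = \{E_i = E\} = \Omega(E)\,e^{-\beta E} \;\Rightarrow\; S = k_B\ln\left(\Omega(E)\,e^{-\beta E}\right) + \frac{\bar E}{T} = k_B\ln\Omega(E) - \frac{E}{T} + \frac{E}{T}$$

The last step uses $k_B\beta = 1/T$ and $\bar E = E$. ⇒

Entropy in the microcanonical ensemble:

$$S = k_B\ln\Omega$$

(This is written on Boltzmann's grave.)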


A Very General Definition of Entropy, Gibbs' Entropy Formula

$$S = -k_B\sum_i P_i\ln P_i.$$

(See McQuarrie problem 2.5; hint: just plug in $P_i = e^{-\beta E_i}/Q$.)
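The three expressions for $S$ can be compared numerically. This sketch works in units with $k_B = 1$ and uses a hypothetical list of microstate energies. It evaluates $k_B\ln Q + \bar E/T$ and $-k_B\sum_i P_i\ln P_i$. It then repeats the calculation for a single six-fold degenerate energy, where both should reduce to $k_B\ln\Omega = \ln 6$.

```python
import math

def canonical_entropies(levels, T, kB=1.0):
    """Return (kB ln Q + E_bar/T, -kB sum_i P_i ln P_i) for the given microstate energies."""
    beta = 1.0 / (kB * T)
    w = [math.exp(-beta * E) for E in levels]
    Q = sum(w)
    P = [wi / Q for wi in w]
    E_bar = sum(p * E for p, E in zip(P, levels))
    S_thermo = kB * math.log(Q) + E_bar / T
    S_gibbs = -kB * sum(p * math.log(p) for p in P if p > 0)
    return S_thermo, S_gibbs

levels = [0.0, 0.5, 0.5, 1.3, 2.0]   # hypothetical microstate energies
print(canonical_entropies(levels, T=0.8))
print(canonical_entropies([1.0] * 6, T=0.8), math.log(6))   # one energy, Omega = 6
```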

Suggested Homework

• McQuarrie 2.5

• Exam problem: 2006E2-1(a)

• Exam problem: 2001E2-3(b)

• Exam problem: 2001E2-5(a-d) (we have not talked about alloys yet, but just use the entropy expression for the microcanonical ensemble and combinatorics).

MIT 3.20, Rickard Armiento, Lecture 4

Lecture 4: Entropy Discussion. Physical Insights from Statistical Mechanics.

• October 21, 2011, parts from McQuarrie 1.1 - 2.4

Past Lectures

Probabilities in the microcanonical ensemble: $P_i = 1/\Omega(E)$ = constant.

Canonical partition function: $Q = \sum_i e^{-E_i/(k_B T)} = \sum_E \Omega(E)\,e^{-E/(k_B T)}$.

Probabilities in the canonical ensemble: $P_i = e^{-E_i/(k_B T)}/Q$.

Entropy:

$$S = \begin{cases} k_B\ln\Omega & \text{(microcanonical)} \\ k_B\ln Q + \dfrac{\bar E}{T} & \text{(canonical)} \end{cases} \;= -k_B\sum_i P_i\ln P_i.$$

$S$ is a property of the probability distribution of states, not a regular variable.

Interpretation of the Partition Function

Partition function = “how the probabilities are partitioned among the microstates”.

Typical for probabilities:

$$P = \frac{\text{Weight of one thing}}{\text{Summed weight of all things}} \quad\Longleftrightarrow\quad \text{Ensemble: } P_i = \frac{e^{-E_i/(k_B T)}}{Q}, \qquad Q = \sum_i e^{-E_i/(k_B T)}.$$

This interpretation fits well, since the sum in $Q$ arose from normalizing the probabilities (it is their denominator). Hence $Q$ is the “sum of all states multiplied by their weights” = “the size of the state space”. Also note: the “size of the state space” is directly controlled by $T$.

Solving for Microstates

Solve $\hat H\Psi = E\Psi$ ⇒ energy levels with degeneracy. Label the states $\Psi_{j,k}$, where $j$ = energy level (0, 1, ...) and $k$ counts the degenerate states for that energy, $k = 1, 2, \dots, \Omega(E_j)$:

$E_0$: $\{\Psi_{0,1}, \Psi_{0,2}, \Psi_{0,3}, \dots, \Psi_{0,\Omega(E_0)}\}$

$E_1$: $\{\Psi_{1,1}, \Psi_{1,2}, \Psi_{1,3}, \dots, \Psi_{1,\Omega(E_1)}\}$

$E_2$: $\{\Psi_{2,1}, \Psi_{2,2}, \Psi_{2,3}, \dots, \Psi_{2,\Omega(E_2)}\}$

... Probabilities $P_i = P_{j,k}$.

Example from lecture 1: one independent particle: $\omega \sim 10^{28}$. Many non-interacting particles: $\Omega \sim 10^{10^{23}}$.

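Physics is independent of $E_0$

$$Q = \sum_i e^{-\beta E_i} = e^{-\beta E_0}\sum_i e^{-\beta(E_i - E_0)} = e^{-\beta E_0}\sum_i e^{-\beta\Delta E_i}, \qquad (\Delta E_i \ge 0) \;\Rightarrow$$

$$P_i = P_{j,k} = \frac{e^{-\beta E_0}e^{-\beta\Delta E_j}}{e^{-\beta E_0}\sum_{j,k} e^{-\beta\Delta E_j}} = \frac{e^{-\beta\Delta E_j}}{\sum_{j,k} e^{-\beta\Delta E_j}}$$

This shows that the probabilities are independent of $E_0$, so you can set your energy scale so that $E_0 = 0$. A partition function with only one energy, $E = 0$:

$$Q = \sum_{j=[0],\,k} e^{-\beta\cdot 0} = \Omega$$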
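Shifting every energy by the same amount multiplies $Q$ by $e^{-\beta\cdot\text{shift}}$ and leaves each $P_i$ unchanged. Below is a short check with hypothetical energies and an arbitrary shift.

```python
import math

def partition_function(energies, beta):
    return sum(math.exp(-beta * E) for E in energies)

def probabilities(energies, beta):
    Q = partition_function(energies, beta)
    return [math.exp(-beta * E) / Q for E in energies]

levels = [0.0, 0.3, 0.3, 1.1]   # hypothetical microstate energies
beta, shift = 2.0, 5.0          # shift = arbitrary change of the energy zero
shifted = [E + shift for E in levels]

P = probabilities(levels, beta)
P_shifted = probabilities(shifted, beta)
max_diff = max(abs(a - b) for a, b in zip(P, P_shifted))
ratio = partition_function(shifted, beta) / partition_function(levels, beta)
print(max_diff, ratio, math.exp(-beta * shift))
```

In practice this freedom is also a numerical tool. Subtracting the lowest energy before exponentiating avoids underflow when $\beta E$ is large, without changing any probability.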

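3rd Law in Statistical Mechanics: $S \to 0$ as $T \to 0$ ($\beta \to \infty$)

$$P_i = P_{j,k} = \frac{e^{-\beta\Delta E_j}}{\sum_{j,k} e^{-\beta\Delta E_j}}$$

$\Delta E_0 = 0$ (ground state): $\lim_{\beta\to\infty} e^{-\beta\Delta E_0} = 1$.

$\Delta E_j > 0$ (excited state): $\lim_{\beta\to\infty} e^{-\beta\Delta E_j} = 0$.

$$\Rightarrow\; \lim_{\beta\to\infty} P_0 = \lim_{\beta\to\infty}\frac{e^{-\beta\Delta E_0}}{\sum_{j,k} e^{-\beta\Delta E_j}} = \frac{1}{\Omega(E_0)}, \qquad \lim_{\beta\to\infty} P_{i\neq 0} = \lim_{\beta\to\infty}\frac{e^{-\beta\Delta E_j}}{\sum_{j,k} e^{-\beta\Delta E_j}} = 0$$

No ground-state degeneracy: $P_0 = 1$ and $P_{i\neq 0} = 0$ ⇒ $S = -k_B\sum_i P_i\ln P_i = 0$ (using $0\ln 0 \to 0$). This is consistent with the 3rd law.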

Physics does not depend on absolute $S$, but the 3rd law sets $S = 0$ for $T \to 0$. With no ground-state degeneracy, this is consistent with our choice of ‘constant = 0’ when we derived the entropy, since $\ln 1 = 0$.

With ground-state degeneracy:

$$P_0 = \frac{1}{\Omega(E_0)} \text{ (for each ground state)}, \qquad P_{i\neq 0} = 0, \qquad S = -k_B\sum_i P_i\ln P_i = k_B\ln\Omega(E_0)$$

Inconsistent with the 3rd law? No: the ground-state degeneracy is generally small compared to the huge numbers involved at larger $T$ ⇒ the 3rd law is valid in the macroscopic / thermodynamic limit.

Typical example: $\Omega(E_0) \sim N$, say $10^{23}$ ⇒ $k_B\ln N = 1.38\cdot 10^{-23}\,\ln(10^{23}) \sim 10^{-21}$ J/K ≈ 0.

Infinite Temperature Limit, $T \to \infty$ ($\beta \to 0$)

$e^{-\beta\Delta E_i} \to 1$ for all states: all states are completely accessible.

$P_i \to$ constant, the same for all states.

$S$ = maximal: the state space is as large as it can be.

This is a general observation: if all states are accessible and equally probable, $S$ is maximal.
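Both limits can be seen by evaluating the Gibbs entropy over a range of temperatures. The sketch assumes $k_B = 1$ and a hypothetical spectrum: a two-fold degenerate ground state plus 3 + 5 excited states. $S$ should therefore rise from $\ln\Omega(E_0) = \ln 2$ at low $T$ toward $\ln(\text{total number of states}) = \ln 10$ at high $T$. Energies are shifted by their minimum before exponentiating, which does not change the probabilities.

```python
import math

def entropy(levels, T, kB=1.0):
    """Gibbs entropy -kB sum P ln P; energies shifted by their minimum to avoid underflow."""
    E0 = min(levels)
    w = [math.exp(-(E - E0) / (kB * T)) for E in levels]
    Q = sum(w)
    return -kB * sum((wi / Q) * math.log(wi / Q) for wi in w if wi > 0)

# hypothetical spectrum: 2-fold degenerate ground state, then 3 and 5 excited states
levels = [0.0, 0.0] + [1.0] * 3 + [2.0] * 5
temps = (0.01, 0.3, 1.0, 10.0, 1e6)
S_values = [entropy(levels, T) for T in temps]
for T, S in zip(temps, S_values):
    print(f"T = {T:g}: S = {S:.6f}")
print("ln Omega(E0) =", math.log(2), " ln(total states) =", math.log(len(levels)))
```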

Entropy for Intermediate Temperatures 0 < T < ∞

Non-uniform distribution $P_i$. Intermediate entropy between $S = 0$ and $S = S_{\max}$ (possibly ∞).

[Figure: Probability distribution over microstates. Example: a solid, with ordered, disordered, and liquid states.]

$E_{\text{ordered}} < E_{\text{disordered}} < E_{\text{liquid}}$

T low: $P_{\text{ordered}} > P_{\text{disordered}}, P_{\text{liquid}}$. The system resides in “few” states; disorder is energetically too expensive.

T intermediate: many more energy levels become available. The disordered phase has higher energy but also higher degeneracy, so $P_{\text{disordered}}$ becomes more significant. Other ordered states are also possible.

T high: $P_{\text{liquid}}$ becomes more significant, since $\Omega(E_{\text{liq}}) \gg \Omega(E_{\text{ordered}})$. (More about this later: 2nd law, spontaneous processes.)

Interpretation of Entropy

The partition function ∼ size of the state space. Temperature acts as a tuning knob that limits the state space. $\Delta S_{\text{world}}$ increases ⇒ “nature wants to increase its state space”: heat transfers from one system to another if this increases the total state space. Why? Purely because of the statistics involved. All states are “possible”, but a larger state space makes it more likely to find the system there. Huge numbers involved ⇒ laws of thermodynamics. The balance is controlled by the response of the state space size to a change in internal energy:

$$\left(\frac{\partial S}{\partial U}\right)_{V,N} = \frac{1}{T}.$$


Connection to disorder: there are almost always more disordered states than ordered ones ⇒ disorder = high entropy and order = low entropy. The increase of state space usually means the world evolves from order → disorder.

Arrow of time, direction of memory: with reversible equations of motion, why does time move forward? $\Delta S > 0$, and the idea that disorder increases, breaks time symmetry.

Entropy in Information Theory

Claude Shannon discussed the nature of “lost information” in communication signals. In his words:

“My greatest concern was what to call it. I thought of calling it ‘information’, but the word was overly used, so I decided to call it ‘uncertainty’. When I discussed it with John von Neumann, he had a better idea. Von Neumann told me, ‘You should call it entropy, for two reasons. In the first place your uncertainty function has been used in statistical mechanics under that name, so it already has a name. In the second place, and more important, nobody knows what entropy really is, so in a debate you will always have the advantage.’”

M. Tribus, E.C. McIrvine, “Energy and information”, Scientific American, 224 (1971).

Entropy in Information Theory

Entropy = “uncertainty”: proportional to the number of optimal binary questions one needs to ask to determine exactly which state a system is in.

For the complete system:

• Only one state: S = 0, complete certainty, no questions needed.

• All probabilities equal: S = maximal uncertainty, maximal number of questions needed.

• Not all probabilities equal: S = intermediate, some questions needed to determine the state.

Eight-Sided Die

Which side of the die faces up? Ask binary questions = questions that can be answered with a yes or no. Asking one such question = one bit of information.

[Figure: probability P_i versus face i = 1, ..., 8; all faces equally likely, P_i = 1/8.]

“Dumb” questions: Is it face 1? (Probability 1/8 that the answer is yes, 7/8 that it is no.) Is it face 2? ...

These are not optimal questions; the average number needed is

$$\frac{1}{8}(1 + 2 + 3 + 4 + 5 + 6 + 7 + 7) = 4.375$$

(The last two faces both need 7 questions: after six "no" answers, a seventh question decides between faces 7 and 8.)

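“Clever” (optimal) questions

Equal probabilities, P_i = 1/8 for faces 1 to 8; each question halves the remaining set:

Question 1: {1,2,3,4,5,6,7,8} → {1,2,3,4} or {5,6,7,8}

Question 2: {1,2,3,4} → {1,2} or {3,4};  {5,6,7,8} → {5,6} or {7,8}

Question 3: {1,2} → 1 or 2;  {3,4} → 3 or 4;  {5,6} → 5 or 6;  {7,8} → 7 or 8

Number of optimal binary questions: $\frac{1}{8}(3 \times 8) = 3$. Can get this as: $\log_2 8 = 3$.

Unequal probabilities P_i: a six-sided die with $P_1 = P_2 = 2/8$ and $P_3 = P_4 = P_5 = P_6 = 1/8$.

Question 1: {1,2,3,4,5,6} → {1,2} or {3,4,5,6}

Question 2: {1,2} → 1 or 2;  {3,4,5,6} → {3,4} or {5,6}

Question 3: {3,4} → 3 or 4;  {5,6} → 5 or 6

Average number of questions:

$$2 \times \frac{2}{8} + 2 \times \frac{2}{8} + 3 \times \frac{1}{8} + 3 \times \frac{1}{8} + 3 \times \frac{1}{8} + 3 \times \frac{1}{8} = 2.5$$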
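The question counts above can be reproduced in a few lines. This sketch swaps in Huffman's algorithm, a standard way to build an optimal yes/no questioning scheme that the lecture does not name: repeatedly merge the two least likely groups of outcomes, and each merge costs one more question for every outcome in them. The "dumb" strategy asks about the faces one by one. Exact fractions are used so the averages come out exactly.

```python
import heapq
from fractions import Fraction
from itertools import count

def linear_question_counts(n):
    """'Dumb' strategy: ask 'is it face 1?', 'is it face 2?', ... in order.
    Face k needs k questions, except the last face, known after n - 1 no's."""
    return [min(k, n - 1) for k in range(1, n + 1)]

def huffman_question_counts(probs):
    """Optimal yes/no questions via Huffman's algorithm."""
    questions = [0] * len(probs)
    tiebreak = count()  # avoids comparing the group lists when probabilities tie
    heap = [(p, next(tiebreak), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    while len(heap) > 1:
        p1, _, group1 = heapq.heappop(heap)
        p2, _, group2 = heapq.heappop(heap)
        for i in group1 + group2:
            questions[i] += 1  # one more question separates these outcomes
        heapq.heappush(heap, (p1 + p2, next(tiebreak), group1 + group2))
    return questions

def average_questions(probs, counts):
    return sum(p * c for p, c in zip(probs, counts))

fair8 = [Fraction(1, 8)] * 8
loaded6 = [Fraction(2, 8)] * 2 + [Fraction(1, 8)] * 4

print(average_questions(fair8, linear_question_counts(8)))           # 35/8
print(average_questions(fair8, huffman_question_counts(fair8)))      # 3
print(average_questions(loaded6, huffman_question_counts(loaded6)))  # 5/2
```

For ties, Huffman's algorithm may build different trees, but the average number of questions is the same.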

General Case

Given a set of mutually exclusive possible states, i = 1, 2, ..., each with probability P_i known beforehand, the average number of optimal binary questions needed to find the state is

$$I = -\sum_i P_i \log_2 P_i$$

Communication

Instead of a state, consider a communication stream of bits. What is the probability that the next bit is 0 or 1?

• Only 0s in the stream: S = 0, complete certainty.

• Completely random: S = maximal uncertainty.

• $P_0 = 0.6$, $P_1 = 0.4$: S = intermediate.

• Some complicated function of previous bits (think: a message in English): S = intermediate.

$$I = -\sum_i P_i \log_2 P_i = \text{bits of “information” in the message.}$$

Suggested homework

• 2002E2-2

• 2003E2-1a

• 2007E2-5(a-c)
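The formula $I = -\sum_i P_i \log_2 P_i$ can be evaluated directly for the die examples and for a bit stream. The second function is a hypothetical helper that estimates I per symbol from observed frequencies; it treats symbols as independent, so it ignores the correlations between successive bits that the "message in English" case refers to.

```python
import math
from collections import Counter

def shannon_information(probs):
    """I = -sum_i P_i log2 P_i in bits; outcomes with P_i = 0 contribute nothing."""
    return sum(-p * math.log2(p) for p in probs if p > 0)

def empirical_bits_per_symbol(message):
    """Zeroth-order estimate of I from symbol frequencies (correlations ignored)."""
    counts = Counter(message)
    n = len(message)
    return shannon_information([c / n for c in counts.values()])

print(shannon_information([1 / 8] * 8))                # 3.0  (fair eight-sided die)
print(shannon_information([2 / 8] * 2 + [1 / 8] * 4))  # 2.5  (unequal six-sided die)
print(empirical_bits_per_symbol("0" * 20))             # 0.0  (only 0s in the stream)
print(round(shannon_information([0.6, 0.4]), 3))       # 0.971
```

The values for the dice match the average numbers of optimal questions found above, and the biased stream carries a little less than one bit per symbol.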

MIT 3.20, Rickard Armiento, Lecture 5

Lecture 5: Relations with Thermo. General Boundary Conditions (the box)

• October 24, 2011, McQuarrie 2.4 - 3.2

What We Know

Ensemble averages: $M_{\text{measured}} = \bar{M} = \sum_i M_i P_i$

P_i = probability of finding a system in microstate i of the ensemble.

Probabilities in the microcanonical ensemble: $P_i = 1/\Omega(E)$ = constant

Entropy in the microcanonical ensemble: $S = k_B \ln \Omega(E)$

Canonical partition function: $Q = \sum_i e^{-E_i/(k_B T)} = \sum_E \Omega(E) e^{-E/(k_B T)}$

Probabilities in the canonical ensemble: $P_i = e^{-E_i/(k_B T)}/Q$

Entropy in the canonical ensemble: $S = k_B \ln Q + \bar{E}/T$

Completing the Connection to Thermodynamics

Let T, V, N be constant: canonical ensemble.

Helmholtz free energy for the canonical ensemble: the thermodynamic potential with the same natural variables as the basis for the canonical ensemble:

$$A = E - TS = \left\{S = k_B \ln Q + \frac{\bar{E}}{T}\right\} = E - T\left(k_B \ln Q + \frac{\bar{E}}{T}\right) \;\Rightarrow$$

Fundamental relation in natural variables:

$$A(T, V, N) = -k_B T \ln Q(T, V, N)$$

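Connects the ‘microstate world’ (Q) and the macroscopic thermodynamical world (A). All other relations follow from this one + thermodynamics.

Deriving equations of state:

$$S = -\left(\frac{\partial A}{\partial T}\right)_{V,N}, \qquad p = -\left(\frac{\partial A}{\partial V}\right)_{T,N}, \qquad \mu = +\left(\frac{\partial A}{\partial N}\right)_{T,V}$$

Eqns. of state ⇒

$$S = k_B \ln Q + k_B T\left(\frac{\partial \ln Q}{\partial T}\right)_{V,N}, \qquad p = k_B T\left(\frac{\partial \ln Q}{\partial V}\right)_{T,N}, \qquad \mu = -k_B T\left(\frac{\partial \ln Q}{\partial N}\right)_{T,V}$$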

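It works equally well to just solve for the quantities:

$$A = E - TS \;\Rightarrow\; S = \frac{E - A}{T} = \{A = -k_B T \ln Q\} = \frac{\bar{E}}{T} + k_B \ln Q.$$

The energy:

$$E = A + TS = -k_B T \ln Q + T\left(k_B \ln Q + k_B T\left(\frac{\partial \ln Q}{\partial T}\right)_{V,N}\right) = k_B T^2\left(\frac{\partial \ln Q}{\partial T}\right)_{V,N}$$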

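Another useful formula for the energy:

$$\left(\frac{\partial Q}{\partial \beta}\right)_{V,N} = -\sum_i E_i e^{-\beta E_i}, \qquad \bar{E} = \frac{\sum_i E_i e^{-\beta E_i}}{Q} = -\frac{1}{Q}\left(\frac{\partial Q}{\partial \beta}\right)_{V,N} = \left\{\frac{d \ln f(x)}{dx} = \frac{f'(x)}{f(x)}\right\} \;\Rightarrow\; \bar{E} = -\left(\frac{\partial \ln Q}{\partial \beta}\right)_{V,N}.$$

The partition function Q ⇒ everything (in the thermodynamical description of the system).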
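To illustrate "Q ⇒ everything", here is a small numerical sketch for a hypothetical list of energy levels, in units with $k_B = 1$. It builds ln Q, A, Ē and S from the formulas above, then checks Ē against a finite-difference $-\partial \ln Q/\partial\beta$ and checks $S = k_B\ln Q + \bar{E}/T$ against the Gibbs form $-k_B\sum_i P_i \ln P_i$ (the Gibbs form is not derived in this lecture; it is used here only as an independent check).

```python
import math

def thermo_from_levels(levels, T, kB=1.0):
    """Canonical quantities from energy levels E_i (degenerate levels listed repeatedly)."""
    beta = 1.0 / (kB * T)
    E0 = min(levels)  # shift energies so that the exponentials cannot overflow
    lnQ = math.log(sum(math.exp(-beta * (E - E0)) for E in levels)) - beta * E0
    P = [math.exp(-beta * E - lnQ) for E in levels]
    A = -kB * T * lnQ
    E_mean = sum(p * E for p, E in zip(P, levels))
    S = kB * lnQ + E_mean / T
    return lnQ, A, E_mean, S, P

def lnQ_at(levels, beta):
    return math.log(sum(math.exp(-beta * E) for E in levels))

levels = [0.0, 0.5, 1.0, 1.0, 2.5]  # hypothetical spectrum, k_B = 1 units
T = 0.8
lnQ, A, E_mean, S, P = thermo_from_levels(levels, T)

beta, h = 1.0 / T, 1e-5
E_from_lnQ = -(lnQ_at(levels, beta + h) - lnQ_at(levels, beta - h)) / (2 * h)
S_gibbs = -sum(p * math.log(p) for p in P)  # S/k_B = -sum_i P_i ln P_i
print(abs(E_mean - E_from_lnQ) < 1e-8, abs(S - S_gibbs) < 1e-12)  # True True
```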

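Example

N distinguishable systems that can each be in 2 energy states, 0 and ε:

$$\epsilon_j = n_j \epsilon, \quad n_j \in \{0, 1\} \;\Rightarrow\; E = \sum_{j=1}^{N} n_j \epsilon, \qquad \rho = \sum_j n_j.$$

$$Q = \sum_{\{n_j = 1,0\}^N} e^{-\beta\epsilon\sum_j n_j} = \sum_{\{n_j = 1,0\}^N} \prod_j e^{-\beta\epsilon n_j} = \prod_j \sum_{n_j = 1,0} e^{-\beta\epsilon n_j} = \left(\sum_{n = 1,0} e^{-\beta\epsilon n}\right)^N = (1 + e^{-\beta\epsilon})^N$$

(General result we will see later: $Q = q^N$ for identical independent distinguishable systems.)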

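$$A = -k_B T \ln Q = -k_B T \ln(1 + e^{-\beta\epsilon})^N = -N k_B T \ln(1 + e^{-\beta\epsilon}).$$

For $k_B T \ll \epsilon$ ($\beta\epsilon \gg 1$): $A/N \to 0$.

For $k_B T \gg \epsilon$ ($\beta\epsilon \ll 1$): $A/N \to -k_B T \ln 2 \approx -0.69\, k_B T$.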

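The average energy is:

$$\bar{E} = -\left(\frac{\partial \ln Q}{\partial \beta}\right)_{V,N} = -\left(\frac{\partial\, N \ln(1 + e^{-\beta\epsilon})}{\partial \beta}\right)_{V,N} = \left\{\frac{d \ln f(x)}{dx} = \frac{f'(x)}{f(x)}\right\} = -N\,\frac{-\epsilon e^{-\beta\epsilon}}{1 + e^{-\beta\epsilon}} = \frac{N\epsilon}{1 + e^{\beta\epsilon}}$$

Entropy:

$$S = k_B \ln Q + \frac{\bar{E}}{T} = k_B N \ln(1 + e^{-\beta\epsilon}) + \frac{1}{T}\,\frac{N\epsilon}{1 + e^{\beta\epsilon}}.$$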

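Average occupation. Note: this is a ‘microstate world’ question, and thus it is not derived via thermodynamics.

$$\langle\rho\rangle = \sum_i P_i \rho_i = \sum_{\{n_j = 1,0\}^N} \frac{\left(\sum_j n_j\right) e^{-\beta\epsilon\sum_j n_j}}{Q} = -\frac{1}{\beta}\frac{d}{d\epsilon}\ln Q = \{Q \text{ above}\} = -\frac{N}{\beta}\frac{d}{d\epsilon}\ln(1 + e^{-\beta\epsilon}) = -\frac{N}{\beta}\,\frac{-\beta e^{-\beta\epsilon}}{1 + e^{-\beta\epsilon}} = \frac{N}{e^{\beta\epsilon} + 1}.$$

As expected: $\langle\rho\rangle \cdot \epsilon = \bar{E}$.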
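The two-level results can be checked numerically in units with $k_B = 1$ and with arbitrary illustrative values of N, β and ε. The brute-force sum runs over all $2^N$ occupation patterns {n_j}, exactly the sum that was factorized above.

```python
import itertools
import math

def Q_bruteforce(N, beta, eps):
    """Sum exp(-beta*eps*sum_j n_j) over all 2**N occupation patterns {n_j}."""
    return sum(math.exp(-beta * eps * sum(ns))
               for ns in itertools.product((0, 1), repeat=N))

def closed_forms(N, beta, eps):
    """Q, A, E and <rho> for N two-level systems (k_B = 1)."""
    Q = (1 + math.exp(-beta * eps)) ** N
    A = -(N / beta) * math.log(1 + math.exp(-beta * eps))
    E = N * eps / (1 + math.exp(beta * eps))
    rho = N / (math.exp(beta * eps) + 1)
    return Q, A, E, rho

N, beta, eps = 6, 0.7, 1.3
Q, A, E, rho = closed_forms(N, beta, eps)
print(math.isclose(Q, Q_bruteforce(N, beta, eps)))  # True
print(math.isclose(rho * eps, E))                  # True

# A/N in the two limits (eps = 1): compare with 0 and with -k_B T ln 2
for b in (50.0, 1e-6):
    print(b, closed_forms(1, b, 1.0)[1], -math.log(2) / b)
```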

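Microcanonical ensemble in large system limit

Energy levels of the system: E = mε, i.e., m(E) = E/ε is the number of excited subsystems (0 ≤ m ≤ N).

Degeneracy:

$$\Omega(E, N) = \frac{N!}{(N - m)!\, m!}$$

Stirling’s approximation (assumes n is large): $\ln n! \approx n \ln n - n$.

Entropy:

$$S = k_B \ln \Omega(E, N) = k_B\left(N \ln N - N - (N - m)\ln(N - m) + (N - m) - m \ln m + m\right)$$

To get E as a function of temperature, we need to derive an expression for the temperature. Temperature from thermodynamics:

$$\left(\frac{\partial S}{\partial E}\right)_N = \frac{1}{T} \;\Rightarrow\; \beta = \frac{1}{k_B}\left(\frac{\partial S}{\partial E}\right)_N = \frac{1}{k_B\epsilon}\left(\frac{\partial S}{\partial m}\right)_N = \frac{1}{\epsilon}\frac{\partial}{\partial m}\Big(\underbrace{N \ln N - N}_{\to 0}\;\underbrace{-(N - m)\ln(N - m) + (N - m)}_{\to \ln(N - m)}\;\underbrace{-m \ln m + m}_{\to -\ln m}\Big) = \frac{1}{\epsilon}\ln\frac{N - m}{m} = \frac{1}{\epsilon}\ln\left(\frac{N}{m} - 1\right)$$

$$\Rightarrow\; e^{\beta\epsilon} = \frac{N}{m} - 1 \;\Rightarrow\; m = \frac{N}{1 + e^{\beta\epsilon}} \;\Rightarrow\; E = m\epsilon = \frac{N\epsilon}{1 + e^{\beta\epsilon}}. \quad \text{(Same as above!)}$$

For N, m large, the microcanonical ensemble gives the same result as the canonical ensemble. This is generally true (will be explored in a later lecture on ‘Fluctuations’).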
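How good is the Stirling step? This sketch computes β from the exact degeneracy, using log-gamma for ln N! and a centred difference in m in place of the derivative (an assumption needed because m is an integer), and compares it with the Stirling-based result $\beta = \epsilon^{-1}\ln(N/m - 1)$. Units are $k_B = 1$, ε = 1, and a quarter of the subsystems are taken to be excited.

```python
import math

def ln_omega(N, m):
    """ln[N! / ((N - m)! m!)] via log-gamma."""
    return math.lgamma(N + 1) - math.lgamma(N - m + 1) - math.lgamma(m + 1)

def beta_exact(N, m, eps):
    """beta = (1/k_B) dS/dE = (1/eps) d ln(Omega)/dm, as a centred difference in m."""
    return (ln_omega(N, m + 1) - ln_omega(N, m - 1)) / (2 * eps)

def beta_stirling(N, m, eps):
    """Stirling-based result: beta = (1/eps) ln(N/m - 1)."""
    return math.log(N / m - 1) / eps

eps = 1.0
for N in (12, 1200, 120000):
    m = N // 4  # a quarter of the subsystems excited
    b = beta_exact(N, m, eps)
    E_canonical = N * eps / (1 + math.exp(b * eps))  # canonical E at that beta
    print(N, b, beta_stirling(N, m, eps), E_canonical / (m * eps))
```

The last column, canonical energy divided by the microcanonical energy mε, approaches 1 as N grows, which is the large-system agreement stated above.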

Grand canonical Ensemble

Allow a system to exchange both energy and particles with its environment ⇒ µ, V, and T fixed.

What do we mean by µ for microstates? Same issue as with T for the canonical ensemble.

Derivation similar to the canonical ensemble: the ensemble G contains all possible systems with the same macroscopic values of µ, V, T. Lay out $\mathcal{A}$ labeled subsystems (1, ..., $\mathcal{A}$) in a huge energy-particle bath, which at first is in particle and thermal equilibrium with its environment at T and µ. Then isolate it. View the simulated bath as a microcanonical ensemble, D. Every system in D has a distribution of subsystems over particle number N and state (N, j), counted as $a_{N,j}$:

$$\sum_N \sum_j a_{N,j} = \mathcal{A}$$

In how many ways can we take $\mathcal{A}$ systems from G and lay them out into the simulated heat + particle bath system, in a way that fulfills

$$\sum_N \sum_j a_{N,j} E_{N,j} = \mathcal{E}, \qquad \sum_N \sum_j a_{N,j} N = \mathcal{N}\,?$$

$$\Rightarrow\; W = \frac{\mathcal{A}!}{\prod_N \prod_j a_{N,j}!}, \qquad P_{N,j} = \frac{\sum_a W(a)\, a_{N,j}}{\mathcal{A}\sum_a W(a)}$$

(Exactly as for the canonical ensemble: the number of ways to reorder all labeled subsystems is $\mathcal{A}!$. Reorderings within a group represent identical states, so divide by $a_{N_0,0}!, a_{N_0,1}!, \ldots$)

In the limit of an infinitely large simulated heat bath, $\mathcal{A} \to \infty$, the only relevant term in the sum over distributions is the one that maximizes W(a), $\{a^*_{N,j}\}$, given by:

$$a^*_{N,j} = e^{-\alpha} e^{-\beta E_{N,j}} e^{-\gamma N}, \qquad P_{N,j}(V, \beta, \gamma) = \frac{a^*_{N,j}}{\mathcal{A}} = \frac{e^{-\beta E_{N,j}(V)} e^{-\gamma N}}{\sum_N \sum_j e^{-\beta E_{N,j}(V)} e^{-\gamma N}}$$

Grand canonical ensemble = the canonical ensemble, but with sums now also over all possible values of N. Identify β by suddenly making the walls impenetrable ⇒ canonical ensemble ⇒ $\beta = 1/(k_B T)$.

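Identification of entropy and γ:

$$f(\beta, \gamma, \{E_{N,j}(V)\}) = \ln \Xi = \ln \sum_N \sum_j e^{-\beta E_{N,j}(V)} e^{-\gamma N}.$$

$$df = \left(\frac{\partial f}{\partial \beta}\right)_{\gamma,\{E_{N,j}\}} d\beta + \left(\frac{\partial f}{\partial \gamma}\right)_{\beta,\{E_{N,j}\}} d\gamma + \sum_N \sum_j \left(\frac{\partial f}{\partial E_{N,j}}\right)_{\beta,\gamma,E_{(M,k)\neq(N,j)}} dE_{N,j}$$

$$df = -\bar{E}\, d\beta - \bar{N}\, d\gamma - \beta\underbrace{\sum_N \sum_j P_{N,j}\, dE_{N,j}}_{= -\bar{p}\, dV}$$

(For the last identification of $-\bar{p}\,dV$, see the derivation of the canonical ensemble.)

$$\Rightarrow\; df = -\bar{E}\, d\beta - \bar{N}\, d\gamma + \beta\bar{p}\, dV$$

Add $d(\beta\bar{E}) + d(\gamma\bar{N})$ to both sides and compare with thermodynamics:

$$d(f + \beta\bar{E} + \gamma\bar{N}) = \beta\, d\bar{E} + \beta\bar{p}\, dV + \gamma\, d\bar{N} \quad\Longleftrightarrow\quad T\, dS = dE + p\, dV - \mu\, dN$$

$$\Rightarrow\; 1)\;\gamma = -\frac{\mu}{k_B T}, \qquad 2)\; S = \frac{\bar{E}}{T} - \frac{\bar{N}\mu}{T} + k_B \ln \Xi + \text{constant} \quad (\text{third law} \Rightarrow \text{constant} = 0).$$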

Entropy in the grand canonical ensemble:

$$S = \frac{\bar{E}}{T} - \frac{\bar{N}\mu}{T} + k_B \ln \Xi.$$

The grand canonical partition function:

$$\Xi(V, T, \mu) = \sum_N \sum_j e^{-\beta E_{N,j}(V)} e^{\beta\mu N} = \sum_N Q(N, V, T)\, e^{\beta\mu N}.$$

Is it much more work to sum over N? In many cases it is actually harder to restrict N.

Which potential has the same natural variables as the grand canonical ensemble?

$$\Phi(T, \mu, V) = E - TS - \mu N \quad (= -pV \text{ due to Euler's theorem } E = TS - pV + \mu N)$$

Fundamental relation in natural variables:

$$\Phi(T, \mu, V) = -k_B T \ln \Xi(T, \mu, V).$$

Represents the most straightforward connection between macroscopic (thermodynamical) quantities and the ‘microstate world’ represented by Ξ. A good starting point for deriving other relations.
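A numerical sketch of the grand canonical relations, for a small open system whose microstates (E_i, N_i) are made up purely for illustration ($k_B = 1$). It checks the entropy formula against $-\sum_i P_i \ln P_i$, checks $\bar{N} = k_B T\, \partial \ln\Xi/\partial\mu$ with a finite difference, and checks $\Phi = -k_B T \ln \Xi = \bar{E} - TS - \mu\bar{N}$.

```python
import math

# Hypothetical microstates (E_i, N_i) of a small open system, k_B = 1 units.
states = [(0.0, 0), (-0.3, 1), (0.4, 1), (-0.5, 2), (0.2, 2), (-0.1, 3)]

def ln_Xi(mu, T):
    return math.log(sum(math.exp(-(E - mu * N) / T) for E, N in states))

def grand_canonical(mu, T):
    lnXi = ln_Xi(mu, T)
    P = [math.exp(-(E - mu * N) / T - lnXi) for E, N in states]
    E_mean = sum(p * E for p, (E, _) in zip(P, states))
    N_mean = sum(p * N for p, (_, N) in zip(P, states))
    S = E_mean / T - N_mean * mu / T + lnXi  # entropy formula above
    Phi = -T * lnXi                          # grand potential
    return P, E_mean, N_mean, S, Phi

mu, T = 0.1, 0.6
P, E_mean, N_mean, S, Phi = grand_canonical(mu, T)
S_gibbs = -sum(p * math.log(p) for p in P)
h = 1e-6
N_from_Xi = T * (ln_Xi(mu + h, T) - ln_Xi(mu - h, T)) / (2 * h)
print(abs(S - S_gibbs) < 1e-12, abs(N_mean - N_from_Xi) < 1e-7)  # True True
```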

The Generalized “Box” Ensemble

Are there other ensembles? Yes, for example, the isothermal-isobaric ensemble of systems with heat and volume exchange with the environment:

$$\Rightarrow\; \Delta(N, T, p) = \sum_E \sum_V \Omega(N, V, E)\, e^{-E/(k_B T)}\, e^{-pV/(k_B T)}$$

Still others: other types of work (e.g., magnetic), all possible combinations, etc.

Generic ensemble; let Λ be the characteristic potential/function:

$$P_i = \frac{e^{\theta_i}}{Z}, \qquad Z = \sum_i e^{\theta_i}, \qquad -\beta\Lambda = \ln Z$$

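Reverse-engineering the proof above gives the following scheme:

Recipe for expressions for any ensemble with constant T:

1. Start from the definition of the thermodynamic potential with the right natural variables, e.g., E(S, Y); $dE = T\,dS - x\,dY \Rightarrow \Lambda(T, x) = E - TS + xY$.

2. Multiply by −β to get $+S/k_B$ on the right side ⇒ $-\beta\Lambda(T, x) = S/k_B - \beta E - \beta xY$.

3. Everything on the right-hand side except $S/k_B$ goes into the exponent of the partition function: ⇒ $\theta_i = -\beta(E_i + xY_i)$.

4. The left-hand side gives the thermodynamic connection: $-\beta\Lambda = \ln Z$.

$$\Rightarrow\; P_i = \frac{e^{\theta_i}}{Z} = \frac{e^{-\beta(E_i + xY_i)}}{Z}; \qquad Z = \sum_i e^{\theta_i} = \sum_i e^{-\beta(E_i + xY_i)}; \qquad \Lambda = -k_B T \ln Z$$

1. Canonical ensemble / Free energy

$$A(T, V, N) = E - TS; \quad -\beta A = S/k_B - \beta E \;\Rightarrow\; \theta_i = -\beta E_i \;\Rightarrow\; P_i = \frac{e^{-\beta E_i}}{Z}, \quad Z = Q = \sum_i e^{-\beta E_i} \;\Rightarrow\; A = -k_B T \ln Q$$

2. Grand canonical ensemble / Grand potential (here Y = N and x = −µ)

$$\Phi(T, V, \mu) = E - TS - \mu N; \quad -\beta\Phi = S/k_B - \beta E + \beta\mu N \;\Rightarrow\; \theta_i = -\beta(E_i - \mu N_i) \;\Rightarrow\; P_i = \frac{e^{-\beta(E_i - \mu N_i)}}{Z}, \quad Z = \sum_i e^{-\beta(E_i - \mu N_i)} = \sum_N \sum_j e^{-\beta(E_{N,j} - \mu N)} = \Xi \;\Rightarrow\; \Phi = -k_B T \ln \Xi$$

3. Isothermal-isobaric ensemble / Gibbs free energy (here Y = V and x = p)

$$G(T, p) = E - TS + pV; \quad -\beta G = S/k_B - \beta E - \beta pV \;\Rightarrow\; \theta_i = -\beta(E_i + pV_i) \;\Rightarrow\; P_i = \frac{e^{-\beta(E_i + pV_i)}}{Z}, \quad Z = \sum_i e^{-\beta(E_i + pV_i)} = \sum_V \sum_j e^{-\beta(E_{V,j} + pV)} = \Delta \;\Rightarrow\; G = -k_B T \ln \Delta$$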
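The recipe can be written as one generic function. This sketch assumes the sign convention $dE = T\,dS - x\,dY$, so that $\Lambda = E - TS + xY$ and the exponent is $\theta_i = -\beta(E_i + xY_i)$. It is applied to the isothermal-isobaric case (x = p, Y = V) with made-up (E, V) microstates and $k_B = 1$, and checks that $-k_B T\ln Z$ equals $\bar{E} - TS + p\bar{V}$ with S computed as $-\sum_i P_i \ln P_i$.

```python
import math

def generic_ensemble(states, T, x, kB=1.0):
    """theta_i = -beta (E_i + x Y_i), P_i = e^{theta_i}/Z, Lambda = -kB T ln Z.
    `states` is a list of (E_i, Y_i) pairs."""
    beta = 1.0 / (kB * T)
    thetas = [-beta * (E + x * Y) for E, Y in states]
    tmax = max(thetas)  # log-sum-exp trick for numerical stability
    lnZ = tmax + math.log(sum(math.exp(t - tmax) for t in thetas))
    P = [math.exp(t - lnZ) for t in thetas]
    return P, -kB * T * lnZ

# Isothermal-isobaric example (x = p, Y = V) with hypothetical (E, V) microstates.
states = [(0.0, 1.0), (0.2, 1.5), (0.5, 2.0), (0.1, 1.2)]
T, p = 0.5, 0.8
P, G = generic_ensemble(states, T, p)
E_mean = sum(pi * E for pi, (E, _) in zip(P, states))
V_mean = sum(pi * V for pi, (_, V) in zip(P, states))
S = -sum(pi * math.log(pi) for pi in P)  # Gibbs entropy, k_B = 1
print(abs(G - (E_mean - T * S + p * V_mean)) < 1e-12)  # True
```

Passing x = −µ and Y_i = N_i instead gives the grand canonical case with the same function.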

If T is not constant? More complicated, since we cannot easily hold E constant when the system exchanges energy as work ⇒ e.g., the (H, p, N) ensemble.

Suggested Homework

• Exam problem 2001E2-3 (Note: (a) was already suggested for lecture 1, (b) for lecture 3)

• Exam problem 2003E2-3

• McQuarrie problem 2.11

• McQuarrie problem 2.14

MIT 3.20, Rickard Armiento, Lecture 6

Lecture 6: Fluctuations

• October 26, 2011, McQuarrie 3.3

What We Have Covered So Far

Averages: $M_{\text{measured}} = \{\text{1st postulate}\} = \bar{M} = \sum_i P_i M_i$

Microcanonical ensemble: $P_i = 1/\Omega$, $\Omega(N, V, E) = \sum_i 1$ = degeneracy for energy E

Easiest connection to thermodynamics: $S = k_B \ln \Omega(N, V, E)$

Canonical ensemble: $P_i = e^{-E_i/(k_B T)}/Q$, $Q(N, V, T) = \sum_E \Omega(E) e^{-E/(k_B T)} = \sum_i e^{-E_i(V)/(k_B T)}$

Easiest connection to thermodynamics: $A = -k_B T \ln Q(N, V, T)$

Useful formula for the energy: $\bar{E} = -\left(\partial \ln Q/\partial \beta\right)_{V,N}$

Grand canonical ensemble: $P_i = e^{-(E_i - \mu N_i)/(k_B T)}/\Xi$, $\Xi(V, T, \mu) = \sum_N Q(N, V, T) e^{\mu N/(k_B T)} = \sum_N \sum_j e^{-(E_{N,j}(V) - \mu N)/(k_B T)}$

Easiest connection to thermodynamics: $\Phi = -k_B T \ln \Xi(V, T, \mu)$

Fluctuations

Different ensembles = different quantities fixed, other quantities in balance with the environment and allowed to fluctuate. How much does a quantity in balance with the environment fluctuate? Do measurements need to take this into account?

$$M_{\text{measured}} \overset{?}{=} \bar{M} = \sum_i P_i M_i$$

For the energy:

$$\bar{E} = \sum_i P_i E_i = \frac{1}{Q}\sum_E E\,\underbrace{\Omega(E) e^{-\beta E}}_{\sim P(E)} = \frac{1}{Q}\sum_E E\, e^{\ln\Omega(E) - \beta E}$$

How do the probabilities $P(E) \sim \Omega(E) e^{-\beta E} = e^{\ln\Omega(E) - \beta E}$ typically look? First lecture, one independent particle:

$$\omega(E)\,dE \sim E^{1/2}\,dE \;\Rightarrow\; P(E) \sim e^{(1/2)\ln E - \beta E}$$

Many independent particles (ideal gas):

$$\Omega(E)\,dE \sim E^{3N/2 - 1}\,dE \;\Rightarrow\; P(E) \sim e^{(3N/2 - 1)\ln E - \beta E}$$

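[Figure: Ω(E), e^(−βE), and their product Ω(E)e^(−βE) ≈ C e^(−(E−Ē)²/(2σ²)), plotted against E.]

Looks similar to a Gaussian (but it is not exactly a Gaussian; view this as the first term in an expansion):

$$P(E) = \frac{1}{(2\pi\sigma^2)^{1/2}}\, e^{-\frac{(E - \bar{E})^2}{2\sigma^2}}$$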

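P(E) is approximately Gaussian-shaped. What is the variance $\sigma_E^2$?

Rules for averages: $\overline{A + B} = \bar{A} + \bar{B}$ and $\overline{A\bar{B}} = \bar{A}\bar{B}$, but $\overline{AB} \neq \bar{A}\bar{B}$. Hence

$$\sigma_E^2 = \overline{(E - \bar{E})^2} = \overline{E^2 - 2E\bar{E} + \bar{E}^2} = \overline{E^2} - 2\bar{E}^2 + \bar{E}^2 \;\Rightarrow$$

Variance of a fluctuating variable ($\sigma_X^2 = \overline{X^2} - \bar{X}^2$):

$$\sigma_E^2 = \overline{E^2} - \bar{E}^2 = \frac{1}{Q}\sum_i E_i^2 e^{-\beta E_i} - \left(\frac{1}{Q}\sum_i E_i e^{-\beta E_i}\right)^2;$$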

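Trick: the quadratic term can be rewritten as a derivative of $Q\bar{E}$:

$$\frac{1}{Q}\sum_i E_i^2 e^{-\beta E_i} = -\frac{1}{Q}\frac{\partial}{\partial \beta}\underbrace{\left(\sum_i E_i e^{-\beta E_i}\right)}_{Q\bar{E}} = -\frac{1}{Q}\frac{\partial(Q\bar{E})}{\partial \beta} = -\frac{\bar{E}}{Q}\frac{\partial Q}{\partial \beta} - \frac{Q}{Q}\frac{\partial \bar{E}}{\partial \beta} = \left\{\frac{\partial Q}{\partial \beta} = -\sum_i E_i e^{-\beta E_i} = -Q\bar{E}\right\} = \bar{E}^2 - \frac{\partial \bar{E}}{\partial \beta}$$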

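Hence,

$$\sigma_E^2 = \overline{E^2} - \bar{E}^2 = \bar{E}^2 - \frac{\partial \bar{E}}{\partial \beta} - \bar{E}^2 = -\frac{\partial \bar{E}}{\partial \beta}$$

Typical result for the variance of an extensive variable. Intensive mechanical variables are slightly more complicated (see McQuarrie problem 3.18). To connect to thermodynamics, let's change the β derivative into a temperature derivative:

$$\sigma_E^2 = -\frac{\partial \bar{E}}{\partial \beta} = -\frac{\partial \bar{E}}{\partial T}\cdot\frac{\partial T}{\partial \beta} = \left\{\frac{\partial T}{\partial \beta} = \frac{\partial}{\partial \beta}\frac{1}{k_B\beta} = -\frac{1}{k_B\beta^2} = -k_B T^2\right\} = k_B T^2\frac{\partial \bar{E}}{\partial T}$$

From thermodynamics: $C_V = \left(\partial E/\partial T\right)_{N,V}$ ⇒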

Remarkably simple connection between the variance of energy fluctuations and the heat capacity:

$$\sigma_E^2 = k_B T^2 C_V$$
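A direct numerical check of $\sigma_E^2 = k_B T^2 C_V$ for a hypothetical spectrum, in units with $k_B = 1$. The left side comes from the canonical averages $\overline{E^2} - \bar{E}^2$; the right side uses a centred finite difference for $C_V = \partial\bar{E}/\partial T$.

```python
import math

def energy_moments(levels, T):
    """Canonical averages <E> and <E^2> (k_B = 1 units)."""
    weights = [math.exp(-E / T) for E in levels]
    Q = sum(weights)
    E1 = sum(w * E for w, E in zip(weights, levels)) / Q
    E2 = sum(w * E * E for w, E in zip(weights, levels)) / Q
    return E1, E2

levels = [0.0, 1.0, 1.0, 3.0]  # hypothetical spectrum
T, h = 0.9, 1e-5
E1, E2 = energy_moments(levels, T)
variance = E2 - E1**2  # sigma_E^2 = <E^2> - <E>^2
Cv = (energy_moments(levels, T + h)[0] - energy_moments(levels, T - h)[0]) / (2 * h)
print(variance, T**2 * Cv)  # agree up to finite-difference error
```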

Relative root-mean-square fluctuations of the energy (in the canonical ensemble):

$$\frac{\sigma_E}{\bar{E}} = \frac{\sqrt{k_B T^2 C_V}}{\bar{E}} = \text{relative deviation from the average.}$$

Order of magnitude → use the ideal gas (both E and $C_V$ are extensive, i.e., scale with the system size):

$$\bar{E} \sim N k_B T, \quad C_V \sim N k_B \;\Rightarrow$$

Typical fluctuations for a large system:

$$\frac{\sigma_E}{\bar{E}} \sim \frac{1}{\sqrt{N}} \approx 0$$

In numbers: $N \sim 10^{23} \Rightarrow \sigma_E/\bar{E} \sim 10^{-11} = 0.000000001\%$.

• N small → fluctuations more relevant.

• N large (macroscopic) → the probability distribution for E is practically a delta function.
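Plugging numbers into $\sigma_E/\bar{E} = \sqrt{k_B T^2 C_V}/\bar{E}$ for a monatomic ideal gas ($\bar{E} = \tfrac{3}{2}Nk_BT$, $C_V = \tfrac{3}{2}Nk_B$) gives $\sqrt{2/(3N)}$, about $3\times 10^{-12}$ for $N = 10^{23}$, within an order of magnitude of the rough estimate above. The temperature of 300 K is an assumption; it cancels out of the ratio.

```python
import math

KB = 1.380649e-23  # Boltzmann constant, J/K

def relative_energy_fluctuation(E_mean, Cv, T):
    """sigma_E / E = sqrt(k_B T^2 C_V) / E."""
    return math.sqrt(KB * T**2 * Cv) / E_mean

T = 300.0  # K (assumed; it cancels out)
for N in (1e2, 1e10, 1e23):
    E_mean, Cv = 1.5 * N * KB * T, 1.5 * N * KB  # monatomic ideal gas
    print(f"N = {N:.0e}: sigma_E / E = {relative_energy_fluctuation(E_mean, Cv, T):.1e}")
```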

Fluctuations of N, grand canonical ensemble

T, V, µ constant; E, p, N fluctuate. Grand canonical ensemble:

$$P_{N,j} = \frac{1}{\Xi}e^{-\beta E_{N,j} - \gamma N}, \qquad \Xi = \sum_N \sum_j e^{-\beta E_{N,j} - \gamma N}$$

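The variance of the particle number:

$$\sigma_N^2 = \overline{N^2} - \bar{N}^2 = \frac{1}{\Xi}\sum_N \sum_j N^2 e^{-\beta E_{N,j} - \gamma N} - \left(\frac{1}{\Xi}\sum_N \sum_j N e^{-\beta E_{N,j} - \gamma N}\right)^2$$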

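Derivation almost exactly as for the energy. Trick: rewrite the quadratic term as a derivative of $\Xi\bar{N}$:

$$\frac{1}{\Xi}\sum_N \sum_j N^2 e^{-\beta E_{N,j} - \gamma N} = -\frac{1}{\Xi}\frac{\partial}{\partial \gamma}\underbrace{\left(\sum_N \sum_j N e^{-\beta E_{N,j} - \gamma N}\right)}_{\Xi\bar{N}} = -\frac{1}{\Xi}\frac{\partial(\Xi\bar{N})}{\partial \gamma} = -\frac{\bar{N}}{\Xi}\frac{\partial \Xi}{\partial \gamma} - \frac{\Xi}{\Xi}\frac{\partial \bar{N}}{\partial \gamma} = \left\{\frac{\partial \Xi}{\partial \gamma} = -\sum_N \sum_j N e^{-\beta E_{N,j}} e^{-\gamma N} = -\Xi\bar{N}\right\} = \bar{N}^2 - \frac{\partial \bar{N}}{\partial \gamma}$$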

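Hence,

$$\sigma_N^2 = \overline{N^2} - \bar{N}^2 = \bar{N}^2 - \frac{\partial \bar{N}}{\partial \gamma} - \bar{N}^2 = -\frac{\partial \bar{N}}{\partial \gamma} = -\frac{\partial \bar{N}}{\partial \mu}\cdot\frac{\partial \mu}{\partial \gamma} = \left\{\frac{\partial \mu}{\partial \gamma} = \frac{\partial}{\partial \gamma}(-k_B T\gamma) = -k_B T\right\} = k_B T\left(\frac{\partial \bar{N}}{\partial \mu}\right)_{V,T}$$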

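From thermodynamics (McQuarrie prob. 3.26; hint: use the Gibbs-Duhem equation, $S\,dT - V\,dp + N\,d\mu = 0$, to get $(\partial\mu/\partial p)_T = V/N$):

$$\left(\frac{\partial \bar{N}}{\partial \mu}\right)_{V,T} = -\frac{N^2}{V^2}\left(\frac{\partial V}{\partial p}\right)_{N,T} \;\Rightarrow\; \sigma_N^2 = \frac{\bar{N}^2 k_B T\kappa}{V}; \qquad \kappa = -\frac{1}{V}\left(\frac{\partial V}{\partial p}\right)_{N,T} = \text{compressibility}.$$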

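Size? Take the ideal gas:

$$\kappa = -\frac{1}{V}\frac{\partial}{\partial p}\left(\frac{N k_B T}{p}\right) = \frac{1}{V}\frac{N k_B T}{p^2} = \frac{1}{p} \;\Rightarrow\; \frac{\sigma_N}{\bar{N}} = \frac{\sqrt{N}}{N} = \frac{1}{\sqrt{N}}.$$

Relative fluctuations $\sim 1/\sqrt{N}$ are typical in statistical mechanics for extensive quantities.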
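For the ideal gas in the grand canonical ensemble, $Q(N) = q^N/N!$ gives $\Xi = \sum_N (q e^{\beta\mu})^N/N!$, so P(N) is a Poisson distribution with mean $\lambda = q e^{\beta\mu}$. This sketch sums that distribution numerically (in log space, truncated far out in the tail) to confirm $\sigma_N^2 = \bar{N}$, i.e. $\sigma_N/\bar{N} = 1/\sqrt{\bar{N}}$. The values of λ are arbitrary.

```python
import math

def particle_number_stats(lam, n_max=None):
    """Mean and variance of N for P(N) proportional to lam**N / N!."""
    if n_max is None:
        n_max = int(lam + 20 * math.sqrt(lam) + 50)  # far out in the tail
    logw = [n * math.log(lam) - math.lgamma(n + 1) for n in range(n_max + 1)]
    lmax = max(logw)
    w = [math.exp(l - lmax) for l in logw]  # rescaled weights, no overflow
    Z = sum(w)
    mean = sum(n * wi for n, wi in enumerate(w)) / Z
    var = sum(n * n * wi for n, wi in enumerate(w)) / Z - mean**2
    return mean, var

for lam in (5.0, 500.0, 50000.0):
    mean, var = particle_number_stats(lam)
    print(f"N_bar = {mean:.1f}, sigma_N^2/N_bar = {var / mean:.6f}, "
          f"sigma_N/N_bar = {math.sqrt(var) / mean:.2e}")
```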

Systems with significant fluctuations

1) Small systems: for some variable M, $\sigma_M/\bar{M} \sim 1/\sqrt{N}$.

2) Close to critical points: at the critical point the transition between liquid and gas becomes a second-order transition, and spontaneous fluctuations can become very large. “Critical opalescence”, discovered in 1869 → fluids get “milky” near the critical point due to large length-scale density fluctuations (on the order of the wavelength of visible light). At the critical point the Gibbs free energy becomes very flat, and there is no restoring force for fluctuations. Good read: Chapter 10 of Callen, Thermodynamics and an Introduction to Thermostatistics.


Equivalence of ensembles

In the thermodynamic limit (N → ∞), away from critical points, fluctuations are exceedingly small ⇒ results obtained in one ensemble are the same in any other!

Equivalence between microcanonical and canonical:

$$Q(N, V, T) = \sum_E \Omega(N, V, E)\, e^{-\beta E} = \left\{E = E^* = \bar{E};\ \text{other terms have zero probability}\right\} = \Omega(N, V, E^*)\, e^{-\beta E^*} = \Omega(N, V, \bar{E})\, e^{-\beta\bar{E}}.$$

Calculate the entropy using this form:

$$S = k_B \ln Q + k_B T\left(\frac{\partial \ln Q}{\partial T}\right)_{V,N} = k_B \ln Q + \frac{\bar{E}}{T} = k_B \ln \Omega - k_B\beta\bar{E} + \frac{\bar{E}}{T} = k_B \ln \Omega$$

Same as in the microcanonical ensemble! The sum $\sum_E$ is “optional” when the system is large. However, it is often easier to keep it.
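How "optional" is the sum over E? For the two-level example, this sketch compares the exact $\ln Q = N\ln(1 + e^{-\beta\epsilon})$ with the logarithm of the single largest term $\Omega(E)e^{-\beta E}$, using exact binomial degeneracies. The value βε = 0.5 is an arbitrary choice. The difference grows only like ln N, so its relative size shrinks as N grows.

```python
import math

def ln_Q(N, beta_eps):
    """Exact canonical result: ln Q = N ln(1 + e^{-beta eps})."""
    return N * math.log1p(math.exp(-beta_eps))

def ln_largest_term(N, beta_eps):
    """ln of the largest term Omega(E) e^{-beta E} in the sum over E = m eps."""
    return max(math.lgamma(N + 1) - math.lgamma(N - m + 1) - math.lgamma(m + 1)
               - beta_eps * m for m in range(N + 1))

beta_eps = 0.5  # assumed value of eps / (k_B T)
for N in (10, 1000, 100000):
    exact, largest = ln_Q(N, beta_eps), ln_largest_term(N, beta_eps)
    print(N, exact, largest, (exact - largest) / exact)
```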

Re-examine example from last lecture

N distinguishable systems that can each be in 2 energy states, 0 and ε:

$$\epsilon_j = n_j\epsilon, \quad n_j \in \{0, 1\} \;\Rightarrow\; E = \sum_{j=1}^{N} n_j\epsilon, \qquad \rho = \sum_j n_j.$$

In the canonical ensemble we found:

$$Q = \ldots = (1 + e^{-\beta\epsilon})^N, \qquad E(T) = -\left(\frac{\partial \ln Q}{\partial \beta}\right)_{V,N} = \ldots = \frac{N\epsilon}{1 + e^{\epsilon/(k_B T)}}$$

In the microcanonical ensemble we found (with m = E/ε):

$$S = \ldots = k_B\left(N \ln N - N - (N - m)\ln(N - m) + (N - m) - m \ln m + m\right),$$

$$T(E) = \left(\frac{\partial S}{\partial E}\right)^{-1} = \ldots = \frac{\epsilon}{k_B}\left[\ln\left(\frac{N\epsilon}{E} - 1\right)\right]^{-1} \;\Rightarrow\; E(T) = \frac{N\epsilon}{1 + e^{\epsilon/(k_B T)}}$$

But note: the derivation of S used Stirling’s approximation, i.e., the large system limit.

It is very typical that two ensembles give you the same curve, but one as E(T) and the other as T(E).

Another example: chemical potential in the canonical vs. the grand canonical ensemble

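1) µ(N) in the canonical ensemble, Q(N, V, T) ⇒

$$dA = -S\,dT - p\,dV + \mu\,dN \;\Rightarrow\; \mu(N) = \left(\frac{\partial A}{\partial N}\right)_{V,T} = \{A = -k_B T \ln Q\} = -k_B T\left(\frac{\partial \ln Q}{\partial N}\right)_{V,T}$$

2) N(µ) in the grand canonical ensemble, Ξ(µ, V, T) ⇒

$$d\Phi = -S\,dT - N\,d\mu - p\,dV \;\Rightarrow\; N(\mu) = -\left(\frac{\partial \Phi}{\partial \mu}\right)_{V,T} = \{\Phi = -k_B T \ln \Xi\} = k_B T\left(\frac{\partial \ln \Xi}{\partial \mu}\right)_{V,T}$$

These should give the same curve for large systems.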
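A small illustration of the two routes, for an ideal-gas-like system with $Q = q^N/N!$ and a hypothetical value of q ($k_B T = 1$). Since N is an integer, the canonical µ(N) is taken as the forward difference A(N+1) − A(N), an assumption standing in for the derivative. The grand canonical route uses $\Xi = \exp(q e^{\mu/k_BT})$, so $N(\mu) = q e^{\mu/k_BT}$. Feeding the canonical µ into the grand canonical N(µ) returns N + 1, which approaches N in relative terms.

```python
import math

def A_canonical(N, q, kT=1.0):
    """A = -kT ln(q^N / N!) for an ideal-gas-like Q = q^N / N!."""
    return -kT * (N * math.log(q) - math.lgamma(N + 1))

def mu_canonical(N, q, kT=1.0):
    """mu(N) = A(N + 1) - A(N): forward difference standing in for (dA/dN)_{V,T}."""
    return A_canonical(N + 1, q, kT) - A_canonical(N, q, kT)

def N_grand_canonical(mu, q, kT=1.0):
    """N(mu) = kT d ln(Xi)/d mu with Xi = exp(q e^{mu/kT}), i.e. N = q e^{mu/kT}."""
    return q * math.exp(mu / kT)

q = 1.0e6  # hypothetical single-particle partition function
for N in (10, 1000, 100000):
    mu = mu_canonical(N, q)
    print(N, mu, N_grand_canonical(mu, q) / N)  # ratio is (N + 1)/N, tending to 1
```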

Suggested homework

• Exam problem 2001E2-2(a-c) (also: after trying to solve it as in the lecture, make sure to check the solutions to see another systematic path towards the solution, a “3-step algorithm”.)

• Exam problem 2002E2-1(a-b) for deriving the variance of an even more exotic variable.

• McQuarrie problem 3.18.

• McQuarrie problem 3.24.

• McQuarrie problem 3.26.


MIT 3.20, Rickard Armiento, Lecture 7

Lecture 7: Non-interacting particles, ideal gas, indistinguishable particles, Boltzmann approximation, Ideal monoatomic gas, Fermions and Bosons

• October 28, 2011, McQuarrie 4.1, 5.1-5.4

Things We Know

Averages: $M_{\text{measured}} = \{\text{1st postulate}\} = \bar{M} = \sum_i P_i M_i$

Microcanonical ensemble: $P_i = 1/\Omega$, $\Omega(N, V, E) = \sum_i 1$ = degeneracy for energy E

Easiest connection to thermodynamics: $S = k_B \ln \Omega(N, V, E)$

Canonical ensemble: $P_i = e^{-E_i/(k_B T)}/Q$, $Q(N, V, T) = \sum_E \Omega(E) e^{-E/(k_B T)} = \sum_i e^{-E_i(V)/(k_B T)}$

Easiest connection to thermodynamics: $A = -k_B T \ln Q(N, V, T)$

Grand canonical ensemble: $P_i = e^{(-E_i + \mu N_i)/(k_B T)}/\Xi$, $\Xi(V, T, \mu) = \sum_N Q(N, V, T) e^{\mu N/(k_B T)} = \sum_N \sum_i e^{-E_{N,i}(V)/(k_B T) + \mu N/(k_B T)}$

Easiest connection to thermodynamics: $\Phi = -k_B T \ln \Xi(V, T, \mu)$

In large ‘thermodynamical’ systems → all ensembles give the same result. (So use the one where the sums in the partition function are the easiest to do.)

Distinguishable Non-Interacting / Weakly Interacting Particles

Many-particle wave function: $\Psi(\bar{q}_1, \bar{q}_2, \bar{q}_3, \ldots, \bar{q}_N)$.

Interacting particles → cannot easily divide Ψ into single-particle functions (such a division is an “orbital theory”).

For no interaction / weak interaction:

$$\hat{H} = \hat{H}^A + \hat{H}^B + \hat{H}^C + \ldots,$$

which has individual eigensolutions: $\hat{H}^A\psi^A = \epsilon^A\psi^A, \ldots$ Let $\psi = \psi^A\psi^B\psi^C\cdots$; then:

$$\hat{H}\psi = (\hat{H}^A + \hat{H}^B + \hat{H}^C + \ldots)\psi^A\psi^B\psi^C\cdots = \psi^B\psi^C\cdots\hat{H}^A\psi^A + \psi^A\psi^C\cdots\hat{H}^B\psi^B + \ldots = (\epsilon^A + \epsilon^B + \epsilon^C + \ldots)\psi^A\psi^B\psi^C\cdots = (\epsilon^A + \epsilon^B + \epsilon^C + \ldots)\psi.$$

Hence, $\psi = \psi^A\psi^B\psi^C\cdots$ is an eigenfunction of $\hat{H}$ with eigenvalue $E = \epsilon^A + \epsilon^B + \epsilon^C + \ldots$ ⇒

Enumerated solutions to the eigenvalue equation: $E_i = E_{j,k,l,\ldots} = \epsilon^A_j + \epsilon^B_k + \epsilon^C_l + \ldots$

We have reduced the many-particle problem to N single-particle ones.

Partition function of independent states:

$$Q = \sum_i e^{-\beta E_i} = \sum_{j,k,\ldots} e^{-\beta(\epsilon^A_j + \epsilon^B_k + \ldots)} = \sum_j e^{-\beta\epsilon^A_j}\sum_k e^{-\beta\epsilon^B_k}\cdots = q_A \cdot q_B \cdots$$

and, if A = B = C = ... ⇒ $Q = q^N$.

The partition function is a product of independent terms; ln Q is a sum of independent terms. (Check the example in lecture 5 again; using this makes getting the partition function very easy.)

For distinguishable weakly interacting subsystems (think: independent labeled particles):

$$E_{j,k,l,\ldots} = \epsilon^A_j + \epsilon^B_k + \epsilon^C_l + \ldots, \qquad Q(N, V, T) = q_A \cdot q_B \cdots = \{\text{if all subsystems are equal}\} = q(V, T)^N,$$

where $\{q_A\}$ are the partition functions of the individual subsystems.

Great simplification, but this only holds for distinguishable particles, e.g., particles localized in space, which are straightforward to label, e.g., by coordinate. Works for sites in lattices, segments in polymer chains, etc. Does not work for identical particles in a box.
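The factorization $Q = q_A \cdot q_B \cdots$ is easy to confirm by brute force: enumerate every combination (j, k, l, ...) of subsystem levels and sum the Boltzmann factors. The level energies below are hypothetical ($k_B = 1$ units). This only holds for labeled (distinguishable) subsystems, where every combination is a distinct state.

```python
import itertools
import math

def Q_bruteforce(subsystem_levels, beta):
    """Sum e^{-beta E} over every combination (j, k, l, ...) of subsystem levels."""
    return sum(math.exp(-beta * sum(combo))
               for combo in itertools.product(*subsystem_levels))

def q_single(levels, beta):
    """'Mini' partition function of one subsystem."""
    return sum(math.exp(-beta * e) for e in levels)

beta = 1.2
A_levels, B_levels, C_levels = [0.0, 0.4], [0.0, 0.3, 1.1], [0.2, 0.9]  # hypothetical
Q = Q_bruteforce([A_levels, B_levels, C_levels], beta)
product = q_single(A_levels, beta) * q_single(B_levels, beta) * q_single(C_levels, beta)
print(math.isclose(Q, product))  # True

# identical subsystems: Q = q^N
print(math.isclose(Q_bruteforce([A_levels] * 4, beta), q_single(A_levels, beta) ** 4))  # True
```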

Hydrogen on a surface: hypothetical model system (not very realistic).

Hydrogen adsorbed on a surface. We assume the hydrogen atoms have no interaction with each other or with the surface and thus maintain their atomic energy levels.

Localized on the surface = can be labeled → distinguishable!

Each hydrogen atom can individually be excited into different electronic states: 1s, 2s, 2p_x, 2p_y, 2p_z, ... The states i of the system correspond to different combinations of electronic states/excitations:

State 1: $E_1 = \epsilon^a_{1s} + \epsilon^b_{2s} + \epsilon^c_{1s} + \epsilon^d_{2p_x} + \ldots$

State 2: $E_2 = \epsilon^a_{2s} + \epsilon^b_{1s} + \epsilon^c_{1s} + \epsilon^d_{1s} + \ldots$

$$Q = q^N; \qquad q = \sum_l e^{-\beta\epsilon_l} = \text{‘mini’ partition function for each particle.}$$

Indistinguishable Particles, Fermions and Bosons

Non-interacting particles that are not localized / cannot be labeled = indistinguishable.

Example:

             A     B     C     D
state 1:    2s    1s    3s    4s
state 2:    1s    2s    3s    4s

Are state 1 and state 2 really different states? If ‘A’, ‘B’, ... refer to specific sites on a surface: yes. If they are hydrogen atoms in a gas: no.

Can we just allow for all possible reorderings with a factor 1/N!? Indistinguishability in quantum mechanics makes things more tricky.

Quantum mechanics must give the same physics when particles are relabeled:

$$|\psi(\bar{q}_1, \bar{q}_2, \bar{q}_3, \ldots, \bar{q}_N)|^2 = |\psi(\bar{q}_2, \bar{q}_1, \bar{q}_3, \ldots, \bar{q}_N)|^2 \;\Rightarrow\; \psi(\bar{q}_1, \bar{q}_2, \bar{q}_3, \ldots, \bar{q}_N) = \pm\psi(\bar{q}_2, \bar{q}_1, \bar{q}_3, \ldots, \bar{q}_N).$$

The wave function is allowed to change sign when we relabel indistinguishable particles! It turns out that + and − describe two different types of particles:

+ = Bosons = integer-spin particles,

− = Fermions = half-integer-spin particles.

(Actually comes out from combining quantum mechanics and special relativity ⇒ Dirac equation ⇒ particles have spin.)

Two fermions cannot be in the same state: ψ(q̄, q̄) = −ψ(q̄, q̄) ⇒ ψ(q̄, q̄) = 0. No problem for bosons. (This is what we see in nature!)

This is a type of “dependence” (= correlation) between the particles, in that it regulates which states exist, i.e., which i we should count in partition function sums $\sum_i$.

Partition Function for Weakly Interacting Indistinguishable Particles

Independent particles → the proof that led up to this relation is still valid:

$$Q(N, V, T) = \sum_{l,m,n,\ldots} e^{-(\epsilon^A_l + \epsilon^B_m + \epsilon^C_n + \ldots)/(k_B T)}$$

BUT only as long as the sum over l, m, n, ... is restricted to valid states, AND counts identical states only once ⇒ we cannot easily divide it up into independent factors.

Example: two identical indistinguishable particles, each with two energy levels. Count the number of possible states (= terms in the $\sum_i$ sum):

If we only take reordering into account:

$$\frac{1}{N!} \times \text{Sum} = \frac{1}{2!}\sum_{l=0,1}\sum_{m=0,1} 1 = 2 \text{ states}$$

Fermions: $\sum_{(l,m) = [(0,1)]}$ ← 1 possible state.

Bosons: $\sum_{(l,m) = [(0,0),(1,0),(1,1)]}$ ← 3 possible states.

Hence, in general it is not enough to just consider all possible ways of reordering the states, since this does not correctly handle the tricky terms where particles are in identical states.

Boltzmann Distribution

Realization: if there is a huge number of relevant different states → the terms with “collisions” of particles in the same state are relatively few, and thus including or missing those terms will not affect the sum significantly. This only works if the number of particles ≪ the number of available states. Usually true if the temperature is normal → high, or the density is relatively low.

In this case we can just divide by the number of ways of reordering the particles, and thus:

$$Q(N, V, T) \approx \frac{\sum_l \sum_m \sum_n \cdots e^{-\beta(\epsilon_l + \epsilon_m + \epsilon_n + \ldots)}}{N!} = \frac{q^N}{N!}.$$

The Boltzmann Distribution / Boltzmann statistics

$$Q(N, V, T) = \frac{q^N}{N!}$$

where q is the partition function of one particle. Valid for indistinguishable particles when the number of particles ≪ the number of available single-particle states.
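The state counting in the two-particle example, and the reason dividing by N! works when levels are plentiful, can be checked by enumeration. This sketch counts states built from M equal-energy single-particle levels (so each state has weight 1): labeled particles, bosons (any occupancy), fermions (at most one per level), and the "divide by N!" estimate. Standard combinatorial formulas reproduce the enumerated counts, and for M ≫ N the fermion and boson counts both approach $M^N/N!$.

```python
from itertools import combinations, combinations_with_replacement, product
from math import comb, factorial

def count_states_enumerated(N, M):
    """Count N-particle states built from M single-particle levels by enumeration."""
    levels = range(M)
    distinguishable = sum(1 for _ in product(levels, repeat=N))         # labeled particles
    bosons = sum(1 for _ in combinations_with_replacement(levels, N))  # any occupancy
    fermions = sum(1 for _ in combinations(levels, N))                 # at most one per level
    return distinguishable, bosons, fermions, distinguishable / factorial(N)

def count_states_closed_form(N, M):
    """Same four numbers from standard counting formulas."""
    return M**N, comb(M + N - 1, N), comb(M, N), M**N / factorial(N)

print(count_states_enumerated(2, 2))  # (4, 3, 1, 2.0)

N = 3
for M in (5, 50, 5000):
    _, bosons, fermions, boltzmann = count_states_closed_form(N, M)
    print(M, fermions / boltzmann, bosons / boltzmann)  # both ratios tend to 1 for M >> N
```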

Monoatomic Ideal Gas

N independent identical indistinguishable atoms in a box.

Recall from the first lecture:

$$\phi(\epsilon) = \text{all available single-particle states with energy} \le \epsilon = \frac{\pi}{6}\left(\frac{8ma^2\epsilon}{h^2}\right)^{3/2}$$

With $\epsilon \sim k_B T$, T ∼ 300 K, m ∼ 10⁻²² g, a ∼ 10 cm ⇒ $\phi(\epsilon) \sim 10^{30} \gg 10^{23} = N$.

⇒ single-particle states ≫ number of particles. We can use the Boltzmann distribution!
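The estimate $\phi(\epsilon) \sim 10^{30}$ can be reproduced with the order-of-magnitude inputs quoted above (ε = k_B T at 300 K, m = 10⁻²² g = 10⁻²⁵ kg, a = 10 cm), in SI units. The result comes out around 3 × 10²⁹, i.e. of order 10²⁹ to 10³⁰, still vastly more than 10²³ particles.

```python
import math

H = 6.62607015e-34  # Planck constant, J s
KB = 1.380649e-23   # Boltzmann constant, J/K

def states_below(eps, m, a):
    """phi(eps) = (pi/6) (8 m a^2 eps / h^2)^(3/2): single-particle states with
    energy <= eps for a particle of mass m (kg) in a cubic box of side a (m)."""
    return math.pi / 6 * (8 * m * a**2 * eps / H**2) ** 1.5

phi = states_below(eps=KB * 300, m=1e-25, a=0.1)  # m ~ 1e-22 g, a ~ 10 cm, T ~ 300 K
print(f"{phi:.1e} single-particle states vs N ~ 1e23")
```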

The degrees of freedom decouple (independent sum of Hamiltonians, same proof as for independent particles):

$$q_{\text{atom}} = q_{\text{trans}} \cdot q_{\text{elec}} \cdot q_{\text{nucl}}.$$

Nuclear energy levels are far apart (∼1 MeV); atoms stay in the nuclear ground state at normal temperatures, so we can set $q_{\text{nucl}} = 1$.

Commonly, electronic states have ∆ε ∼ 1 eV, and thus $e^{-\beta\Delta\epsilon}$ is small (∼10⁻¹⁷), so we can set $q_{\text{elec}} = 1$. This is not true for all atoms (e.g., halogen atoms). McQuarrie discusses some cases.

Partition function for particle in a box

$$q_{\text{atom}} \approx q_{\text{trans}} = \sum_l e^{-\beta\epsilon_l}; \qquad \epsilon_l = \epsilon_{n_x,n_y,n_z} = \underbrace{\frac{h^2}{8ma^2}}_{= 1/M}(n_x^2 + n_y^2 + n_z^2).$$

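$$q_{\text{trans}} = \sum_{n_x=1}^{\infty}\sum_{n_y=1}^{\infty}\sum_{n_z=1}^{\infty} e^{-\beta(n_x^2 + n_y^2 + n_z^2)/M} = \{x, y, z \text{ independent}\} = \left(\sum_{n=1}^{\infty} e^{-\beta n^2/M}\right)^3$$

β/M ∼ 10⁻²¹ ⇒ MANY states, terms change slowly with n. Take the continuous limit:

$$q_{\text{trans}} = \left(\int_0^{\infty} e^{-\beta n^2/M}\, dn\right)^3 = \left\{\int_0^{\infty} e^{-cx^2}\, dx = \frac{1}{2}\sqrt{\frac{\pi}{c}}\right\} = \left(\frac{1}{2}\sqrt{\frac{\pi M}{\beta}}\right)^3 = \left(\frac{2\pi m k_B T}{h^2}\right)^{3/2} V$$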

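Alternative way, using the density of states ω(ε) in the continuous limit from lecture 1:

$$q_{\text{trans}} = \sum_\epsilon \omega(\epsilon)e^{-\beta\epsilon} = \int_0^{\infty}\omega(\epsilon)e^{-\beta\epsilon}\, d\epsilon = \{\omega(\epsilon) \text{ from 1st lecture}\} = \int_0^{\infty}\frac{\pi}{4}M^{3/2}\epsilon^{1/2}e^{-\beta\epsilon}\, d\epsilon = \ldots = \text{same thing}$$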
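How good is the continuous limit? This sketch compares the one-dimensional sum $\sum_{n\ge1} e^{-cn^2}$ (with c standing for β/M) against the integral $\tfrac{1}{2}\sqrt{\pi/c}$. For small c the sum falls short of the integral by almost exactly 1/2 (the missing half of the n = 0 term), which is negligible next to $\sqrt{\pi/c}$ when c ∼ 10⁻²¹. The values of c used are illustrative, chosen so that the sum is cheap to evaluate.

```python
import math

def q1d_sum(c, tol=1e-17):
    """sum_{n>=1} exp(-c n^2), summed until the terms are negligible."""
    total, n = 0.0, 1
    while True:
        term = math.exp(-c * n * n)
        total += term
        if term < tol * total:
            return total
        n += 1

def q1d_integral(c):
    """Continuum limit: int_0^inf exp(-c n^2) dn = (1/2) sqrt(pi / c)."""
    return 0.5 * math.sqrt(math.pi / c)

for c in (1.0, 1e-2, 1e-6):
    s, i = q1d_sum(c), q1d_integral(c)
    print(c, s, i, (i - s) / i)  # relative error shrinks roughly like sqrt(c / pi)
```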

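Canonical partition function for the monoatomic ideal gas (with no electronic excitations):

$$q_{\text{atom}} \approx q_{\text{trans}} = \left(\frac{2\pi m k_B T}{h^2}\right)^{3/2} V = \frac{V}{\Lambda^3}, \qquad \Lambda = \left(\frac{h^2}{2\pi m k_B T}\right)^{1/2}$$

$$Q = \frac{q_{\text{trans}}^N}{N!} = \frac{1}{N!}\left(\frac{2\pi m k_B T}{h^2}\right)^{3N/2} V^N$$

The length Λ is a “thermal de Broglie wavelength”. The criterion for Boltzmann statistics becomes that $N\Lambda^3/V$ is small, i.e., Λ is small compared with the typical distance between particles, $(V/N)^{1/3}$. (See McQuarrie, end of section 5-1.)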
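A concrete number for the thermal de Broglie wavelength and the Boltzmann criterion $N\Lambda^3/V \ll 1$. The choice of argon at 300 K and 1 atm is an assumption for illustration only; the number density comes from the ideal-gas law $N/V = p/(k_B T)$.

```python
import math

H = 6.62607015e-34       # Planck constant, J s
KB = 1.380649e-23        # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg

def thermal_wavelength(m, T):
    """Lambda = (h^2 / (2 pi m kB T))^(1/2), in metres."""
    return math.sqrt(H**2 / (2 * math.pi * m * KB * T))

m_argon = 39.948 * AMU  # assumption: argon atoms
T, p = 300.0, 101325.0  # assumption: room temperature, 1 atm
Lam = thermal_wavelength(m_argon, T)
n = p / (KB * T)        # number density N/V of an ideal gas
print(f"Lambda = {Lam:.2e} m, N Lambda^3 / V = {n * Lam**3:.1e}")
```

Λ comes out at roughly 0.16 Å and $N\Lambda^3/V$ around 10⁻⁷, so Boltzmann statistics is an excellent approximation for such a gas.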

Thermodynamic connection:

$$A = -k_B T \ln Q \;\Rightarrow\; A = -k_B T \ln\frac{q^N}{N!} = -N k_B T \ln q + k_B T \ln N! = \{\text{Stirling: } \ln n! \approx n \ln n - n\} = -N k_B T \ln q + N k_B T \ln N - N k_B T \;\Rightarrow$$

$$A = -N k_B T \ln\left[\left(\frac{2\pi m k_B T}{h^2}\right)^{3/2}\frac{V}{N}\right] - N k_B T.$$

Step 1, the pressure:

$$dA = -S\,dT - p\,dV + \mu\,dN \;\Rightarrow\; p = -\left(\frac{\partial A}{\partial V}\right)_{N,T} = N k_B T\left(\frac{\partial \ln V}{\partial V}\right)_{N,T} \;\Rightarrow$$

Pressure for the ideal gas (equation of state 1):

$$p = \frac{N k_B T}{V}$$

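Step 2, the energy:

$$A = E - TS, \quad dA = -S\,dT - p\,dV + \mu\,dN \;\Rightarrow\; S = -\left(\frac{\partial A}{\partial T}\right)_{V,N} = \{A = -k_B T \ln Q\} = k_B T\left(\frac{\partial \ln Q}{\partial T}\right)_{V,N} + k_B \ln Q \;\Rightarrow$$

$$E = A + TS = k_B T^2\left(\frac{\partial \ln Q}{\partial T}\right)_{N,V} = k_B T^2\left(\frac{\partial \ln T^{3N/2}}{\partial T}\right)_{N,V} = \frac{3}{2}N k_B T$$

Energy in the ideal gas (2nd equation of state):

$$E = \frac{3}{2}N k_B T;$$

the other equation of state valid for a monoatomic ideal gas!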

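Step 3, the chemical potential:

$$\mu = \left(\frac{\partial A}{\partial N}\right)_{T,V} = -k_B T \ln\left[\left(\frac{2\pi m k_B T}{h^2}\right)^{3/2} V\right] + k_B T \ln N + \frac{N k_B T}{N} - k_B T = -k_B T \ln\left[\left(\frac{2\pi m k_B T}{h^2}\right)^{3/2}\frac{V}{N}\right] = \left\{\frac{V}{N} = \frac{k_B T}{p}\right\} = \underbrace{-k_B T \ln\left[\left(\frac{2\pi m k_B T}{h^2}\right)^{3/2} k_B T\right]}_{\mu_0} + k_B T \ln p = \mu_0 + k_B T \ln p.$$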
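Steps 1 to 3 can be checked numerically by differentiating the Stirling-form A(T, V, N) with central finite differences. This sketch uses reduced units $k_B = h = m = 1$ and arbitrary values of T, V, N (all assumptions); the printed ratios compare the numerical p, E and µ with $Nk_BT/V$, $\tfrac{3}{2}Nk_BT$ and $\mu_0 + k_BT\ln p$.

```python
import math

def helmholtz_ideal_gas(T, V, N, m=1.0, h=1.0, kB=1.0):
    """A = -N kB T ln[(2 pi m kB T / h^2)^(3/2) V / N] - N kB T (Stirling form)."""
    c = (2 * math.pi * m * kB * T / h**2) ** 1.5
    return -N * kB * T * math.log(c * V / N) - N * kB * T

def derivative(f, x, rel=1e-5):
    """Central finite difference df/dx with a step relative to x."""
    dx = rel * x
    return (f(x + dx) - f(x - dx)) / (2 * dx)

T, V, N = 2.0, 5.0e4, 1.0e3  # reduced units, kB = h = m = 1
A = helmholtz_ideal_gas(T, V, N)
p = -derivative(lambda v: helmholtz_ideal_gas(T, v, N), V)   # p = -(dA/dV)_{N,T}
S = -derivative(lambda t: helmholtz_ideal_gas(t, V, N), T)   # S = -(dA/dT)_{V,N}
mu = derivative(lambda n: helmholtz_ideal_gas(T, V, n), N)   # mu = (dA/dN)_{T,V}
E = A + T * S

mu0 = -T * math.log((2 * math.pi * T) ** 1.5 * T)  # mu0 in reduced units
print(p / (N * T / V), E / (1.5 * N * T), mu / (mu0 + T * math.log(p)))  # each close to 1
```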

Suggested homework

• McQuarrie problem 4-12

• Exam problem 2003E2-5

• Exam problem 2001E2-4(a)

• Go through the derivation for an ideal gas in the lecture, and check what changes if we had set $q_{\text{elec}} = \omega_{e1} + \omega_{e2}e^{-\beta\Delta\epsilon_{12}}$. (Check McQuarrie 5-3 if you need help.)

MIT 3.20, Rickard Armiento, Lecture 8

Lecture 8: Ideal diatomic gas. Rotational and vibrational DOF

• October 31, 2011, McQuarrie 6

Useful Formulas

Averages: $M_{\text{measured}} = \{\text{1st postulate}\} = \bar{M} = \sum_i P_i M_i$

Microcanonical ensemble: $P_i = 1/\Omega$, $\Omega(N, V, E) = \sum_i 1$ = degeneracy for energy E

Easiest connection to thermodynamics: $S = k_B \ln \Omega(N, V, E)$

Canonical ensemble: $P_i = e^{-E_i/(k_B T)}/Q$, $Q(N, V, T) = \sum_E \Omega(E) e^{-E/(k_B T)} = \sum_i e^{-E_i(V)/(k_B T)}$

Easiest connection to thermodynamics: $A = -k_B T \ln Q(N, V, T)$

Grand canonical ensemble: $P_i = e^{(-E_i + \mu N_i)/(k_B T)}/\Xi$, $\Xi(V, T, \mu) = \sum_N Q(N, V, T) e^{\mu N/(k_B T)} = \sum_N \sum_i e^{-E_{N,i}(V)/(k_B T) + \mu N/(k_B T)}$

Easiest connection to thermodynamics: $\Phi = -k_B T \ln \Xi(V, T, \mu)$

In large ‘thermodynamical’ systems → all ensembles give the same result.

Independent distinguishable particles: $Q = q_A \cdot q_B \cdot q_C \cdots = \{\text{if identical}\} = q^N$

Independent indistinguishable particles in the high-temperature, low-density limit: $Q = q^N/N!$

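Diatomic Degrees of Freedom

[Figure: Diatomic molecule, with its 1) translational, 2) rotational, and 3) vibrational degrees of freedom.]

Assume the degrees of freedom are independent. This requires the Born-Oppenheimer approximation: electrons reach equilibrium on a much shorter timescale than the ionic motion. Also assume the amplitude and timescale of the vibrations are so small that they do not interfere with the rotations (= “rigid-rotor approximation”).

$$\hat{H}_{\text{single particle}} = \hat{H}_{\text{trans}} + \underbrace{\hat{H}_{\text{rot}}}_{\substack{\text{rigid-rotor approx.}\\ = \text{fixed } r}} + \hat{H}_{\text{vib}} + \underbrace{\hat{H}_{\text{elec}} + \hat{H}_{\text{nucl}}}_{\substack{\text{for now, assume}\\ \text{big energy spacing}}}$$

$$Q = \frac{q_{\text{single particle}}^N}{N!} \quad \leftarrow \text{assume normal conditions, Boltzmann statistics OK.}$$

$$q_{\text{single particle}} = q_{\text{trans}} \cdot q_{\text{rot}} \cdot q_{\text{vib}} \cdot \underbrace{q_{\text{elec}} \cdot q_{\text{nucl}}}_{\text{we can set} = 1}$$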

The Two-Body Problem

Two bodies in a potential that only depends on their distance. Energy in classical physics:

$$E = \frac{1}{2}m_1\dot{\mathbf{r}}_1^2 + \frac{1}{2}m_2\dot{\mathbf{r}}_2^2 + v(|\mathbf{r}_2 - \mathbf{r}_1|)$$

Reorganize to get:

$$E = \frac{1}{2}\underbrace{(m_1 + m_2)}_{m}\dot{\mathbf{R}}^2 + \frac{1}{2}\underbrace{\frac{m_1 m_2}{m_1 + m_2}}_{\mu}\dot{\mathbf{r}}^2 + v(|\mathbf{r}|), \qquad \mathbf{R} = \frac{m_1\mathbf{r}_1 + m_2\mathbf{r}_2}{m_1 + m_2}, \quad \mathbf{r} = \mathbf{r}_2 - \mathbf{r}_1.$$

Hence, the problem is equivalent to free translational motion of the center of mass, R, using the sum of the masses, m = m₁ + m₂, plus the independent motion, r, of one ‘virtual’ particle with mass µ in the potential v. In our quantum mechanical formulation:

$$\hat{H} = \hat{H}_{\text{trans}}^{(m_1 + m_2)} + \hat{H}_{\text{rot}}^{(\mu)} + \hat{H}_{\text{vib}}^{(\mu)}$$
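The reorganization of the kinetic energy into a centre-of-mass part and a relative part is an exact identity, which this sketch checks for random velocities. The masses are hypothetical and in arbitrary units; the potential term is unchanged by the transformation and is left out.

```python
import random

def squared_norm(v):
    return sum(c * c for c in v)

def kinetic_energy_split(m1, m2, v1, v2):
    """Return (lab-frame KE, centre-of-mass KE + relative KE) for two point masses."""
    M = m1 + m2
    mu = m1 * m2 / M                                        # reduced mass
    V_cm = [(m1 * a + m2 * b) / M for a, b in zip(v1, v2)]  # dR/dt
    v_rel = [b - a for a, b in zip(v1, v2)]                 # dr/dt with r = r2 - r1
    lab = 0.5 * m1 * squared_norm(v1) + 0.5 * m2 * squared_norm(v2)
    split = 0.5 * M * squared_norm(V_cm) + 0.5 * mu * squared_norm(v_rel)
    return lab, split

random.seed(0)
m1, m2 = 1.0, 16.0  # hypothetical masses, arbitrary units
v1 = [random.uniform(-1, 1) for _ in range(3)]
v2 = [random.uniform(-1, 1) for _ in range(3)]
lab, split = kinetic_energy_split(m1, m2, v1, v2)
print(abs(lab - split) < 1e-12)  # True
```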

Translations

Easy! Same as we derived for the monoatomic ideal gas, but with m = m₁ + m₂:

$$q_{\text{trans}} = \left(\frac{2\pi(m_1 + m_2)k_B T}{h^2}\right)^{3/2} V = \frac{V}{\Lambda^3} \;\Rightarrow\; E_{\text{trans}} = \frac{3}{2}N k_B T$$

Vibrations

Harmonic approximation (small vibrations) = harmonic oscillator.

First term in Taylor expansion of the potential:

∂v 1 ∂ 2 v +

v(z) =

v(0)

(r−re )+

(r−re )2 +...

|{z}

∂r r=re