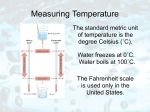

Lecture 1 Laws of Thermodynamics

Classical thermodynamics is the branch of physics concerned with heat and related thermal phenomena. It was well established by the middle of the nineteenth century. Statistical mechanics is a theoretical branch of physics that provides an interpretation of thermodynamics in terms of a combination of microscopic physics and statistical concepts; it was first developed in the middle of the nineteenth century, with the major advances coming early in the twentieth century.

1.1 Classical thermodynamics

Thermodynamics is a phenomenological branch of physics: it is a summary of the properties that real physical systems exhibit. The early history of the field revolved around the nature of heat, and the relation between heat and temperature. Following the experimental work of Joule it became accepted that heat is a form of energy, and this is the starting point for our discussion in the form of the first law of thermodynamics.

Another ingredient in early ideas on thermodynamics concerned perpetual motion machines. Nobody was ever able to make a perpetual motion machine operate on its own, but why? It was realized that one class of suggested perpetual motion machines violated the first law of thermodynamics, that is, they involved creating energy out of nothing. Hence, this class could be dismissed as impossible by postulating that heat is a form of energy and that energy is conserved. However, there was a second class of suggested perpetual motion machines which did not work but which were consistent with energy conservation. Another law of thermodynamics was introduced to summarize the fact that this class of perpetual motion machines also does not work. This is the second law of thermodynamics, which expresses the observational fact that certain processes that are allowed by the first law of thermodynamics never happen. The second law can be expressed in terms of a new thermodynamic variable called entropy.
One statement of the second law is that in any physical process the total entropy never decreases. For example, if we drop a red hot cannon ball into a bucket of cold water, we end up with a bucket of warm water and a warm cannon ball. However, if we start from a bucket of warm water and a warm cannon ball we never observe the cannon ball jumping out red hot leaving a bucket of cold water. Such processes always go in one direction: heat flows from hotter to colder bodies. The explanation based on the second law is that the entropy of the bucket of warm water and the warm cannon ball is higher than the entropy of the bucket of cold water and the red hot cannon ball, and the second law requires that no process lead to a net decrease in entropy. There are certain processes that are reversible, and for these the entropy does not change. That is, reversible processes occur at constant entropy.

The concept of entropy is a subtle one in classical thermodynamics. It has a relatively simple interpretation in statistical mechanics: entropy is a measure of randomness, and the Universe evolves from a more ordered to a less ordered state.

There are certain systems that superficially appear to violate the second law of thermodynamics. One class involves self-gravitating systems: interstellar gas clouds collapse to form stars, and the galaxies must have formed from a much more uniform distribution of matter in the early epoch of the Universe. In these systems, highly structured systems develop from essentially uniformly distributed matter. Biological systems also evolve from simpler to more complex structures. However, a more careful examination shows that in both cases the total entropy increases as these systems evolve.

Physical quantities

The laws of thermodynamics force us to define certain physical variables and to quantify them. The basic thermodynamic variables are heat, temperature and entropy. There are other variables that we can use to describe a system.
Suppose for example that the system is the air in this room. We can do measurements to determine the volume of the system, the pressure that the air exerts, the density of the air, the chemical composition of the air, and so on. We are familiar with the concept of the temperature of the air, which we measure with a thermometer. However, the heat in the air and the entropy of the air are not familiar. Nevertheless, all these are examples of physical quantities that describe our system. A formal definition of a physical quantity is:

A physical quantity is defined by the sequence of operations used to determine its value.

This sequence of operations will often include calculations as well as measurements. A physical law can now be defined:

A physical law is a relation involving physical quantities that is found to be satisfied by physical systems under specified conditions.

Some physical laws are very simple. For example, measuring a mass using a scale defines a physical quantity, and measuring mass using a spring balance defines another physical quantity. A simple physical law is that these two types of mass are the same thing: this law implies that these are two different but equivalent ways of measuring the same physical quantity (mass). A related physical law, with more profound consequences, is that inertial mass and gravitational mass are equal.

In thermodynamics we are concerned with thermodynamic systems, with the air in this room being one illustrative example of a thermodynamic system. A somewhat better example is the air inside a balloon. This system is characterized by its volume, pressure, temperature and density. Another feature concerns the transport of heat across the surface of the balloon. If no heat is allowed to cross the surface (the balloon is made of thermally insulating material that allows no heat conduction), we say that the system is (thermally) isolated and the changes are then said to be adiabatic.

1.2 The laws of thermodynamics

After the first and second laws of thermodynamics had been recognized and thermodynamics was becoming a more formal subject, it was realized that there was no consistent definition of temperature. A zeroth law of thermodynamics was added to rectify this. Later still, a third law of thermodynamics was added to summarize the observed properties of matter at extremely low temperatures.

Zeroth Law: If two systems are in thermal equilibrium with a third system, then they are in equilibrium with each other.

The zeroth law allows one to order systems according to the direction heat flows when two systems are put into thermal contact. One system is said to be hotter than another if heat flows from the former to the latter when they are put into thermal contact. This allows one to introduce a parameter, called an empirical temperature, which is the same for all bodies that are in thermal equilibrium with each other. This is done by constructing one system, called a thermometer, that allows one to ascribe a number to the temperature, e.g., the height of a colored liquid in a glass tube.

First Law: Heat is a form of energy, and energy is conserved.

The idea behind the first law is familiar to any thinking person in the modern world. We pay for energy, in the form of electricity, gas, oil, etc., which we use for heating, and we know that the amount of heat that we get is proportional to the amount of energy that we pay for. Heat is said to be generated by doing work on the system. The simplest example is the work done by a mechanical force: the work is then the force times the displacement. In simple thermodynamic systems this appears with the force being identified as the pressure (= force per unit area) and the displacement being identified as the change in volume (= displacement of a surface area times that area).
Another example of work involves electricity: the force is then identified as the EMF (= electrical potential difference) and the displacement as the charge transferred (= electrical current × time). Other force-displacement pairs that will appear in this course include surface tension and surface area, and the chemical potential and chemical concentration.

Second Law: There are several different ways of expressing the second law of thermodynamics, and the following are three examples.

Clausius' principle: No cyclic process exists which has as its sole effect the transference of heat from a colder body to a hotter body.

Kelvin's principle: No cyclic process exists which produces no other effect than the extraction of heat from a body and its conversion into an equivalent amount of work.

Carathéodory's principle: There are states of a system, differing infinitesimally from a given state, which are unattainable from that state by any quasi-static adiabatic process.

The second law (especially in the Carathéodory form) allows one to order the states according to the direction in which the system is allowed to evolve. A parameter, called an empirical entropy, is ascribed to each state, such that this parameter never decreases spontaneously. In the absence of external agents doing work on the system, any change in the system is either reversible, which involves no change in entropy, or irreversible, which involves an increase in entropy. The bucket of warm water with the warm cannon ball has a higher entropy than the bucket of cold water and the red hot cannon ball, so that the evolution is irreversible and can go in only one direction. To reverse the change we need to do work on the system: mechanical work to lift the cannon ball, and thermodynamic work, using some form of refrigerator to decrease the temperature of the bucket of water, and some other process to heat the cannon ball.
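
The cannon ball example can be made quantitative with a rough sketch. It uses the relation dQ = T dS from Section 1.3 and assumes, purely for illustration, that each body has a constant heat capacity, that no water boils, and that no heat escapes to the surroundings. The function name `equilibrate` and all masses, specific heats and temperatures below are illustrative assumptions, not values from the lecture.

```python
import math

def equilibrate(m1, c1, T1, m2, c2, T2):
    """Final temperature and entropy changes when two bodies exchange heat.

    Assumes constant specific heats c1, c2 (J/(kg K)), no phase change and
    no heat loss. Temperatures are absolute (kelvin).
    """
    C1, C2 = m1 * c1, m2 * c2                   # total heat capacities, J/K
    Tf = (C1 * T1 + C2 * T2) / (C1 + C2)        # energy conservation (first law)
    # Integrating dS = dQ/T = C dT/T from the initial to the final temperature:
    dS1 = C1 * math.log(Tf / T1)
    dS2 = C2 * math.log(Tf / T2)
    return Tf, dS1, dS2

# Illustrative numbers (assumed): a 5 kg iron ball at 1100 K dropped into
# 10 kg of water at 290 K.
T_f, dS_ball, dS_water = equilibrate(5.0, 450.0, 1100.0, 10.0, 4186.0, 290.0)
dS_total = dS_ball + dS_water

print(f"final temperature: {T_f:.1f} K")
print(f"entropy change of ball:  {dS_ball:.1f} J/K")
print(f"entropy change of water: {dS_water:.1f} J/K")
print(f"total entropy change:    {dS_total:.1f} J/K")
```

Under these assumptions the entropy of the ball decreases and that of the water increases by a larger amount, so the total entropy rises, which is the sense in which the warm state has the higher entropy.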

Third Law: The entropy of a system approaches a constant value as the temperature approaches absolute zero.

The third law is relatively easy to understand from a statistical point of view in which entropy is associated with disorder. As absolute zero is approached, all thermal motions cease, and any system must approach an ordered state in which the particles do not move. Hence, the entropy of a system is defined only to within an arbitrary constant, and only changes in entropy have physical significance. The changes in entropy become negligibly small as absolute zero is approached.

1.3 Thermodynamic variables

To describe these ideas quantitatively we need to introduce thermodynamic variables and state functions. For the present let the pressure, $P$, be the only force that we consider. Then an infinitesimal change in the volume, $dV$, implies an infinitesimal amount of work done on the system, $dW = -P\,dV$. Note the sign: a positive change $dV$ implies that the system expands a little, and hence that the system does work against the external pressure force ($P$ is always positive), so that with $dW$ defined as the work done on the system, we need a minus sign. Let $dQ$ be the amount of heat added to the system. Let $U$ be the internal energy of the system. Then the first law requires conservation of energy, which is expressed through the relation $dU = dQ + dW = dQ - P\,dV$.

The absolute temperature and the metrical entropy

The zeroth law enables one to define an empirical temperature and the second law allows one to define an empirical entropy. What this means is that the laws allow us to order states with parameters $t$ and $s$. Consider two states of a system labeled 1 and 2, with parameters $t_1$, $s_1$ and $t_2$, $s_2$, respectively. For $t_1 > t_2$ we say that state 1 is hotter than state 2.
The observational fact is that if the system is brought into thermal contact with some other system so that heat can be added to or taken away from our system, then heat is required to flow out of the system to get from state 1 to state 2, and heat is required to flow into the system to get from state 2 to state 1. For $s_1 > s_2$ it is possible for state 2 to evolve into state 1 without any work being done on the system, but it is impossible for state 1 to evolve into state 2.

The temperature scale that we are familiar with is the Celsius scale, which is offset from the absolute temperature (Kelvin) scale by 273.15 K. That is, absolute zero is −273.15 °C. The unit of the absolute temperature scale is the kelvin, denoted K.

The existence of the absolute temperature scale involves a rather subtle argument, which can be summarized as follows. Consider the heat, $Q$, transferred to the system in some irreversible change (that is, found by integrating the elements $dQ$ over a range of infinitesimal changes). The zeroth and second laws imply that $Q = Q(t, s)$ is some function of $t$ and $s$. For a reversible change we know that $s$ does not change, and a reversible change corresponds to no transfer of heat. Hence for a reversible change we have $dQ = 0$ and $ds = 0$. Now consider an irreversible change, $ds \neq 0$. In an irreversible change we have $dQ = \lambda\,ds$, where $\lambda = \lambda(t, s)$ is some function of the empirical temperature and the empirical entropy. The subtle argument implies that $\lambda(t, s)$ is of the form $\lambda(t, s) = T(t)\,\phi(s)$, that is, that $\lambda(t, s)$ depends on $t$ and $s$ only in the form of a product of a function, $T(t)$, of $t$ and a function, $\phi(s)$, of $s$. The argument involves imagining splitting the system into two parts, labeled A and B say. (Imagine splitting the system consisting of the air in the room into two parts corresponding to the air in two halves of the room.)
Each of these parts is a thermodynamic system in itself, and hence we have $dQ_A = \lambda_A\,ds_A$, $dQ_B = \lambda_B\,ds_B$, with $Q_A$, $\lambda_A$ functions of $t_A$, $s_A$ and $Q_B$, $\lambda_B$ functions of $t_B$, $s_B$. The amount of heat is additive, so that we have $dQ = dQ_A + dQ_B$. The empirical temperature of the two halves is the same, so that we have $t_A = t_B = t$. The total empirical entropy $s$ depends on $s_A$, $s_B$ and $t$. One can satisfy these differential relations only if the dependences of $\lambda$, $\lambda_A$ and $\lambda_B$ on $t$ are all of the same functional form, a function $T(t)$ say. It then follows that if one writes

$$\lambda(t, s) = T(t)\,\phi(s), \qquad \lambda_A(t, s_A) = T(t)\,\phi_A(s_A), \qquad \lambda_B(t, s_B) = T(t)\,\phi_B(s_B)$$

and

$$S(s) = \int ds\,\phi(s), \qquad S_A(s_A) = \int ds_A\,\phi_A(s_A), \qquad S_B(s_B) = \int ds_B\,\phi_B(s_B),$$

one has $dS = dS_A + dS_B$. The function $T(t)$ is the absolute temperature, and the function $S(s)$ is the metrical entropy. From now on by temperature and entropy we mean the specific functions $T$ and $S$, respectively. One then has $dQ = T\,dS$.

The combined first and second laws of thermodynamics now imply that in an infinitesimal change, the internal energy, $U$, of a system changes according to

$$dU = T\,dS - P\,dV. \tag{1.1}$$

Dependent and independent variables

The variables in our thermodynamic relations appear in pairs: $P$, $V$ and $T$, $S$ in the case considered so far. One of each pair is chosen as the independent variable. According to (1.1) the variables we are allowing to change are $V$ and $S$, which are therefore identified as the independent variables. The other two variables, $P$ and $T$, are then the dependent variables, in the sense that they should be regarded as functions of $S$, $V$ in (1.1). Specifically, the relation (1.1) determines the change in the internal energy when the independent variables $S$ and $V$ are changed. By implication, $U = U(S, V)$ is a function of these two variables. The internal energy is an example of a state function. If we know $U(S, V)$ as a function of $S$ and $V$, then we know everything there is to know about the system from a thermodynamic viewpoint.
Noting that we have

$$dU = \left(\frac{\partial U}{\partial S}\right)_V dS + \left(\frac{\partial U}{\partial V}\right)_S dV, \tag{1.2}$$

comparison of (1.1) and (1.2) implies

$$T = \left(\frac{\partial U}{\partial S}\right)_V, \qquad P = -\left(\frac{\partial U}{\partial V}\right)_S. \tag{1.3}$$

That is, given the state function $U(S, V)$ we may find the dependent variables $T$ and $P$ as functions of the independent variables $S$ and $V$ using (1.3).

Also we can classify variables as intrinsic, which do not depend on the amount of the system, or extrinsic, which are proportional to the amount of the system (these are more commonly called intensive and extensive variables). The variables $P$, $T$ are intrinsic and $V$, $S$ are extrinsic.

1.4 Specific heats and expansion coefficients

The way a system responds to changes in variables is described in terms of various coefficients that are derived by partial differentiation of the appropriate state function. Consider how the energy of a system changes as heat is added to it. An element of heat is $dQ = T\,dS$. The effect of adding heat is different if one does so at constant volume or at constant pressure. At constant volume no work is done, but this is not so at constant pressure. The specific heats at constant volume and at constant pressure are defined by

$$c_V = T\left(\frac{\partial S}{\partial T}\right)_V, \qquad c_P = T\left(\frac{\partial S}{\partial T}\right)_P, \tag{1.4}$$

respectively. The coefficient of thermal expansion is defined by

$$\beta = \frac{1}{V}\left(\frac{\partial V}{\partial T}\right)_P. \tag{1.5}$$

Another coefficient is that of isothermal compressibility:

$$\kappa = -\frac{1}{V}\left(\frac{\partial V}{\partial P}\right)_T. \tag{1.6}$$

1.5 The equation of state

Any specific thermodynamic system may be described by an equation of state, which is some functional relation between $P$, $V$ and $T$. We may write the equation of state in the form

$$f(P, V, T) = 0, \tag{1.7}$$

where the function $f$ depends on the particular system. The simplest system is an ideal gas. In this case the equation of state is

$$PV = NkT, \tag{1.8}$$

where $N$ is the number of particles and $k$ is Boltzmann's constant. Another example of an equation of state is that for a van der Waals gas:

$$\left(P + \frac{a}{v^2}\right)(v - b) = RT, \tag{1.9}$$

where $a$ and $b$ are constants, $v = V N_0/N$ is the molar specific volume, $R = N_0 k$ is the gas constant, and $N_0$ is Avogadro's number.
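The equation of state (1.9) reduces to (1.8) for $a = 0$, $b = 0$.

Appendix A: Rules of Partial Differentiation

1). Let $f(x, y, z)$ be any differentiable function. Then one has

$$df = \left(\frac{\partial f}{\partial x}\right)_{y,z} dx + \left(\frac{\partial f}{\partial y}\right)_{x,z} dy + \left(\frac{\partial f}{\partial z}\right)_{x,y} dz. \tag{A.1}$$

The order of differentiation is reversible:

$$\left[\frac{\partial}{\partial y}\left(\frac{\partial f}{\partial x}\right)_{y,z}\right]_{x,z} = \left[\frac{\partial}{\partial x}\left(\frac{\partial f}{\partial y}\right)_{x,z}\right]_{y,z}. \tag{A.2}$$

2). Let there be a relation $f(x, y, z) = 0$ that can be solved for $x = x(y, z)$, $y = y(x, z)$ or $z = z(x, y)$. Then the partial derivative of the first of these functions with respect to $y$ at fixed $z$ is related to the partial derivative of the second with respect to $x$ at fixed $z$ by

$$\left(\frac{\partial x}{\partial y}\right)_z \left(\frac{\partial y}{\partial x}\right)_z = 1. \tag{A.3}$$

The partial derivatives also satisfy the chain rule (often called the cyclic, or triple product, rule)

$$\left(\frac{\partial x}{\partial y}\right)_z \left(\frac{\partial y}{\partial z}\right)_x \left(\frac{\partial z}{\partial x}\right)_y = -1. \tag{A.4}$$

3). Let $f(x, y)$ be a function of $x$ and $y$ with $y = y(x, z)$ a function of $x$ and a third variable, $z$. Then $f = f\bigl(x, y(x, z)\bigr)$ may also be regarded as a function of $x$ and $z$. One has

$$df = \left(\frac{\partial f}{\partial x}\right)_y dx + \left(\frac{\partial f}{\partial y}\right)_x dy, \tag{A.5}$$

$$df = \left(\frac{\partial f}{\partial x}\right)_z dx + \left(\frac{\partial f}{\partial z}\right)_x dz, \tag{A.6}$$

$$dy = \left(\frac{\partial y}{\partial x}\right)_z dx + \left(\frac{\partial y}{\partial z}\right)_x dz. \tag{A.7}$$

On inserting (A.7) into (A.5) and comparing the result with (A.6), one finds

$$\left(\frac{\partial f}{\partial x}\right)_z = \left(\frac{\partial f}{\partial x}\right)_y + \left(\frac{\partial f}{\partial y}\right)_x \left(\frac{\partial y}{\partial x}\right)_z, \tag{A.8}$$

$$\left(\frac{\partial f}{\partial z}\right)_x = \left(\frac{\partial f}{\partial y}\right)_x \left(\frac{\partial y}{\partial z}\right)_x. \tag{A.9}$$

Exercise Set 1

1.1). The coefficient of isothermal compressibility is

$$\kappa = -\frac{1}{V}\left(\frac{\partial V}{\partial P}\right)_T, \tag{E1.1}$$

and the coefficient of thermal expansion is

$$\beta = \frac{1}{V}\left(\frac{\partial V}{\partial T}\right)_P. \tag{E1.2}$$

Show that for an ideal gas one has $\kappa = 1/P$, $\beta = 1/T$.

1.2). Repeat the calculations in the previous problem, but for a van der Waals gas. Show that one has

$$\kappa = \frac{v^2 (v - b)^2}{RT v^3 - 2a(v - b)^2}, \qquad \beta = \frac{R v^2 (v - b)}{RT v^3 - 2a(v - b)^2}. \tag{E1.3}$$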
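
The results quoted in Exercise Set 1 can be cross-checked numerically before attempting the algebra. The sketch below writes the van der Waals equation as $P(v, T)$, estimates the partial derivatives by central differences, and uses (A.3) and (A.4) to convert them into $\kappa$ and $\beta$. The function names, the step size and the values of $a$, $b$, $v$ and $T$ are illustrative assumptions (the constants are only roughly those of carbon dioxide); this is a numerical sanity check, not a derivation.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def pressure(v, T, a=0.0, b=0.0):
    """Van der Waals pressure from (P + a/v^2)(v - b) = RT; a = b = 0 is the ideal gas."""
    return R * T / (v - b) - a / v**2

def dP_dv(v, T, a, b, h=1e-6):
    """Central-difference estimate of (dP/dv) at fixed T."""
    dv = h * v
    return (pressure(v + dv, T, a, b) - pressure(v - dv, T, a, b)) / (2 * dv)

def dP_dT(v, T, a, b, h=1e-6):
    """Central-difference estimate of (dP/dT) at fixed v."""
    dT = h * T
    return (pressure(v, T + dT, a, b) - pressure(v, T - dT, a, b)) / (2 * dT)

def kappa_numeric(v, T, a, b):
    # kappa = -(1/v)(dv/dP)_T, with (dv/dP)_T = 1/(dP/dv)_T from (A.3)
    return -1.0 / (v * dP_dv(v, T, a, b))

def beta_numeric(v, T, a, b):
    # beta = (1/v)(dv/dT)_P, with (dv/dT)_P = -(dP/dT)_v / (dP/dv)_T from (A.3), (A.4)
    return -dP_dT(v, T, a, b) / (v * dP_dv(v, T, a, b))

def kappa_formula(v, T, a, b):
    """Closed form quoted in (E1.3)."""
    return v**2 * (v - b)**2 / (R * T * v**3 - 2 * a * (v - b)**2)

def beta_formula(v, T, a, b):
    """Closed form quoted in (E1.3)."""
    return R * v**2 * (v - b) / (R * T * v**3 - 2 * a * (v - b)**2)

# Illustrative state (assumed values): roughly CO2-like constants in SI units.
a, b = 0.364, 4.27e-5      # Pa m^6 / mol^2, m^3 / mol
v, T = 1.0e-3, 300.0       # m^3 / mol, K

print("van der Waals kappa:", kappa_numeric(v, T, a, b), kappa_formula(v, T, a, b))
print("van der Waals beta: ", beta_numeric(v, T, a, b), beta_formula(v, T, a, b))
print("ideal gas kappa*P:  ", kappa_numeric(v, T, 0.0, 0.0) * pressure(v, T))
print("ideal gas beta*T:   ", beta_numeric(v, T, 0.0, 0.0) * T)
```

The numerical and closed-form values should agree to within the finite-difference error, and for $a = b = 0$ the products $\kappa P$ and $\beta T$ should both be close to 1.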