* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download e-con 581 transcript

Survey

Document related concepts

Transcript

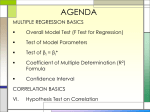

BUSINESS STATISTICS (PART-36)
UNIT-III REGRESSION AND CORRELATION (PART 5)

1. INTRODUCTION

Hello, dear students. In my last lecture on regression analysis, we learned how to develop a linear regression model using bivariate sample data. Once the model is developed, it is ready to be used for prediction. The regression model we develop is based on a sound mathematical principle, namely the method of least squares, so it is an appropriate model to use. Still, since it is a non-deterministic model built on the basis of sample data, it is better to check its validity. Therefore, in this lecture we shall discuss the method of checking the appropriateness or adequacy of the fitted regression model.

It is of primary importance to be aware that a regression analysis is not completed by fitting a model by least squares and providing estimates of the dependent variable y. This tells only half of the story. Because we fit a linear regression model on the basis of sample observations, we can never be sure that a particular model is correct unless we check its appropriateness or validity.

2. EXAMINING THE RESIDUALS

Now, how do we examine the model? In order to check the linear regression model, let us consider the quantity $e_i = y_i - \hat{y}_i$. In this equation, $y_i$ is the observed value, $\hat{y}_i$ denotes the corresponding value predicted by the fitted linear regression model, and $e_i$ denotes the error or residual. We have such an error for each of the n observed values, i.e. i = 1, ..., n.

To understand how to check the validity of the model, we consider an example. This example concerns the reduction of automobile pollution (i.e., nitrogen oxides) by adding a chemical additive to petrol or diesel. The data on the amount of additive and the reduction in nitrogen oxides from 10 cars are given here in this table.
The first row shows the amount of additive, which is the variable x, i.e. x is the independent variable. The values of this variable are 1, 1, 2, 3, 4, 4, 5, 6, 6, 7. These figures show the amount of additive mixed in the petrol or gasoline. The resulting reduction in nitrogen oxides is denoted by the variable y, and here y takes the values 2.1, 2.5, 3.1 and so on. That is, in the first car, where the amount of additive x is 1 unit, the reduction in nitrogen oxides is 2.1 units. Similarly, in the car where the amount of additive is 2, the reduction in nitrogen oxides is 3.1, and so on. In the last car, where the amount of additive was 7 units, the reduction in nitrogen oxides was 4.8 units.

Now, when we fit a linear regression model to these data by the method of least squares, we get this equation:

$$\hat{y} = 2.0 + 0.387x$$

This model provides estimated values for given x values. That is,

$$\hat{y}_i = 2.00 + 0.387 x_i, \quad i = 1, 2, \ldots, n$$

If we put in a particular value of x, we get an estimated value of y, which we denote by $\hat{y}_i$. That is, when we put x = 1 in the equation we get 2.387 as the predicted value of y. For x = 2 we get the predicted value 2.774, and so on. For x = 7 the predicted value is 4.709.

Now we have two sets of y values. One is given in the second column, i.e. the observed values, which we denote by $y_i$. The others are the predicted values, which we denote by $\hat{y}_i$. The last column gives the difference between the observed values and the predicted values. This is the error term due to the model we are using, and these values are -0.287, 0.113, and so on, up to 0.078 and 0.091.
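The predicted values and residuals quoted above can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `predict` and the variable names are illustrative, and the residuals are computed only for the four (x, y) pairs that the lecture states explicitly, since the full data table is not reproduced in this transcript.

```python
# Fitted line quoted in the lecture: y_hat = 2.0 + 0.387 * x
B0, B1 = 2.0, 0.387

def predict(x):
    """Predicted reduction in nitrogen oxides for additive amount x."""
    return B0 + B1 * x

# Additive amounts for the ten cars, as listed in the lecture.
x_values = [1, 1, 2, 3, 4, 4, 5, 6, 6, 7]
predictions = [round(predict(x), 3) for x in x_values]

# Only these (x, y) pairs are stated explicitly in the transcript;
# the remaining observed y values are not filled in here.
quoted_pairs = [(1, 2.1), (1, 2.5), (2, 3.1), (7, 4.8)]

# Residual e_i = y_i - y_hat_i for each quoted pair.
residuals = [round(y - predict(x), 3) for x, y in quoted_pairs]

print(predictions)
print(residuals)
```

The predictions for x = 1, 2 and 7 come out as 2.387, 2.774 and 4.709, and the residuals for the first two cars and the last car come out as -0.287, 0.113 and 0.091, in agreement with the values read out in the lecture.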
So these are the values of the residuals or errors. Now, square all the values of the residuals (the errors $e_i$) and sum them. This is the sum of squares due to errors, and it is given by

$$\text{SSE} = (-0.287)^2 + (0.113)^2 + \cdots + (0.091)^2$$

So the value of SSE comes out to be 0.7376. For this data set we also have

$$S_x^2 = \sum x^2 - n\bar{x}^2 = 40.9, \qquad S_y^2 = 6.85, \qquad S_{xy} = \sum xy - n\bar{x}\bar{y} = 15.81,$$

and $b_1$ (the regression coefficient) $= 0.387$, so that, as before, our linear regression line is $\hat{y} = 2.0 + 0.387x$.

3. THE COEFFICIENT OF DETERMINATION

Now, in order to check the validity of the model, we consider the coefficient of determination. This is an index for checking the fitted linear regression model:

$$r^2 = \frac{b_1^2 S_x^2}{S_y^2}$$

That is, $r^2$ is equal to (SS due to linear regression) / (Total SS of y).

The value of $r^2$ for the given data comes out to be 0.89. It means that 89% of the variability in y is explained by linear regression, and the linear model seems satisfactory in this respect.

Note: r is nothing but Karl Pearson's correlation coefficient. So to check the model we have to calculate $r^2$, i.e. the square of the correlation coefficient.

When the value of $r^2$ is small, we can only conclude that a straight-line relation does not give a good fit to the data. Such a case may arise for either of the following reasons:

(a) There is little relation between the variables, in the sense that the scatter diagram fails to exhibit any pattern, as illustrated in this figure. That is, there is no relation between the x and y variables.

(b) There is a prominent relation but it is non-linear in nature; that is, the scatter is banded around a curve rather than a line. The part of $S_y^2$ that is explained by straight-line regression is small because the model is inappropriate. Some other relationship may improve the fit substantially.
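As a quick check of the figures in this section, the slope, the residual sum of squares and $r^2$ can be recomputed from the three summary statistics quoted in the lecture. The sketch below is illustrative only; the variable names are my own, and it uses the identity $\text{SSE} = S_y^2 - b_1^2 S_x^2$ that the lecture takes up in the next section.

```python
# Summary statistics quoted in the lecture for the ten cars.
S_xx = 40.9    # S_x^2 = sum(x^2) - n * x_bar^2
S_yy = 6.85    # S_y^2 = total sum of squares of y
S_xy = 15.81   # S_xy  = sum(x*y) - n * x_bar * y_bar

b1 = S_xy / S_xx                 # regression coefficient of y on x
ss_regression = b1 ** 2 * S_xx   # SS due to linear regression
sse = S_yy - ss_regression       # residual SS (sum of squares due to errors)
r_squared = ss_regression / S_yy # coefficient of determination

print(round(b1, 3), round(sse, 3), round(r_squared, 2))
```

This gives a slope of about 0.387 and an $r^2$ of about 0.89, as stated. The SSE comes out near 0.739 rather than the quoted 0.7376; the small gap is presumably because $S_y^2$ is rounded to 6.85 here.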
In this graph we show a curvilinear relationship between the y and x data. So a linear regression model should not be fitted to this type of data.

4. THEORETICAL ASPECT OF $r^2$

We have taken $r^2$ as an index to check the validity of the linear regression model. But what is the theoretical basis for doing so? Let us look at it here. Using the linear regression model, the observed value $y_i$ can be written as

$$y_i = (b_0 + b_1 x_i) + (y_i - b_0 - b_1 x_i)$$

We can see that on the LHS we have $y_i$, and on the RHS it is also $y_i$, but written in this particular form. Here $y_i$ is the observed value, $(b_0 + b_1 x_i)$ is the part explained by the linear relation, and the last term $(y_i - b_0 - b_1 x_i)$ is the residual, or deviation from the linear relation, since we know that $\hat{y}_i = b_0 + b_1 x_i$.

As an overall measure of the discrepancy, or the variation from linearity, we can consider the sum of squares of the residuals, as we defined it earlier. That is,

$$\text{SSE} = \sum (y_i - b_0 - b_1 x_i)^2 = S_y^2 - b_1^2 S_x^2$$

The total variability of the y values is reflected in the total sum of squares, $S_y^2 = \sum (y_i - \bar{y})^2$. This total sum of squares can be expressed in terms of $S_x^2$ and SSE by this equation:

$$S_y^2 = b_1^2 S_x^2 + \text{SSE}$$

where $b_1^2 S_x^2$ is the SS explained by the linear relation, and SSE is the residual SS, or sum of squares due to errors.

Now look at this equation. If $b_1^2 S_x^2$ is large, then SSE will be small, because $\text{SSE} = S_y^2 - b_1^2 S_x^2$. The value of $S_y^2$ is not in our control, because it is the sum of squares of the observed values about their mean. This is what we get from the experiment, so we cannot control it. What we can control is the x variable, the independent variable. We take as the independent variable one that has a high correlation coefficient with the y variable.
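The identity $\text{SSE} = S_y^2 - b_1^2 S_x^2$ is stated above without its intermediate steps. One way to see it, assuming the usual least-squares estimates $b_0 = \bar{y} - b_1\bar{x}$ and $b_1 = S_{xy}/S_x^2$, with $S_x^2 = \sum (x_i - \bar{x})^2$ and $S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y})$, is the following short expansion:

```latex
\begin{aligned}
\text{SSE} &= \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i)^2 \\
           &= \sum_{i=1}^{n} \bigl[(y_i - \bar{y}) - b_1 (x_i - \bar{x})\bigr]^2
              && \text{substituting } b_0 = \bar{y} - b_1 \bar{x} \\
           &= S_y^2 - 2 b_1 S_{xy} + b_1^2 S_x^2 \\
           &= S_y^2 - 2 b_1^2 S_x^2 + b_1^2 S_x^2
              && \text{since } S_{xy} = b_1 S_x^2 \\
           &= S_y^2 - b_1^2 S_x^2 .
\end{aligned}
```

Rearranging the last line gives the decomposition $S_y^2 = b_1^2 S_x^2 + \text{SSE}$ used in this section.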
So if we choose the x variable in such a way that the factor $b_1^2 S_x^2$ is large, the SSE will be small. This is the basis for taking $r^2$ as an indicator to test the validity of the linear regression model.

Now, let us look at this part. What is $r^2$ in terms of $S_x^2$ and $S_y^2$? As an index of how well the straight line fits, it is reasonable to consider the proportion

$$r^2 = \frac{b_1^2 S_x^2}{S_y^2} = \frac{\text{SS due to linear regression}}{\text{Total SS of } y}$$

where $r^2$ represents the proportion of the y variability explained by the linear relation with x. Here, what is $b_1$? We know that

$$b_1 = \frac{S_{xy}}{S_x^2}$$

That is the regression coefficient of y on x. So $r^2$ can also be written as

$$r^2 = \frac{S_{xy}^2}{S_x^2 S_y^2}$$

Here $S_{xy}$, we know, is the covariance part, and so

$$r = \frac{S_{xy}}{S_x S_y}$$

is the sample correlation coefficient, which we denote by r.

5. SUMMARY

In today's lecture we have discussed a very important aspect of regression analysis: how to check the appropriateness or validity of the linear regression model. Our process of developing a model is as follows. Once the sample data are given to us, we first want to know what type of relationship there is between the y and x variables. We plot a scatter diagram, and from it we get an idea whether the relation between y and x is linear, curvilinear, or exponential. Then, by the method of least squares, we develop an appropriate model. But once we develop the model, it is proper for us to check its validity, and that is what we have learnt in this lecture today: how to test the validity of the linear regression model, which we know is a non-deterministic or statistical model, different from a deterministic or mathematical model.
We have learnt today that we can check the validity of the model by calculating the square of the sample correlation coefficient. That tells us whether the model is valid or not. Once we are satisfied that the model is valid, we can use it for the purpose of prediction. Thank you!