* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Model 1

Survey

Document related concepts

Transcript

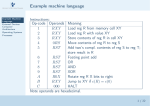

Chapter 5 Multicollinearity, Dummy and Interaction variables 1 We noted from the previous chapter that there are several dangers of adding new variables indiscriminately to the model. First, although unadjusted R2 goes up, we lose degrees of freedom because we have to estimate additional coefficients. The smaller the degrees of freedom, the less precise the parameter estimates. There is another serious consequence of adding too many variables to a model. If a model has several variables, it is likely that some of the variables will be strongly correlated. This property, known as multicollinearity, can drastically alter the results from one model to another, making them much harder to interpret. 2 Example 1 Let Housingt = number of housing starts (in thousands) in the U.S. Popt = U.S. population in millions GDPt = U.S. Gross Domestic Product in billions of dollars Interatet = new home mortgage rate t = 1963 to 1985 3 SAS codes: data housing; infile ‘d:\teaching\MS3215\housing.txt’; input year housing pop gdp unemp intrate ; proc reg data= housing ; model housing= pop intrate; run; proc reg data= housing ; model housing= gdp intrate; run; proc reg data= housing ; model housing= pop gdp intrate; run; 4 The REG Procedure Model: MODEL1 Dependent Variable: housing Number of Observations Read Number of Observations Used 23 23 Analysis of Variance Source Model Error Corrected Total DF 2 20 22 Root MSE Dependent Mean Coeff Var Sum of Squares 1125359 1500642 2626001 Mean Square F Value Pr > F 562679 7.50 0.0037 75032 273.91987 R-Square 1601.07826 Adj R-Sq 17.10846 0.4285 0.3714 Parameter Estimates Variable Intercept pop intrate Parameter DF Estimate 1 -3813.21672 1 33.82138 1 -198.41880 Standard Error 1588.88417 9.37464 51.29444 t Value Pr > |t| -2.40 0.0263 3.61 0.0018 -3.87 0.0010 5 The REG Procedure Model: MODEL1 Dependent Variable: housing Number of Observations Read Number of Observations Used 23 23 Analysis of Variance Source Model Error Corrected Total Sum of Squares 1134747 1491254 2626001 DF 2 20 22 Root MSE Dependent Mean Coeff Var Mean Square F Value Pr > F 567374 7.61 0.0035 74563 273.06168 R-Square 1601.07826 Adj R-Sq 17.05486 0.4321 0.3753 Parameter Estimates Variable DF Intercept gdp intrate 1 1 1 Parameter Estimate 687.92418 0.90543 -169.67320 Standard Error t Value 382.69637 0.24899 43.83996 1.80 3.64 -3.87 Pr > |t| 0.0874 0.0016 0.0010 6 The REG Procedure Model: MODEL1 Dependent Variable: housing Number of Observations Read Number of Observations Used 23 23 Analysis of Variance Source Model Error Corrected Total DF 3 19 22 Root MSE Dependent Mean Coeff Var Sum of Squares 1147699 1478302 2626001 Mean Square F Value Pr > F 382566 4.92 0.0108 77805 278.93613 R-Square 1601.07826 Adj R-Sq 17.42177 0.4371 0.3482 Parameter Estimates Variable Intercept pop gdp intrate Parameter DF Estimate 1 -1317.45317 1 4.91398 1 0.52186 1 -184.77902 Standard Error 4930.68042 36.55401 0.97391 58.10610 t Value -0.27 0.41 0.54 -3.18 Pr > |t| 0.7922 0.6878 0.5983 0.0049 7 Note that in the last model, the t statistics for Pop and GDP are insignificant, but they are both significant when entered separately in the first and second models. This is because the three variables Pop, GDP and Intrate are highly correlated. It can be shown that Cor(GDP, Pop) = 0.99 Cor(GDP, Intrate ) = 0.88 Cor(Pop, Intrate ) = 0.91 8 Example 2 Let expensesi be the cumulative expenditure on the maintenance for a given automobile, milesi be the cumulative mileage in thousand of miles and weeki be its age in weeks since the original purchase. i= 1,…,57. 9 SAS codes: data automobile; infile ‘d:\teaching\MS3215\automobile.txt’; input weeks miles expenses; proc reg data= automobile; model expenses = weeks; run; proc reg data= automobile; model expenses = miles; run; proc reg data= automobile; model expenses = weeks miles; run; 10 The REG Procedure Model: MODEL1 Dependent Variable: expenses Number of Observations Read Number of Observations Used 57 57 Analysis of Variance Source Model Error Corrected Total DF 1 55 56 Sum of Squares 66744854 7474117 74218972 Root MSE Dependent Mean Coeff Var Mean Square 66744854 135893 F Value Pr > F 491.16 <.0001 368.63674 R-Square 1426.57895 Adj R-Sq 25.84061 0.8993 0.8975 Parameter Estimates Variable Intercept weeks DF 1 1 Parameter Estimate -626.35977 7.34942 Standard Error 104.71371 0.33162 t Value -5.98 22.16 Pr > |t| <.0001 <.0001 11 The REG Procedure Model: MODEL1 Dependent Variable: expenses Number of Observations Read Number of Observations Used 57 57 Analysis of Variance Source Model Error Corrected Total DF 1 55 56 Sum of Squares 63715228 10503743 74218972 Root MSE Dependent Mean Coeff Var Mean Square F Value Pr > F 63715228 333.63 <.0001 190977 437.00933 R-Square 1426.57895 Adj R-Sq 30.63338 0.8585 0.8559 Parameter Estimates Variable Intercept miles Parameter DF Estimate 1 -796.19928 1 53.45246 Standard Error 134.75770 2.92642 t Value -5.91 18.27 Pr > |t| <.0001 <.0001 12 The REG Procedure Model: MODEL1 Dependent Variable: expenses Number of Observations Read Number of Observations Used 57 57 Analysis of Variance Source Model Error Corrected Total DF 2 54 56 Root MSE Dependent Mean Coeff Var Sum of Squares 70329066 3889906 74218972 Mean Square 35164533 72035 268.39391 R-Square 1426.57895 Adj R-Sq 18.81381 F Value Pr > F 488.16 <.0001 0.9476 0.9456 Parameter Estimates Variable Intercept weeks miles Parameter DF Estimate 1 7.20143 1 27.58405 1 -151.15752 Standard Error 117.81217 2.87875 21.42918 t Value Pr > |t| 0.06 0.9515 9.58 <.0001 -7.05 <.0001 13 A car that is driven more should have a greater maintenance expense. Similarly, the older the car the greater the cost of maintaining it. So we would expect both slope coefficients to be positive. It is interesting to note that even though the coefficient for miles is positive in the second model, it is negative in the third model. Thus, there is a reversal in sign. The magnitude of the coefficient for weeks also changes substantially. The t statistics for miles and weeks are also much lower in the third model even though both variables are still significant. The problem is again the high correlation between weeks and miles. 14 Consider the model yi 0 1 X i1 2 X i 2 i and let ˆ0 , ˆ1 and ˆ2 be the least squares estimates of 0 , 1 and 2 respectively. It can be shown that var ˆ1 var ˆ2 2 2 x x i1 1 1 r122 2 2 2 x x 1 r i2 2 12 cov ˆ1 , ˆ2 r12 2 1 r 2 12 n xi1 x1 i 1 where r12 = Cor(X1, X2) 2 n xi 2 x2 2 i 1 15 The effect of increasing r12 on var ˆ2 var ˆ2 Value of r12 0.00 2 n x i 1 i2 x A 2 0.5 1.33 x A 0.7 1.96 x A 0.8 2.78 x A 0.9 5.26 x A 0.95 10.26 x A 0.97 16.92 x A 0.99 50.25 x A 0.995 100 x A 0.999 500 x A The sign reversal and decrease in t value are caused by the inflated variance of the estimators. 16 Consequences of Multicollinearity Wider confidence intervals. Insignificant t values. High R2 and consequently F can convincingly reject H 0 : 1 2 ... p 0 , but few significant t values. Sensitivity of least squares estimates and their standard errors to small changes in model. 17 Exact multicollinearity exists if two or more independent variables have a perfect linear relationship between them. In this case there is no unique solution to the normal equations derived from least squares. When this happens, one or more variables should be dropped from the model. Multicollinearity is very much a norm in regression analysis involving non-experimental (or uncontrolled) data. It can never be eliminated . The question is not about the existence or nonexistence of multicollinearity, but how serious the problem is. 18 Identifying Multicollinearity High R2 (and significant F value) but low values for t statistics. High correlation coefficients between the explanatory variables. But the converse need not be true. In otherwords, multicollinearity may still be a problem even though the correlation between two variables does not appear to be high. This is because three of more variables may be strongly correlated, yet pairwise correlation are not high. Regression coefficient estimates and standard errors sensitive to small changes in specification. 19 Variance Inflation Factor (VIF): Let x1, x2,…, xp be the p explanatory variables in a regression. Perform the regression of xk on the remaining p-1 explanatory variables and call the coefficient of determination from the 2 regression RK . The VIF for variable xk is VIFK 1 1 RK2 VIF is a measure of the strength of the relationship between each explanatory variable and all other explanatory variables in the model. 20 2 Relationship between RK and VIFK RK2 VIFK 0 1 0.9 10 0.99 100 21 How large the VIFK have to be to suggest a serious problem with multicollinearity? a) An individual VIFK larger than 10 indicates that multicollinearity may be seriously influencing the least squares estimates of the regression coefficients. p b) If the average of the VIFK, VIF VIFK / p , is larger than 5, K 1 then serious problems may exist. V IF indicates how many times larger the errors for the regression is due to multicollinearity than it would be if the variables were uncorrelated. 22 SAS codes: data housing; infile ‘d:\teaching\MS3215\housing.txt’; input year housing pop gdp unemp intrate ; proc reg data= housing ; model housing= pop gdp intrate/vif; run; 23 The REG Procedure Model: MODEL1 Dependent Variable: housing Number of Observations Read Number of Observations Used 23 23 Analysis of Variance Source Model Error Corrected Total DF 3 19 22 Root MSE Dependent Mean Coeff Var Sum of Squares 1147699 1478302 2626001 Mean Square F Value Pr > F 382566 4.92 0.0108 77805 278.93613 R-Square 1601.07826 Adj R-Sq 17.42177 0.4371 0.3482 Parameter Estimates Variable Intercept pop gdp intrate Parameter DF Estimate 1 -1317.45317 1 14.91398 1 0.52186 1 -184.77902 Standard Error t Value 4930.68042 -0.27 36.55401 0.41 0.97391 0.54 58.10610 -3.18 Pr > |t| 0.7922 0.6878 0.5983 0.0049 Variance Inflation 0 87.97808 64.66953 7.42535 24 Solutions to Multicollinearity 1) Benign Neglect If an analyst is less interested in interpreting individual coefficients but more interested in forecasting then multicollinearity may not a serious concern. Even with high correlations among independent variables, if the regression coefficients are significant and have meaningful signs and magnitudes, one need not be too concerned with multicollinearity. 25 2) Eliminating Variables Remove the variable with strong correlation with the rest would generally improve the significance of other variables. There is a danger, however, in removing too many variables from the model because that would lead to bias in the estimates. 3) Re-specifying the model For example, in the housing regression, we can include the variables as per capita rather than include population as an explanatory variable, leading to Housing GDP 0 1 2 Intrate Pop Pop 26 SAS codes: data housing; infile ‘d:\teaching\MS3215\housing.txt’; input year housing pop gdp unemp intrate ; phousing= housing/pop; pgdp= gdp/pop; proc reg data= housing ; model phousing = pgdp intrate/vif; run; 27 The REG Procedure Model: MODEL1 Dependent Variable: phousing Number of Observations Read Number of Observations Used 23 23 Analysis of Variance Source Model Error Corrected Total DF 2 20 22 Sum of Squares 26.33472 34.38472 60.71944 Root MSE Dependent Mean Coeff Var Mean Square F Value Pr > F 13.16736 7.66 0.0034 1.71924 1.31120 R-Square 7.50743 Adj R-Sq 17.46531 0.4337 0.3771 Parameter Estimates Variable DF Parameter Estimate Intercept pgdp intrate 1 1 1 2.07920 0.93567 -0.69832 Standard Error t Value 3.34724 0.36701 0.18640 0.62 2.55 -3.75 Pr > |t| Variance Inflation 0.5415 0.0191 0.0013 0 3.45825 3.45825 28 4) Increasing the sample size This solution is often recommended on the ground that such an increase improves the precision of an estimator and hence reduce the adverse effects of multicollinearity. But sometimes additional sample information may not available. 5) Other estimation techniques (beyond the scope of this course) Ridge regression Principal component analysis 29 Dummy variables In regression analysis, qualitative or categorical variables are often useful. Qualitative variables such as sex, martial status or political affiliation can be represented by dummy variables, usually coded as 0 and 1. The two values signify that the observation belongs to one of two possible categories. 30 Example The Salary Survey data set was developed from a salary survey of computer professionals in a large corporation. The objective of the survey was to identify and quantify those variables that determine salary differentials. In addition, the data could be used to determine if the corporation’s salary administration guidelines were being followed. The data appear in the file salary.txt. The response variable is salary (S) and the explanatory variables are: (1) experience (X), measured in years; (2) education (E), coded as 1 for completion of a high school (H.S.) diploma, 2 for completion of a bachelor degree (B.S.), and 3 for the completion of an advanced degree; (3) management (M), which is coded as 1 for a person with management responsibility and 0 otherwise. We shall try to measure the effects of these three variables on salary using regression analysis. 31 So, the regression model is Si 0 1 X i 2 Ei 3 M i i where Mi 1 0 if employee i takes on management responsibility otherwise This leads to two possible regressions: i) For managerial positions: Si 0 3 1 X i 2 Ei i ii) For non-managerial positions: Si 0 1 X i 2 Ei i 3 therefore represents the average salary difference between employees with and without managerial responsibilities. 32 SAS codes: data salary; infile ‘d:\teaching\MS3215\salary.txt’; input s x e m; proc reg data= salary; model s= x e m; run; 33 The REG Procedure Model: MODEL1 Dependent Variable: s Number of Observations Read Number of Observations Used 46 46 Analysis of Variance Source Model Error Corrected Total DF 3 42 45 Sum of Squares 928714168 72383410 1001097577 Root MSE Dependent Mean Coeff Var Mean Square 309571389 1723415 F Value Pr > F 179.63 <.0001 1312.78883 R-Square 17270 Adj R-Sq 7.60147 0.9277 0.9225 Parameter Estimates Variable Intercept x e m Parameter DF Estimate 1 6963.47772 1 570.08738 1 1578.75032 1 6688.12994 Standard Error 665.69473 38.55905 262.32162 398.27563 t Value 10.46 14.78 6.02 16.79 Pr > |t| <.0001 <.0001 <.0001 <.0001 34 So the estimated regressions are i) For managerial positions: Sˆi 6963.48 6688.13 570.09 X i 1578.76Ei 13651.61 570.09 X i 1578.76Ei ii) For non-managerial positions: Sˆi 6963.48 570.09 X i 1578.76Ei Note that all variables are significant and all estimated coefficients have positive signs, indicating that, other things being equal, a. Each additional year of work experience is worth a salary increment of $570. b. An improvement of qualification from high school to a bachelor’s degree or from bachelor’s degree to advanced degree is worth $1579. c. On average, employees with managerial responsibility receive $6688 more than employees without managerial responsibility. 35 Note that only one dummy variable is needed to represent M which contains two categories. Suppose we define a new variable which is a compliment to M, that is, M ' i 1 0 if employee i does not takes on management responsibility otherwise Note that whenever Mi= 1, M i' = 0. If M i' is used in conjunction with Mi then we have Si 0 1 X i 2 Ei 3 M i 4 M i' i but note that the “implicit” explanatory variable (call it Ii) attached to the intercept term is represented by a vector of 1. Hence, I i M i M i' PERFECT MULTICOLLINEARITY 36 The method of least squares fails as a result and there is no unique solution to the normal equations. This problem is known as “dummy variable trap”. In general, for a qualitative variable containing J categories, only J-1 dummy variables are required. Question: What if Mi is replaced by M i' , will there be any difference in the result? Answer: Note that Mi and M i' contain essentially the same information. The results will be exactly the same. 37 SAS codes: data salary; infile ‘d:\teaching\MS3215\salary.txt’; input s x e m; mp= 0; If m eq 0 then mp= 1; proc reg data= salary; model s= x e mp; run; 38 The REG Procedure Model: MODEL1 Dependent Variable: s Number of Observations Read Number of Observations Used 46 46 Analysis of Variance Source Model Error Corrected Total DF 3 42 45 Sum of Squares 928714168 72383410 1001097577 Root MSE Dependent Mean Coeff Var Mean Square F Value Pr > F 309571389 179.63 <.0001 1723415 1312.78883 17270 7.60147 R-Square Adj R-Sq 0.9277 0.9225 Parameter Estimates Variable Intercept x e mp DF 1 1 1 1 Parameter Estimate 13652 570.08738 1578.75032 -6688.12994 Standard Error 734.39164 38.55905 262.32162 398.27563 t Value 18.59 14.78 6.02 -16.79 Pr > |t| <.0001 <.0001 <.0001 <.0001 39 So the regressions are i) For managerial positions: Sˆi 13652 570.09 X i 1578.76Ei ii) For non-managerial positions: Sˆi 13652 6688.13 570.09 X i 1578.76Ei 6963.87 570.09 X i 1578.76Ei The results, except for minor differences due to roundings, are essentially the same as those when M, instead of M’, is used as an explanatory variable. 40 So far, education has been treated in a linear fashion. This may be too restrictive. Instead, we shall view education as a categorical variable and define two dummy variables to represent three categories, Bi 1 Ai if employee i completes a bachelor degree as his/her highest level of education attainment 0 otherwise 1 if employee i completes an advanced degree as his/her highest level of education attainment 0 otherwise So, when Ei= 1, Bi= Ai= 0 Ei= 2, Bi= 1, Ai= 0 Ei= 3, Bi= 0, Ai= 1 So the model to be estimated is Si 0 1 X i 2 Bi 3 Ai 4 M i i 41 SAS codes: data salary; infile ‘d:\teaching\MS3215\salary.txt’; input s x e m; a= 0; b= 0; If e eq 2 then b= 1; If e eq 3 then a= 1; proc reg data= salary; model s= x b a m; run; 42 The REG Procedure Model: MODEL1 Dependent Variable: s Number of Observations Read Number of Observations Used 46 46 Analysis of Variance Source Model Error Corrected Total Sum of DF Squares 4 957816858 41 43280719 45 1001097577 Mean Square 239454214 1055627 Root MSE 1027.43725 R-Square Dependent Mean 17270 Adj R-Sq Coeff Var 5.94919 F Value Pr > F 226.84 <.0001 0.9568 0.9525 Parameter Estimates Variable Intercept x b a m Parameter DF Estimate 1 8035.59763 1 546.18402 1 3144.03521 1 2996.21026 1 6883.53101 Standard Error t Value 386.68926 20.78 30.51919 17.90 361.96827 8.69 411.75271 7.28 313.91898 21.93 Pr > |t| <.0001 <.0001 <.0001 <.0001 <.0001 43 The interpretation of the coefficients of X and M are the same as before. The estimated coefficient of Bi (3144.04) measures the differential salary between bachelor degree holders relative to high school leavers. Similarly, the estimated coefficient of Ai (2996.21) measures the differential salary between advanced degree holders relative to high school leavers. The difference (3144.04-2996.21) measures the salary differential between bachelor degree and advanced degree holders. Interestingly, the results suggest that a bachelor degree is worth more than an advanced degree! (but is the difference significant?) 44 Interaction Variables The previous models all suggest that the effects of education and management status on salary determination are additive. For example, the effect of a management position is measured by 4 independently of the level of educational attainment. The possible non-additive effects may be evaluated by constructing additional variables designed to capture interaction effects. Interaction variables are products of existing variables, for example, B M and A M are interaction variables capturing interaction effects between educational levels and managerial responsibility. The expanded model is Si 0 1 X i 2 Bi 3 Ai 4 M i 5 Bi M i 6 Ai M i i 45 SAS codes: data salary; infile ‘d:\teaching\MS3215\salary.txt’; input s x e m; a= 0; b= 0; If e eq 2 then b= 1; If e eq 3 then a= 1; bm= b*m; am= a*m; proc reg data= salary; model s= x b a m bm am; run; 46 The REG Procedure Model: MODEL1 Dependent Variable: s Number of Observations Read Number of Observations Used 46 46 Analysis of Variance Source Model Erro r Corrected Total DF 6 39 45 Sum of Squares 999919409 1178168 1001097577 Mean Square 166653235 30209 Root MSE 173.80861 R-Square Dependent Mean 17270 Adj R-Sq Coeff Var 1.00641 F Value Pr > F 5516.60 <.0001 0.9988 0.9986 Parameter Estimates Variable Intercept x b a m bm am DF 1 1 1 1 1 1 1 Parameter Estimate 9472.68545 496.98701 1381.67063 1730.74832 3981.37690 4902.52307 3066.03512 Standard Error t Value 80.34365 117.90 5.56642 89.28 77.31882 17.87 105.33389 16.43 101.17472 39.35 131.35893 37.32 149.33044 20.53 Pr > |t| <.0001 <.0001 <.0001 <.0001 <.0001 <.0001 <.0001 47 Interpretation of regression results: There are 6 regression models altogether i) high school leavers in non-managerial positions Si 0 1 X i i ii) high school leaves in managerial positions Si 0 1 X i 4 M i i iii) bachelor degree holders in non-managerial positions Si 0 1 X i 2 Bi i iv) bachelor degree holders in managerial positions Si 0 1 X i 2 Bi 4 M i 5 Bi M i i 48 i) advanced degree holders in non-managerial positions Si 0 1 X i 3 Ai i i) advanced degree holders in managerial positions Si 0 1 X i 3 Ai 6 Ai M i i For example, to ascertain the marginal change in salary due to the acquisition of an advanced degree, Si 3 6 M i Ai 1730.75 3066.04M i (estimate) Thus, the marginal change is $1730.75 for non-managerial employees and $4796.79 for managerial employees. 49 Similarly, to investigate the impact if a change from nonmanagerial to managerial position, Si 4 5 Bi 6 Ai M i 3981.38 4902.52Bi 3066.04 Ai (estimate) Thus, the marginal change is $3981.38 for high school leavers, $8883.9 for bachelor degree holders and $7047.42 for advanced degree holders. 50 Comparing different groups of regression models by dummy variables Sometimes a collection of data may consist of two or more distinct subsets, each of which may require a separate regression. Serious bias may be incurred if a combined regression relationship is used to represent the pooled data set. 51 Example A job performance test was given to a group of 20 trainees on a special employment program at the end of the job training period. All these 20 trainees were eventually employed by the company and given a performance evaluation score after 6 months. The data are given in the file employment.txt. Let Y represent job performance score of employee and X be the score on the pre-employment test. We are concerned with equal employment opportunity. We want to compare 52 Model 1 (pooled): yij 0 1 xij ij , j 1,2 i 1,2,..., n Model 2A (Minority): yi1 01 11xi1 i1 Model 2B (White): yi 2 02 12 xi 2 i 2 In model 1, race distinction is ignored, the data are pooled and there is a single regression line. In models 2A and 2B, there are two separate regression relationships for the two subgroups, each with a distinct set of regression coefficients. 53 54 So, formally, we want to test H 0 : 11 12 , 01 02 vs. H1: at least one of the equalities in H0 is false The test may be performed using dummy and interaction variables. Define 1 if j = 1 (minority) Z ij 0 if j = 2 (white) and formulate the following model which we call model 3: yij 0 1 xij 2 Z ij 3 Z ij xij ij , j 1,2 i 1,2,..., n 55 Note that Model 3 is equivalent to Models 2A and 2B. When j=1, Z i1=1, and Model 3 becomes yi1 0 1 xi1 2 3 xi1 i1 0 2 1 3 xi1 i1 , which is Model 2A, and when j=2, Z i 2=0, and Model 3 reduces to yi 2 0 1 xi 2 i 2 , which is Model 2B. So a comparison between Model 1 and Models 2A and 2B is equivalent to a comparison between Model 1 and Model 3. 56 Now, Model 1 yij 0 1 xij ij ; j 1,2 i 1,2,..., n may be obtained by setting 2 3 0 in Model 3 yij 0 1 xij 2 Z ij 3 zij xij ij , j 1,2 i 1,2,..., n Thus, the hypothesis of interest becomes H 0 : 2 3 0 H1: at least one of 2 and 3 is non-zero 57 The test may be carried out using a partial-F test defined as SSER SSEF / k F SSEF /( n p 1) where k is the number of restrictions under H0, SSER is the SSE corresponding to the restricted model. SSEF is the SSE corresponding to the full model and n-p-1 is the degrees of freedom in the full model. 58 SAS codes: data employ; infile ‘d:\teaching\MS3215\employ.txt’; input x race y; z= 0; if race eq 1 then z= 1; zx= z*x; proc reg data= employ; model y= x z zx; test: test z= 0, zx= 0; run; proc reg data= employ; model y= x; run; 59 Regression results of Model 3 (full model) The REG Procedure Model: MODEL1 Dependent Variable: y Number of Observations Read Number of Observations Used 20 20 Analysis of Variance Source Model Error Corrected Total DF 3 16 19 Sum of Squares 62.63578 31.65547 94.29125 Mean Square 20.87859 1.97847 Root MSE 1.40658 R-Square Dependent Mean 4.50850 Adj R-Sq Coeff Var 31.19840 F Value 10.55 Pr > F 0.0005 0.6643 0.6013 Parameter Estimates Variable Intercept x z zx DF 1 1 1 1 Parameter Standard Estimate Error t Value Pr > |t| 2.01028 1.05011 1.91 0.0736 1.31340 0.67037 1.96 0.0677 -1.91317 1.54032 -1.24 0.2321 1.99755 0.95444 2.09 0.0527 60 The REG Procedure Model: MODEL1 Test test Results for Dependent Variable y Source Numerator Denominator DF 2 16 Mean Square 6.95641 1.97847 F Value Pr > F 3.52 0.0542 61 Regression results of Model 1 (restricted model) The REG Procedure Model: MODEL1 Dependent Variable: y Number of Observations Read Number of Observations Used 20 20 Analysis of Variance Source Model Error Corrected Total DF 1 18 19 Sum of Squares 48.72296 45.56830 94.29125 Mean Square 48.72296 2.53157 F Value 19.25 Pr > F 0.0004 Root MSE 1.59109 R-Square 0.5167 Dependent Mean 4.50850 Adj R-Sq 0.4899 Coeff Var 35.29093 Parameter Estimates Variable Intercept x DF 1 1 Parameter Estimate 1.03497 2.36053 Standard Error t Value Pr > |t| 0.86803 1.19 0.2486 0.53807 4.39 0.0004 62 Here, k=2, n=20, p=3 and n-p-1=16 Hence, 45.57 31.66 / 2 F 3.52 31.66 /16 Now let 0.10 F(0.10,2,16) 2.67 and p (3.52) 0.0542 Hence we reject H0 and conclude that the relationship is different for the two groups. Specifically, for minorities, we have yˆ i1 0.091 3.31xi1 and for white, yˆ i 2 2.01 1.31xi 2 63