Download Molecular Modelling for Beginners

Canonical quantization

Quantum electrodynamics

X-ray fluorescence

Hartree–Fock method

Scalar field theory

Renormalization group

Renormalization

Matter wave

X-ray photoelectron spectroscopy

Elementary particle

Particle in a box

Symmetry in quantum mechanics

Rutherford backscattering spectrometry

Chemical bond

Wave–particle duality

Franck–Condon principle

Molecular orbital

Electron scattering

Relativistic quantum mechanics

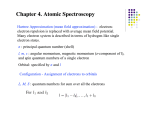

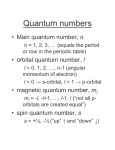

Atomic orbital

Molecular Hamiltonian

Theoretical and experimental justification for the Schrödinger equation

Hydrogen atom

Electron configuration