Fundamentals of Light Microscopy and Electronic Imaging

Diffraction topography wikipedia , lookup

Dispersion staining wikipedia , lookup

Phase-contrast X-ray imaging wikipedia , lookup

Ultraviolet–visible spectroscopy wikipedia , lookup

Anti-reflective coating wikipedia , lookup

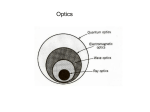

Lens (optics) wikipedia , lookup

Optical coherence tomography wikipedia , lookup

Retroreflector wikipedia , lookup

Surface plasmon resonance microscopy wikipedia , lookup

Nonlinear optics wikipedia , lookup

Image intensifier wikipedia , lookup

Nonimaging optics wikipedia , lookup

Night vision device wikipedia , lookup

Interferometry wikipedia , lookup

Image stabilization wikipedia , lookup

Super-resolution microscopy wikipedia , lookup

Optical aberration wikipedia , lookup