Introduction to Applied Statistics

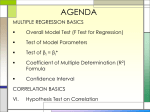

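Chapter 5: Regression Analysis Part 1: Simple Linear Regression

Regression analysis: building models that characterize the relationship between a dependent variable and one (simple regression) or more (multiple regression) independent variables, all of which are numerical. For example:

$$\text{Sales} = a + b\cdot\text{Price} + c\cdot\text{Coupons} + d\cdot\text{Advertising} + e\cdot\text{Price}\cdot\text{Advertising}$$

Regression can be applied to cross-sectional data or to time series data (forecasting).

Simple linear regression: a single independent variable and a linear relationship.

SLR model: $Y = \beta_0 + \beta_1 X + \varepsilon$, where $\beta_0$ is the intercept, $\beta_1$ the slope, and $\varepsilon$ the error.

[Figure: the regression line $E(Y \mid X)$ with the distribution $f(Y \mid X)$ of $Y$ at each value of $X$.]

Error terms: $\varepsilon_i = Y_i - \beta_0 - \beta_1 X_i$.

Estimation of the regression line:
- True regression line (unknown): $Y = \beta_0 + \beta_1 X + \varepsilon$
- Estimated regression line: $\hat{Y} = b_0 + b_1 X$
- Observable errors (residuals): $e_i = Y_i - b_0 - b_1 X_i$

Least squares regression chooses $b_0$ and $b_1$ to

$$\text{minimize} \sum_{i=1}^{n} \left(Y_i - [b_0 + b_1 X_i]\right)^2,$$

which gives

$$b_1 = \frac{\sum_{i=1}^{n} X_i Y_i - n\bar{X}\bar{Y}}{\sum_{i=1}^{n} X_i^2 - n\bar{X}^2}, \qquad b_0 = \bar{Y} - b_1 \bar{X}.$$

Excel trendlines: construct a scatter diagram, then either select Chart > Add Trendline (method 1) or select the data series and right-click it (method 2). For example, with the trendline $\hat{Y} = 0.0854X - 108.59$, the prediction at $X = 6000$ is $\hat{Y}_{6000} = 0.0854(6000) - 108.59 = 403.81$.

Without regression, the best estimate for $Y$ is its mean $\bar{Y}$, independent of the value of $X$; each deviation $Y_i - \bar{Y}$ is unexplained variation. A measure of total variation is $SST = \sum (Y_i - \bar{Y})^2$.

With regression, the fitted line $\hat{Y} = b_0 + b_1 X$ splits the deviation of each observed value $Y_i$ from $\bar{Y}$ into the variation explained by regression, $\hat{Y}_i - \bar{Y}$, and the variation still unexplained after regression, $Y_i - \hat{Y}_i$, where $\hat{Y}_i$ is the fitted value.

Sums of squares:

$$SST = \sum (Y_i - \bar{Y})^2 = \sum (\hat{Y}_i - \bar{Y})^2 + \sum (Y_i - \hat{Y}_i)^2 = SSR + SSE$$

where SSR is the explained variation and SSE the unexplained variation.

Coefficient of determination:

$$R^2 = \frac{SSR}{SST} = \frac{SST - SSE}{SST} = 1 - \frac{SSE}{SST}$$

$R^2$ is the proportion of the variation in $Y$ explained by the independent variable (the regression model), and $0 \le R^2 \le 1$.

Adjusted $R^2$ incorporates the sample size and the number of explanatory variables (in multiple regression models), which makes it useful for comparing models with different numbers of explanatory variables.
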
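The least-squares formulas and the sums-of-squares decomposition can be checked numerically. The Python sketch below is a minimal illustration under stated assumptions, not the course's own method: the function names `fit_simple_regression`, `predict`, and `sums_of_squares` and the five-point data set are hypothetical. It computes $b_0$ and $b_1$ with the computational formula for the slope, then SST, SSR, SSE, and $R^2 = SSR/SST$, and reproduces the trendline prediction at $X = 6000$.

```python
def fit_simple_regression(xs, ys):
    """Least-squares estimates (b0, b1) for Yhat = b0 + b1*X, using
    b1 = (sum XY - n*Xbar*Ybar) / (sum X^2 - n*Xbar^2) and b0 = Ybar - b1*Xbar."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    num = sum(x * y for x, y in zip(xs, ys)) - n * x_bar * y_bar
    den = sum(x * x for x in xs) - n * x_bar ** 2
    if den == 0:
        raise ValueError("all X values are equal, so the slope is undefined")
    b1 = num / den
    b0 = y_bar - b1 * x_bar
    return b0, b1


def predict(b0, b1, x):
    """Fitted value Yhat = b0 + b1 * x."""
    return b0 + b1 * x


def sums_of_squares(xs, ys, b0, b1):
    """Return (SST, SSR, SSE); for a least-squares fit, SST = SSR + SSE."""
    y_bar = sum(ys) / len(ys)
    fitted = [predict(b0, b1, x) for x in xs]
    sst = sum((y - y_bar) ** 2 for y in ys)               # total variation
    ssr = sum((f - y_bar) ** 2 for f in fitted)           # explained by regression
    sse = sum((y - f) ** 2 for y, f in zip(ys, fitted))   # unexplained (residual)
    return sst, ssr, sse


if __name__ == "__main__":
    xs, ys = [1, 2, 3, 4, 5], [2, 4, 5, 4, 5]   # hypothetical data
    b0, b1 = fit_simple_regression(xs, ys)
    sst, ssr, sse = sums_of_squares(xs, ys, b0, b1)
    print(f"b0={b0:.2f} b1={b1:.2f}")                          # b0=2.20 b1=0.60
    print(f"SST={sst:.2f} SSR={ssr:.2f} SSE={sse:.2f} R2={ssr / sst:.2f}")
    # SST=6.00 SSR=3.60 SSE=2.40 R2=0.60
    # The trendline example from the text: Yhat at X = 6000
    print(f"{predict(-108.59, 0.0854, 6000):.2f}")             # 403.81
```

In this run SSR + SSE equals SST (3.60 + 2.40 = 6.00), as the decomposition requires for a least-squares line with an intercept.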
For simple linear regression (one explanatory variable), adjusted $R^2$ is

$$R^2_{adj} = 1 - (1 - R^2)\frac{n - 1}{n - 2}$$

With $k$ explanatory variables, the denominator $n - 2$ becomes $n - k - 1$.

Correlation coefficient: the sample correlation coefficient is $R = \pm\sqrt{R^2}$, taking the sign of the slope $b_1$. Its properties: $-1 \le R \le 1$; $R = 1$ indicates perfect positive correlation, $R = -1$ perfect negative correlation, and $R = 0$ no linear correlation.

Standard error of the estimate: $MSE = SSE/(n - 2)$ is an unbiased estimate of the variance of the errors about the regression line, and the standard error of the estimate,

$$S_{YX} = \sqrt{\frac{SSE}{n - 2}},$$

measures the spread of the data about the line.

Confidence bands are analogous to confidence intervals, but they depend on the specific value of the independent variable:

$$\hat{Y}_i \pm t_{\alpha/2,\,n-2}\, S_{YX} \sqrt{h_i}, \qquad h_i = \frac{1}{n} + \frac{(X_i - \bar{X})^2}{\sum_{j=1}^{n} (X_j - \bar{X})^2}$$

Because $h_i$ grows with $(X_i - \bar{X})^2$, the band is narrowest at $X = \bar{X}$ and widens away from it.

Regression as ANOVA: to test the significance of regression, use $H_0: \beta_1 = 0$ versus $H_1: \beta_1 \ne 0$. Since $SST = SSR + SSE$, a true null hypothesis means $X$ explains none of the variation in $Y$, so SSR should be near 0 and SST close to SSE. With $MSR = SSR/1$ (the variance explained by regression), the test statistic is $F = MSR/MSE$. If $F$ exceeds the critical value of the F distribution with 1 and $n - 2$ degrees of freedom, reject $H_0$: the slope is likely nonzero, so the regression is significant.

t-test for significance of regression:

$$t = \frac{b_1 - \beta_1}{S_{YX} \big/ \sqrt{\sum_{i=1}^{n} (X_i - \bar{X})^2}}$$

with $n - 2$ degrees of freedom. This tests $H_0$: slope $= \beta_1$ against $H_1$: slope $\ne \beta_1$.

Excel regression tool: Excel menu > Tools > Data Analysis > Regression. Enter the input variable ranges, check the appropriate boxes, and select output options.

Regression output: the report includes the correlation coefficient, $S_{YX}$, $b_0$, $b_1$, the t-test for the slope, the p-value for the significance of regression, and a confidence interval for the slope.

Residuals: standard residuals are residuals divided by their standard error, which expresses them in units independent of the units of the data.

Assumptions underlying regression:
- Linearity.
- Normally distributed errors for each $X$, with mean 0 and constant variance. Examine a histogram of standardized residuals or use goodness-of-fit tests.
- Homoscedasticity: constant variance about the regression line for all values of the independent variable. Check with a scatter diagram of the data or the residual plot, looking for differences in variance at different values of $X$.
- No autocorrelation.

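The inference quantities for simple regression (adjusted $R^2$, the signed correlation, $S_{YX}$, the F and t statistics, standardized residuals, and the confidence band) can be sketched in plain Python on a small hypothetical data set. The names `fit_and_summarize`, `slope_t_stat`, and `confidence_band` are illustrative only, not part of Excel or PHStat. Two assumptions are worth flagging: the critical value $t_{\alpha/2,n-2}$ is passed in by the caller because Python's standard library has no t quantile function, and residuals are standardized as $e_i / S_{YX}$, which is one common convention; other tools may use a different denominator.

```python
import math


def fit_and_summarize(xs, ys):
    """Fit Yhat = b0 + b1*X by least squares and return SLR summary statistics.

    Assumes at least 3 points, X values that are not all equal, and data that
    do not lie exactly on a line (otherwise S_YX = 0 and F is undefined).
    """
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length sequences with at least 3 points")
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)          # sum of (X_i - Xbar)^2
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b1 = sxy / sxx          # equals (sum XY - n Xbar Ybar) / (sum X^2 - n Xbar^2)
    b0 = y_bar - b1 * x_bar
    residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]
    sse = sum(e * e for e in residuals)
    sst = sum((y - y_bar) ** 2 for y in ys)
    ssr = sst - sse
    r2 = ssr / sst
    mse = sse / (n - 2)     # unbiased estimate of the error variance
    s_yx = math.sqrt(mse)   # standard error of the estimate
    return {
        "n": n, "x_bar": x_bar, "sxx": sxx, "b0": b0, "b1": b1,
        "r2": r2,
        "r2_adj": 1 - (1 - r2) * (n - 1) / (n - 2),          # one explanatory variable
        "r": math.copysign(math.sqrt(max(r2, 0.0)), b1),     # sign follows the slope
        "s_yx": s_yx,
        "f": (ssr / 1) / mse,                                # MSR / MSE, df = (1, n - 2)
        "std_residuals": [e / s_yx for e in residuals],      # convention: e_i / S_YX
    }


def slope_t_stat(stats, beta1_null=0.0):
    """t = (b1 - beta1_null) / (S_YX / sqrt(sum (X_i - Xbar)^2)), with n - 2 df."""
    return (stats["b1"] - beta1_null) / (stats["s_yx"] / math.sqrt(stats["sxx"]))


def confidence_band(stats, x, t_crit):
    """Return (lower, upper) = Yhat +/- t_crit * S_YX * sqrt(h) at X = x.

    t_crit is t_{alpha/2, n-2} and must be supplied by the caller (e.g. from a
    t table); Python's standard library has no t-distribution quantile function.
    """
    h = 1 / stats["n"] + (x - stats["x_bar"]) ** 2 / stats["sxx"]
    y_hat = stats["b0"] + stats["b1"] * x
    half_width = t_crit * stats["s_yx"] * math.sqrt(h)
    return y_hat - half_width, y_hat + half_width


if __name__ == "__main__":
    xs, ys = [1, 2, 3, 4, 5], [2, 4, 5, 4, 5]   # hypothetical data
    s = fit_and_summarize(xs, ys)
    t = slope_t_stat(s)
    print(f"R2_adj={s['r2_adj']:.3f} S_YX={s['s_yx']:.3f} F={s['f']:.2f} t={t:.3f}")
    # R2_adj=0.467 S_YX=0.894 F=4.50 t=2.121
    lo, hi = confidence_band(s, 3, 3.182)       # 3.182 = t_{0.025, 3} from a t table
    print(f"95% band at X=3: ({lo:.3f}, {hi:.3f})")   # 95% band at X=3: (2.727, 5.273)
```

For $H_0: \beta_1 = 0$, $t^2 = F$ in simple regression (here $2.121^2 \approx 4.50$), so the ANOVA F-test and the slope t-test reach the same conclusion.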
Under the no-autocorrelation assumption, residuals should be independent for each value of the independent variable. This is especially important when the independent variable is time, as in forecasting models.

[Figures: residual plot; histogram of residuals; evaluating homoscedasticity, with residual patterns labeled "OK" and "Heteroscedastic".]

Autocorrelation: the Durbin-Watson statistic is

$$D = \frac{\sum_{i=2}^{n} (e_i - e_{i-1})^2}{\sum_{i=1}^{n} e_i^2}$$

As a rule of thumb, $D < 1$ suggests positive autocorrelation, $1.5 < D < 2.5$ suggests no autocorrelation, and $D > 2.5$ suggests negative autocorrelation. The PHStat tool calculates this statistic.

Regression and investment risk: systematic risk is the variation in a stock's price explained by the market, and it is measured by beta.
- Beta = 1: the stock moves in line with the market.
- Beta < 1: the stock is less volatile than the market.
- Beta > 1: the stock is more volatile than the market.

Systematic risk (beta): beta is the slope of the regression line of the stock's returns on the market's returns.
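A minimal sketch of the Durbin-Watson statistic and of beta as a regression slope follows. The function names, the residual series, and the return series are hypothetical; `interpret_dw` simply encodes the rule-of-thumb thresholds for $D$ and reports any value outside those ranges as inconclusive. Residuals must be passed in time order, since $D$ compares each residual with the previous one.

```python
def durbin_watson(residuals):
    """D = sum_{i=2..n} (e_i - e_{i-1})^2 / sum_{i=1..n} e_i^2 (residuals in time order)."""
    if len(residuals) < 2:
        raise ValueError("need at least two residuals")
    num = sum((residuals[i] - residuals[i - 1]) ** 2 for i in range(1, len(residuals)))
    den = sum(e * e for e in residuals)
    return num / den


def interpret_dw(d):
    """Rule-of-thumb reading of D; values outside the quoted ranges are inconclusive."""
    if d < 1:
        return "positive autocorrelation suggested"
    if 1.5 < d < 2.5:
        return "no autocorrelation suggested"
    if d > 2.5:
        return "negative autocorrelation suggested"
    return "inconclusive"


def beta(market_returns, stock_returns):
    """Least-squares slope of stock returns regressed on market returns."""
    n = len(market_returns)
    m_bar = sum(market_returns) / n
    s_bar = sum(stock_returns) / n
    sxy = sum((m - m_bar) * (s - s_bar) for m, s in zip(market_returns, stock_returns))
    sxx = sum((m - m_bar) ** 2 for m in market_returns)
    return sxy / sxx


if __name__ == "__main__":
    e = [-0.8, 0.6, 1.0, -0.6, -0.2]            # hypothetical residuals, in time order
    d = durbin_watson(e)
    print(f"D = {d:.3f}: {interpret_dw(d)}")   # D = 2.017: no autocorrelation suggested
    market = [0.01, -0.02, 0.03, 0.00]           # hypothetical market returns
    stock = [0.015, -0.03, 0.045, 0.00]          # hypothetical stock: 1.5x the market
    print(f"beta = {beta(market, stock):.2f}")   # beta = 1.50
```

The hypothetical stock's beta of 1.50 places it in the Beta > 1 category, more volatile than the market.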