Lecture 28: Eigenvalues - Harvard Mathematics Department

... Proof. The pattern, where all the entries are in the diagonal leads to a term (A11 − λ) · (A22 − λ)...(Ann − λ) which is (−λn ) + (A11 + ... + Ann )(−λ)n−1 + ... The rest of this as well as the other patterns only give us terms which are of order λn−2 or smaller. How many eigenvalues do we have? For ...

... Proof. The pattern, where all the entries are in the diagonal leads to a term (A11 − λ) · (A22 − λ)...(Ann − λ) which is (−λn ) + (A11 + ... + Ann )(−λ)n−1 + ... The rest of this as well as the other patterns only give us terms which are of order λn−2 or smaller. How many eigenvalues do we have? For ...

Properties of the Trace and Matrix Derivatives

... Thus, we have a set of vectors W that, when transformed by A, are still orthogonal to v, so if we have an original eigenvector v of A, then a simple inductive argument shows that there is an orthonormal set of eigenvectors. To see that there is at least one eigenvector, consider the characteristic p ...

... Thus, we have a set of vectors W that, when transformed by A, are still orthogonal to v, so if we have an original eigenvector v of A, then a simple inductive argument shows that there is an orthonormal set of eigenvectors. To see that there is at least one eigenvector, consider the characteristic p ...

solution of equation ax + xb = c by inversion of an m × m or n × n matrix

... N × N real matrix. A familiar example occurs in the Lyapunov theory of stability [1], [2], [3] with B = AT . Is also arises in the theory of structures [4]. Using the notation P × Q to denote the Kronecker product (Pij Q) (see [5]) in which each element of P is multipled by Q, we find that the equat ...

... N × N real matrix. A familiar example occurs in the Lyapunov theory of stability [1], [2], [3] with B = AT . Is also arises in the theory of structures [4]. Using the notation P × Q to denote the Kronecker product (Pij Q) (see [5]) in which each element of P is multipled by Q, we find that the equat ...

Matrix Analysis

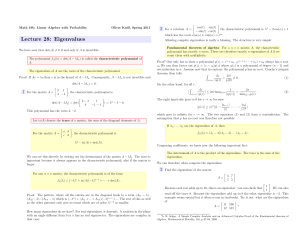

... The characteristic polynomial of An×n is p(λ) = det (A − λI). The degree of p(λ) is n, and the leading term in p(λ) is (−1)nλn. The characteristic equation for A is p(λ) = 0. The eigenvalues of A are the solutions of the characteristic equation or, equivalently, the roots of the characteristic polyn ...

... The characteristic polynomial of An×n is p(λ) = det (A − λI). The degree of p(λ) is n, and the leading term in p(λ) is (−1)nλn. The characteristic equation for A is p(λ) = 0. The eigenvalues of A are the solutions of the characteristic equation or, equivalently, the roots of the characteristic polyn ...

ENGG2013 Lecture 17

... • By definition a matrix M is diagonalizable if P–1 M P = D for some invertible matrix P, and diagonal matrix D. or equivalently, ...

... • By definition a matrix M is diagonalizable if P–1 M P = D for some invertible matrix P, and diagonal matrix D. or equivalently, ...

Mathematica (9) Mathematica can solve systems of linear equations

... Mathematica can also manipulate matrices. To define a matrix, we make a list of lists where the elements of the “outer” list are the columns of the “inner” list of row coefficients. When defining a matrix you must use this format or Mathematica will not know what it is. However, you can display the ...

... Mathematica can also manipulate matrices. To define a matrix, we make a list of lists where the elements of the “outer” list are the columns of the “inner” list of row coefficients. When defining a matrix you must use this format or Mathematica will not know what it is. However, you can display the ...

LOYOLA COLLEGE (AUTONOMOUS), CHENNAI – 600 034

... 3. Define rank and nullity of a vector space homomorphism T: U V. 4. Give a basis for the vector space F[x] of all polynomials of degree at most n. 5. If V is an inner product space, show that u, v w u, v u, w . 6. Define regular and singular linear transformation. 7. Give an example ...

... 3. Define rank and nullity of a vector space homomorphism T: U V. 4. Give a basis for the vector space F[x] of all polynomials of degree at most n. 5. If V is an inner product space, show that u, v w u, v u, w . 6. Define regular and singular linear transformation. 7. Give an example ...

The Random Matrix Technique of Ghosts and Shadows

... specifically β as a knob to turn down the randomness, e.g. Airy Kernel –d2/dx2+x+(2/β½)dW White Noise ...

... specifically β as a knob to turn down the randomness, e.g. Airy Kernel –d2/dx2+x+(2/β½)dW White Noise ...

Notes

... amount that multiplication by A can stretch a vector. We can also still interpret κ(A) in terms of the distance to singularity – or, at least, the distance to rank deficiency. Of course, the actual sensitivity of least squares problems to perturbation depends on the angle between the right hand side ...

... amount that multiplication by A can stretch a vector. We can also still interpret κ(A) in terms of the distance to singularity – or, at least, the distance to rank deficiency. Of course, the actual sensitivity of least squares problems to perturbation depends on the angle between the right hand side ...

homework 11

... This is the same as saying that all nonzero vectors are eigenvectors of the identity matrix, with eigenvalue 1. (Note that we used axiom 8 in our calculations). 8.1 #13 We wish to show that the vector P −1~v is an eigenvector of the matrix P −1 AP , with the same eigenvalue λ. (P −1 AP )(P −1~v ) = ...

... This is the same as saying that all nonzero vectors are eigenvectors of the identity matrix, with eigenvalue 1. (Note that we used axiom 8 in our calculations). 8.1 #13 We wish to show that the vector P −1~v is an eigenvector of the matrix P −1 AP , with the same eigenvalue λ. (P −1 AP )(P −1~v ) = ...

Applications of eigenvalues

... where Λ = diag(1, λ2 , λ3 , . . .), |λi | ≥ |λi+1 |, and Λ̃ = diag(0, λ2 , λ3 , . . .). In most reasonable operator norms, |Λ̃|k = |λ2 |k , and so a great deal of the literature on convergence of Markov chains focuses on 1 − |λ2 |, called the spectral gap. But note that this bound does not depend on ...

... where Λ = diag(1, λ2 , λ3 , . . .), |λi | ≥ |λi+1 |, and Λ̃ = diag(0, λ2 , λ3 , . . .). In most reasonable operator norms, |Λ̃|k = |λ2 |k , and so a great deal of the literature on convergence of Markov chains focuses on 1 − |λ2 |, called the spectral gap. But note that this bound does not depend on ...

Jordan normal form

In linear algebra, a Jordan normal form (often called Jordan canonical form)of a linear operator on a finite-dimensional vector space is an upper triangular matrix of a particular form called a Jordan matrix, representing the operator with respect to some basis. Such matrix has each non-zero off-diagonal entry equal to 1, immediately above the main diagonal (on the superdiagonal), and with identical diagonal entries to the left and below them. If the vector space is over a field K, then a basis with respect to which the matrix has the required form exists if and only if all eigenvalues of the matrix lie in K, or equivalently if the characteristic polynomial of the operator splits into linear factors over K. This condition is always satisfied if K is the field of complex numbers. The diagonal entries of the normal form are the eigenvalues of the operator, with the number of times each one occurs being given by its algebraic multiplicity.If the operator is originally given by a square matrix M, then its Jordan normal form is also called the Jordan normal form of M. Any square matrix has a Jordan normal form if the field of coefficients is extended to one containing all the eigenvalues of the matrix. In spite of its name, the normal form for a given M is not entirely unique, as it is a block diagonal matrix formed of Jordan blocks, the order of which is not fixed; it is conventional to group blocks for the same eigenvalue together, but no ordering is imposed among the eigenvalues, nor among the blocks for a given eigenvalue, although the latter could for instance be ordered by weakly decreasing size.The Jordan–Chevalley decomposition is particularly simple with respect to a basis for which the operator takes its Jordan normal form. The diagonal form for diagonalizable matrices, for instance normal matrices, is a special case of the Jordan normal form.The Jordan normal form is named after Camille Jordan.