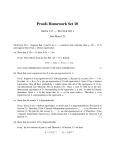

Math 60 – Linear Algebra

Solutions to Homework 5

8.1 #7a We compute the determinant of $A - \lambda I$ by expanding along the first row:

$$\det\begin{pmatrix} 6-\lambda & -24 & -4 \\ 2 & -10-\lambda & -2 \\ 1 & 4 & 1-\lambda \end{pmatrix} = (6-\lambda)\big[(-10-\lambda)(1-\lambda)+8\big] + 24\big[2-2\lambda+2\big] - 4\big[8+10+\lambda\big]$$

$$= (6-\lambda)(\lambda^2+9\lambda-2) + (-48\lambda+96) + (-4\lambda-72) = -\lambda^3-3\lambda^2+4\lambda+12 = 0.$$

Factoring, we get $-(\lambda-2)(\lambda+2)(\lambda+3) = 0$. So the eigenvalues of this matrix are $2$, $-2$ and $-3$. Now we find the eigenvectors.
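The expansion can be double-checked mechanically. The Python sketch below is our own addition, and the helper names `det3`, `char_poly` and `expanded` are illustrative rather than from the course. It evaluates $\det(A - \lambda I)$ directly from the matrix and compares the result with the expanded cubic $-\lambda^3 - 3\lambda^2 + 4\lambda + 12$. Two cubics that agree at more than three points are the same polynomial. Because the search finds three integer roots, those roots are all of the eigenvalues.

```python
# Matrix A from 8.1 #7a (read off from det(A - λI) above).
A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def char_poly(lam):
    """Evaluate det(A - lam*I) directly from the matrix."""
    shifted = [[A[i][j] - (lam if i == j else 0) for j in range(3)]
               for i in range(3)]
    return det3(shifted)


def expanded(lam):
    """The expanded form found by hand: -λ^3 - 3λ^2 + 4λ + 12."""
    return -lam**3 - 3 * lam**2 + 4 * lam + 12


# Agreement at more than 3 points means the two cubics are identical.
assert all(char_poly(t) == expanded(t) for t in range(-5, 6))

# Integer roots in a small window: these are the eigenvalues.
roots = [t for t in range(-5, 6) if char_poly(t) == 0]
print(roots)  # [-3, -2, 2]
```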

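$\lambda = 2$: We must find the solutions to the homogeneous system of linear equations $(A - 2I)\vec{x} = \vec{0}$.

$$\left(\begin{array}{ccc|c} 4 & -24 & -4 & 0 \\ 2 & -12 & -2 & 0 \\ 1 & 4 & -1 & 0 \end{array}\right) \longrightarrow \left(\begin{array}{ccc|c} 1 & -6 & -1 & 0 \\ 1 & -6 & -1 & 0 \\ 1 & 4 & -1 & 0 \end{array}\right) \longrightarrow \left(\begin{array}{ccc|c} 1 & -6 & -1 & 0 \\ 0 & 0 & 0 & 0 \\ 0 & 10 & 0 & 0 \end{array}\right) \longrightarrow \left(\begin{array}{ccc|c} 1 & 0 & -1 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 0 & 0 \end{array}\right)$$

So the eigenvectors of $A$ with eigenvalue 2 are all vectors of the form $\begin{pmatrix} r \\ 0 \\ r \end{pmatrix} = r\begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix}$ with $r \neq 0$. In other words, they are all nonzero multiples of $\begin{pmatrix} 1 \\ 0 \\ 1 \end{pmatrix}$.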
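The row reduction for $\lambda = 2$ can be reproduced with a small Gauss–Jordan routine. The sketch below is ours: `rref` is a hypothetical helper, and it uses `fractions.Fraction` so that no rounding occurs. It operates on the coefficient matrix only, because the zero column of the augmented matrix never changes. After reducing, it reads off the eigenvector and confirms that $A\vec v = 2\vec v$.

```python
from fractions import Fraction

A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]


def rref(M):
    """Reduced row echelon form of M, computed with exact fractions."""
    R = [[Fraction(x) for x in row] for row in M]
    rows, cols = len(R), len(R[0])
    pivot_row = 0
    for c in range(cols):
        # Look for a row at or below pivot_row with a nonzero entry in column c.
        p = next((r for r in range(pivot_row, rows) if R[r][c] != 0), None)
        if p is None:
            continue  # no pivot in this column: its variable is free
        R[pivot_row], R[p] = R[p], R[pivot_row]
        pivot = R[pivot_row][c]
        R[pivot_row] = [x / pivot for x in R[pivot_row]]  # scale pivot to 1
        for r in range(rows):
            if r != pivot_row and R[r][c] != 0:
                f = R[r][c]
                R[r] = [a - f * b for a, b in zip(R[r], R[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return R


# Coefficient matrix of (A - 2I)x = 0.
A_minus_2I = [[A[i][j] - (2 if i == j else 0) for j in range(3)] for i in range(3)]
R = rref(A_minus_2I)
assert R == [[1, 0, -1], [0, 1, 0], [0, 0, 0]]

# Free variable x3 = r gives x1 = r, x2 = 0; take r = 1 and confirm Av = 2v.
v = [1, 0, 1]
Av = [sum(a * x for a, x in zip(row, v)) for row in A]
assert Av == [2 * x for x in v]
```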

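$\lambda = -2$: Again, we find the solutions to the homogeneous system of equations $(A + 2I)\vec{x} = \vec{0}$.

$$\left(\begin{array}{ccc|c} 8 & -24 & -4 & 0 \\ 2 & -8 & -2 & 0 \\ 1 & 4 & 3 & 0 \end{array}\right) \longrightarrow \cdots \longrightarrow \left(\begin{array}{ccc|c} 1 & 0 & 1 & 0 \\ 0 & 1 & 1/2 & 0 \\ 0 & 0 & 0 & 0 \end{array}\right)$$

So the eigenvectors of $A$ with eigenvalue $-2$ are all nonzero multiples of $\begin{pmatrix} -1 \\ -1/2 \\ 1 \end{pmatrix}$.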
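A direct check of the $\lambda = -2$ eigenvector: the sketch below multiplies $A$ by $(-1, -1/2, 1)$ using exact fractions and compares the product with $-2$ times the vector. It is an illustrative verification only, not part of the original solution.

```python
from fractions import Fraction

A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]

# Eigenvector read off from the reduced system: x1 = -x3, x2 = -x3/2, with x3 = 1.
v = [Fraction(-1), Fraction(-1, 2), Fraction(1)]

# Matrix-vector product Av, computed row by row.
Av = [sum(a * x for a, x in zip(row, v)) for row in A]
assert Av == [-2 * x for x in v]
```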

$\lambda = -3$: A similar computation shows that the associated eigenvectors are all nonzero multiples of $\begin{pmatrix} -4 \\ -2 \\ 3 \end{pmatrix}$.
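For the "similar computation" at $\lambda = -3$, a different shortcut is available for 3×3 matrices, and we substitute it here. The solutions themselves use row reduction, not this method. Since $-3$ is a simple root of the characteristic polynomial, $A + 3I$ has rank 2. Its null space is therefore the line orthogonal to two independent rows, so the cross product of those rows spans it. The `cross` helper is ours. The sketch divides out the common factor and checks the result against both $A + 3I$ and $A$.

```python
from math import gcd

A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]
lam = -3

# Coefficient matrix of (A + 3I)x = 0.
M = [[A[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]


def cross(u, w):
    """Cross product of two vectors in R^3."""
    return [u[1] * w[2] - u[2] * w[1],
            u[2] * w[0] - u[0] * w[2],
            u[0] * w[1] - u[1] * w[0]]


# Rows 2 and 3 of M are not proportional and rank M = 2, so their cross
# product spans the null space of M.
n = cross(M[1], M[2])
g = gcd(gcd(n[0], n[1]), n[2])
v = [x // g for x in n]  # divide out the common factor
print(v)  # [-4, -2, 3]

assert all(sum(a * x for a, x in zip(row, v)) == 0 for row in M)
assert [sum(a * x for a, x in zip(row, v)) for row in A] == [lam * x for x in v]
```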

8.1 #12 Suppose $\vec{v} \in V$ is a nonzero vector (nonzero so that it satisfies the first criterion in the definition of an eigenvector). Then $\mathrm{id}_V(\vec{v}) = \vec{v} = 1 \cdot \vec{v}$. Therefore, $\vec{v}$ is an eigenvector of $\mathrm{id}_V$ with eigenvalue 1. In other words, every nonzero vector is an eigenvector of the identity transformation, with eigenvalue 1. (Note that we used axiom 8 in our calculations.)

8.1 #13 We wish to show that the vector $P^{-1}\vec{v}$ is an eigenvector of the matrix $P^{-1}AP$, with the same eigenvalue $\lambda$. First, $P^{-1}\vec{v} \neq \vec{0}$, because $\vec{v} \neq \vec{0}$ and $P^{-1}$ is invertible. Next, $(P^{-1}AP)(P^{-1}\vec{v}) = (P^{-1}A)(PP^{-1})\vec{v}$ (by associativity of matrix multiplication) $= (P^{-1}A)I_n\vec{v}$ (by the defining property of the inverse) $= (P^{-1}A)\vec{v}$ (since the identity matrix is a multiplicative identity element among matrices) $= P^{-1}(A\vec{v})$ (again by associativity) $= P^{-1}(\lambda\vec{v})$ (since we're given that $\vec{v}$ is an eigenvector of $A$ with eigenvalue $\lambda$) $= \lambda P^{-1}\vec{v}$ (since $\lambda$ is a scalar). So we have proved that

$$(P^{-1}AP)(P^{-1}\vec{v}) = \lambda(P^{-1}\vec{v}).$$
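A concrete instance of #13 can make the algebra tangible. The sketch below is illustrative only. It uses $A$ and the eigenpair $\lambda = 2$, $\vec v = (1, 0, 1)$ from #7a, together with a hypothetical invertible $P$ (an elementary row-addition matrix whose inverse is known exactly). It forms $P^{-1}AP$ and confirms that $P^{-1}\vec v$ is scaled by the same $\lambda$.

```python
A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]
v, lam = [1, 0, 1], 2  # eigenpair of A from 8.1 #7a

# Hypothetical invertible P (an elementary matrix) and its inverse, for illustration.
P = [[1, 0, 0],
     [1, 1, 0],
     [0, 0, 1]]
P_inv = [[1, 0, 0],
         [-1, 1, 0],
         [0, 0, 1]]


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matvec(X, u):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, u)) for row in X]


I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
assert matmul(P, P_inv) == I3  # P_inv really is the inverse of P

B = matmul(matmul(P_inv, A), P)  # the similar matrix P^{-1} A P
u = matvec(P_inv, v)             # the transformed vector P^{-1} v
assert matvec(B, u) == [lam * x for x in u]
```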

8.1 #15a $A^2\vec{v} = (AA)\vec{v} = A(A\vec{v})$ (by associativity) $= A(\lambda\vec{v})$ (since we're given that $\vec{v}$ is an eigenvector of $A$ with eigenvalue $\lambda$) $= \lambda(A\vec{v})$ (since $\lambda$ is a scalar) $= \lambda(\lambda\vec{v})$ (again using the given fact that $\vec{v}$ is an eigenvector of $A$ with eigenvalue $\lambda$) $= \lambda^2\vec{v}$ (using the associativity of scalar multiplication axiom), so that $\vec{v}$ is an eigenvector of $A^2$ with associated eigenvalue $\lambda^2$.

8.1 #15b Using a similar technique, but iterated $k$ times, we can show that $A^k\vec{v} = \lambda(A^{k-1}\vec{v}) = \lambda^2(A^{k-2}\vec{v}) = \cdots = \lambda^k\vec{v}$, so that $\vec{v}$ is an eigenvector of $A^k$ with associated eigenvalue $\lambda^k$.
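Parts a and b can be spot-checked on the matrix from #7a. The sketch below builds $A^k$ by repeated multiplication for $k = 1, \dots, 6$. For each eigenpair found above, it asserts that $A^k\vec v = \lambda^k\vec v$. The $\lambda = -2$ eigenvector is scaled by 2 to keep integer entries, which is allowed because any nonzero multiple of an eigenvector is again an eigenvector. A finite check like this illustrates the result but does not replace the induction.

```python
A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matvec(X, u):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, u)) for row in X]


# Eigenpairs from 8.1 #7a (the λ = -2 vector scaled by 2).
eigenpairs = [(2, [1, 0, 1]), (-2, [-2, -1, 2]), (-3, [-4, -2, 3])]

Ak = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]  # A^0 = I
for k in range(1, 7):
    Ak = matmul(Ak, A)  # Ak now holds A^k
    for lam, v in eigenpairs:
        assert matvec(Ak, v) == [lam ** k * x for x in v]
```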

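8.1 #15c We proved this in class! Briefly, since $A\vec{v} = \lambda\vec{v}$, we multiply both sides by $A^{-1}$ to get $\vec{v} = \lambda A^{-1}\vec{v}$. We know $\lambda \neq 0$, since otherwise this equation would give $\vec{v} = \vec{0}$, and an eigenvector is nonzero. Now we multiply by $1/\lambda$ to get $A^{-1}\vec{v} = (1/\lambda)\vec{v}$, so that $\vec{v}$ is an eigenvector of $A^{-1}$ with eigenvalue $1/\lambda$. Applying part b above to $A^{-1}$, we can show that $\vec{v}$ is an eigenvector of $(A^{-1})^k$ with eigenvalue $1/\lambda^k$.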
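Part c can be checked without computing $A^{-1}$ at all. Because $A$ is invertible (for the #7a matrix, $\det A = 12$, the constant term of its characteristic polynomial), the claim $(A^{-1})^k\vec v = \lambda^{-k}\vec v$ holds exactly when applying $A$ to $\lambda^{-k}\vec v$ a total of $k$ times returns $\vec v$. The sketch below tests that equivalent statement with exact fractions. This reformulation is our choice of check, not the argument used above.

```python
from fractions import Fraction

A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]


def matvec(X, u):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, u)) for row in X]


eigenpairs = [(2, [1, 0, 1]), (-2, [-2, -1, 2]), (-3, [-4, -2, 3])]

for lam, v in eigenpairs:
    for k in range(1, 5):
        # Candidate value of (A^{-1})^k v according to part c.
        w = [Fraction(x, lam ** k) for x in v]
        # Applying A k times must undo (A^{-1})^k and return v.
        for _ in range(k):
            w = matvec(A, w)
        assert w == v
```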

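8.3 #2a In #7a above we found three eigenvectors belonging to three distinct eigenvalues. Eigenvectors for distinct eigenvalues are linearly independent, so this matrix is diagonalizable, and

$$\begin{pmatrix} 6 & -24 & -4 \\ 2 & -10 & -2 \\ 1 & 4 & 1 \end{pmatrix} = \begin{pmatrix} 1 & -1 & -4 \\ 0 & -1/2 & -2 \\ 1 & 1 & 3 \end{pmatrix} \begin{pmatrix} 2 & 0 & 0 \\ 0 & -2 & 0 \\ 0 & 0 & -3 \end{pmatrix} \begin{pmatrix} 1 & -1 & -4 \\ 0 & -1/2 & -2 \\ 1 & 1 & 3 \end{pmatrix}^{-1}$$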
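The factorization can be verified without inverting $P$. When $P$ is invertible, $A = PDP^{-1}$ is equivalent to $AP = PD$, which says column by column that $A\vec p_i = \lambda_i\vec p_i$. The sketch below (with hypothetical helpers `matmul` and `det3`) checks that $\det P \neq 0$ and that $AP = PD$, using exact fractions for the entry $-1/2$.

```python
from fractions import Fraction

A = [[6, -24, -4],
     [2, -10, -2],
     [1, 4, 1]]
# Columns of P: eigenvectors for 2, -2, -3, in the same order as the diagonal of D.
P = [[1, -1, -4],
     [0, Fraction(-1, 2), -2],
     [1, 1, 3]]
D = [[2, 0, 0],
     [0, -2, 0],
     [0, 0, -3]]


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


assert det3(P) != 0                  # P is invertible
assert matmul(A, P) == matmul(P, D)  # hence A = P D P^{-1}
```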

8.3 #7a Let $\lambda$ be an eigenvalue of $A$ with eigenvector $\vec{v}$. From problem 15a above, $\vec{v}$ is also an eigenvector of $A^2$ with eigenvalue $\lambda^2$. Since $A^2 = A$, this gives $\lambda^2\vec{v} = A^2\vec{v} = A\vec{v} = \lambda\vec{v}$. Because $\vec{v} \neq \vec{0}$, we conclude that $\lambda^2 = \lambda$, or $\lambda(\lambda - 1) = 0$, so that $\lambda$ must equal either 0 or 1.
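As a small illustration of #7a, the sketch below takes a hypothetical 2×2 idempotent matrix (chosen by us; it does not come from the problem set). It confirms that $A^2 = A$ and then finds the roots of its characteristic polynomial $\lambda^2 - (\operatorname{tr} A)\lambda + \det A$. A quadratic has at most two roots, so an integer search that finds two of them has found them all, and they are exactly 0 and 1.

```python
A = [[1, 1],
     [0, 0]]  # hypothetical 2x2 idempotent matrix, for illustration only


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


assert matmul(A, A) == A  # A^2 = A

trace = A[0][0] + A[1][1]
det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
# For a 2x2 matrix, det(A - λI) = λ^2 - trace*λ + det.
eigenvalues = [t for t in range(-3, 4) if t * t - trace * t + det == 0]
print(eigenvalues)  # [0, 1]
```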

8.3 #7b Suppose $\vec{v}$ is a vector in $\mathbb{R}^n$. If $A\vec{v} = \vec{0}$, then $\vec{v} \in E_A(0)$; if moreover $\vec{v} \neq \vec{0}$, it is an eigenvector of $A$ with eigenvalue 0. If not, observe that $A(A\vec{v}) = A^2\vec{v}$ (by associativity of matrix multiplication) $= A\vec{v}$ (by the given fact that $A^2 = A$) $= 1 \cdot (A\vec{v})$ (by axiom 8), so that $A\vec{v}$ is an eigenvector of $A$ with eigenvalue 1. Now observe that $A(\vec{v} - A\vec{v}) = A\vec{v} - A^2\vec{v}$ (by distributivity of matrix multiplication over matrix addition) $= A\vec{v} - A\vec{v}$ (since we're given $A^2 = A$) $= \vec{0} = 0 \cdot (\vec{v} - A\vec{v})$ (since 0 times any vector is the zero vector). So $\vec{v} - A\vec{v}$ lies in $E_A(0)$, and it is an eigenvector of $A$ with eigenvalue 0 whenever it is nonzero. That proves the assertion, since $A\vec{v} + (\vec{v} - A\vec{v}) = \vec{v}$.
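The decomposition in #7b can be seen on a concrete example. The sketch below uses a hypothetical 3×3 idempotent matrix and an arbitrary vector, both our own choices. It splits $\vec v$ into $A\vec v$ and $\vec v - A\vec v$ and checks the three facts used in the argument: $A$ fixes the first piece, $A$ kills the second, and the two pieces add back to $\vec v$.

```python
A = [[1, 0, 1],
     [0, 1, 0],
     [0, 0, 0]]  # hypothetical idempotent matrix standing in for A


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


def matvec(X, u):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, u)) for row in X]


assert matmul(A, A) == A  # A^2 = A

v = [2, 3, 5]                          # an arbitrary vector
Av = matvec(A, v)                      # the piece in E_A(1)
rest = [a - b for a, b in zip(v, Av)]  # the piece in E_A(0)

assert matvec(A, Av) == Av                     # A(Av) = 1 * Av
assert matvec(A, rest) == [0, 0, 0]            # A(v - Av) = 0
assert [a + b for a, b in zip(Av, rest)] == v  # Av + (v - Av) = v
```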

8.3 #7c For $A$ to be diagonalizable, there must be a set of eigenvectors of $A$ which forms a basis for $\mathbb{R}^n$. Now suppose that $\{\vec{v}_1, \ldots, \vec{v}_k\}$ is a basis for $E_A(1)$ and $\{\vec{w}_1, \ldots, \vec{w}_l\}$ is a basis for $E_A(0)$. In particular, the $\vec{v}_i$'s and $\vec{w}_j$'s are eigenvectors of $A$ with eigenvalues 1 and 0, respectively. Now if $\vec{v} \in \mathbb{R}^n$ is any vector, we've shown that

$$A\vec{v} = a_1\vec{v}_1 + \cdots + a_k\vec{v}_k$$

for some scalars $a_i$ (since $A\vec{v} \in E_A(1)$), and

$$\vec{v} - A\vec{v} = b_1\vec{w}_1 + \cdots + b_l\vec{w}_l$$

for some scalars $b_j$ (since $\vec{v} - A\vec{v} \in E_A(0)$), and so

$$\vec{v} = a_1\vec{v}_1 + \cdots + a_k\vec{v}_k + b_1\vec{w}_1 + \cdots + b_l\vec{w}_l,$$

so that the set $\{\vec{v}_1, \ldots, \vec{v}_k, \vec{w}_1, \ldots, \vec{w}_l\}$ spans $\mathbb{R}^n$. By the contraction theorem, we can find a subset which forms a basis for $\mathbb{R}^n$. That basis would consist solely of eigenvectors of $A$, proving that $A$ is indeed diagonalizable.
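To see #7c in action, the sketch below builds an eigenbasis for a hypothetical idempotent matrix of our own choosing. For $A^2 = A$, the column space of $A$ equals $E_A(1)$. If $\vec x = A\vec y$, then $A\vec x = A^2\vec y = \vec x$, and conversely $\vec x = A\vec x$ lies in the column space. So a basis of $E_A(1)$ can be taken from the columns of $A$. The basis of $E_A(0)$ comes from solving $A\vec x = \vec 0$ by hand. The sketch places these eigenvectors as the columns of $P$ and confirms that $AP = PD$ with $P$ invertible.

```python
A = [[1, 0, 1],
     [0, 1, 0],
     [0, 0, 0]]  # hypothetical idempotent matrix standing in for A


def matmul(X, Y):
    """Product of matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]


assert matmul(A, A) == A  # A^2 = A

# Basis of E_A(1): independent columns of A (column 3 repeats column 1).
v1, v2 = [1, 0, 0], [0, 1, 0]
# Basis of E_A(0): solutions of x1 + x3 = 0, x2 = 0.
w1 = [-1, 0, 1]

# P has the eigenvectors as columns; D holds the matching eigenvalues.
P = [list(row) for row in zip(v1, v2, w1)]
D = [[1, 0, 0], [0, 1, 0], [0, 0, 0]]

# P is upper triangular with ones on the diagonal, so det P = 1 and P is invertible.
assert all(P[i][i] == 1 for i in range(3))
assert all(P[i][j] == 0 for i in range(3) for j in range(i))
assert matmul(A, P) == matmul(P, D)  # hence A = P D P^{-1}
```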