
Matrix Analysis

Basic Operations

A matrix is a rectangular array of elements arranged in horizontal rows and vertical columns, usually enclosed in brackets. A matrix is real-valued (or, simply, real) if all its elements are real numbers or real-valued functions; it is complex-valued if at least one element is a complex number or a complex-valued function. If all its elements are numbers, then a matrix is called a constant matrix.

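Matrices are designated by boldface uppercase letters. A general matrix A having r rows and c columns may be written

$$
A = \begin{bmatrix}
a_{11} & a_{12} & \cdots & a_{1c} \\
a_{21} & a_{22} & \cdots & a_{2c} \\
\vdots & \vdots &        & \vdots \\
a_{r1} & a_{r2} & \cdots & a_{rc}
\end{bmatrix}
$$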

where the elements of the matrix are double-subscripted to denote location. By convention, the row index precedes the column index; thus, $a_{25}$ represents the element of A appearing in the second row and fifth column, while $a_{31}$ represents the element appearing in the third row and first column. A matrix A may also be denoted as $[a_{ij}]$, where $a_{ij}$ denotes the general element of A appearing in the $i$th row and $j$th column.

A matrix having r rows and c columns has order (or size) "r by c," usually written $r \times c$. Two matrices are equal if they have the same order and their corresponding elements are equal.

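The transpose of a matrix A, denoted as $A^T$, is obtained by converting the rows of A into the columns of $A^T$ one at a time in sequence: the $i$th row of A becomes the $i$th column of $A^T$, so the $(i, j)$ element of $A^T$ is $a_{ji}$. If A has order $m \times n$, then $A^T$ has order $n \times m$.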
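A minimal Python sketch of order, equality, and transposition, assuming a matrix is stored as a list of rows (a representation chosen here for illustration; the text prescribes none). The helper names `order`, `transpose`, and `is_equal` are hypothetical.

```python
def order(A):
    """Order (number of rows, number of columns) of a matrix stored as a list of rows."""
    return (len(A), len(A[0]))


def transpose(A):
    """Row i of A becomes column i of the result, so transpose(A)[j][i] == A[i][j]."""
    rows, cols = order(A)
    return [[A[i][j] for i in range(rows)] for j in range(cols)]


def is_equal(A, B):
    """Equal means: same order and equal corresponding elements."""
    return order(A) == order(B) and all(
        a == b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b)
    )


A = [[1, 2, 3],
     [4, 5, 6]]                # order 2 x 3
print(order(A))                # (2, 3)
print(transpose(A))            # [[1, 4], [2, 5], [3, 6]]
print(order(transpose(A)))     # (3, 2)
print(is_equal(transpose(transpose(A)), A))   # True
```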

Vectors and Dot Products

A vector is a matrix having either one row or one column. A matrix consisting of a single row is called a row vector; a matrix having a single column is called a column vector. The dot product $A \cdot B$ of two vectors of the same order is obtained by multiplying together corresponding elements of A and B and then summing the results. The dot product is a scalar, by which we mean it is of the same general type as the elements themselves.
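A small sketch of the dot product exactly as defined here (no complex conjugation is applied, matching the definition above), assuming vectors are plain Python lists; `dot` is a hypothetical helper name.

```python
def dot(a, b):
    """Dot product of two vectors of the same order: multiply corresponding elements, then sum."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same order")
    return sum(x * y for x, y in zip(a, b))


print(dot([1, 2, 3], [4, 5, 6]))   # 1*4 + 2*5 + 3*6 = 32
print(dot([1j, 2], [3, 1j]))       # complex elements give a complex scalar: 5j
```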

Matrix Addition and Matrix Subtraction

The sum $A + B$ of two matrices $A = [a_{ij}]$ and $B = [b_{ij}]$ having the same order is the matrix obtained by adding corresponding elements of A and B. That is,

$$
A + B = [a_{ij}] + [b_{ij}] = [a_{ij} + b_{ij}].
$$

Matrix addition is both associative and commutative. Thus,

$$
A + (B + C) = (A + B) + C \quad\text{and}\quad A + B = B + A.
$$

The matrix subtraction $A - B$ is defined similarly: A and B must have the same order, and the subtractions must be performed on corresponding elements to yield the matrix $[a_{ij} - b_{ij}]$.
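A sketch of elementwise addition and subtraction under the same list-of-rows assumption, with a spot check of associativity and commutativity on small integer matrices; the function names are hypothetical.

```python
def _check_same_order(A, B):
    """Addition and subtraction are defined only for matrices of the same order."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        raise ValueError("matrices must have the same order")


def add(A, B):
    """A + B = [a_ij + b_ij]."""
    _check_same_order(A, B)
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def subtract(A, B):
    """A - B = [a_ij - b_ij]."""
    _check_same_order(A, B)
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C = [[-1, 0], [2, -3]]
print(add(A, B))                                  # [[6, 8], [10, 12]]
print(subtract(A, B))                             # [[-4, -4], [-4, -4]]
print(add(A, add(B, C)) == add(add(A, B), C))     # True (associative)
print(add(A, B) == add(B, A))                     # True (commutative)
```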

Scalar Multiplication and Matrix Multiplication

For any scalar k, the matrix kA (or, equivalently, Ak) is obtained by multiplying every element of A by the scalar k. That is, $kA = k[a_{ij}] = [k a_{ij}]$.

Let $A = [a_{ij}]$ and $B = [b_{ij}]$ have orders $r \times p$ and $p \times c$, respectively, so that the number of columns of A equals the number of rows of B. Then the product AB is defined to be the matrix $C = [c_{ij}]$ of order $r \times c$ whose elements are given by

$$
c_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}, \qquad i = 1, 2, \ldots, r; \quad j = 1, 2, \ldots, c.
$$

Each element $c_{ij}$ of AB is a dot product; it is obtained by forming the transpose of the $i$th row of A and then taking its dot product with the $j$th column of B.
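A direct transcription of the product formula, again assuming a list-of-rows representation; `matmul` is a hypothetical name. Python indexes from 0, so `i`, `j`, `k` start at 0 where the formula's indices start at 1.

```python
def matmul(A, B):
    """Product C = AB of an r x p matrix A and a p x c matrix B.

    c_ij is the dot product of row i of A with column j of B.
    """
    r, p = len(A), len(A[0])
    if len(B) != p:
        raise ValueError("number of columns of A must equal number of rows of B")
    c = len(B[0])
    return [[sum(A[i][k] * B[k][j] for k in range(p)) for j in range(c)]
            for i in range(r)]


A = [[1, 2, 3],
     [4, 5, 6]]        # 2 x 3
B = [[7, 8],
     [9, 10],
     [11, 12]]         # 3 x 2
print(matmul(A, B))    # [[58, 64], [139, 154]], of order 2 x 2
```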

Matrix multiplication is associative and distributes over addition and subtraction; in general, it is not commutative. Thus,

$$
A(BC) = (AB)C, \qquad A(B + C) = AB + AC, \qquad (B - C)A = BA - CA,
$$

but, in general, $AB \neq BA$. Also,

$$
(AB)^T = B^T A^T.
$$
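A quick numerical check of these properties on two illustrative 2 × 2 matrices chosen here (a spot check, not a proof). Note the order reversal in the transpose rule: $A^T B^T$ generally differs from $(AB)^T$.

```python
def matmul(A, B):
    """Product AB: entry (i, j) is the dot product of row i of A with column j of B."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


def transpose(A):
    """Rows of A become columns of the result."""
    return [list(col) for col in zip(*A)]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 1]]

print(matmul(A, B))    # [[2, 1], [4, 3]]
print(matmul(B, A))    # [[3, 4], [1, 2]], so AB != BA here
print(matmul(A, matmul(B, C)) == matmul(matmul(A, B), C))             # True
print(transpose(matmul(A, B)) == matmul(transpose(B), transpose(A)))  # True
print(transpose(matmul(A, B)) == matmul(transpose(A), transpose(B)))  # False for these A, B
```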

Linear Transformation

Introduction

Suppose $S = \{u_1, u_2, \ldots, u_n\}$ is a basis of a vector space V over a field K and, for $v \in V$, suppose

$$
v = a_1 u_1 + a_2 u_2 + \cdots + a_n u_n.
$$

Then the coordinate vector of v relative to the basis S, which we write as a column vector unless otherwise specified or implied, is denoted and defined by

$$
[v]_S = \begin{bmatrix} a_1 \\ a_2 \\ \vdots \\ a_n \end{bmatrix} = (a_1, a_2, \ldots, a_n)^T.
$$

The mapping $v \mapsto [v]_S$, determined by the basis S, is an isomorphism between V and the space $K^n$.
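For $V = \mathbb{R}^2$ the coordinate vector can be found by solving a 2 × 2 linear system. The sketch below uses Cramer's rule (a choice made here for brevity, not something the text prescribes) with exact `fractions.Fraction` arithmetic; `coordinates_2d` is a hypothetical name, and the basis $S = \{(1, 1), (-1, 0)\}$ is the one that appears in Example 1(b).

```python
from fractions import Fraction


def coordinates_2d(u1, u2, v):
    """[v]_S = (a1, a2) with v = a1*u1 + a2*u2, for a basis S = {u1, u2} of R^2.

    Solves the 2 x 2 system whose columns are u1 and u2 by Cramer's rule.
    """
    det = u1[0] * u2[1] - u2[0] * u1[1]
    if det == 0:
        raise ValueError("u1, u2 are not a basis of R^2")
    a1 = Fraction(v[0] * u2[1] - u2[0] * v[1], det)
    a2 = Fraction(u1[0] * v[1] - v[0] * u1[1], det)
    return (a1, a2)


S = ((1, 1), (-1, 0))
print(coordinates_2d(*S, (2, 3)))   # (Fraction(3, 1), Fraction(1, 1)): (2, 3) = 3*u1 + 1*u2
```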

Matrix Representation of a Linear Operator

Let T be a linear operator on a vector space V over a field K and suppose $S = \{u_1, u_2, \ldots, u_n\}$ is a basis of V. Now $T(u_1), \ldots, T(u_n)$ are vectors in V, and so each is a linear combination of the vectors in the basis S; say,

$$
\begin{aligned}
T(u_1) &= a_{11} u_1 + a_{12} u_2 + \cdots + a_{1n} u_n \\
T(u_2) &= a_{21} u_1 + a_{22} u_2 + \cdots + a_{2n} u_n \\
&\;\;\vdots \\
T(u_n) &= a_{n1} u_1 + a_{n2} u_2 + \cdots + a_{nn} u_n
\end{aligned}
$$

The following definition applies.

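Definition: The transpose of the above matrix of coefficients, denoted by $m_S(T)$ or $[T]_S$, is called the matrix representation of T relative to the basis S, or simply the matrix of T in the basis S; that is,

$$
[T]_S = \begin{bmatrix}
a_{11} & a_{21} & \cdots & a_{n1} \\
a_{12} & a_{22} & \cdots & a_{n2} \\
\vdots & \vdots &        & \vdots \\
a_{1n} & a_{2n} & \cdots & a_{nn}
\end{bmatrix}
$$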

(The subscript S may be omitted if the basis S is understood.)

Remark: Using the coordinate (column) vector notation, the matrix representation of T may also be written in the form

$$
m(T) = [T] = \big[\, [T(u_1)] \;\; [T(u_2)] \;\; \cdots \;\; [T(u_n)] \,\big];
$$

that is, the columns of $m(T)$ are the coordinate vectors $[T(u_1)], [T(u_2)], \ldots, [T(u_n)]$.

Example 1

(a)

Let V be the vector space of polynomials in t over $\mathbb{R}$ of degree at most 3, and let $D: V \to V$ be the differential operator defined by $D(p(t)) = d(p(t))/dt$. We compute the matrix of D in the basis $\{1, t, t^2, t^3\}$.

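$$
\begin{aligned}
D(1)   &= 0    = 0 + 0t + 0t^2 + 0t^3 \\
D(t)   &= 1    = 1 + 0t + 0t^2 + 0t^3 \\
D(t^2) &= 2t   = 0 + 2t + 0t^2 + 0t^3 \\
D(t^3) &= 3t^2 = 0 + 0t + 3t^2 + 0t^3
\end{aligned}
$$

Hence

$$
[D] = \begin{bmatrix}
0 & 1 & 0 & 0 \\
0 & 0 & 2 & 0 \\
0 & 0 & 0 & 3 \\
0 & 0 & 0 & 0
\end{bmatrix}
$$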

[Note that the coordinate vectors $[D(1)]$, $[D(t)]$, $[D(t^2)]$, and $[D(t^3)]$ are the columns, not the rows, of $[D]$.]
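A sketch that rebuilds $[D]$ by the recipe in the Remark (column j is the coordinate vector of D applied to the j-th basis polynomial), assuming a polynomial is stored as its coefficient list $[c_0, c_1, c_2, c_3]$ in the basis $\{1, t, t^2, t^3\}$. The names `differentiate` and `matrix_of` are hypothetical.

```python
def differentiate(coeffs):
    """d/dt of p(t) = c0 + c1 t + c2 t^2 + ..., stored as [c0, c1, c2, ...].

    A trailing 0 keeps the length fixed, so the result stays in the same space V.
    """
    return [(k + 1) * coeffs[k + 1] for k in range(len(coeffs) - 1)] + [0]


def matrix_of(op, dim):
    """Matrix of a linear operator in the basis {1, t, ..., t^(dim-1)}.

    Column j is the coordinate vector of op applied to the j-th basis polynomial.
    """
    basis = [[1 if i == j else 0 for i in range(dim)] for j in range(dim)]
    columns = [op(e) for e in basis]
    return [[columns[j][i] for j in range(dim)] for i in range(dim)]


D = matrix_of(differentiate, 4)
for row in D:
    print(row)
# [0, 1, 0, 0]
# [0, 0, 2, 0]
# [0, 0, 0, 3]
# [0, 0, 0, 0]

# Applying [D] to [p] gives [D(p)]: p = 5 + 4t + 3t^2 + 2t^3, p' = 4 + 6t + 6t^2
p = [5, 4, 3, 2]
print([sum(D[i][j] * p[j] for j in range(4)) for i in range(4)])   # [4, 6, 6, 0]
```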

(b)

Consider the linear operator $F: \mathbb{R}^2 \to \mathbb{R}^2$ defined by $F(x, y) = (4x - 2y,\ 2x + y)$ and the following bases of $\mathbb{R}^2$:

$$
S = \{u_1 = (1, 1),\ u_2 = (-1, 0)\} \quad\text{and}\quad E = \{e_1 = (1, 0),\ e_2 = (0, 1)\}
$$

We have

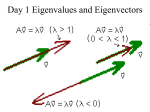
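The computation for this example survives only as an image. As an independent cross-check (not a transcription of that image), the sketch below builds $[F]_S$ and $[F]_E$ from the Remark's rule that the columns are the coordinate vectors $[F(u_1)]$ and $[F(u_2)]$, reading F as $F(x, y) = (4x - 2y, 2x + y)$. Coordinates come from Cramer's rule, and the helper names are hypothetical.

```python
from fractions import Fraction


def F(v):
    """F(x, y) = (4x - 2y, 2x + y)."""
    x, y = v
    return (4 * x - 2 * y, 2 * x + y)


def coordinates_2d(u1, u2, v):
    """Coordinates (a1, a2) of v in the basis {u1, u2} of R^2, by Cramer's rule."""
    det = u1[0] * u2[1] - u2[0] * u1[1]
    return (Fraction(v[0] * u2[1] - u2[0] * v[1], det),
            Fraction(u1[0] * v[1] - v[0] * u1[1], det))


def matrix_in_basis(op, u1, u2):
    """[op]_S with columns [op(u1)]_S and [op(u2)]_S, returned as a list of rows."""
    c1 = coordinates_2d(u1, u2, op(u1))
    c2 = coordinates_2d(u1, u2, op(u2))
    return [[c1[0], c2[0]], [c1[1], c2[1]]]


S = ((1, 1), (-1, 0))
E = ((1, 0), (0, 1))
print(matrix_in_basis(F, *S))   # the matrix [[3, -2], [1, 2]], printed as Fraction objects
print(matrix_in_basis(F, *E))   # the matrix [[4, -2], [2, 1]], printed as Fraction objects
```

Here $F(u_1) = (2, 3) = 3u_1 + u_2$ and $F(u_2) = (-4, -2) = -2u_1 + 2u_2$, which gives the two columns of $[F]_S$.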

Eigenvalues and Eigenvectors

Characteristic Equation

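Consider the problem of solving the system of two first-order linear differential equations, $du_1/dt = 7u_1 - 4u_2$ and $du_2/dt = 5u_1 - 2u_2$. In matrix notation, this system is

$$
\begin{bmatrix} u_1' \\ u_2' \end{bmatrix} = \begin{bmatrix} 7 & -4 \\ 5 & -2 \end{bmatrix} \begin{bmatrix} u_1 \\ u_2 \end{bmatrix},
$$

or equivalently,

$$
u' = Au, \qquad \text{Eq(1)}
$$

where $u' = (u_1', u_2')^T$, $A = \begin{bmatrix} 7 & -4 \\ 5 & -2 \end{bmatrix}$, and $u = (u_1, u_2)^T$. Because solutions of a single equation $u' = \lambda u$ have the form $u = \alpha e^{\lambda t}$, we are motivated to seek solutions of Eq(1) that also have the form

$$
u_1 = \alpha_1 e^{\lambda t} \quad\text{and}\quad u_2 = \alpha_2 e^{\lambda t}. \qquad \text{Eq(2)}
$$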

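Differentiating these two expressions and substituting the results in Eq(1) yields

$$
\begin{aligned}
\alpha_1 \lambda e^{\lambda t} &= 7\alpha_1 e^{\lambda t} - 4\alpha_2 e^{\lambda t} \\
\alpha_2 \lambda e^{\lambda t} &= 5\alpha_1 e^{\lambda t} - 2\alpha_2 e^{\lambda t}
\end{aligned}
\;\Longrightarrow\;
\begin{aligned}
\alpha_1 \lambda &= 7\alpha_1 - 4\alpha_2 \\
\alpha_2 \lambda &= 5\alpha_1 - 2\alpha_2
\end{aligned}
\;\Longrightarrow\;
\begin{bmatrix} 7 & -4 \\ 5 & -2 \end{bmatrix} \begin{bmatrix} \alpha_1 \\ \alpha_2 \end{bmatrix} = \lambda \begin{bmatrix} \alpha_1 \\ \alpha_2 \end{bmatrix}.
$$

In other words, solutions of Eq(1) having the form Eq(2) can be constructed provided solutions for $\lambda$ and $x = (\alpha_1, \alpha_2)^T$ in the matrix equation $Ax = \lambda x$ can be found. Clearly, $x = 0$ trivially satisfies $Ax = \lambda x$, but $x = 0$ provides no useful information concerning the solution of Eq(1). What we really need are scalars $\lambda$ and nonzero vectors x that satisfy $Ax = \lambda x$. Writing $Ax = \lambda x$ as $(A - \lambda I)x = 0$ shows that the vectors of interest are the nonzero vectors in $N(A - \lambda I)$, the nullspace of $A - \lambda I$. But $N(A - \lambda I)$ contains nonzero vectors if and only if $A - \lambda I$ is singular. Therefore, the scalars of interest are precisely the values of $\lambda$ that make $A - \lambda I$ singular or, equivalently, the $\lambda$'s for which $\det(A - \lambda I) = 0$.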

These observations motivate the definition of eigenvalues and eigenvectors.

Eigenvalues and Eigenvectors

For an $n \times n$ matrix A, scalars $\lambda$ and vectors $x_{n \times 1} \neq 0$ satisfying $Ax = \lambda x$ are called eigenvalues and eigenvectors of A, respectively, and any such pair, $(\lambda, x)$, is called an eigenpair for A. The set of distinct eigenvalues, denoted by $\sigma(A)$, is called the spectrum of A.

$$
\lambda \in \sigma(A) \iff A - \lambda I \text{ is singular} \iff \det(A - \lambda I) = 0. \qquad \text{Eq(3)}
$$

$\{x \neq 0 \mid x \in N(A - \lambda I)\}$ is the set of all eigenvectors associated with $\lambda$. From now on, $N(A - \lambda I)$ is called an eigenspace for A.

Nonzero row vectors $y^*$ such that $y^*(A - \lambda I) = 0$ are called left-hand eigenvectors for A.
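A sketch that tests candidate pairs directly against these definitions for the matrix of Eq(1), using exact `Fraction` arithmetic so that `==` is reliable. The candidates $(4/5, 1)^T$ and $(1, 1)^T$ are the eigenvectors computed later in this section; the left-hand candidates $(1, -1)$ and $(5, -4)$ were chosen here for illustration. For real entries, $y^*$ is simply y written as a row. All helper names are hypothetical.

```python
from fractions import Fraction

A = [[7, -4], [5, -2]]   # the matrix of Eq(1)


def matvec(M, x):
    """Column vector Mx."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def vecmat(y, M):
    """Row vector y times M (for real entries, y* is just y written as a row)."""
    return [sum(y[i] * M[i][j] for i in range(len(M))) for j in range(len(M[0]))]


def is_eigenpair(M, lam, x):
    """(lam, x) is an eigenpair iff x != 0 and Mx = lam x."""
    return any(xi != 0 for xi in x) and matvec(M, x) == [lam * xi for xi in x]


def is_left_eigenvector(M, lam, y):
    """y is a left-hand eigenvector iff y != 0 and y*(M - lam I) = 0, i.e. y* M = lam y*."""
    return any(yi != 0 for yi in y) and vecmat(y, M) == [lam * yi for yi in y]


print(is_eigenpair(A, 2, [Fraction(4, 5), 1]))   # True
print(is_eigenpair(A, 3, [1, 1]))                # True
print(is_eigenpair(A, 2, [0, 0]))                # False: the zero vector is excluded
print(is_left_eigenvector(A, 2, [1, -1]))        # True
```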

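Let's now face the problem of finding the eigenvalues and eigenvectors of the matrix

$$
A = \begin{bmatrix} 7 & -4 \\ 5 & -2 \end{bmatrix}
$$

appearing in Eq(1). As noted in Eq(3), the eigenvalues are the scalars $\lambda$ for which $\det(A - \lambda I) = 0$. Expansion of $\det(A - \lambda I)$ produces the second-degree polynomial

$$
p(\lambda) = \det(A - \lambda I) = \begin{vmatrix} 7 - \lambda & -4 \\ 5 & -2 - \lambda \end{vmatrix} = (7 - \lambda)(-2 - \lambda) + 20 = \lambda^2 - 5\lambda + 6 = (\lambda - 2)(\lambda - 3),
$$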

which is called the characteristic polynomial for A. Consequently, the eigenvalues for A are the solutions of the characteristic equation $p(\lambda) = 0$ (i.e., the roots of the characteristic polynomial), and they are $\lambda = 2$ and $\lambda = 3$. The eigenvectors associated with $\lambda = 2$ and $\lambda = 3$ are simply the nonzero vectors in the eigenspaces $N(A - 2I)$ and $N(A - 3I)$, respectively. But determining these eigenspaces amounts to nothing more than solving the two homogeneous systems, $(A - 2I)x = 0$ and $(A - 3I)x = 0$.
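A 2 × 2 sketch of the whole procedure: expand $\det(A - \lambda I) = \lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21})$, solve the characteristic equation with the quadratic formula (real case only), then read a spanning vector of $N(A - \lambda I)$ off a nonzero row $(p, q)$ of the singular matrix $A - \lambda I$: the vector $(-q, p)$ satisfies $p(-q) + qp = 0$, and the other row is a multiple of $(p, q)$. This is an illustration restricted to 2 × 2 matrices with one-dimensional eigenspaces; the function names are hypothetical.

```python
import math
from fractions import Fraction


def char_poly_2x2(A):
    """(1, b, c) with det(A - lam I) = lam^2 + b*lam + c for a 2 x 2 matrix A."""
    (a11, a12), (a21, a22) = A
    return (1, -(a11 + a22), a11 * a22 - a12 * a21)


def real_eigenvalues_2x2(A):
    """Real roots of the characteristic equation, by the quadratic formula."""
    _, b, c = char_poly_2x2(A)
    disc = b * b - 4 * c
    if disc < 0:
        raise ValueError("negative discriminant: the eigenvalues are complex")
    r = math.sqrt(disc)
    return ((-b - r) / 2, (-b + r) / 2)


def eigenvector_2x2(A, lam):
    """A vector spanning N(A - lam I) when that eigenspace is one dimensional."""
    (a11, a12), (a21, a22) = A
    B = [[a11 - lam, a12], [a21, a22 - lam]]               # A - lam I
    if B[0][0] * B[1][1] - B[0][1] * B[1][0] != 0:
        raise ValueError("A - lam I is nonsingular: lam is not an eigenvalue")
    p, q = B[0] if any(B[0]) else B[1]                      # a nonzero row, if any
    if p == 0 and q == 0:
        raise ValueError("A - lam I = 0: the eigenspace is two dimensional")
    x = (Fraction(-q), Fraction(p))                         # p*(-q) + q*p = 0
    scale = x[1] if x[1] != 0 else x[0]                     # make one component 1
    return (x[0] / scale, x[1] / scale)


A = [[7, -4], [5, -2]]
print(char_poly_2x2(A))          # (1, -5, 6): lam^2 - 5 lam + 6
print(real_eigenvalues_2x2(A))   # (2.0, 3.0)
print(eigenvector_2x2(A, 2))     # (Fraction(4, 5), Fraction(1, 1))
print(eigenvector_2x2(A, 3))     # (Fraction(1, 1), Fraction(1, 1))
```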

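For $\lambda = 2$,

$$
A - 2I = \begin{bmatrix} 5 & -4 \\ 5 & -4 \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & -4/5 \\ 0 & 0 \end{bmatrix} \implies x_1 = (4/5)\,x_2 \implies N(A - 2I) = \operatorname{span}\left\{ \begin{bmatrix} 4/5 \\ 1 \end{bmatrix} \right\}.
$$

For $\lambda = 3$,

$$
A - 3I = \begin{bmatrix} 4 & -4 \\ 5 & -5 \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & -1 \\ 0 & 0 \end{bmatrix} \implies x_1 = x_2 \implies N(A - 3I) = \operatorname{span}\left\{ \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right\}.
$$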

In other words, the eigenvectors of A associated with $\lambda = 2$ are all nonzero multiples of $x = (4/5,\ 1)^T$, and the eigenvectors associated with $\lambda = 3$ are all nonzero multiples of $y = (1,\ 1)^T$. Although there are infinitely many eigenvectors associated with each eigenvalue, each eigenspace is one dimensional, so, for this example, there is only one independent eigenvector associated with each eigenvalue.

Let's complete the discussion concerning the system of differential equations $u' = Au$ in Eq(1). Coupling Eq(2) with the eigenpairs $(\lambda_1, x)$ and $(\lambda_2, y)$ of A computed above, where $\lambda_1 = 2$ and $\lambda_2 = 3$, produces two solutions of $u' = Au$, namely,

$$
u^{(1)} = e^{\lambda_1 t} x = e^{2t} \begin{bmatrix} 4/5 \\ 1 \end{bmatrix} \quad\text{and}\quad u^{(2)} = e^{\lambda_2 t} y = e^{3t} \begin{bmatrix} 1 \\ 1 \end{bmatrix}.
$$

It turns out that all other solutions are linear combinations of these two particular solutions.
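A numerical spot check, not a proof: the sketch evaluates $u(t) = c_1 e^{2t}(4/5, 1)^T + c_2 e^{3t}(1, 1)^T$ for a few illustrative choices of $c_1, c_2$, approximates $u'(t)$ by a central difference, and confirms that $u'(t) - Au(t)$ is negligible at $t = 0.5$. The step size `h` and tolerance `1e-5` are assumptions chosen for double precision; the helper names are hypothetical.

```python
import math
from functools import partial

A = [[7.0, -4.0], [5.0, -2.0]]   # the matrix of Eq(1)


def matvec(M, v):
    """Column vector Mv."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def combination(t, c1=1.0, c2=1.0):
    """u(t) = c1 e^{2t} (4/5, 1)^T + c2 e^{3t} (1, 1)^T."""
    e2, e3 = math.exp(2 * t), math.exp(3 * t)
    return [c1 * e2 * 0.8 + c2 * e3, c1 * e2 + c2 * e3]


def residual(u, t, h=1e-6):
    """max |u'(t) - A u(t)|, with u'(t) from a central difference."""
    du = [(p - m) / (2 * h) for p, m in zip(u(t + h), u(t - h))]
    return max(abs(d - a) for d, a in zip(du, matvec(A, u(t))))


for c1, c2 in [(1.0, 0.0), (0.0, 1.0), (2.0, -3.0)]:
    u = partial(combination, c1=c1, c2=c2)
    print(c1, c2, residual(u, 0.5) < 1e-5)   # True in each case
```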

Below is a summary of some general statements concerning features of the characteristic polynomial and the characteristic equation.

Characteristic Polynomial and Equation

The characteristic polynomial of $A_{n \times n}$ is $p(\lambda) = \det(A - \lambda I)$. The degree of $p(\lambda)$ is n, and the leading term in $p(\lambda)$ is $(-1)^n \lambda^n$.

The characteristic equation for A is $p(\lambda) = 0$.

The eigenvalues of A are the solutions of the characteristic equation or, equivalently, the roots of the characteristic polynomial.

Altogether, A has n eigenvalues, but some may be complex numbers (even if the entries of A are real numbers), and some eigenvalues may be repeated.

If A contains only real numbers, then its complex eigenvalues must occur in conjugate pairs; i.e., if $\lambda \in \sigma(A)$, then $\bar{\lambda} \in \sigma(A)$.

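Proof. The fact that $\det(A - \lambda I)$ is a polynomial of degree n whose leading term is $(-1)^n \lambda^n$ follows from the definition of determinant,

$$
\det(B) = \sum_{p} \operatorname{sgn}(p)\, b_{1p_1} b_{2p_2} \cdots b_{np_n},
$$

where the sum runs over all permutations $p = (p_1, p_2, \ldots, p_n)$ of $(1, 2, \ldots, n)$ and $\operatorname{sgn}(p) = \pm 1$ is the sign of p. If $\delta_{ij} = 1$ when $i = j$ and $\delta_{ij} = 0$ when $i \neq j$, then

$$
\det(A - \lambda I) = \sum_{p} \operatorname{sgn}(p)\, (a_{1p_1} - \delta_{1p_1}\lambda)(a_{2p_2} - \delta_{2p_2}\lambda) \cdots (a_{np_n} - \delta_{np_n}\lambda)
$$

is a polynomial in $\lambda$. The highest power of $\lambda$ is produced by the term $(a_{11} - \lambda)(a_{22} - \lambda) \cdots (a_{nn} - \lambda)$, so the degree is n, and the leading term is $(-1)^n \lambda^n$. The discussion earlier contained the proof that the eigenvalues are precisely the solutions of the characteristic equation, but, for the sake of completeness, it's repeated below:

$$
\lambda \in \sigma(A) \iff Ax = \lambda x \text{ for some } x \neq 0 \iff (A - \lambda I)x = 0 \text{ for some } x \neq 0 \iff A - \lambda I \text{ is singular} \iff \det(A - \lambda I) = 0.
$$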

The fundamental theorem of algebra is a deep result that ensures every polynomial of degree n with real or complex coefficients has n roots, but some roots may be complex numbers (even if all the coefficients are real), and some roots may be repeated. Consequently, A has n eigenvalues, but some may be complex, and some may be repeated. The fact that complex eigenvalues of real matrices must occur in conjugate pairs is a consequence of the fact that the complex roots of a polynomial with real coefficients occur in conjugate pairs.
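Expanding a determinant symbolically is impractical beyond small n, so the sketch below computes the coefficients of $p(\lambda)$ with the Faddeev-LeVerrier recurrence, a standard technique that the text does not use. It computes $\det(\lambda I - A)$ and then multiplies by $(-1)^n$, because $\det(A - \lambda I) = (-1)^n \det(\lambda I - A)$. This makes the leading term $(-1)^n \lambda^n$ visible. Exact `Fraction` arithmetic is an assumption made for clean output; `char_poly` is a hypothetical name.

```python
from fractions import Fraction


def char_poly(A):
    """Coefficients of p(lam) = det(A - lam I), highest power first.

    Faddeev-LeVerrier recurrence for q(lam) = det(lam I - A) = lam^n + c[n-1] lam^(n-1) + ... + c[0]:
        M_0 = 0,  M_k = A M_{k-1} + c[n-k+1] I,  c[n-k] = -trace(A M_k) / k,
    then p(lam) = (-1)^n q(lam).
    """
    n = len(A)
    A = [[Fraction(x) for x in row] for row in A]          # exact arithmetic

    def matmul(X, Y):
        return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
                for i in range(n)]

    coeffs = [Fraction(1)]                                  # c[n] = 1
    M = [[Fraction(0)] * n for _ in range(n)]               # M_0 = 0
    for k in range(1, n + 1):
        AM = matmul(A, M)
        c_prev = coeffs[-1]                                 # c[n-k+1]
        M = [[AM[i][j] + (c_prev if i == j else 0) for j in range(n)]
             for i in range(n)]                             # M_k
        AM = matmul(A, M)
        coeffs.append(-sum(AM[i][i] for i in range(n)) / k)  # c[n-k]
    sign = (-1) ** n
    return [sign * c for c in coeffs]


print(char_poly([[7, -4], [5, -2]]))
# [Fraction(1, 1), Fraction(-5, 1), Fraction(6, 1)]: p = lam^2 - 5 lam + 6
print(char_poly([[1, 0, 0], [0, 2, 0], [0, 0, 3]]))
# [Fraction(-1, 1), Fraction(6, 1), Fraction(-11, 1), Fraction(6, 1)]: leading term -lam^3
```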

Example: Determine the eigenvalues and eigenvectors of

$$
A = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}.
$$

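Solution: The characteristic polynomial is

$$
\det(A - \lambda I) = \begin{vmatrix} 1 - \lambda & -1 \\ 1 & 1 - \lambda \end{vmatrix} = (1 - \lambda)^2 + 1 = \lambda^2 - 2\lambda + 2,
$$

so the characteristic equation is $\lambda^2 - 2\lambda + 2 = 0$. Application of the quadratic formula yields

$$
\lambda = \frac{2 \pm \sqrt{-4}}{2} = \frac{2 \pm 2\sqrt{-1}}{2} = 1 \pm i,
$$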

so the spectrum of A is $\sigma(A) = \{1 + i, 1 - i\}$. Notice that the eigenvalues are complex conjugates of each other, as they must be because complex eigenvalues of real matrices must occur in conjugate pairs. Now find the eigenspaces.

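For $\lambda = 1 + i$,

$$
A - \lambda I = \begin{bmatrix} -i & -1 \\ 1 & -i \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & -i \\ 0 & 0 \end{bmatrix} \implies x_1 = i\,x_2 \implies N(A - \lambda I) = \operatorname{span}\left\{ \begin{bmatrix} i \\ 1 \end{bmatrix} \right\}.
$$

For $\lambda = 1 - i$,

$$
A - \lambda I = \begin{bmatrix} i & -1 \\ 1 & i \end{bmatrix} \longrightarrow \begin{bmatrix} 1 & i \\ 0 & 0 \end{bmatrix} \implies x_1 = -i\,x_2 \implies N(A - \lambda I) = \operatorname{span}\left\{ \begin{bmatrix} -i \\ 1 \end{bmatrix} \right\}.
$$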

In other words, the eigenvectors associated with $\lambda_1 = 1 + i$ are all nonzero multiples of $x_1 = (i,\ 1)^T$, and the eigenvectors associated with $\lambda_2 = 1 - i$ are all nonzero multiples of $x_2 = (-i,\ 1)^T$. In previous sections, you could be successful by thinking only in terms of real numbers and by dancing around those statements and issues involving complex numbers. But this example makes it clear that avoiding complex numbers, even when dealing with real matrices, is no longer possible: very innocent-looking matrices, such as the one in this example, can possess complex eigenvalues and eigenvectors.
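The same computation in floating-point complex arithmetic: a sketch that solves $\lambda^2 - \operatorname{tr}(A)\lambda + \det(A) = 0$ with `cmath.sqrt`, which returns an imaginary root for a negative discriminant, then checks the conjugate-pair property and the two eigenpairs up to a tolerance. The helper names and the tolerance `1e-12` are assumptions made for this illustration.

```python
import cmath

A = [[1, -1], [1, 1]]


def eigenvalues_2x2(M):
    """Both roots of lam^2 - tr(M) lam + det(M) = 0; cmath.sqrt accepts a negative discriminant."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    r = cmath.sqrt(tr * tr - 4 * det)
    return ((tr + r) / 2, (tr - r) / 2)


def is_eigenpair(M, lam, x, tol=1e-12):
    """True if x != 0 and Mx = lam x, up to floating-point tolerance."""
    Mx = [sum(m * xi for m, xi in zip(row, x)) for row in M]
    return any(x) and all(abs(v - lam * xi) < tol for v, xi in zip(Mx, x))


lam1, lam2 = eigenvalues_2x2(A)
print(lam1, lam2)                         # (1+1j) (1-1j)
print(lam2 == lam1.conjugate())           # True: a conjugate pair
print(is_eigenpair(A, lam1, [1j, 1]))     # True
print(is_eigenpair(A, lam2, [-1j, 1]))    # True
```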