* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Vector space Definition (over reals) A set X is called a vector space

Rotation matrix wikipedia , lookup

Euclidean vector wikipedia , lookup

Exterior algebra wikipedia , lookup

System of linear equations wikipedia , lookup

Determinant wikipedia , lookup

Matrix (mathematics) wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Covariance and contravariance of vectors wikipedia , lookup

Gaussian elimination wikipedia , lookup

Principal component analysis wikipedia , lookup

Vector space wikipedia , lookup

Jordan normal form wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Eigenvalues and eigenvectors wikipedia , lookup

Matrix calculus wikipedia , lookup

Matrix multiplication wikipedia , lookup

Vector space

Definition (over reals)

A set X is called a vector space over IR if addition and scalar multiplication are defined and satisfy for all x, y, x ∈

X and λ, µ ∈ IR:

• Addition:

associative x + (y + z) = (x + y) + z

commutative x + y = y + x

identity element ∃0 ∈ X : x + 0 = x

inverse element ∀x ∈ X ∃x0 ∈ X : x + x0 = 0

• Scalar multiplication:

distributive over elements λ(x + y) = λx + λy

distributive over scalars (λ + µ)x = λx + µx

associative over scalars λ(µx) = (λµ)x

identity element ∃1 ∈ IR : 1x = x

Properties and operations in vector spaces

subspace Any non-empty subset of X being itself a vector space (E.g. projection)

linear combination given λi ∈ IR, xi ∈ X

n

X

λi xi

i=1

span The span of vectors x1 , . . . , xn is defined as the set of their linear combinations

( n

)

X

λi xi , λi ∈ IR

i=1

Basis in vector space

Linear independency

A set of vectors xi is linearly independent if none of them can be written as a linear combination of the others

Basis

• A set of vectors xi is a basis for X if any element in X can be uniquely written as a linear combination of vectors

xi .

• Necessary condition is that vectors xi are linearly independent

• All bases of X have the same number of elements, called the dimension of the vector space.

Linear maps

Definition

Given two vector spaces X , Z, a function f : X → Z is a linear map if for all x, y ∈ X , λ ∈ IR:

• f (x + y) = f (x) + f (y)

• f (λx) = λf (x)

Linear maps as matrices

A linear map between two finite-dimensional spaces X , Z of dimensions n, m can always be written as a matrix:

• Let {x1 , . . . , xn } and {z1 , . . . , zm } be some bases for X and Z respectively.

• For any x ∈ X we have:

f (x) = f (

f (xi ) =

f (x) =

n

X

λi xi ) =

i=1

m

X

n

X

λi f (xi )

i=1

aij zj

j=1

n X

m

X

λi aij zj =

i=1 j=1

m X

n

m

X

X

(

λi aij )zj =

µj zj

j=1 i=1

j=1

Linear maps as matrices

• Matrix of basis transformation

M ∈ IRm×n

a11

= ...

am1

...

..

.

...

a1n

..

.

amn

• Mapping from basis coefficients to basis coefficients

Mλ = µ

Matrix properties

transpose Matrix obtained exchanging rows with columns (indicated with M T ). Properties:

(M N )T = N T M T

trace Sum of diagonal elements of a matrix

tr(M ) =

n

X

Mii

i=1

inverse The matrix which multiplied with the original matrix gives the identity

M M −1 = I

rank The rank of an n × m matrix is the dimension of the space spanned by its columns

2

Metric structure

Norm

A function || · || : X → IR+

0 is a norm if for all x, y ∈ X , λ ∈ IR:

• ||x + y|| ≤ ||x|| + ||y||

• ||λx|| = |λ| ||x||

• ||x|| > 0 if x 6= 0

Metric

A norm defines a metric d : X × X → IR+

0:

d(x, y) = ||x − y||

Note

The concept of norm is stronger than that of metric: not any metric gives rise to a norm

Dot product

Bilinear form

A function Q : X × X → IR is a bilinear form if for all x, y, z ∈ X , λ, µ ∈ IR:

• Q(λx + µy, z) = λQ(x, z) + µQ(y, z)

• Q(x, λy + µz) = λQ(x, y) + µQ(x, z)

A bilinear form is symmetric if for all x, y ∈ X :

• Q(x, y) = Q(y, x)

Dot product

Dot product

A dot product h·, ·i : X × X → IR is a symmetric bilinear form which is positive definite:

hx, xi ≥ 0 ∀ x ∈ X

A strictly positive definite dot product satisfies

hx, xi = 0 if f x = 0

Norm

Any dot product defines a corresponding norm via:

||x|| =

p

hx, xi

3

Properties of dot product

angle The angle θ between two vectors is defined as:

cosθ =

hx, zi

||x|| ||z||

orthogonal Two vectors are orthogonal if

hx, yi = 0

orthonormal A set of vectors {x1 , . . . , xn } is orthonormal if

hxi , xj i = δij

where δij = 1 if i = j, 0 otherwise.

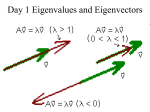

Eigenvalues and eigenvectors

Definition

Given a matrix M , the real value λ and (non-zero) vector x are an eigenvalue and corresponding eigenvector of

M if

M x = λx

Spectral decomposition

• Given an n × n symmetric matrix M , it is possible to find an orthonormal set of n eigenvectors.

• The matrix V having eigenvectors as columns is unitary, that is V T = V −1

• M can be factorized as:

M = V ΛV T

where Λ is the diagonal matrix of eigenvalues corresponding to eigenvectors in V

Eigenvalues and eigenvectors

Singular matrices

• A matrix is singular if it has a zero eigenvalue

M x = 0x = 0

• A singular matrix has linearly dependent columns:

4

Eigenvalues and eigenvectors

Symmetric matrices

Eigenvectors corresponding to distinct eigenvalues are orthogonal:

λhx, zi = hAx, zi

= (Ax)T z

= x T AT z

= xT Az

= hx, Azi

= µhx, zi

Note

An n × n symmetric matrix can have at most n distinct eigenvalues.

Positive semi-definite matrix

Definition

An n × n symmetrix matrix M is positive semi-definite if all its eigenvalues are non-negative.

Alternative sufficient and necessary conditions

• for all x ∈ IRn

xT M x ≥ 0

• there exists a real matrix B s.t.

M = BT B

Functional analysis

Cauchy sequence

• A sequence (xi )i∈IN in a normed space X is called a Cauchy sequence if for all > 0 there exists n ∈ IN s.t.

∀n0 , n00 > n ||xn0 − xn00 || < .

• A Cauchy sequence converges to a point x ∈ X if

lim ||xn − x|| → 0

n→∞

Banach and Hilbert spaces

• A space is complete if all Cauchy sequences in the space converge (to a point in the space).

• A complete normed space is called a Banach space

• A complete dot product space is called a Hilbert space

Hilbert spaces

• A Hilbert space can be (and often is) infinite dimensional

• Infinite dimensional Hilbert spaces are usually required to be separable, i.e. there exists a countable dense

subset: for any > 0 there exists a sequence (xi )i∈IN of elements of the space s.t. for all x ∈ X

min ||xi − x|| < xi

5