B.3 Time dependent quantum mechanics

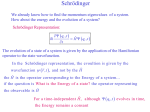

3.1 The Schrödinger and Heisenberg time-dependent pictures

One way to view the principal difference between classical mechanics and quantum mechanics is in the way they treat conjugate variables. Conjugate variables are pairs whose products have units of Planck’s constant, h ≈ 6.626 × 10⁻³⁴ J·s (or (kg·m/s)·m). The main example encountered in time-independent quantum mechanics is x and p, so we review them briefly.

Position and momentum are independent variables in classical mechanics; both are needed to specify the initial condition of a trajectory, after which the trajectory can in principle be computed for all future times. (In practice, the extreme sensitivity of trajectories to initial conditions, called classical chaos, may make that difficult.) Rewriting Newton’s equation F = ma = mẍ as two first-order differential equations, we obtain

$$\dot x = v = p/m, \qquad \dot p = F = -\frac{\partial H}{\partial x}. \tag{B.3.1.1}$$

Making further use of ẋ = p/m and H = p²/2m + V(x) in Cartesian coordinates, we obtain Newton’s second law in the form of Hamilton’s equations

$$\dot x = \frac{\partial H}{\partial p}, \qquad \dot p = -\frac{\partial H}{\partial x}. \tag{B.3.1.2}$$

One can prove that these equations hold in any canonical coordinate system, not just in Cartesian coordinates. To integrate them to yield x(t) and p(t), x₀ and p₀ must be specified.

In quantum mechanics, x and p are not independent variables. They are Fourier-conjugate, which means that the operator p̂ is given in terms of position by (see Appendix A)

$$\hat p = -i\hbar\frac{\partial}{\partial x}. \tag{B.3.1.3}$$

In the position representation, the system is entirely specified by the wavefunction ψ(x), and the expectation value of the momentum is obtained as ⟨p⟩ = −iħ∫dx ψ*(x) ∂ψ(x)/∂x = ⟨ψ|p̂|ψ⟩. Vice versa, the position operator and position expectation value are given in the momentum representation by analogous equations. The Fourier-conjugate relationship leads to the Heisenberg principle

$$\Delta p\,\Delta x \ge \hbar/2. \tag{B.3.1.4}$$

When the equality holds, this principle is not indicative of any ‘uncertainty’ in x and p (Heisenberg himself called it the ‘Unschärfeprinzip,’ roughly ‘blurriness principle,’ not the ‘Unsicherheitsprinzip,’ ‘uncertainty principle’). It is far more radical: it states that x and p are not independent variables, and that it makes no sense to attempt to specify them independently.

This is normal for Fourier-conjugate variables, and shows up classically as a property of waves. The best-known classical Fourier principle is

$$\hat\omega = i\frac{\partial}{\partial t}, \tag{B.3.1.5}$$

$$\Delta\omega\,\Delta t \ge 1/2, \tag{B.3.1.6}$$

where t is time and ω is the angular frequency 2πν. Time and frequency are not independent variables, and figure B-3 illustrates how waveforms can be represented equivalently as a function of time or as a function of frequency via the Fourier transform. Time derivatives in the time domain correspond to multiplication by −iω in the frequency domain. The key is that waveforms narrow in time will be broad in frequency, and vice versa. This is again not an uncertainty, but a property of waves: a very short chunk of a waveform simply does not have a single frequency, but must be synthesized out of a very large number of frequency components sin ωt and cos ωt.

Using Planck’s relationship E = hν = ħω between the frequency of electromagnetic radiation and the corresponding energy difference, eqs. 5 and 6 can be rewritten as

$$\hat E = i\hbar\frac{\partial}{\partial t}, \tag{B.3.1.7}$$

$$\Delta E\,\Delta t \ge \hbar/2. \tag{B.3.1.8}$$

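Schrödinger realized that the full quantum theory of motion would involve replacing p and E by the corresponding Fourier-conjugate operators in terms of x and t (eqs. 3 and 7), and applying the resulting operators to a function ψ(x,t) to obtain a differential equation describing the motion of the system:

$$\begin{aligned} \hat H\psi = \hat E\psi:\quad \left[\frac{\hat p^2}{2m}+V(x)\right]\psi(x,t) &= i\hbar\frac{\partial}{\partial t}\psi(x,t)\\ \left[-\frac{\hbar^2}{2m}\frac{\partial^2}{\partial x^2}+V(x)\right]\psi(x,t) &= i\hbar\frac{\partial}{\partial t}\psi(x,t) \end{aligned} \tag{B.3.1.9}$$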

The time-dependent Schrödinger equation of course cannot be derived from classical dynamics, but it is very similar in form to Hamilton’s equations of motion. The Schrödinger equation is actually two coupled differential equations: it contains a factor i, so the wave function ψ(x,t) = ψ_r(x,t) + iψ_i(x,t) is a complex function, which is really two real functions. Splitting the Schrödinger equation into its independent real and imaginary components (for a real Hamiltonian such as the one in eq. 9), it can be rewritten as

$$\dot\psi_i = -\hat H\psi_r/\hbar, \qquad \dot\psi_r = \hat H\psi_i/\hbar, \tag{B.3.1.10}$$

to be compared with eq. 2. Such pairs of coupled differential equations for two functions, one having a (−) sign, the other a (+) sign, are called symplectic, or ‘area preserving.’ Classically this means that an area ΔxΔp in phase space is mapped into an equal area at a later time. Quantum mechanically it means that the normalization of the wave function is preserved, and that the Heisenberg principle of eq. 4, once satisfied, will always be satisfied.

The solutions to eq. 9 can be very complex (pun intended) functions of position and time. The time-dependent Schrödinger equation has one particularly simple kind of solution, when the wave function can be split into position- and time-dependent parts of the form

$$\psi(x,t) = \psi(x)\,e^{-iEt/\hbar}. \tag{B.3.1.11}$$

Inserting this Ansatz into eq. 9, we obtain the time-independent Schrödinger equation, which solves for the stationary position-dependent part only. Thus the states we usually think of as time-independent eigenstates actually have a simple time dependence, given by the phase factor exp[−iE_i t/ħ] from equation 11. Their phase oscillates, and after a period τ = h/E, ψ(x,τ) = ψ(x,0).
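Substituting the Ansatz of eq. 11 into eq. 9 makes the separation explicit: the time derivative acts only on the phase factor, which then cancels from both sides, and the same phase factor returns to 1 after one period τ:

$$\begin{aligned} i\hbar\frac{\partial}{\partial t}\left[\psi(x)e^{-iEt/\hbar}\right] &= E\,\psi(x)\,e^{-iEt/\hbar} \quad\Rightarrow\quad \left[-\frac{\hbar^2}{2m}\frac{\partial^2}{\partial x^2}+V(x)\right]\psi(x) = E\,\psi(x),\\ e^{-iE\tau/\hbar} &= e^{-2\pi i} = 1 \quad\text{for}\quad \tau = h/E = 2\pi\hbar/E. \end{aligned}$$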

Quantum systems are not generally in an eigenstate, but evolve in time just as classical systems do. However, if we know the complete set of eigenstates, it is easy to compute the dynamics for any initial state ψ(x, t=0). Switching to Dirac notation |ψ(t)⟩ for the time-evolving state and |i⟩ for the eigenstates at energy E_i, we insert a complete set of states:

$$|\psi(0)\rangle = \sum_i |i\rangle\langle i|\psi(0)\rangle = \sum_i c_i(0)\,|i\rangle. \tag{B.3.1.12}$$

At t > 0, the stationary states in eq. 12 simply evolve with the phase factor of equation 11, so the state |ψ(t)⟩ evolves as

$$|\psi(t)\rangle = \sum_i c_i(0)\,e^{-iE_it/\hbar}\,|i\rangle. \tag{B.3.1.13}$$

This formally reduces the problem of time-dependent quantum mechanics to finding the overlap of the initial state with all eigenstates. Of course, in practice finding all eigenstates and their eigenenergies is not a trivial task.
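Eqs. 12 and 13 translate directly into a few lines of code. The following is a minimal Python sketch, not taken from the text, that assumes a particle in a box of unit length in units ħ = m = L = 1, a hypothetical Gaussian initial packet `psi0`, and truncation of the "complete" set to the first `N_STATES` eigenstates. The overlaps c_n(0) are computed once by quadrature; evolving to any time then only multiplies each coefficient by its phase.

```python
import cmath
import math

# Units hbar = m = L = 1 (assumption for this sketch).
N_STATES = 60    # truncation of the "complete" set of box eigenstates (assumption)
N_GRID = 2000    # midpoint-rule grid used for the overlaps <n|psi(0)>
STATES = range(1, N_STATES + 1)


def phi(n, x):
    """Particle-in-a-box eigenfunction sqrt(2/L) sin(n pi x / L) with L = 1."""
    return math.sqrt(2.0) * math.sin(n * math.pi * x)


def energy(n):
    """E_n = (n pi hbar)^2 / (2 m L^2) with hbar = m = L = 1."""
    return 0.5 * (n * math.pi) ** 2


def psi0(x, x0=0.3, sigma=0.05, k0=20.0):
    """Hypothetical unnormalized initial state: Gaussian packet at x0 with mean wavenumber k0."""
    return cmath.exp(-((x - x0) ** 2) / (4 * sigma ** 2) + 1j * k0 * x)


DX = 1.0 / N_GRID
GRID = [(k + 0.5) * DX for k in range(N_GRID)]
NORM0 = math.sqrt(sum(abs(psi0(x)) ** 2 for x in GRID) * DX)

# Eq. B.3.1.12: c_n(0) = <n|psi(0)>, computed once by quadrature.
C0 = [sum(phi(n, x) * psi0(x) for x in GRID) * DX / NORM0 for n in STATES]


def coefficients(t):
    """Eq. B.3.1.13: each coefficient only acquires the phase exp(-i E_n t / hbar)."""
    return [c * cmath.exp(-1j * energy(n) * t) for n, c in zip(STATES, C0)]


def psi(x, t):
    """psi(x, t) = sum_n c_n(0) exp(-i E_n t) phi_n(x)."""
    return sum(c * phi(n, x) for n, c in zip(STATES, coefficients(t)))
```

Because E_n t/ħ = 2πn² at t = 4/π in these units, `coefficients(4 / math.pi)` returns the initial coefficients, a full revival of the packet; the norm Σ|c_n|² is unchanged at any t, since only phases are applied.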

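There is another formal solution of the time-dependent Schrödinger equation useful for derivations, although again it is difficult to use for direct computation. Rewriting equation 9 as

$$\hat H\psi = i\hbar\frac{\partial\psi}{\partial t} \quad\Rightarrow\quad -\frac{i}{\hbar}\hat H\,dt = \frac{d\psi}{\psi}, \tag{B.3.1.14}$$

we can integrate both sides to obtain the formal solution

$$\psi(t) = e^{-i\hat Ht/\hbar}\,\psi(0) \equiv \hat U(t)\,\psi(0). \tag{B.3.1.15}$$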

This is an operator equation, which requires exponentiation of an operator, the Hamiltonian, to obtain the time evolution operator Û, also known as the propagator. If the Hamiltonian were represented in terms of a matrix H̃ (via its matrix elements H_ij in some basis {|j⟩}), this would correspond to computing the exponential of a matrix, or in a Taylor approximation,

$$\exp(-i\tilde Ht/\hbar) \approx \tilde I - i\tilde Ht/\hbar - \tfrac{1}{2}\left(\tilde Ht/\hbar\right)^2 + \dots, \tag{B.3.1.16}$$

where Ĩ is the identity matrix. This involves a large number of matrix multiplications even when a truncated approximation is used, a computationally very expensive task. Only if the basis {|j⟩} for H̃ is the eigenbasis is the matrix multiplication easy, but then we are back to solving the full eigenvalue problem. At very short times, only the linear term is significant, but note that the truncated operator does not preserve the norm of the wave function (after all, Ĩ by itself is already norm-preserving, so adding −iH̃t/ħ to it multiplies each eigencomponent of energy E by [1 + (Et/ħ)²]^{1/2}). The linear approximation by itself will blow up at long times.
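The norm problem of the truncated series can be seen in a two-level example. This sketch assumes a hypothetical real symmetric 2×2 Hamiltonian in units ħ = 1, and compares repeated application of the exact one-step propagator (written in closed form for 2×2 matrices) with the linear truncation Ĩ − iH̃Δt/ħ of eq. 16.

```python
import cmath
import math

HBAR = 1.0  # units with hbar = 1 (assumption)


def matvec(m, v):
    """Multiply a 2x2 (nested-list) matrix by a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def norm(v):
    return math.sqrt(sum(abs(x) ** 2 for x in v))


def exact_propagator(e1, e2, v, t):
    """exp(-i H t / hbar) for the hypothetical real symmetric H = [[e1, v], [v, e2]].

    Writing H = a*I + bz*sigma_z + bx*sigma_x gives the closed form
    exp(-i a t) [cos(w t) I - i sin(w t) (bz*sigma_z + bx*sigma_x) / w],
    with w = sqrt(bz**2 + bx**2) (assumed nonzero).
    """
    a, bz, bx = (e1 + e2) / 2, (e1 - e2) / 2, v
    w = math.hypot(bz, bx)
    ph = cmath.exp(-1j * a * t / HBAR)
    c, s = math.cos(w * t / HBAR), math.sin(w * t / HBAR)
    return [[ph * (c - 1j * s * bz / w), ph * (-1j * s * bx / w)],
            [ph * (-1j * s * bx / w), ph * (c + 1j * s * bz / w)]]


def linear_propagator(e1, e2, v, dt):
    """Eq. B.3.1.16 truncated after the linear term: I - i H dt / hbar."""
    h = [[e1, v], [v, e2]]
    return [[(1.0 if i == j else 0.0) - 1j * h[i][j] * dt / HBAR for j in range(2)]
            for i in range(2)]


def evolve(prop, psi, steps):
    """Apply the same one-step propagator `steps` times."""
    for _ in range(steps):
        psi = matvec(prop, psi)
    return psi


E1, E2, V, DT, STEPS = 0.0, 1.0, 0.5, 0.01, 10_000
psi_exact = evolve(exact_propagator(E1, E2, V, DT), [1.0, 0.0], STEPS)
psi_linear = evolve(linear_propagator(E1, E2, V, DT), [1.0, 0.0], STEPS)
```

With these arbitrary parameters the exactly propagated state stays normalized to rounding error and agrees with a single application of the propagator for the full time, while after 10,000 linear steps the norm has grown past 1.2.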

Figure B-4 considers two examples of time evolution. In the first, a quantum particle is moving in a double-well potential. The ground state |0⟩ and first excited state |1⟩ wave functions are shown. A system in one of these eigenstates is delocalized over both sides of the well, i.e. a classical statement such as ‘ammonia in its lowest energy state has all three protons below the nitrogen nucleus’ is meaningless. But now consider the system in the initial state |ψ(t=0)⟩ = (|0⟩ + |1⟩)/√2. This state is localized on the left side of the well. It time-evolves as

$$|\psi(t)\rangle = \frac{1}{\sqrt2}\left(|0\rangle + e^{-i\Delta Et/\hbar}|1\rangle\right), \tag{B.3.1.17}$$

where ΔE = E₁ − E₀ and the overall phase factor e^{−iE₀t/ħ} has been dropped. When t = h/2ΔE, the wave function has evolved to |ψ(t = h/2ΔE)⟩ = (|0⟩ − |1⟩)/√2. The system is now localized on the right side of the well. It has tunneled through the barrier separating the two wells, even though both eigenstates have energies below the barrier, a classically forbidden process.
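The tunneling argument reduces to two-component arithmetic in the {|0⟩, |1⟩} basis. This sketch assumes a hypothetical splitting `DELTA_E` in units ħ = 1 and takes the localized states to be (|0⟩ ± |1⟩)/√2, as in the text; the probability of finding the particle on the right then follows sin²(ΔE t/2ħ), reaching 1 at t = h/2ΔE = πħ/ΔE.

```python
import cmath
import math

HBAR = 1.0
DELTA_E = 0.2  # hypothetical tunneling splitting E1 - E0, in units with hbar = 1

S = 1 / math.sqrt(2)
LEFT = (S, S)    # (|0> + |1>)/sqrt(2), localized on the left
RIGHT = (S, -S)  # (|0> - |1>)/sqrt(2), localized on the right


def state(t):
    """Eq. B.3.1.17 in the eigenbasis (|0>, |1>), global phase exp(-i E0 t / hbar) dropped."""
    return (S, S * cmath.exp(-1j * DELTA_E * t / HBAR))


def overlap(a, b):
    """<a|b> for two-component vectors."""
    return sum(complex(x).conjugate() * y for x, y in zip(a, b))


def p_right(t):
    """Probability of finding the system in the right-localized state at time t."""
    return abs(overlap(RIGHT, state(t))) ** 2


T_HALF = math.pi * HBAR / DELTA_E  # = h / (2 * DELTA_E)
```

Smaller splittings (higher or wider barriers) give proportionally longer tunneling times T_HALF.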

In the second example, consider exciting a wave packet consisting of several successive harmonic oscillator eigenstates. They will tend to add up on the left, and cancel on the right (because eigenstates with successive odd/even quantum numbers switch ‘lobes’ from positive to negative on the right side). The resulting wave packet is localized on the left side. As it time-evolves, the phases of the higher energy states advance more rapidly, until eventually the lobes on the right add up and the ones on the left cancel. The wave packet has now moved to the right side, in a time t = 1/2ν, where ν is the harmonic oscillator vibrational frequency. This is very similar to what a classical particle would do: move through half a vibrational cycle.
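The half-period transfer of the oscillator wave packet can be checked numerically. This sketch uses units ħ = m = ω = 1, builds the eigenfunctions from the standard three-term recurrence, and, as an assumption, takes coherent-state (Poisson) weights for the "several successive eigenstates"; `ALPHA` is a hypothetical parameter that places the packet on the left. Because eigenstate n has parity (−1)ⁿ and its phase advances by (n + ½)π in half a period, the packet at t = π (that is, t = 1/2ν) is the mirror image of the initial one, up to a global phase −i.

```python
import cmath
import math

N_MAX = 30     # truncation of the eigenstate sum (assumption)
ALPHA = -2.0   # hypothetical packet parameter; centers the packet at x = sqrt(2)*ALPHA < 0


def ho_eigenfunctions(x, n_max=N_MAX):
    """phi_0..phi_n_max at x (hbar = m = omega = 1) via the three-term recurrence."""
    phis = [math.pi ** -0.25 * math.exp(-x * x / 2)]
    phis.append(math.sqrt(2.0) * x * phis[0])
    for n in range(1, n_max):
        phis.append(math.sqrt(2.0 / (n + 1)) * x * phis[n] - math.sqrt(n / (n + 1)) * phis[n - 1])
    return phis


# Coherent-state weights for successive eigenstates (assumption for this sketch)
COEFFS = [math.exp(-ALPHA ** 2 / 2) * ALPHA ** n / math.sqrt(math.factorial(n))
          for n in range(N_MAX + 1)]


def psi(x, t):
    """Eq. B.3.1.13 for the oscillator: eigenstate n picks up exp(-i (n + 1/2) t)."""
    return sum(c * cmath.exp(-1j * (n + 0.5) * t) * p
               for n, (c, p) in enumerate(zip(COEFFS, ho_eigenfunctions(x))))


def mean_position(t, lo=-8.0, hi=8.0, npts=1601):
    """<x>(t) from a simple Riemann sum of |psi|^2 on a uniform grid."""
    dx = (hi - lo) / (npts - 1)
    xs = [lo + k * dx for k in range(npts)]
    dens = [abs(psi(x, t)) ** 2 for x in xs]
    return sum(x * d for x, d in zip(xs, dens)) / sum(dens)
```

Here `mean_position(0.0)` is close to −2√2 and `mean_position(math.pi)` close to +2√2: the packet has crossed to the other side in half a vibrational period, as the classical particle would.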

In the Schrödinger picture, operators such as p̂ and x̂ are time-independent, and the wave function depends on time. In classical mechanics, p(t) and x(t) depend on time directly. Quantum mechanics can also be formulated in this way, and indeed was originally formulated in this way by Werner Heisenberg. To see how this comes about, remember that wave functions are not observable; quantum mechanics instead computes expectation values of observables as matrix elements of operators. For example, ⟨p(t)⟩ = ⟨ψ(t)|p̂|ψ(t)⟩ tells us the average expected value of p at time t, and we could compute higher moments ⟨p(t)ⁿ⟩ to determine the full distribution of values of p.

Rewriting this explicitly in terms of the wave function at t = 0, the expectation value of an observable A as a function of time is given by

$$\langle\psi(t)|\hat A_S|\psi(t)\rangle = \langle\psi(0)|\underbrace{e^{i\hat Ht/\hbar}}_{\hat U^\dagger}\hat A_S\underbrace{e^{-i\hat Ht/\hbar}}_{\hat U}|\psi(0)\rangle = \langle\psi(0)|\hat A_H(t)|\psi(0)\rangle, \tag{B.3.1.18}$$

where Û = e^{−iĤt/ħ} is the time-evolution operator, and the subscripts S and H denote the Schrödinger and Heisenberg forms of the operator. By inserting eq. 13 twice on the left-hand side to express ψ(t) in terms of eigenfunctions, we obtain an expression for the expectation value of any operator in terms of its diagonal and off-diagonal matrix elements, and phase factors exp(−i[E_i − E_j]t/ħ). As shown by the right-hand side of eq. 18, instead of taking the wave functions as time-dependent and operators as time-independent, we can take the operator as time-dependent and the wave functions as time-independent:

$$\hat A_H(t) = e^{i\hat Ht/\hbar}\,\hat A(0)\,e^{-i\hat Ht/\hbar}. \tag{B.3.1.19}$$

Taking the derivative of eq. 19, one obtains the equation of motion (compare with the Liouville equation in chapter 2):

$$\frac{d\hat A}{dt} = \frac{1}{i\hbar}\left[\hat A(t),\hat H\right] + \frac{\partial\hat A}{\partial t}. \tag{B.3.1.20}$$

The second term is usually zero, since Schrödinger operators do not usually depend explicitly on time, so we have

$$\frac{d\hat A}{dt} = \frac{1}{i\hbar}\left[\hat A(t),\hat H\right]. \tag{B.3.1.21}$$

This is the Heisenberg equation of motion. It is a more natural equation to use with operators in matrix form, because the commutator of two matrices is relatively easy to compute. It does not simplify the computational problem, because integrating the time derivative still formally involves exponentiations, just like in eq. 15. However, eq. 21 makes the following statement transparent: operators that commute with the Hamiltonian do not evolve in time, and share eigenfunctions with the Hamiltonian.
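The equivalence of the two pictures in eq. 18 can be verified for a small matrix Hamiltonian. This sketch assumes a hypothetical 2×2 Hamiltonian in units ħ = 1 and uses σ_x as a sample observable; it evaluates ⟨ψ(t)|Â_S|ψ(t)⟩ in the Schrödinger picture and ⟨ψ(0)|Â_H(t)|ψ(0)⟩ with Â_H from eq. 19, and checks that Ĥ itself, which commutes with the propagator, does not change in the Heisenberg picture.

```python
import cmath
import math

HBAR = 1.0  # units with hbar = 1 (assumption)


def propagator(e1, e2, v, t):
    """exp(-i H t / hbar) for the hypothetical H = [[e1, v], [v, e2]] (closed form via Pauli matrices)."""
    a, bz, bx = (e1 + e2) / 2, (e1 - e2) / 2, v
    w = math.hypot(bz, bx)
    ph = cmath.exp(-1j * a * t / HBAR)
    c, s = math.cos(w * t / HBAR), math.sin(w * t / HBAR)
    return [[ph * (c - 1j * s * bz / w), ph * (-1j * s * bx / w)],
            [ph * (-1j * s * bx / w), ph * (c + 1j * s * bz / w)]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def dagger(a):
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]


def matvec(m, v):
    return [m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1]]


def expect(psi, op):
    """<psi|op|psi>."""
    phi = matvec(op, psi)
    return sum(complex(p).conjugate() * q for p, q in zip(psi, phi))


E1, E2, V, T = 0.0, 1.0, 0.5, 2.3
H = [[E1, V], [V, E2]]
A_S = [[0.0, 1.0], [1.0, 0.0]]   # hypothetical observable (sigma_x), no explicit time dependence
PSI0 = [1.0 + 0j, 0.0 + 0j]

U = propagator(E1, E2, V, T)
schrodinger = expect(matvec(U, PSI0), A_S)   # <psi(t)|A_S|psi(t)>
A_H = matmul(dagger(U), matmul(A_S, U))      # eq. B.3.1.19
heisenberg = expect(PSI0, A_H)               # <psi(0)|A_H(t)|psi(0)>
H_H = matmul(dagger(U), matmul(H, U))        # H commutes with U, so H_H equals H
```

The two expectation values agree to rounding error, while ⟨σ_x⟩ itself does change with t because σ_x does not commute with this Ĥ.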

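Eq. 21 is very close in form to Hamilton’s equations of motion. Inserting x̂ and p̂ and evaluating the commutators,

$$\begin{aligned} \frac{d\hat x}{dt} &= \frac{1}{i\hbar}[\hat x,\hat H] = \frac{1}{2im\hbar}[\hat x,\hat p^2] = \hat p/m = \frac{\partial\hat H}{\partial\hat p},\\ \frac{d\hat p}{dt} &= \frac{1}{i\hbar}[\hat p,\hat H] = \frac{1}{i\hbar}[\hat p,V(\hat x)] = -\frac{\partial V(\hat x)}{\partial\hat x} = -\frac{\partial\hat H}{\partial\hat x}. \end{aligned} \tag{B.3.1.22}$$

Taking the expectation values on both sides of eq. 22, we obtain Ehrenfest’s theorem for the expectation values as a function of time.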
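The two commutators used in eq. 22 follow from [x̂, p̂] = iħ and from letting p̂ = −iħ∂/∂x (eq. 3) act on a test function ψ; taking expectation values then gives Ehrenfest’s theorem explicitly:

$$\begin{aligned} [\hat x,\hat p^2] &= \hat p\,[\hat x,\hat p] + [\hat x,\hat p]\,\hat p = 2i\hbar\,\hat p,\\ [\hat p,V(\hat x)]\,\psi &= -i\hbar\frac{\partial}{\partial x}\big(V\psi\big) + i\hbar\,V\frac{\partial\psi}{\partial x} = -i\hbar\,\frac{\partial V}{\partial x}\,\psi,\\ \frac{d\langle\hat x\rangle}{dt} &= \frac{\langle\hat p\rangle}{m}, \qquad \frac{d\langle\hat p\rangle}{dt} = -\left\langle\frac{\partial V}{\partial x}\right\rangle. \end{aligned}$$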

3.2 Time dependent perturbation theory: the goal

Time-independent perturbation theory is based on the idea that if H = H₀ + V, and the matrix elements or eigenfunctions of H₀ are known, we can derive corrected energy levels based on the smallness of the perturbation V compared to H₀. Time-dependent perturbation theory follows a very similar idea. Let us say we have a Hamiltonian

$$H(t) = H_0 + V(t), \tag{B.3.2.1}$$

where V(t) is a small time-dependent perturbation (of course the special case where V(t) is time-independent is also allowed). We know how to propagate ψ(0) to get ψ(t) for H₀ alone, but we do not know how to propagate ψ(0) for the full Hamiltonian. We want to derive an approximate propagator that is based on the smallness of V(t) compared to H₀. Typical cases where this is useful would be a molecule interacting with a weak time-dependent radiation field, a molecule interacting with a surface or with another molecule that is treated only via a potential V(t) instead of fully quantum mechanically, or the time-dependent decay of an energy level due to coupling to other levels, as in a dissociation reaction or spontaneous emission.

3.3 TDPT: Interaction representation

Time-dependent perturbation theory attempts to replace the full propagator

$$U(t) = \hat O_t\exp\left[-\frac{i}{\hbar}\int_0^t dt'\,H(t')\right] \tag{B.3.3.1}$$

by a simpler propagator valid only for short times or small V(t). The problem with eq. 1 is twofold: 1) U(t) is an exponential function, which is very difficult to evaluate computationally; 2) if H(t) is time-dependent, it may not commute with itself at a different time, and certainly not with H₀. For example, an expression of the type

$$U^\dagger(t)\,\rho_0\,U(t) \tag{B.3.3.2}$$

is unambiguous when H is time-independent, but leads to commutation problems when V(t) ≠ 0. Hence a time-ordering operator Ô_t has to be included in eq. 1.

To make the propagator simpler, we would like to replace eq. 1 by a power series expansion. At first glance, it seems reasonable to try a Taylor expansion of the type

$$\psi(t) = U(t)\,\psi(0) \approx \left[1 - \frac{i}{\hbar}\int_0^t dt'\,H(t') + \dots\right]\psi(0). \tag{B.3.3.3}$$

The problem is that while V(t) may be very small, H₀ usually is not. Because H₀ is expanded as well, eq. 3 can be valid only for the very shortest of times. Furthermore, the eigenstates of H₀, or its action on ψ(0), are often known. Therefore it would be better to use an expansion that leaves the H₀ part of the propagator in exponential form, and expands only the V(t) part in a power series. We achieve this by introducing a new propagator defined as

$$U_I(t) = e^{iH_0t/\hbar}\,U(t), \tag{B.3.3.4}$$

the so-called propagator in the interaction representation. Consider how this propagator acts on a wavefunction ψ(0): first U(t) propagates the wavefunction properly to time t; then the exponential undoes (+ sign in the exponent) only the part of the propagation done by H₀. This leads to a much ‘slower’ propagation of the wavefunction by the new operator U_I(t). In fact, if V(t) = 0 then U(t) = exp[−iH₀t/ħ] and U_I(t) is simply the identity operator, not propagating the wavefunction at all.

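The propagator obeys the Schrödinger equation just like the wavefunction (but as an operator differential equation). Inserting ψ(t) = U(t)ψ(0) (for example, U(t) = exp[−iHt/ħ] for a time-independent H), we have

$$\begin{aligned} H\psi(t) = i\hbar\frac{\partial\psi(t)}{\partial t} \quad&\Rightarrow\quad HU(t)\psi(0) = i\hbar\frac{\partial U(t)}{\partial t}\psi(0)\\ &\Rightarrow\quad HU(t) = i\hbar\frac{\partial U(t)}{\partial t}. \end{aligned} \tag{B.3.3.5}$$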

Solving eq. 4 for U(t) and inserting into the Schrödinger equation with Hamiltonian B.3.2.1,

$$H_0e^{-iH_0t/\hbar}U_I(t) + V(t)\,e^{-iH_0t/\hbar}U_I(t) = H_0e^{-iH_0t/\hbar}U_I(t) + i\hbar\,e^{-iH_0t/\hbar}\frac{\partial U_I(t)}{\partial t}. \tag{B.3.3.6}$$

Canceling identical terms on both sides and multiplying both sides from the left by exp[iH₀t/ħ] yields

$$V_I(t)\,U_I(t) = i\hbar\frac{\partial U_I(t)}{\partial t}, \tag{B.3.3.7}$$

where V_I(t) = exp[iH₀t/ħ] V(t) exp[−iH₀t/ħ]. The interaction propagator thus satisfies a much more slowly evolving Schrödinger equation, from which all time evolution due to H₀ has disappeared.

Integrating both sides, we have the fundamental equation of time-dependent perturbation theory

$$U_I(t) = I - \frac{i}{\hbar}\int_0^t dt'\,V_I(t')\,U_I(t'). \tag{B.3.3.8}$$

The identity operator is the integration constant because when t = 0 the integral vanishes and we must have U_I(0) = I to satisfy the boundary conditions. When the propagator in the interaction representation propagates ψ(0), it does not yield ψ(t), of course. Rather,

$$\psi_I(t) = U_I(t)\,\psi(0) = e^{iH_0t/\hbar}U(t)\,\psi(0) = e^{iH_0t/\hbar}\,\psi(t). \tag{B.3.3.9}$$

The interaction wavefunction is just the ‘slower propagated’ wavefunction discussed below eq. B.3.3.4: it consists of the full wavefunction ψ(t) with the propagation by H₀ undone. As long as we can easily propagate with H₀, we can move back and forth between the interaction representation and the usual Schrödinger representation.

3.5 TDPT when the eigenstates of H0 are known

In many applications, the eigenenergies E_j of H₀ are known and the matrix elements V_ji = ⟨j|V(t)|i⟩ can be calculated, leading to explicit expressions for eq. B.3.4.2. Consider the example of first-order TDPT. Let |ψ(0)⟩ = |i⟩ and expand

$$|\psi(t)\rangle = \sum_j c_{ji}(t)\,|j(t)\rangle = \sum_j c_{ji}(t)\,e^{-iE_jt/\hbar}|j\rangle, \tag{B.3.5.1}$$

from which follows

$$|\psi_I(t)\rangle = e^{iH_0t/\hbar}\sum_j c_{ji}(t)\,e^{-iE_jt/\hbar}|j\rangle = \sum_j c_{ji}(t)\,|j\rangle. \tag{B.3.5.2}$$

The first-order term in eq. B.3.4.2 then becomes

$$\sum_j c_{ji}(t)\,|j\rangle \approx |i\rangle - \frac{i}{\hbar}\int_0^t dt'\,e^{iH_0t'/\hbar}\,V(t')\,e^{-iH_0t'/\hbar}|i\rangle. \tag{B.3.5.3}$$

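Taking the matrix element with a final state ⟨f| and using the fact that |f⟩ and |i⟩ are eigenfunctions of H₀, we have

$$\begin{aligned} c_{fi}(t) &\approx \langle f|i\rangle - \frac{i}{\hbar}\int_0^t dt'\,e^{iE_ft'/\hbar}\langle f|V(t')|i\rangle\,e^{-iE_it'/\hbar}\\ &= \delta_{fi} - \frac{i}{\hbar}\int_0^t dt'\,e^{i(\omega_f-\omega_i)t'}\,V_{fi}(t'), \end{aligned} \tag{B.3.5.4}$$

where ω_f = E_f/ħ and ω_i = E_i/ħ.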

Evidently, the time-dependent correction is proportional to the Fourier transform of the time-dependent potential evaluated at ω_f − ω_i, if we allow that V = 0 for t′ < 0 and t′ > t. Similarly, the higher-order perturbation corrections are simply nested Fourier transforms in which products of matrix elements such as V_fk V_ki … appear instead of just V_fi. The sum over f of all |c_fi|² should remain unity; c_ii should start out at 1 and decrease in magnitude, while the others start out at zero and increase in magnitude.
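Eq. B.3.5.4 can be compared with an exact result in the simplest case. This sketch assumes a hypothetical two-level system (|i⟩, |f⟩) with a weak constant coupling V switched on at t = 0 and no diagonal matrix elements of V, in units ħ = 1. The exact interaction-picture amplitude e^{iE_f t/ħ}⟨f|e^{−iHt/ħ}|i⟩ is computed from the closed-form 2×2 propagator; for V ≪ E_f − E_i it agrees with the first-order formula up to terms of higher order in V.

```python
import cmath
import math

HBAR = 1.0  # units with hbar = 1 (assumption)


def first_order_cfi(v_fi, omega_fi, t):
    """Eq. B.3.5.4 for constant V_fi switched on at t = 0 (f != i):
    c_fi = -(i/hbar) * integral_0^t exp(i omega_fi t') V_fi dt'."""
    return -v_fi * (cmath.exp(1j * omega_fi * t) - 1.0) / (HBAR * omega_fi)


def exact_cfi(e_i, e_f, v, t):
    """Interaction-picture amplitude exp(i E_f t / hbar) <f|exp(-i H t / hbar)|i>
    for the hypothetical two-level H = [[e_i, v], [v, e_f]] in the basis (|i>, |f>)."""
    a, bz, bx = (e_i + e_f) / 2, (e_i - e_f) / 2, v
    w = math.hypot(bz, bx)
    f_of_i = cmath.exp(-1j * a * t / HBAR) * (-1j * math.sin(w * t / HBAR) * bx / w)
    return cmath.exp(1j * e_f * t / HBAR) * f_of_i


E_I, E_F, V = 0.0, 1.0, 0.01
OMEGA_FI = (E_F - E_I) / HBAR
```

Increasing `V` toward E_F − E_I makes the two results drift apart, which is the regime where the higher-order terms of eq. B.3.3.8 are needed.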

3.6 The Golden Rule

Consider a state |0⟩ which is initially the only one populated. It is coupled by time-independent matrix elements V_j0 to a manifold of states {|j⟩}. Population will leak out of the state |0⟩, and the question is: how fast is the rate of that population leakage? We are given

$$H = H_0 + V,\quad H_0|0\rangle = E_0|0\rangle,\quad H_0|j\rangle = E_j|j\rangle,\quad V_{j0} = \langle j|V|0\rangle. \tag{B.3.6.1}$$

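A typical example of this problem might be a vibronic state undergoing predissociation into a continuum, or a highly excited vibrational state decaying into a manifold of other vibrational states after excitation. According to first-order TDPT, with ω_j0 = (E_j − E_0)/ħ,

$$c_{j0} \approx -\frac{i}{\hbar}\int_0^t dt'\,e^{i\omega_{j0}t'}\,V_{j0} = -\frac{V_{j0}}{\hbar\omega_{j0}}\left(e^{i\omega_{j0}t}-1\right). \tag{B.3.6.2}$$

The population going to state |j⟩ is given by the square of that amplitude,

$$\begin{aligned} P_{j0} = |c_{j0}|^2 = |\langle j|\psi(t)\rangle|^2 &= \frac{|V_{j0}|^2}{\hbar^2\omega_{j0}^2}\left\{2 - e^{i\omega_{j0}t} - e^{-i\omega_{j0}t}\right\} = \frac{2|V_{j0}|^2}{\hbar^2\omega_{j0}^2}\left\{1-\cos\omega_{j0}t\right\}\\ &= \frac{4|V_{j0}|^2}{\hbar^2\omega_{j0}^2}\sin^2(\omega_{j0}t/2) = \frac{|V_{j0}|^2t^2}{\hbar^2}\,\mathrm{sinc}^2(\omega_{j0}t/2). \end{aligned} \tag{B.3.6.3}$$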

h

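The total probability of leaving state |0⟩ is obtained by summing eq. 3 over all states |j⟩, and the probability P_tot of remaining in |0⟩ is one minus that sum. The sum can be approximated by an integral if we assume that the density of states ρ(E) of the manifold {|j⟩} is large and independent of energy, and that V_j0 is independent of j (and thus of energy):

$$\begin{aligned} P_{tot} = 1 - \sum_{j\ne0}P_{j0} &\approx 1 - \int dE\,\rho(E)\,P_{j0}(E)\\ &= 1 - |V_{j0}|^2\rho(E_0)\,\frac{t^2}{\hbar^2}\int dE\,\mathrm{sinc}^2\!\left([E-E_0]\,t/2\hbar\right)\\ &= 1 - \frac{2\pi}{\hbar}\,\rho(E_0)\,|V_{j0}|^2\,t \equiv 1 - k_{GR}\,t. \end{aligned} \tag{B.3.6.4}$$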
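The step from eq. 3 to eq. 4 can be checked by doing the sum directly. This sketch assumes a hypothetical, evenly spaced manifold E_j = E_0 + k·δ (so ρ = 1/δ) with one constant coupling V, in units ħ = 1, and compares the summed first-order populations with k_GR t.

```python
import math

HBAR = 1.0  # units with hbar = 1 (assumption)


def sinc(x):
    return 1.0 if x == 0.0 else math.sin(x) / x


def p_j0(omega_j0, v, t):
    """Eq. B.3.6.3: |V|^2 t^2 / hbar^2 * sinc^2(omega_j0 t / 2)."""
    return (abs(v) * t / HBAR) ** 2 * sinc(omega_j0 * t / 2) ** 2


def leaving_probability(v, spacing, t, n_side):
    """Sum of eq. B.3.6.3 over a hypothetical evenly spaced manifold
    E_j = E_0 + k * spacing (k = -n_side..n_side) with one constant coupling v."""
    return sum(p_j0(k * spacing / HBAR, v, t) for k in range(-n_side, n_side + 1))


def golden_rule_rate(v, rho):
    """k_GR = (2 pi / hbar) rho |V|^2 from eq. B.3.6.4."""
    return 2 * math.pi * rho * abs(v) ** 2 / HBAR


V, SPACING, T = 1e-3, 0.01, 100.0
summed = leaving_probability(V, SPACING, T, n_side=20_000)
predicted = golden_rule_rate(V, 1 / SPACING) * T
```

With these parameters the two agree to better than 0.1%. The comparison is only meaningful while k_GR t ≪ 1 (first-order theory) and while t stays below the recurrence time 2πħρ of the discrete manifold.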

Let us review some of the limitations of eq. 4. 1) It was derived with first-order TDPT and is valid only for short times. 2) Because we introduced an integral, it holds only for a true continuum with a constant density of states; variations in the density of states are not allowed for. Moreover, for a finite density of states, P_tot has to roll off as a sum of cosines, or as 1 − at² at early times, as one can see by expanding |⟨0|ψ(t)⟩|² in eigenstates. 3) It is only valid if |0⟩ is coupled to every other state either by the same average coupling, or at least by randomly distributed couplings independent of energy. The Golden Rule is a mean-field theory.

To extend eq. 4 to longer times, we can assume that we have n = t/Δt steps of short duration Δt that are independent of one another, so that

$$P_{tot} = \lim_{n\to\infty}\left(1 - \frac{k_{GR}\,t}{n}\right)^n = e^{-k_{GR}t}. \tag{B.3.6.5}$$
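The limit in eq. 5 converges quickly. This sketch, an illustration with hypothetical values k_GR = t = 1 rather than part of the original derivation, evaluates the finite-n product and shows the error shrinking as the number of independent steps n grows.

```python
import math


def stepwise_survival(k, t, n):
    """(1 - k t / n)^n: n independent first-order steps of length t / n (eq. B.3.6.5 before the limit)."""
    return (1.0 - k * t / n) ** n


K_GR, T = 1.0, 1.0  # hypothetical rate and time, so that k_GR * t = 1
ERRORS = [abs(stepwise_survival(K_GR, T, n) - math.exp(-K_GR * T)) for n in (10, 100, 1000, 10**6)]
```

For a finite number of effectively independent steps the product stays visibly different from the exponential, which is the point taken up in the following paragraph.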

A succession of infinitely many infinitely short first-order PT steps predicts an exponential decay. Most theories that derive exponential decays like eq. 5 make overt or hidden assumptions similar to 1)–3) discussed above. Real quantum systems generally violate 3). This has little effect at short times, where replacing matrix elements by their ensemble averages does not greatly affect the dynamics. At long times, the steps Δt are no longer independent of one another; they are correlated by the fluctuations in the matrix elements V_0i. The rate constant k_GR can be expected to be a good predictor of the 1/e decay envelope of P_tot, but one should never expect the decay of a quantum system to be exponential at very long times, and if the quantum system has a finite density of states, one should not expect it at very short times either. Because correlated time steps are no longer independent, n should not be allowed to go to ∞; rather, it should be the finite number of effectively independent steps. To a first approximation, one might therefore expect quantum systems to decay as

$$P_{tot} \approx (1-\sigma)\left(1 + \frac{k_{GR}\,t}{D}\right)^{-D} + \sigma, \tag{B.3.6.6}$$

where D is the finite number of effectively independent steps, and σ ≠ 0 for a finite density of states ρ, because there are only of order ρħk_GR states under the spectral envelope of an exponential decay (see 3.1.6). If the quantum system decays statistically at long times, such that there is an equal probability of finding it in any one of its states, then even state |0⟩ will be populated with probability σ ≈ 1/(ρħk_GR). Of course this approaches 0 as {|i⟩} becomes a true continuum.

3.7 Absorption of a Gaussian electromagnetic pulse

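Consider a molecule with a dipole moment μ⃗ subjected to a z-polarized Gaussian electromagnetic pulse. The (nonconservative) Hamiltonian becomes

$$H = H_0 - \vec\mu\cdot\vec\varepsilon(t), \quad\text{where}\quad \vec\varepsilon(t) = \varepsilon\,e^{-t^2/\Delta t^2}\cos(\omega t + \phi)\,\hat Z. \tag{B.3.7.1}$$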

Ẑ is a unit vector in the space-fixed z direction. To make the example more concrete, let the molecule be a diatomic AB with rotation-vibration Hamiltonian

$$H_0 = -\frac{\hbar^2}{2\mu}\frac{\partial^2}{\partial r^2} + V(r) + \frac{\hbar^2}{2\mu r^2}\hat J^2 \tag{B.3.7.2}$$

and dipole moment

$$\vec\mu = \mu(r)\,\hat z. \tag{B.3.7.3}$$

In these equations, μ is the reduced mass m_A m_B/(m_A + m_B) (not to be confused with the dipole moment function μ(r)), r is the internuclear distance, V(r) is the vibrational potential energy, Ĵ the rotational angular momentum operator, μ(r) the dipole moment as a function of bond distance, and ẑ a unit vector pointing along the bond axis, which is the molecule-fixed z axis. We will assume we know the eigenfunctions |vJM⟩ and energy levels E_vJM of this Hamiltonian. They depend on three quantum numbers: the vibrational quantum number v, the total angular momentum quantum number J, and the orientational quantum number M. The eigenfunctions are of the form |vJM⟩ = |vJ⟩|JM⟩ because H₀ commutes with the angular momentum operator Ĵ:

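$$\begin{aligned} \left[-\frac{\hbar^2}{2\mu}\frac{\partial^2}{\partial r^2}+V(r)+\frac{\hbar^2}{2\mu r^2}\hat J^2\right]|vJ\rangle|JM\rangle &= \left[-\frac{\hbar^2}{2\mu}\frac{\partial^2}{\partial r^2}+V(r)+\frac{\hbar^2}{2\mu r^2}J(J+1)\right]|vJ\rangle|JM\rangle\\ &= E_{vJM}\,|vJ\rangle|JM\rangle. \end{aligned} \tag{B.3.7.4}$$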

Taking a matrix element with ⟨JM| on both sides, we obtain the eigenvalue equation for |vJ⟩:

$$\left[-\frac{\hbar^2}{2\mu}\frac{\partial^2}{\partial r^2}+V(r)+\frac{\hbar^2}{2\mu r^2}J(J+1)\right]|vJ\rangle = E_{vJM}\,|vJ\rangle. \tag{B.3.7.5}$$

Typically this could be solved approximately by calculating matrix elements in a harmonic oscillator basis |v⟩ and diagonalizing the matrix. If J is small and V(r) is approximately a parabola, eq. 5 approximately becomes the harmonic oscillator equation, so |vJ⟩ ≈ |v⟩ under those conditions and |vJM⟩ ≈ |v⟩|JM⟩. The details of how to derive a Hamiltonian such as eq. 5 and its eigenfunctions and energy levels are discussed in chapters 5 through 9. The interaction term in the Hamiltonian explicitly becomes

$$-\vec\mu\cdot\vec\varepsilon(t) = -\mu(r)\cos\theta\;\varepsilon\,e^{-t^2/\Delta t^2}\cos(\omega t + \phi), \tag{B.3.7.6}$$

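where θ is the angle between the ẑ and Ẑ axes. Taking the transition matrix element,

$$\begin{aligned} V_{fi}(t) &= -\langle v_fJ_fM_f|\,\mu(r)\cos\theta\;\varepsilon\,e^{-t^2/\Delta t^2}\cos(\omega t+\phi)\,|v_iJ_iM_i\rangle\\ &= -\langle v_fJ_f|\mu(r)|v_iJ_i\rangle\,\langle J_fM_f|\cos\theta|J_iM_i\rangle\;\varepsilon\,e^{-t^2/\Delta t^2}\cos(\omega t+\phi)\\ &\equiv -\mu_{fi}\,\varepsilon\,e^{-t^2/\Delta t^2}\cos(\omega t+\phi). \end{aligned} \tag{B.3.7.7}$$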

As seen in chapter 5, μ_fi is nonzero only if J_f = J_i ± 1, corresponding to an R-branch or P-branch transition. Doing first-order time-dependent perturbation theory, we obtain

$$c_{fi} \approx \frac{i\mu_{fi}\varepsilon}{\hbar}\int_{-\infty}^{\infty}dt'\,e^{i\omega_{fi}t'}\,e^{-t'^2/\Delta t^2}\cos(\omega t'+\phi). \tag{B.3.7.8}$$

Note that the integration is extended to ±∞ instead of 0 to t; we want to know the final transition probability, and we can extend the integration because the pulse has a cutoff Δt, so the integrand is effectively zero for values much outside the range (−Δt, Δt). Writing the cosine as a sum of two exponentials, each integral is simply the Fourier transform of a Gaussian, which yields another Gaussian:

$$c_{fi} \approx \frac{i\sqrt\pi\,\mu_{fi}\,\varepsilon\,\Delta t}{2\hbar}\left[e^{-i\phi}\,e^{-\Delta t^2(\omega_{fi}-\omega)^2/4} + e^{i\phi}\,e^{-\Delta t^2(\omega_{fi}+\omega)^2/4}\right]. \tag{B.3.7.9}$$
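The Gaussian integral behind eq. 9 can be checked by direct quadrature. This sketch assumes arbitrary values for ω_fi, ω, Δt and φ (in consistent units), and compares a trapezoid-rule evaluation of the integral in eq. 8, truncated at ±8Δt, with the bracketed closed form of eq. 9; the common prefactor iμ_fi ε/ħ is omitted from both.

```python
import cmath
import math


def pulse_integral(omega_fi, omega, dt_pulse, phi, n=20001, span=8.0):
    """Trapezoid-rule value of the integral in eq. B.3.7.8 over [-span*dt, span*dt]."""
    lo = -span * dt_pulse
    h = 2 * span * dt_pulse / (n - 1)
    total = 0j
    for k in range(n):
        t = lo + k * h
        weight = 0.5 if k in (0, n - 1) else 1.0
        total += (weight * cmath.exp(1j * omega_fi * t)
                  * math.exp(-t * t / dt_pulse ** 2) * math.cos(omega * t + phi))
    return total * h


def closed_form(omega_fi, omega, dt_pulse, phi):
    """Bracketed Gaussian result of eq. B.3.7.9, times sqrt(pi)*dt/2 (prefactor i*mu*eps/hbar omitted)."""
    pref = math.sqrt(math.pi) * dt_pulse / 2
    return pref * (cmath.exp(-1j * phi) * math.exp(-dt_pulse ** 2 * (omega_fi - omega) ** 2 / 4)
                   + cmath.exp(1j * phi) * math.exp(-dt_pulse ** 2 * (omega_fi + omega) ** 2 / 4))
```

Near resonance (ω ≈ ω_fi) the first Gaussian dominates; making `dt_pulse` larger narrows it in frequency, in line with eq. B.3.1.6.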

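Taking the absolute value squared to obtain the transition probability,

$$P_{fi} = \frac{\pi\,\mu_{fi}^2\,\varepsilon^2\,\Delta t^2}{4\hbar^2}\left[e^{-\Delta t^2(\omega_{fi}-\omega)^2/2} + e^{-\Delta t^2(\omega_{fi}+\omega)^2/2} + 2\cos 2\phi\;e^{-\Delta t^2\left[(\omega_{fi}-\omega)^2+(\omega_{fi}+\omega)^2\right]/4}\right]. \tag{B.3.7.10}$$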

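If the phase of the incoming radiation is random (e.g. the globar of an IR spectrometer, or, even with a laser, because the molecules are at random spatial positions), the third term in brackets averages out to zero and we have our final result

$$P_{fi} = \frac{\pi^{3/2}}{2\sqrt2}\left(\frac{\mu_{fi}\,\varepsilon}{\hbar}\right)^2\Delta t\left(\frac{\Delta t}{\sqrt{2\pi}}\,e^{-\Delta t^2(\omega_{fi}-\omega)^2/2} + \frac{\Delta t}{\sqrt{2\pi}}\,e^{-\Delta t^2(\omega_{fi}+\omega)^2/2}\right). \tag{B.3.7.11}$$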

Again, the probability increases linearly with time (here the pulse duration Δt), just as for the Golden Rule. The first term in parentheses is called the Rabi frequency (see chapter 11), while the second term in parentheses contains the normalized Gaussian lineshapes. The ω_fi − ω term is resonant upon absorption, while the ω_fi + ω term is resonant only for negative frequencies ω_fi < 0, corresponding to stimulated emission. If we took the limit Δt → ∞ in the lineshape, it would approach a delta function. If we had taken a box-shaped pulse (constant from 0 to t), the lineshape would have been a sinc² function, as discussed in the previous section.