
Some Basic Statistical Concepts

Dr. Tai-Yue Wang

Department of Industrial and Information Management

National Cheng Kung University

Tainan, TAIWAN, ROC


Outline

Introduction

Basic Statistical Concepts

Inferences about the Differences in Means, Randomized Designs

Inferences about the Differences in Means, Paired Comparison Designs

Inferences about the Variances of Normal Distributions

Introduction

Formulation of a cement mortar

Original formulation and modified formulation

10 samples of each formulation

One factor: formulation

Two formulations, i.e., two treatments, or two levels of the factor formulation

Introduction

Results:


Introduction

Dot diagram


Basic Statistical Concepts

Lessons from the example above

Run: each of the observations above

Noise, experimental error, or simply error: the differences among the individual runs

Statistical error arises from variation that is uncontrolled and generally unavoidable

The presence of error means that the response variable is a random variable

A random variable may be discrete or continuous

Basic Statistical Concepts

Describing sample data

Graphical descriptions

Dot diagram: central tendency and spread

Box plot

Histogram
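To make the box plot and histogram concrete, here is a minimal Python sketch using only the standard library. It computes the five values a box plot draws and the bin counts a histogram displays. The data are hypothetical (the mortar measurements are not reproduced in these notes), and the quartiles follow the default method of `statistics.quantiles`; other software may use a slightly different quartile convention.

```python
import statistics
from collections import Counter

def five_number_summary(data):
    """Minimum, first quartile, median, third quartile, maximum: the values a box plot draws."""
    q1, median, q3 = statistics.quantiles(data, n=4)  # default 'exclusive' method
    return {"min": min(data), "q1": q1, "median": median, "q3": q3, "max": max(data)}

def histogram_counts(data, start, width):
    """Count observations in bins [start + k*width, start + (k+1)*width)."""
    counts = Counter(int((y - start) // width) for y in data)
    return {start + k * width: counts[k] for k in sorted(counts)}

# Hypothetical observations, for illustration only
data = [12, 15, 11, 18, 14, 13, 16, 14, 17, 19]
print(five_number_summary(data))
print(histogram_counts(data, start=10, width=3))
```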


Basic Statistical Concepts

• Discrete vs. continuous

Basic Statistical Concepts

Probability distribution

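Discrete:

$$0 \le p(y_j) \le 1 \quad \text{for all values of } y_j$$

$$P(y = y_j) = p(y_j) \quad \text{for all values of } y_j$$

$$\sum_{\text{all values of } y_j} p(y_j) = 1$$

Continuous:

$$0 \le f(y)$$

$$P(a \le y \le b) = \int_a^b f(y)\,dy$$

$$\int_{-\infty}^{\infty} f(y)\,dy = 1$$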
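The defining properties above can be checked directly. The sketch below (an illustration, not from the original slides) tests whether a discrete pmf is valid and approximates P(a ≤ y ≤ b) for a continuous density by numerical integration with Simpson's rule. The fair-die pmf and the exponential density f(y) = e^(-y) are assumed examples.

```python
import math
from fractions import Fraction

def is_valid_pmf(pmf):
    """Discrete case: every p(y_j) lies in [0, 1] and the p(y_j) sum to 1."""
    return all(0 <= p <= 1 for p in pmf.values()) and sum(pmf.values()) == 1

def prob_between(f, a, b, n=1000):
    """Continuous case: approximate P(a <= y <= b), the integral of f from a to b,
    with the composite Simpson's rule (n must be even)."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3

def exponential_pdf(y):
    """Illustrative density f(y) = e^(-y) for y >= 0 (exponential with rate 1)."""
    return math.exp(-y) if y >= 0 else 0.0

fair_die = {y: Fraction(1, 6) for y in range(1, 7)}  # illustrative discrete pmf
print(is_valid_pmf(fair_die))
print(prob_between(exponential_pdf, 0.0, 1.0), 1 - math.exp(-1))
```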

Basic Statistical Concepts

Probability distribution

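Mean: a measure of its central tendency

$$\mu = \begin{cases} \int_{-\infty}^{\infty} y f(y)\,dy & y \text{ continuous} \\ \sum_{\text{all } y} y\,p(y) & y \text{ discrete} \end{cases}$$

Expected value: the long-run average value

$$E(y) = \mu = \begin{cases} \int_{-\infty}^{\infty} y f(y)\,dy & y \text{ continuous} \\ \sum_{\text{all } y} y\,p(y) & y \text{ discrete} \end{cases}$$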

Basic Statistical Concepts

Probability distribution

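Variance: the variability or dispersion of a distribution

$$\sigma^2 = \begin{cases} \int_{-\infty}^{\infty} (y - \mu)^2 f(y)\,dy & y \text{ continuous} \\ \sum_{\text{all } y} (y - \mu)^2\,p(y) & y \text{ discrete} \end{cases}$$

or

$$\sigma^2 = E[(y - \mu)^2]$$

or

$$V(y) = \sigma^2$$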
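For a discrete random variable the sums above can be evaluated exactly. This sketch uses Python's `fractions.Fraction` so that the results carry no rounding error; the fair die is an assumed example, for which μ = 7/2 and σ² = 35/12.

```python
from fractions import Fraction

def mean(pmf):
    """mu = E(y) = sum over all y of y * p(y)."""
    return sum(y * p for y, p in pmf.items())

def variance(pmf):
    """sigma^2 = E[(y - mu)^2] = sum over all y of (y - mu)^2 * p(y)."""
    mu = mean(pmf)
    return sum((y - mu) ** 2 * p for y, p in pmf.items())

# Illustrative pmf: a fair six-sided die, p(y) = 1/6 for y = 1, ..., 6
fair_die = {y: Fraction(1, 6) for y in range(1, 7)}
print(mean(fair_die), variance(fair_die))  # 7/2 35/12
```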

Basic Statistical Concepts

Probability distribution

Properties: c is a constant

E(c) = c

E(y) = μ

E(cy) = cE(y) = cμ

V(c) = 0

V(y) = σ²

V(cy) = c²σ²

E(y1 + y2) = μ1 + μ2

Basic Statistical Concepts

Probability distribution

Properties (continued):

V(y1 + y2) = V(y1) + V(y2) + 2Cov(y1, y2)

V(y1 - y2) = V(y1) + V(y2) - 2Cov(y1, y2)

If y1 and y2 are independent, Cov(y1, y2) = 0

If y1 and y2 are independent, E(y1·y2) = E(y1)·E(y2) = μ1·μ2

E(y1/y2) is not necessarily equal to E(y1)/E(y2)
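The covariance identities can be verified exactly on a small joint distribution. In this sketch, two independent fair dice d1 and d2 are an assumed example. The pair y1 = d1 and y2 = d1 + d2 is dependent (they share d1), so their covariance is not zero, while d1 and d2 themselves are independent. The helper names (`expect`, `var`, `cov`) are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Illustrative joint pmf (an assumption, not from the slides):
# two independent fair dice d1 and d2, each pair (d1, d2) with probability 1/36.
JOINT = {(d1, d2): Fraction(1, 36) for d1, d2 in product(range(1, 7), repeat=2)}

def expect(g):
    """E[g(d1, d2)] under the joint pmf."""
    return sum(g(d1, d2) * p for (d1, d2), p in JOINT.items())

def var(g):
    """V(g) = E[(g - E(g))^2]."""
    m = expect(g)
    return expect(lambda d1, d2: (g(d1, d2) - m) ** 2)

def cov(g, h):
    """Cov(g, h) = E[(g - E(g)) * (h - E(h))]."""
    mg, mh = expect(g), expect(h)
    return expect(lambda d1, d2: (g(d1, d2) - mg) * (h(d1, d2) - mh))

def die1(d1, d2):
    return d1

def die2(d1, d2):
    return d2

def total(d1, d2):
    return d1 + d2

# Dependent pair y1 = die1, y2 = total: V(y1 + y2) = V(y1) + V(y2) + 2Cov(y1, y2)
lhs = var(lambda d1, d2: die1(d1, d2) + total(d1, d2))
rhs = var(die1) + var(total) + 2 * cov(die1, total)
print(lhs, rhs)  # 175/12 175/12

# Independent pair: Cov = 0 and E(d1*d2) = E(d1)E(d2), but E(d1/d2) != E(d1)/E(d2)
print(cov(die1, die2))  # 0
print(expect(lambda d1, d2: Fraction(d1, d2)), expect(die1) / expect(die2))  # 343/240 1
```

Because every probability is a `Fraction`, these checks hold exactly rather than up to floating-point rounding.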

15

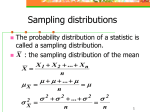

Basic Statistical Concepts

Sampling and sampling distribution

Random sample: if the population contains N elements and a sample of n of them is to be selected, the sample is random if each of the N!/[(N - n)! n!] possible samples has an equal probability of being chosen

Random sampling: the procedure above

Statistic: any function of the observations in a sample that does not contain unknown parameters

Basic Statistical Concepts

Sampling and sampling distribution

Sample mean

$$\bar{y} = \frac{\sum_{i=1}^{n} y_i}{n}$$

Sample variance

$$S^2 = \frac{\sum_{i=1}^{n} (y_i - \bar{y})^2}{n - 1}$$
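The two formulas translate directly into code. This sketch computes ȳ and S² for a hypothetical sample and compares them with Python's `statistics` module, whose `variance` also divides by n - 1 (while `pvariance` divides by n).

```python
import statistics

def sample_mean(y):
    """y-bar = (sum of y_i) / n."""
    return sum(y) / len(y)

def sample_variance(y):
    """S^2 = sum of (y_i - y-bar)^2 / (n - 1)."""
    ybar = sample_mean(y)
    return sum((yi - ybar) ** 2 for yi in y) / (len(y) - 1)

y = [12, 15, 11, 18, 14]  # hypothetical observations
print(sample_mean(y), sample_variance(y))  # 14.0 7.5
# statistics.variance divides by n - 1; statistics.pvariance divides by n
print(statistics.variance(y), statistics.pvariance(y))
```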

Basic Statistical Concepts

Sampling and sampling distribution

Estimator: a statistic that corresponds to an unknown parameter

Estimate: a particular numerical value of an estimator

Point estimators: ȳ for μ and S² for σ²

Desired properties of the sample mean and variance as point estimators:

The point estimator should be unbiased

An unbiased estimator should have minimum variance

Basic Statistical Concepts

Sampling and sampling distribution

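Sum of squares, SS

$$E(S^2) = E\left[\frac{\sum_{i=1}^{n} (y_i - \bar{y})^2}{n - 1}\right] = \sigma^2$$

so S² is an unbiased estimator of σ². The sum of squares, SS, is defined as

$$SS = \sum_{i=1}^{n} (y_i - \bar{y})^2$$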
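A quick Monte Carlo check, not part of the original notes, of why S² divides SS by n - 1: averaging SS/(n - 1) over many simulated normal samples should land near σ², while SS/n lands near σ²(n - 1)/n. The population parameters (μ = 0, σ = 2, n = 5), the number of replications, and the seed are arbitrary illustrative choices.

```python
import random

def sum_of_squares(y):
    """SS = sum of (y_i - y-bar)^2."""
    ybar = sum(y) / len(y)
    return sum((yi - ybar) ** 2 for yi in y)

def average_variance_estimates(mu=0.0, sigma=2.0, n=5, reps=20_000, seed=1):
    """Average SS/(n - 1) and SS/n over many simulated N(mu, sigma^2) samples of size n."""
    rng = random.Random(seed)
    with_n_minus_1 = with_n = 0.0
    for _ in range(reps):
        ss = sum_of_squares([rng.gauss(mu, sigma) for _ in range(n)])
        with_n_minus_1 += ss / (n - 1)
        with_n += ss / n
    return with_n_minus_1 / reps, with_n / reps

unbiased, biased = average_variance_estimates()
# Should be close to sigma^2 = 4 for SS/(n - 1) and to 4 * (n - 1) / n = 3.2 for SS/n
print(unbiased, biased)
```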

Basic Statistical Concepts

Sampling and sampling distribution

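Degrees of freedom, ν: the number of independent elements in a sum of squares

$$E(S^2) = E\left[\frac{\sum_{i=1}^{n} (y_i - \bar{y})^2}{n - 1}\right] = \sigma^2$$

For this sum of squares the degrees of freedom are

$$\nu = n - 1$$

because the n deviations y_i - ȳ must sum to zero, so only n - 1 of them are free to vary.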
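The n - 1 degrees of freedom come from the constraint that the deviations y_i - ȳ always sum to zero. This sketch, using a hypothetical sample and exact fractions, shows that once any n - 1 deviations are known, the last one is determined.

```python
from fractions import Fraction

def deviations(y):
    """Deviations y_i - y-bar, computed exactly with fractions."""
    ybar = Fraction(sum(y), len(y))
    return [yi - ybar for yi in y]

y = [12, 15, 11, 18, 13]  # hypothetical observations
d = deviations(y)
print(sum(d))  # 0: the deviations always sum to zero
# Knowing any n - 1 deviations fixes the last one, so SS has n - 1 degrees of freedom
print(d[-1] == -sum(d[:-1]))  # True
```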

Basic Statistical Concepts

Sampling and sampling distribution

Normal distribution, N(μ, σ²)

$$f(y) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-(1/2)[(y - \mu)/\sigma]^2}, \quad -\infty < y < \infty$$

Basic Statistical Concepts

Sampling and sampling distribution

Standard normal distribution, z: a normal distribution with μ = 0 and σ² = 1

$$z = \frac{y - \mu}{\sigma}$$

i.e., z ~ N(0, 1)
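A short sketch of the normal density and of standardization, checked against `statistics.NormalDist` from the Python standard library. The parameter values μ = 10, σ = 2 and the point y = 13 are arbitrary choices for illustration.

```python
import math
from statistics import NormalDist

def normal_pdf(y, mu, sigma):
    """f(y) = exp(-(1/2) * ((y - mu) / sigma)^2) / (sigma * sqrt(2*pi))."""
    return math.exp(-0.5 * ((y - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def standardize(y, mu, sigma):
    """z = (y - mu) / sigma, which follows N(0, 1) when y ~ N(mu, sigma^2)."""
    return (y - mu) / sigma

mu, sigma, y = 10.0, 2.0, 13.0  # illustrative values
print(normal_pdf(y, mu, sigma), NormalDist(mu, sigma).pdf(y))  # the two should agree
# P(Y <= y) for N(mu, sigma^2) equals P(Z <= z) for the standard normal
z = standardize(y, mu, sigma)
print(z, NormalDist(mu, sigma).cdf(y), NormalDist().cdf(z))
```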

Basic Statistical Concepts

Sampling and sampling distribution

Central Limit Theorem:

If y1, y2, …, yn is a sequence of n independent and identically distributed random variables with E(yi) = μ and V(yi) = σ², and x = y1 + y2 + … + yn, then the limiting form of the distribution of

$$z_n = \frac{x - n\mu}{\sqrt{n\sigma^2}}$$

as n → ∞ is the standard normal distribution
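The Central Limit Theorem can be seen in a small simulation. This sketch (illustrative, with an arbitrary seed) sums n = 12 independent Uniform(0, 1) variables, for which μ = 1/2 and σ² = 1/12, standardizes each sum as z_n = (x - nμ)/sqrt(nσ²), and checks that the results behave like N(0, 1) draws.

```python
import random
import statistics

def standardized_sums(n=12, reps=20_000, seed=2):
    """Return reps values of z_n = (x - n*mu) / sqrt(n*sigma^2), where x is the sum of
    n independent Uniform(0, 1) draws (mu = 1/2, sigma^2 = 1/12)."""
    rng = random.Random(seed)
    mu, sigma_sq = 0.5, 1 / 12
    scale = (n * sigma_sq) ** 0.5
    return [(sum(rng.random() for _ in range(n)) - n * mu) / scale for _ in range(reps)]

z = standardized_sums()
share_within = sum(abs(v) <= 1.96 for v in z) / len(z)
# For N(0, 1): mean near 0, standard deviation near 1, about 95% within +/-1.96
print(statistics.mean(z), statistics.stdev(z), share_within)
```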

Basic Statistical Concepts

Sampling and sampling distribution

Chi-square (χ²) distribution:

If z1, z2, …, zk are normally and independently distributed random variables with mean 0 and variance 1, NID(0, 1), the random variable

$$x = z_1^2 + z_2^2 + \cdots + z_k^2$$

follows the chi-square distribution with k degrees of freedom, with density

$$f(x) = \frac{1}{2^{k/2}\,\Gamma(k/2)}\, x^{(k/2) - 1} e^{-x/2}, \quad x > 0$$
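Both the chi-square density and its definition as a sum of squared standard normals can be checked with the standard library. In this sketch, k = 2 reduces the density to (1/2)e^(-x/2), and a simulation with k = 4 (an arbitrary choice) should give an average close to k, the mean of the χ²_k distribution. The seed and replication count are illustrative.

```python
import math
import random

def chi2_pdf(x, k):
    """f(x) = x^(k/2 - 1) * e^(-x/2) / (2^(k/2) * Gamma(k/2)) for x > 0."""
    if x <= 0:
        return 0.0
    return x ** (k / 2 - 1) * math.exp(-x / 2) / (2 ** (k / 2) * math.gamma(k / 2))

def simulate_chi2(k, reps=20_000, seed=3):
    """Simulate x = z1^2 + ... + zk^2 with z_i ~ NID(0, 1)."""
    rng = random.Random(seed)
    return [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k)) for _ in range(reps)]

# With k = 2 the density reduces to (1/2) e^(-x/2)
print(chi2_pdf(3.0, 2), 0.5 * math.exp(-1.5))
# A chi-square variable with k degrees of freedom has mean k
sample = simulate_chi2(4)
print(sum(sample) / len(sample))
```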

Basic Statistical Concepts

Sampling and sampling distribution

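Chi-square distribution: example

If y1, y2, …, yn is a random sample from an N(μ, σ²) distribution, then

$$\frac{SS}{\sigma^2} = \frac{\sum_{i=1}^{n} (y_i - \bar{y})^2}{\sigma^2} \sim \chi^2_{n-1}$$

For the sample variance from NID(μ, σ²),

$$S^2 = \frac{SS}{n - 1}, \quad \text{i.e.,} \quad S^2 \sim \left[\frac{\sigma^2}{n - 1}\right] \chi^2_{n-1}$$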

Basic Statistical Concepts

Sampling and sampling distribution

t distribution:

If z and χ²_k are independent standard normal and chi-square random variables, respectively, the random variable

$$t_k = \frac{z}{\sqrt{\chi^2_k / k}}$$

follows the t distribution with k degrees of freedom

Basic Statistical Concepts

Sampling and sampling distribution

pdf of the t distribution:

$$f(t) = \frac{\Gamma[(k + 1)/2]}{\sqrt{k\pi}\,\Gamma(k/2)} \cdot \frac{1}{[(t^2/k) + 1]^{(k + 1)/2}}, \quad -\infty < t < \infty$$

μ = 0, σ² = k/(k - 2) for k > 2
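As a numerical sanity check of the t density and its moments, the sketch below evaluates f(t) through `math.lgamma` (to avoid overflow in the gamma functions) and integrates with Simpson's rule over a wide finite range. Truncating at ±200 is an assumption that leaves a negligible tail for k = 5. The area should be about 1 and the second moment (the variance, since μ = 0) about k/(k - 2) = 5/3.

```python
import math

def t_pdf(t, k):
    """f(t) = Gamma((k+1)/2) / (sqrt(k*pi) * Gamma(k/2)) * [(t^2/k) + 1]^(-(k+1)/2)."""
    log_c = math.lgamma((k + 1) / 2) - math.lgamma(k / 2) - 0.5 * math.log(k * math.pi)
    return math.exp(log_c - (k + 1) / 2 * math.log1p(t * t / k))

def simpson(f, a, b, n=20_000):
    """Composite Simpson's rule for the integral of f over [a, b] (n even)."""
    h = (b - a) / n
    s = f(a) + f(b) + sum((4 if i % 2 else 2) * f(a + i * h) for i in range(1, n))
    return s * h / 3

k = 5
area = simpson(lambda t: t_pdf(t, k), -200, 200)
second_moment = simpson(lambda t: t * t * t_pdf(t, k), -200, 200)
print(area, second_moment, k / (k - 2))
```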


Basic Statistical Concepts

Sampling and sampling distribution

If y1, y2, …, yn is a random sample from N(μ, σ²), the quantity

$$t = \frac{\bar{y} - \mu}{S/\sqrt{n}}$$

is distributed as t with n - 1 degrees of freedom
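A minimal sketch of the one-sample t statistic. The sample and the hypothesized mean μ = 12 are hypothetical; `statistics.stdev` divides by n - 1, so it returns S.

```python
import math
import statistics

def one_sample_t(y, mu):
    """Return t = (y-bar - mu) / (S / sqrt(n)) and its n - 1 degrees of freedom."""
    n = len(y)
    t = (statistics.mean(y) - mu) / (statistics.stdev(y) / math.sqrt(n))
    return t, n - 1

y = [12, 15, 11, 18, 14]            # hypothetical sample
t, df = one_sample_t(y, mu=12.0)    # hypothetical hypothesized mean
print(t, df)
```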

Basic Statistical Concepts

Sampling and sampling distribution

F distribution:

If χ²_u and χ²_v are two independent chi-square random variables with u and v degrees of freedom, respectively, then

$$F_{u,v} = \frac{\chi^2_u / u}{\chi^2_v / v}$$

follows the F distribution with u numerator degrees of freedom and v denominator degrees of freedom

Basic Statistical Concepts

Sampling and sampling distribution

pdf of the F distribution:

$$h(x) = \frac{\Gamma[(u + v)/2]\,(u/v)^{u/2}\,x^{(u/2) - 1}}{\Gamma(u/2)\,\Gamma(v/2)\,[(u/v)x + 1]^{(u + v)/2}}, \quad 0 < x < \infty$$

Basic Statistical Concepts

Sampling and sampling distribution

F distribution: example

Suppose we have two independent normal distributions with common variance σ². If y11, y12, …, y1n1 is a random sample of n1 observations from the first population and y21, y22, …, y2n2 is a random sample of n2 observations from the second population, then

$$\frac{S_1^2}{S_2^2} \sim F_{n_1 - 1,\, n_2 - 1}$$
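The ratio S1²/S2² is straightforward to compute, and the F density can be evaluated in log form for numerical stability. In this sketch the variances are the mortar summary statistics from these notes (S1² = 0.100, S2² = 0.061, n1 = n2 = 10); under the common-variance assumption the ratio is referred to F with (9, 9) degrees of freedom. For u = v = 2 the density simplifies to 1/(1 + x)², which gives an easy check.

```python
import math

def f_pdf(x, u, v):
    """F density h(x) with u numerator and v denominator degrees of freedom,
    evaluated through logarithms for numerical stability."""
    if x <= 0:
        return 0.0
    log_h = (math.lgamma((u + v) / 2) - math.lgamma(u / 2) - math.lgamma(v / 2)
             + (u / 2) * math.log(u / v) + (u / 2 - 1) * math.log(x)
             - (u + v) / 2 * math.log1p(u * x / v))
    return math.exp(log_h)

# Mortar summary statistics from these notes
s1_sq, s2_sq, n1, n2 = 0.100, 0.061, 10, 10
f0 = s1_sq / s2_sq          # about 1.64
df = (n1 - 1, n2 - 1)       # (9, 9) under equal variances
print(f0, df, f_pdf(f0, *df))
```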

The Hypothesis Testing Framework

Statistical hypothesis testing is a useful

framework for many experimental

situations

Origins of the methodology date from the

early 1900s

We will use a procedure known as the two-sample t-test

Two-Sample-t-Test

Suppose we have two independent normal populations. Let y11, y12, …, y1n1 be a random sample of n1 observations from the first population and y21, y22, …, y2n2 be a random sample of n2 observations from the second population.

Two-Sample-t-Test

A model for the data:

$$y_{ij} = \mu_i + \varepsilon_{ij}, \qquad i = 1, 2; \quad j = 1, 2, \ldots, n_i; \qquad \varepsilon_{ij} \sim NID(0, \sigma_i^2)$$

εij is a random error

Two-Sample-t-Test

Sampling from a normal distribution

Statistical hypotheses:

$$H_0: \mu_1 = \mu_2$$

$$H_1: \mu_1 \neq \mu_2$$

Two-Sample-t-Test

H0 is called the null hypothesis and H1 is called the alternative hypothesis

One-sided vs. two-sided hypotheses

Type I error, α: the null hypothesis is rejected when it is true

Type II error, β: the null hypothesis is not rejected when it is false

$$\alpha = P(\text{type I error}) = P(\text{reject } H_0 \mid H_0 \text{ is true})$$

$$\beta = P(\text{type II error}) = P(\text{fail to reject } H_0 \mid H_0 \text{ is false})$$
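The meaning of α can be illustrated by simulation: generate many samples for which H0 is true and count how often the test rejects. To stay within the standard library, this sketch swaps in a two-sided z-test of H0: μ = 0 with known σ (the "variances known" case listed under Other Topics) rather than the t-test. The sample size, σ, replication count, and seed are arbitrary. The rejection rate should be close to α.

```python
import random
from statistics import NormalDist

def type_i_error_rate(alpha=0.05, n=10, sigma=1.0, reps=20_000, seed=4):
    """Estimate P(reject H0 | H0 true) for a two-sided z-test of H0: mu = 0
    with known sigma, by simulating samples for which H0 really holds."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    rejections = 0
    for _ in range(reps):
        y = [rng.gauss(0.0, sigma) for _ in range(n)]   # H0 is true: mu = 0
        z0 = (sum(y) / n) / (sigma / n ** 0.5)
        rejections += abs(z0) > z_crit
    return rejections / reps

rate = type_i_error_rate()
print(rate)  # should be close to alpha = 0.05
```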

Two-Sample-t-Test

Power of the test:

$$\text{Power} = 1 - \beta = P(\text{reject } H_0 \mid H_0 \text{ is false})$$

Type I error probability α = significance level

1 - α = confidence level

Two-Sample-t-Test

Two-sample-t-test

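Hypotheses:

$$H_0: \mu_1 = \mu_2$$

$$H_1: \mu_1 \neq \mu_2$$

Test statistic:

$$t_0 = \frac{\bar{y}_1 - \bar{y}_2}{S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

where

$$S_p^2 = \frac{(n_1 - 1) S_1^2 + (n_2 - 1) S_2^2}{n_1 + n_2 - 2}$$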

Two-Sample-t-Test

$$\bar{y} = \frac{1}{n} \sum_{i=1}^{n} y_i \quad \text{estimates the population mean } \mu$$

$$S^2 = \frac{1}{n - 1} \sum_{i=1}^{n} (y_i - \bar{y})^2 \quad \text{estimates the variance } \sigma^2$$


Example: Summary Statistics

Formulation 1, “New recipe”: ȳ1 = 16.76, S1² = 0.100, S1 = 0.316, n1 = 10

Formulation 2, “Original recipe”: ȳ2 = 17.04, S2² = 0.061, S2 = 0.248, n2 = 10

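Two-Sample-t-Test--How the Two-Sample t-Test Works:

Use the sample means to draw inferences about the population means. The difference in sample means is

$$\bar{y}_1 - \bar{y}_2 = 16.76 - 17.04 = -0.28$$

Standard deviation of the difference in sample means: a sample mean has variance

$$\sigma^2_{\bar{y}} = \frac{\sigma^2}{n}$$

so for two independent samples the difference in sample means has variance σ1²/n1 + σ2²/n2

This suggests a statistic:

$$Z_0 = \frac{\bar{y}_1 - \bar{y}_2}{\sqrt{\frac{\sigma_1^2}{n_1} + \frac{\sigma_2^2}{n_2}}}$$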

Two-Sample-t-Test--How the Two-Sample t-Test Works:

Use S1² and S2² to estimate σ1² and σ2²

The previous ratio becomes

$$\frac{\bar{y}_1 - \bar{y}_2}{\sqrt{\frac{S_1^2}{n_1} + \frac{S_2^2}{n_2}}}$$

However, we have the case where σ1² = σ2² = σ²

Pool the individual sample variances:

$$S_p^2 = \frac{(n_1 - 1) S_1^2 + (n_2 - 1) S_2^2}{n_1 + n_2 - 2}$$

Two-Sample-t-Test--How the Two-Sample t-Test Works:

The test statistic is

$$t_0 = \frac{\bar{y}_1 - \bar{y}_2}{S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$

Values of t0 that are near zero are consistent with the null hypothesis

Values of t0 that are very different from zero are consistent with the alternative hypothesis

t0 is a “distance” measure: how far apart the averages are, expressed in standard deviation units

Notice the interpretation of t0 as a signal-to-noise ratio

The Two-Sample (Pooled) t-Test

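$$S_p^2 = \frac{(n_1 - 1) S_1^2 + (n_2 - 1) S_2^2}{n_1 + n_2 - 2} = \frac{9(0.100) + 9(0.061)}{10 + 10 - 2} = 0.081$$

$$S_p = 0.284$$

$$t_0 = \frac{\bar{y}_1 - \bar{y}_2}{S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}} = \frac{16.76 - 17.04}{0.284 \sqrt{\frac{1}{10} + \frac{1}{10}}} = -2.20$$

The two sample means are a little over two standard deviations apart

Is this a "large" difference?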
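The arithmetic above can be reproduced from the summary statistics alone. This sketch (the function name `pooled_t` is hypothetical) carries S_p unrounded, so t0 comes out near -2.21 rather than the -2.20 obtained with S_p rounded to 0.284; the difference is rounding only.

```python
import math

def pooled_t(ybar1, s1_sq, n1, ybar2, s2_sq, n2):
    """Return (S_p^2, S_p, t0, degrees of freedom) for the pooled two-sample t-test."""
    df = n1 + n2 - 2
    sp_sq = ((n1 - 1) * s1_sq + (n2 - 1) * s2_sq) / df
    sp = math.sqrt(sp_sq)
    t0 = (ybar1 - ybar2) / (sp * math.sqrt(1 / n1 + 1 / n2))
    return sp_sq, sp, t0, df

# Mortar summary statistics: formulation 1 vs. formulation 2
sp_sq, sp, t0, df = pooled_t(16.76, 0.100, 10, 17.04, 0.061, 10)
print(f"Sp^2 = {sp_sq:.4f}, Sp = {sp:.3f}, t0 = {t0:.3f}, df = {df}")
```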

Two-Sample-t-Test

P-value: the smallest level of significance that would lead to rejection of the null hypothesis

Computer output:

Two-Sample T-Test and CI

Sample  N   Mean    StDev  SE Mean
1       10  16.760  0.316  0.10
2       10  17.040  0.248  0.078

Difference = mu (1) - mu (2)

Estimate for difference: -0.280

95% CI for difference: (-0.547, -0.013)

T-Test of difference = 0 (vs not =): T-Value = -2.20  P-Value = 0.041  DF = 18

Both use Pooled StDev = 0.2840

William Sealy Gosset (1876–1937)

Gosset's interest in barley cultivation led him to speculate that design of experiments should aim not only at improving the average yield but also at breeding varieties whose yield was insensitive (robust) to variation in soil and climate.

Developed the t-test (1908)

Gosset was a friend of both Karl Pearson and R.A. Fisher, an achievement, for each had a monumental ego and a loathing for the other.

Gosset was a modest man who cut short an admirer with the comment that “Fisher would have discovered it all anyway.”

The Two-Sample (Pooled) t-Test

So far, we haven’t really done any “statistics”

We need an objective basis for deciding how large the test statistic t0 really is

In 1908, W. S. Gosset derived the reference distribution for t0, called the t distribution

Tables of the t distribution: see textbook appendix

t0 = -2.20

The Two-Sample (Pooled) t-Test

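t0 = -2.20

A value of t0 between -2.101 and 2.101 is consistent with equality of means (2.101 = t0.025,18, the upper 2.5% point of the t distribution with n1 + n2 - 2 = 18 degrees of freedom, so these limits correspond to α = 0.05)

It is possible for the means to be equal and t0 to exceed 2.101 or fall below -2.101, but it would be a “rare event”, which leads to the conclusion that the means are different

Could also use the P-value approach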

The Two-Sample (Pooled) t-Test

t0 = -2.20

The P-value is the area (probability) in the tails of the t distribution beyond -2.20 plus the probability beyond +2.20 (it’s a two-sided test)

The P-value is a measure of how unusual the value of the test statistic is, given that the null hypothesis is true

The P-value is the risk of wrongly rejecting the null hypothesis of equal means (it measures the rareness of the event)

The P-value in our problem is P = 0.042
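Statistical software or a t table normally supplies the critical value and the P-value. As a standard-library sketch, the code below integrates the t density numerically (Simpson's rule) to obtain the t CDF, computes the two-sided P-value for t0 = -2.20 with 18 degrees of freedom, and finds t0.025,18 by bisection. Expect a P-value of about 0.04, in line with the values quoted in these notes, and a critical value of about 2.101. The integration step count and the bisection bracket are illustrative choices.

```python
import math

def t_pdf(t, k):
    """Density of the t distribution with k degrees of freedom."""
    log_c = math.lgamma((k + 1) / 2) - math.lgamma(k / 2) - 0.5 * math.log(k * math.pi)
    return math.exp(log_c - (k + 1) / 2 * math.log1p(t * t / k))

def t_cdf(x, k, n=2_000):
    """P(T <= x) = 1/2 + integral of the density from 0 to x (Simpson's rule, n even)."""
    h = x / n
    s = t_pdf(0.0, k) + t_pdf(x, k)
    s += sum((4 if i % 2 else 2) * t_pdf(i * h, k) for i in range(1, n))
    return 0.5 + s * h / 3

def two_sided_p_value(t0, k):
    """Probability in both tails beyond |t0|."""
    return 2 * (1 - t_cdf(abs(t0), k))

def t_critical(alpha, k, hi=50.0):
    """Upper alpha/2 point t_{alpha/2,k}, located by bisection on the CDF."""
    lo = 0.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if 1 - t_cdf(mid, k) > alpha / 2:
            lo = mid   # tail still too large: the critical value lies further out
        else:
            hi = mid
    return (lo + hi) / 2

print(two_sided_p_value(-2.20, 18))  # about 0.04
print(t_critical(0.05, 18))          # about 2.101
```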

Checking Assumptions: The Normal Probability Plot
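A normal probability plot pairs each ordered observation with a standard normal quantile. If the points fall near a straight line, the normality assumption is reasonable. This sketch uses the plotting position (j - 0.5)/n, one common convention (an assumption, since software packages differ), together with hypothetical data.

```python
from statistics import NormalDist

def normal_plot_points(data):
    """Pair the j-th smallest observation with z_j = Phi^{-1}((j - 0.5) / n)."""
    n = len(data)
    std_normal = NormalDist()
    return [(y, std_normal.inv_cdf((j - 0.5) / n))
            for j, y in enumerate(sorted(data), start=1)]

data = [12, 15, 11, 18, 14, 13, 16]  # hypothetical observations
for y, z in normal_plot_points(data):
    print(f"{y:5.1f}  {z:+.3f}")
```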

Two-sample-t-test--Choice of sample size

The choice of sample size and the probability of type II error β are closely connected

Suppose that we are testing the hypotheses

$$H_0: \mu_1 = \mu_2$$

$$H_1: \mu_1 \neq \mu_2$$

and the means are not equal, so that δ = μ1 - μ2 ≠ 0

Because H0 is not true, we care about the probability of wrongly failing to reject H0, i.e., the type II error

Two-sample-t-test--Choice of sample size

Define

$$d = \frac{|\mu_1 - \mu_2|}{2\sigma} = \frac{|\delta|}{2\sigma}$$

One can find the sample size by varying the power (1 - β) and δ

Two-sample-t-test--Choice of

sample size

Testing mean 1 = mean 2 (versus not =)

Calculating power for mean 1 = mean 2 + difference

Alpha = 0.05, assumed standard deviation = 0.25

Difference | Sample Size | Target Power | Actual Power
0.25 | 27 | 0.95 | 0.950077
0.25 | 23 | 0.90 | 0.912498
0.25 | 10 | 0.55 | 0.562007
0.50 | 8 | 0.95 | 0.960221
0.50 | 7 | 0.90 | 0.929070
0.50 | 4 | 0.55 | 0.656876

The sample size is for each group.
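The table above comes from an exact power calculation based on the t distribution. A quick cross-check is the common normal-approximation formula n ≈ 2(z_{α/2} + z_β)²σ²/δ² per group. This is a swapped-in approximation, not the method behind the table. For δ = 0.25 and 0.50 at power 0.95 and 0.90 it gives 26, 22, 7, and 6 per group, each one less than the t-based values, mainly because it uses normal rather than t quantiles.

```python
import math
from statistics import NormalDist

def n_per_group_normal_approx(delta, sigma, alpha=0.05, power=0.90):
    """Per-group sample size for a two-sided, two-sample test of H0: mu1 = mu2,
    using n = 2 * (z_{alpha/2} + z_beta)^2 * (sigma / delta)^2, rounded up."""
    z = NormalDist().inv_cdf
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 * (sigma / delta) ** 2
    return math.ceil(n)

for delta in (0.25, 0.50):
    for power in (0.95, 0.90):
        print(delta, power, n_per_group_normal_approx(delta, sigma=0.25, power=power))
```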


An Introduction to Experimental Design - How to sample?

A completely randomized design is an experimental design in which the treatments are randomly assigned to the experimental units.

If the experimental units are heterogeneous, blocking can be used to form homogeneous groups, resulting in a randomized block design.
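Random assignment of treatments to units can be done by shuffling a list of treatment labels. In this sketch the labels and the layout of 10 units per treatment mirror the mortar example but are illustrative. `random.Random(seed).shuffle` gives a reproducible run order.

```python
import random

def completely_randomized(treatments, reps, seed=None):
    """Randomly assign each treatment to `reps` experimental units.
    Position i of the returned list is the treatment for unit i + 1 (run order)."""
    plan = [t for t in treatments for _ in range(reps)]
    random.Random(seed).shuffle(plan)
    return plan

# Hypothetical labels: two formulations, 10 units each
plan = completely_randomized(["modified", "original"], reps=10, seed=5)
for unit, treatment in enumerate(plan, start=1):
    print(unit, treatment)
```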

Completely Randomized Design - How to sample?

Recall simple random sampling

Finite populations are often defined by lists such as:

Organization membership roster

Credit card account numbers

Inventory product numbers

Completely Randomized Design - How to sample?

A simple random sample of size n from a finite population of size N is a sample selected such that each possible sample of size n has the same probability of being selected.

Replacing each sampled element before selecting subsequent elements is called sampling with replacement.

Sampling without replacement is the procedure used most often.
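Python's standard library covers both sampling schemes: `random.Random.sample` draws without replacement (every subset of size n equally likely) and `random.Random.choices` draws with replacement. The population of 100 labeled units and the seed are hypothetical. `math.comb` counts the possible samples N!/[(N - n)! n!].

```python
import math
import random

population = list(range(1, 101))   # hypothetical finite population, N = 100 labeled units
rng = random.Random(6)

# Without replacement: every subset of size n is equally likely; no unit repeats
without = rng.sample(population, k=8)
# With replacement: each draw is returned before the next, so units may repeat
with_repl = rng.choices(population, k=8)

print(without, with_repl)
print(math.comb(len(population), 8))  # number of possible samples of size 8
```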

Completely Randomized Design - How to sample?

In large sampling projects, computer-generated random numbers are often used to automate the sample selection process.

Excel provides a function for generating random numbers in its worksheets.

Infinite populations are often defined by an ongoing process whereby the elements of the population consist of items generated as though the process would operate indefinitely.

Completely Randomized Design - How to sample?

A simple random sample from an infinite population is a sample selected such that the following conditions are satisfied:

Each element selected comes from the same population.

Each element is selected independently.

Completely Randomized Design - How to sample?

Random numbers: the numbers in a random number table are random, so all of these four-digit numbers are equally likely.

Completely Randomized Design - How to sample?

Many experiments contain a critical error in random sampling.

Example: taking 8 samples from a production line in one day

Wrong method: take one sample every 3 hours. This is not random!

Completely Randomized Design - How to sample?

Example: taking 8 samples from a production line

Correct methods:

Take one sample at a random time within each 3-hour interval (rather than exactly every 3 hours). This is correct, but it is not simple random sampling.

Take 8 samples at random times within the 24 hours. The population has at most 24 elements (hours), so read two-digit random numbers:

63, 27, 15, 99, 86, 71, 74, 45, 10, 21, 51, …

Numbers larger than 24 are discarded

So the eight samples are collected at hours 15, 10, 21, …
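The random-number-table procedure in the example can be written as a small filter. This sketch assumes two rules the slide does not state: values outside 1..24 (including 00) are discarded, and repeated values are skipped so that the 8 hours are distinct (sampling without replacement). Fed only the numbers listed in the example, it returns 15, 10, 21, because the rest of the stream is elided there. In the second call, a pseudo-random stream from Python's `random` module stands in for the table.

```python
import random

def select_hours(two_digit_numbers, k=8, max_value=24):
    """Read random numbers in order, keep those in 1..max_value, skip repeats,
    and stop once k distinct values have been collected."""
    chosen = []
    for number in two_digit_numbers:
        if 1 <= number <= max_value and number not in chosen:
            chosen.append(number)
            if len(chosen) == k:
                break
    return chosen

# The numbers listed in the example (the rest of that stream is elided)
print(select_hours([63, 27, 15, 99, 86, 71, 74, 45, 10, 21, 51]))  # [15, 10, 21]

# A pseudo-random two-digit stream standing in for a random number table
rng = random.Random(7)
stream = (rng.randint(0, 99) for _ in range(10_000))
hours = select_hours(stream)
print(sorted(hours))
```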

Completely Randomized Design - How to sample?

In a completely randomized design, samples are collected by simple random sampling.

Only one factor is considered in a completely randomized design, with k levels of this factor.

Importance of the t-Test

Provides an objective framework for simple comparative experiments

Could be used to test all relevant hypotheses in a two-level factorial design, because all of these hypotheses involve the mean response at one “side” of the cube versus the mean response at the opposite “side” of the cube

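Two-sample-t-test--Confidence Intervals

Hypothesis testing gives an objective statement concerning the difference in means, but it doesn’t specify “how different” they are

General form of a confidence interval:

$$L \le \theta \le U \quad \text{where} \quad P(L \le \theta \le U) = 1 - \alpha$$

The 100(1 - α)% confidence interval on the difference in two means:

$$\bar{y}_1 - \bar{y}_2 - t_{\alpha/2,\, n_1 + n_2 - 2}\, S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}} \;\le\; \mu_1 - \mu_2 \;\le\; \bar{y}_1 - \bar{y}_2 + t_{\alpha/2,\, n_1 + n_2 - 2}\, S_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$$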
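The interval can be computed from the summary statistics of the mortar example. This sketch takes t0.025,18 = 2.101 as an input (read from a t table) instead of computing it. With the rounded summary statistics it reproduces the 95% interval (-0.547, -0.013) shown in the computer output.

```python
import math

def pooled_ci(ybar1, s1_sq, n1, ybar2, s2_sq, n2, t_crit):
    """100(1 - alpha)% CI on mu1 - mu2; t_crit is t_{alpha/2, n1+n2-2}."""
    sp = math.sqrt(((n1 - 1) * s1_sq + (n2 - 1) * s2_sq) / (n1 + n2 - 2))
    half_width = t_crit * sp * math.sqrt(1 / n1 + 1 / n2)
    diff = ybar1 - ybar2
    return diff - half_width, diff + half_width

# Mortar summary statistics, with t_{0.025,18} = 2.101 from a t table
low, high = pooled_ci(16.76, 0.100, 10, 17.04, 0.061, 10, t_crit=2.101)
print(f"({low:.3f}, {high:.3f})")  # (-0.547, -0.013)
```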

Two-sample-t-test--Confidence Intervals--example

Other Topics

Hypothesis testing when the variances are known: two-sample z-test

One-sample inference: one-sample z or one-sample t tests

Hypothesis tests on variances: chi-square test

Paired experiments
