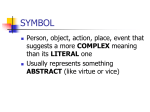

Cognitive model wikipedia , lookup

The Symbol Grounding Problem

A Symbol System is:
1. A set of arbitrary "physical tokens"
2. Manipulated on the basis of "explicit rules" that are
3. Likewise physical tokens and strings of tokens
4. Manipulation is based purely on the shape of the symbol tokens
5. Consists of "rulefully combining" and recombining symbol tokens
6. Primitive atomic symbol tokens
7. Composite symbol-token strings
8. The entire system is "semantically interpretable"
(Harnad, 1990)

A thermostat is not a symbol system
– Behaving in accordance with a rule (implicit) is not necessarily following a rule (explicit)
– Semantic interpretability must be coupled with explicit representation (2), syntactic manipulability (4), and systematicity (8) in order to be symbolic

Computers are symbol systems
– A binary command 11000110
– Says “copy memory in the register denoted by the fifth and sixth positions to the register denoted by the seventh and eighth positions”

Strong AI
– Symbol strings capture mental phenomena
– The symbolic level is independent of its physical realization

Computationalism
– A function is effectively computable if and only if it is Turing computable
– Persons are Turing machines
– The Turing Test (or Total Turing Test) is valid
– We will succeed in producing artificial persons

The Turing Test

The Total Turing Test

Functionalism
– The mind is what the brain does
– If we find the key functions for intelligence then we can create algorithms to perform those functions
– The functions capture mental phenomena

Implications for Computers
– ANY computable function can be implemented as a lookup table
– All human inputs could be mapped to appropriate outputs
– AI is about computing the functions that underlie intelligence within the constraints of reality

Grounding
– Meaning is coupled to environmental states

Meaning of ‘meaning’ #1
– Appropriate action results from input

Meaning of ‘meaning’ #2
– The agent knows why it produces a particular action as a result of an input and a given situation
  – Goals
  – Planning
  – Explicit knowledge of what states result from its actions
  – Consciousness??

Meaning of ‘meaning’ #3
– Symbols derive their meaning solely based on what other symbols they are associated with

Meaning of ‘meaning’ #4
– The system contains concepts (representations) that are discernible as appropriately matching the environment

Coupled to the Environment
Several interpretations:
– The shape of the symbols captures some form of the sensory input
– The symbol system must physically exist within the environment without “software”
– The agent and environment mutually perturb each other

Is a thermostat grounded?
– Meaning #1 – Yes
– Meaning #2, 3, and 4 – No
– Coupled – Yes

Humans
– Humans are grounded
– Are humans symbol systems?

The Problem
– Given a symbol system, can the symbols be truly grounded?

"The question of whether a computer can think is no more interesting than the question of whether a submarine can swim." -- E. W. Dijkstra

Criteria for Symbol Grounding
Lee McCauley

Definitions
Grounding – a representation is grounded when the agent can reason about all of the perceivable features of the entity represented and can construct an instantiation of the category
– “can reason” does not mean “will reason”
– “an instantiation of a category” does not mean recreating a particular episode

Definitions (cont.)
Meaning – a grounded representation’s associations with other grounded representations
– “associations” include all possible relationships
  – Logical (isa, hasa, etc.)
  – Temporal
  – Spatial
  – Implicit

What properties are necessary for symbol grounding?
– Primitives
– Iconic Representations/Perceptual Symbols
– Structural Coupling
– Systemic Learning

Primitives
The agent must have some primitive semantic elements
– Perceptual primitives
– Primitive motivators
– Primitive actions
Why primitive motivators?
– Value judgment is impossible without them.

Iconic/Perceptual Symbols
Every representation in the system needs to be an icon of the features that feed into it
– This is very much (although not exactly) like Barsalou’s perceptual symbols
– No matter what depth the representation might be at, it “resembles” the salient features that comprise it
– There are no truly arbitrary symbols

Structural Coupling/Synchronization
– The agent is embodied within its environment
– The agent can change the environment through its actions in such a way that the environment’s impact on the agent is modified (mutual perturbation)
– Without this, the links through iconic representations break down

Systemic Learning
Systemic learning means that the structure of the representations changes, not just the contents
– Examples:
  – Neural networks
  – Some symbolic systems (would be possible in Lisp)
For any complex enough agent in a complex enough environment to be interesting, the iconic representations and embodiment require a systemic learning mechanism to change how they think (not just what they think)

Motivational adaptation (a form of Systemic Learning)
– Any sufficiently complex environment/agent interaction has intermediate goals that are not expressed by the primitive motivators
– A grounded agent should be able to modify its motivational structure in a way that increases its ability to satisfy its primitive motivators

Properties: What’s missing or shouldn’t be present?
– Primitives
– Iconic Representations/Perceptual Symbols
– Structural Coupling
– Systemic Learning
– ??

Quotes
"It is possible to store the mind with a million facts and still be entirely uneducated." -- Alec Bourne

"Knowledge must be gained by ourselves. Mankind may supply us with the facts; but the results, even if they agree with previous ones, must be the work of our mind." -- Benjamin "Dizzy" Disraeli (1804-81), [First Earl of Beaconsfield] British politician

Quotes (cont.)
“Computers are useless. They can only give you answers.” -- Pablo Picasso (1881 - 1973)

"The best computer is a man, and it's the only one that can be mass-produced by unskilled labor." -- Wernher Magnus Maximilian von Braun (1912-77), German-born American rocket engineer

Quotes (cont.)
“What is real? How do you define real? If you're talking about what you can feel, what you can smell, what you can taste and see...then real is simply ... electrical signals interpreted by your brain.” -- Morpheus, The Matrix

Quotes (cont.)
"The factory of the future will have two employees: a man and a dog. The man's job will be to feed the dog. The dog's job will be to prevent the man from touching any of the automated equipment." -- Warren G. Bennis (b. 1925), American writer, educator, University of Southern California sociologist
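
Worked example for "Computers are symbol systems": the binary command 11000110 can be read as a purely shape-based manipulation of tokens. The sketch below is only an illustration. The slide specifies what positions five to eight denote; treating the first four positions (1100) as a "copy" opcode, the four-register machine, and the names `decode` and `execute` are assumptions made here.

```python
def decode(command):
    """Split an 8-character binary command into (opcode, source, destination)."""
    if len(command) != 8 or set(command) - {"0", "1"}:
        raise ValueError("expected an 8-character string of 0s and 1s")
    opcode = command[:4]           # positions 1-4: assumed to name the operation
    source = int(command[4:6], 2)  # positions 5-6: register to copy from
    dest = int(command[6:8], 2)    # positions 7-8: register to copy to
    return opcode, source, dest


def execute(command, registers):
    """Apply the command to a list of register values, based only on token shape."""
    opcode, source, dest = decode(command)
    if opcode != "1100":  # hypothetical encoding: 1100 means "copy"
        raise ValueError("unknown opcode: " + opcode)
    registers[dest] = registers[source]
    return registers


registers = [10, 20, 30, 40]
execute("11000110", registers)
print(registers)  # [10, 20, 20, 40]
```

Nothing in `execute` consults what the register contents mean. The rule fires on the shape of the token string alone, which is item 4 of the symbol system definition.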
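
Worked example for "Implications for Computers": the lookup-table claim is easiest to see for a function over a finite set of inputs. The sketch below assumes such a finite domain (all 3-bit inputs) and uses a parity function as a stand-in. The names `parity` and `tabulate` are illustrative and do not come from the slides.

```python
from itertools import product


def parity(bits):
    """Compute the function directly: 1 if an odd number of bits are set."""
    return sum(bits) % 2


def tabulate(func, domain):
    """Precompute func for every input, giving a pure input -> output table."""
    return {x: func(x) for x in domain}


domain = list(product((0, 1), repeat=3))  # every 3-bit input
table = tabulate(parity, domain)

# The table reproduces the function on every input without computing anything.
assert all(table[x] == parity(x) for x in domain)
print(len(table))        # 8
print(table[(1, 0, 1)])  # 0
```

The table for n-bit inputs has 2**n entries, so it doubles with every added bit. That growth is one reading of "within the constraints of reality" on the slide.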
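
Worked example for "Is a thermostat grounded?" and mutual perturbation: the toy simulation below shows a thermostat and a room perturbing each other. The setpoint, heat gain, and cooling rate are made-up numbers chosen only so the loop is easy to follow, and the function names are hypothetical.

```python
def thermostat(temperature, setpoint=20.0):
    """Agent: the heater is on exactly when the room is below the setpoint."""
    return temperature < setpoint


def environment(temperature, heater_on, outside=10.0):
    """Environment: the room drifts toward the outside temperature,
    and the heater (the agent's action) adds heat."""
    drift = 0.1 * (outside - temperature)
    heat = 1.5 if heater_on else 0.0
    return temperature + drift + heat


def simulate(start, steps):
    """Run the loop: the reading drives the action, and the action changes the next reading."""
    temperature = start
    history = []
    for _ in range(steps):
        heater_on = thermostat(temperature)
        temperature = environment(temperature, heater_on)
        history.append((temperature, heater_on))
    return history


for temperature, heater_on in simulate(15.0, 12):
    print(round(temperature, 2), "heater on" if heater_on else "heater off")
```

The thermostat behaves in accordance with the rule "heat when cold", but the rule is not itself a token the system manipulates. That matches the slide's verdict: coupled to the environment, yet not a symbol system.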