Notes on Applied Linear Regression - Stat

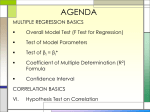
