* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download sequential decision models for expert system optimization

Pattern recognition wikipedia , lookup

Ecological interface design wikipedia , lookup

Embodied cognitive science wikipedia , lookup

Clinical decision support system wikipedia , lookup

Collaborative information seeking wikipedia , lookup

History of artificial intelligence wikipedia , lookup

Catastrophic interference wikipedia , lookup

Gene expression programming wikipedia , lookup

Personal knowledge base wikipedia , lookup

Mathematical model wikipedia , lookup

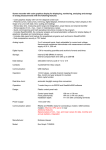

SEQUENTIAL DECISION MODELS FOR EXPERT SYSTEM OPTIMIZATION

Vijay S. Mookerjee and Michael V. Mannino
Department of Management Science
01/14/97

Abstract

Sequential decision models are an important element of expert system optimization when the cost or time to collect inputs is significant and inputs are not known until the system operates. Many expert systems in business, engineering, and medicine have benefited from sequential decision technology. In this survey, we unify the disparate literature on sequential decision models to improve its comprehensibility and accessibility. We separate the formulation of sequential decision models from solution techniques. For model formulation, we classify sequential decision models by objective (cost minimization versus value maximization), knowledge source (rules, data, belief network, etc.), and optimized form (decision tree, path, input order). A wide variety of sequential decision models are discussed within this taxonomy. For solution techniques, we demonstrate how search methods and heuristics are influenced by the economic objective, knowledge source, and optimized form. We discuss open research problems to stimulate additional research and development.

1. Introduction

Expert systems have become an important decision making tool in many organizations. Benefits attributed to expert systems include increased quality, reduced decision making time, and reduced downtime. Successful expert systems have been reported in many areas, such as ticket auditing [SS91], troubleshooting [HBR95], risk analysis [Newq90], computer system design [BO89], and building construction [TK91]. Development of these systems has benefited from a large body of research on technologies that reduce the cost to construct and maintain them.
As these technologies mature and lead to widespread deployment of expert systems, obtaining maximum value from their operation becomes more important. Some expert systems fail to deliver maximum value because appropriate optimization models have not been used. In some cases the developer is unaware of available models; in other cases appropriate models do not exist.

Sequential Decision Models (SDMs) provide a powerful framework to improve the operation of expert systems. The objective of a sequential decision model is to optimize cost or value over a horizon of sequential decisions. In sequential decision making, the decision maker is assumed to possess a set of beliefs about the state of nature and a set of payoffs for the alternatives. The decision maker can either make an immediate decision given current beliefs or make a costly observation to revise those beliefs. The next observation (input) to acquire depends on the values of previously acquired inputs. For example, a physician collects relevant information by asking questions or conducting clinical tests in an order that depends on the specific case. Once enough information has been acquired, the decision maker selects the best alternative.

Any expert system in which the cost or time to collect inputs is significant, and in which inputs are not known until the system operates, can benefit from an appropriate sequential decision model. Many expert systems in business, engineering, and medicine have costly inputs that may not be available before the system operates. In addition to input costs, the value of decisions can affect the operation of an expert system. Decision value depends on the costs of wrong decisions and the benefits (negative costs) of correct decisions. When decision values vary, a sequential decision model can trade off the cost of collecting inputs against decision value.
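The basic choice described above, deciding now or paying for an observation first, can be made concrete with a one-step (myopic) expected-cost comparison. The Python sketch below is illustrative only: the two states, the single binary test, its likelihoods, all costs, and the function names `best_decision` and `cost_after_observing` are hypothetical, and a full sequential decision model would look further ahead than one observation.

```python
# Hypothetical one-step comparison: decide now, or pay for one observation first.


def best_decision(belief, cost):
    """Return (decision, expected cost) with the lowest expected cost under `belief`.

    belief: dict mapping state -> probability
    cost:   dict mapping (state, decision) -> cost of that decision in that state
    """
    decisions = sorted({d for (_, d) in cost})
    expected = {d: sum(p * cost[(s, d)] for s, p in belief.items()) for d in decisions}
    best = min(decisions, key=expected.get)
    return best, expected[best]


def cost_after_observing(belief, likelihood, cost, obs_cost):
    """Expected cost of paying `obs_cost`, observing one test, then deciding.

    likelihood: dict mapping (outcome, state) -> P(outcome | state)
    """
    total = obs_cost
    for outcome in sorted({o for (o, _) in likelihood}):
        p_outcome = sum(likelihood[(outcome, s)] * p for s, p in belief.items())
        if p_outcome == 0:
            continue
        # Bayes' rule: revised beliefs after seeing this outcome.
        posterior = {s: likelihood[(outcome, s)] * p / p_outcome for s, p in belief.items()}
        total += p_outcome * best_decision(posterior, cost)[1]
    return total


# Hypothetical example: two states of nature, two decisions, one binary test.
belief = {"good": 0.7, "bad": 0.3}
cost = {("good", "grant"): -10, ("good", "refuse"): 10,
        ("bad", "grant"): 50, ("bad", "refuse"): -10}
likelihood = {("pos", "good"): 0.9, ("neg", "good"): 0.1,
              ("pos", "bad"): 0.2, ("neg", "bad"): 0.8}

now = best_decision(belief, cost)
observe = cost_after_observing(belief, likelihood, cost, obs_cost=2.0)
print("decide now:", now)
print("observe first:", observe)
print("observe" if observe < now[1] else "decide now")
```

With these numbers, deciding immediately favors refusing (expected cost 4), while paying 2 for the test lowers the expected cost to -3, so the observation is worth acquiring.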
Cost savings and increased user satisfaction from using sequential decision models have been reported in many applications. In [PA90], expected test time reductions of 80% are reported for repairing power supply systems, compared with an existing test procedure. Simulation results in an automobile domain [HBR95] demonstrated expected cost reductions of $144 per case compared with a static repair sequence. In [BH95], increased technician satisfaction and ease of use are reported for an operating system support domain using a sequential decision model coupled with a Bayesian belief network.

In this survey, we examine the role of sequential decision models in the optimization of expert systems. Figure 1 depicts the application of a sequential decision model to expert system optimization. Sequential decision models convert the knowledge of an expert system into an optimized form. Two objectives can apply to expert system optimization. Cost minimization is appropriate when the decisions are fixed by the knowledge source or when decision value is uniform. When these conditions do not hold, value maximization is appropriate.

Depending on the optimized form, objective, and knowledge source, an appropriate search method and heuristics are chosen to solve the sequential decision model. The most general optimized form of an expert system resulting from an SDM is a decision tree. A variety of search methods, including greedy search, informed optimal search such as AO*, and dynamic programming, have been used to generate decision trees. In solving a sequential decision model, heuristic measures are used for selecting an input, terminating a path (stopping), and choosing a goal as the terminating node of a path (classification). Sometimes the optimized form is less general than a tree. A path may be produced when there are space or time constraints, for example, when the optimized form must be produced on-line.
A more restrictive optimized form is an input order, in which the next input acquired is insensitive to the states of previous inputs. Greedy search is suitable for the less general optimized forms (path and input order).

[Figure 1: Expert System Optimization Using Sequential Decision Models. Components: Knowledge Source (Human Expert, Training Cases, Rules, Belief Network, etc.); Economic Objectives (Cost Minimization, Value Maximization); Sequential Decision Model; Search Methods (greedy search, informed optimal search, dynamic programming, etc.); Heuristics and Measures (input selection, stopping, classification); Optimized Expert System (decision tree, path, input order).]

The goal of this survey is to improve understanding of sequential decision models for expert system optimization. We would like to encourage wider use of sequential decision models and to clarify their underlying assumptions so that they can be applied appropriately to expert system optimization. We would also like to spur research into problems not yet covered by sequential decision models. To accomplish this goal, we separate the factors affecting the formulation of sequential decision models from issues of efficient solution. We classify sequential decision models by economic objective and knowledge source, and we demonstrate how search methods and heuristics are influenced by the economic objective, the knowledge source, and the kind of optimized form sought.

The remainder of this survey is organized as follows. Section 2 defines a taxonomy of sequential decision models and classifies existing models. Sections 3 and 4 survey solution techniques for cost minimization and value maximization models, respectively. Section 5 discusses open research questions. Section 6 summarizes the work.

2. Formulation of Sequential Decision Models

In this section, we present an informal overview of sequential decision models followed by a more precise description.
We first present an example expert system and describe how input costs and decision value can affect its operation. We then develop a taxonomy of sequential decision models based on economic objective, knowledge source, and application domain. We conclude the section with a more precise description of cost minimization and value maximization models.

2.1 Credit Granting Example

To place our discussion of sequential decision models in context, we use a rule-based expert system as an example. Consider the simple credit granting expert system depicted in Table 1. There are four inputs (income, education, employment, and references) that the user may have to provide and three decisions (grant loan, refuse loan, and investigate further) that the system makes. When a new case is presented, the expert system consults its knowledge source (here, a set of rules) using an inference strategy such as forward or backward chaining.

Table 1: Knowledge Base for a Simple Credit-Granting Expert System

| Rule | If | Then |
|---|---|---|
| 1 | Sound-Financial-Status | Grant-Loan |
| 2 | Future-Repayment-Potential | Investigate-Further |
| 3 | Doubtful-Repayment-Potential | Investigate-Further |
| 4 | Poor-Financial-Status | Refuse-Loan |
| 5 | Income = "H" | Sound-Financial-Status |
| 6 | Income = "M" and Employed and BachDegree | Sound-Financial-Status |
| 7 | Income = "M" and not Employed and BachDegree | Future-Repayment-Potential |
| 8 | Income = "M" and Employed and not BachDegree | Doubtful-Repayment-Potential |
| 9 | Income = "L" and not BachDegree and References = "G" | Future-Repayment-Potential |
| 10 | Income = "L" and not Employed and References = "G" | Future-Repayment-Potential |
| 11 | Income = "L" and References = "B" | Poor-Financial-Status |
| 12 | Income = "M" and not Employed and not BachDegree | Poor-Financial-Status |
| 13 | References = "G" and Employed and BachDegree | Sound-Financial-Status |

The optimized form resulting from a sequential
decision model can replace standard inference strategies. In Figure 2, the sequential decision model generates a decision tree that provides a compiled inference strategy. A decision tree is a conditional ordering of inputs in which leaf nodes represent decisions, non-leaf nodes represent inputs to collect, and arcs represent values of inputs. In Figure 2, the first input to collect is income (I1), followed by either education level (I2) if income is moderate or references (I4) if income is low. When the knowledge source is a set of rules, the decision tree should produce the same decisions as the rules. The goal of an SDM is to produce a form (such as a decision tree or path) that optimizes an economic objective using a given knowledge source.

2.2 Economic Factors

It is beneficial to use a sequential decision model if inputs are costly and not available before the system operates. In the credit granting example, at least some of the inputs may be costly. For example, contacting and evaluating references involves employee time and possibly communication costs, and verifying employment history may involve further expense. Some inputs may not be available before the system operates; for example, references and employment history would probably not be available for new customers.

[Figure 2: A Decision Tree for Credit Granting. Legend: I1: Income, I2: BachDegree, I3: Employment, I4: References; D1: Grant, D2: Investigate, D3: Refuse.]

The conditions involving input cost and availability may at first seem not to apply to some expert systems. For example, in credit granting, a financial institution can require customers to pay a loan application fee to offset information processing costs. However, competitive pressures, such as those created by deregulation, often force financial institutions to process information more efficiently.
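A decision tree used as a compiled inference strategy can be represented directly as a nested data structure. The Python sketch below is our reading of Figure 2: only its first two levels are stated in the text, so the deeper branches, the value codes ("H"/"M"/"L", "Y"/"N", "G"/"B"), and the function name `consult` are assumptions. The point it illustrates is that an input is requested only when the path actually reaches it.

```python
# Decision tree as nested tuples: (input_name, {input_value: subtree}) for a
# non-leaf node, and a plain string for a leaf decision.
# Assumed reading of Figure 2; deeper branches are illustrative.
TREE = ("Income", {
    "H": "Grant",
    "M": ("BachDegree", {
        "Y": ("Employment", {"Y": "Grant", "N": "Investigate"}),
        "N": ("Employment", {"Y": "Investigate", "N": "Refuse"}),
    }),
    "L": ("References", {
        "B": "Refuse",
        "G": ("Employment", {
            "Y": ("BachDegree", {"Y": "Grant", "N": "Investigate"}),
            "N": "Investigate",
        }),
    }),
})


def consult(tree, ask):
    """Follow the tree for one case, asking only for inputs on the path.

    ask: function mapping an input name to its value (a prompt, a lookup, ...).
    Returns the decision and the list of inputs that were requested.
    """
    requested = []
    node = tree
    while not isinstance(node, str):
        name, branches = node
        requested.append(name)
        node = branches[ask(name)]
    return node, requested


applicant = {"Income": "M", "BachDegree": "Y", "Employment": "N", "References": "G"}
decision, requested = consult(TREE, applicant.__getitem__)
print(decision, requested)
```

For this moderate-income applicant, References is never requested, which is where the savings from acquiring inputs on demand come from.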
Even if an expert system operates with all inputs available when a problem is posed, it may be beneficial to re-engineer the system so that at least some inputs are acquired on demand. In the credit granting example, lower operational costs may be achieved by acquiring references and employment history on demand, because they are not used in every rule.

The economic objective can also be affected by the value of decisions. If decision values are symmetric, input costs alone can be considered. Symmetry of decision values means that all correct decisions have the same value and all incorrect decisions have the same penalty. In some situations, decision values are not symmetric. For example, in credit granting, the cost of classifying a high risk customer as low risk may differ from the cost of classifying a low risk customer as high risk. A payoff matrix (Table 2) is a useful way to represent the value of decisions. Negative costs on the diagonal represent the benefits of correct decisions made by the system. Positive costs off the diagonal represent penalties for incorrect decisions. A symmetric payoff matrix has the same negative cost on every diagonal element and the same positive cost on every off-diagonal element.

Table 2: Payoff Matrix for Loan Granting Expert System (rows: true decision; columns: expert system decision)

| True Decision | D1 (Grant) | D2 (Investigate) | D3 (Refuse) |
|---|---|---|---|
| D1 (Grant) | -10 | 5 | 10 |
| D2 (Investigate) | 8 | -5 | 5 |
| D3 (Refuse) | 50 | 5 | -10 |

2.3 Applicability

In cost minimization models, the output decisions are constrained by a knowledge source. Cost minimization is an appropriate objective when the knowledge source is fixed or the value of decisions is symmetric. The nature of the constraint depends on the knowledge source. If the knowledge source is a rule set, the sequential decision model is required to produce a decision tree that is logically equivalent to the rules.
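To see how an asymmetric payoff matrix changes the recommended decision, the sketch below encodes Table 2 (rows are the true decision, columns the system's decision) and picks the decision with minimum expected cost for a given belief over the true decision. The belief values and the helper names (`expected_costs`, `min_cost_decision`, `is_symmetric`) are hypothetical.

```python
DECISIONS = ["Grant", "Investigate", "Refuse"]

# PAYOFF[true][chosen], from Table 2: negative = benefit of a correct decision,
# positive = penalty for an incorrect one.
PAYOFF = {
    "Grant":       {"Grant": -10, "Investigate": 5,  "Refuse": 10},
    "Investigate": {"Grant": 8,   "Investigate": -5, "Refuse": 5},
    "Refuse":      {"Grant": 50,  "Investigate": 5,  "Refuse": -10},
}


def expected_costs(belief, payoff=PAYOFF):
    """Expected cost of each system decision given P(true decision)."""
    return {d: sum(p * payoff[true][d] for true, p in belief.items()) for d in DECISIONS}


def min_cost_decision(belief, payoff=PAYOFF):
    """System decision with the lowest expected cost, and that cost."""
    costs = expected_costs(belief, payoff)
    best = min(DECISIONS, key=costs.get)
    return best, costs[best]


def is_symmetric(payoff):
    """True if all diagonal entries are equal and all off-diagonal entries are equal."""
    diagonal = {payoff[d][d] for d in payoff}
    off_diagonal = {payoff[i][j] for i in payoff for j in payoff if i != j}
    return len(diagonal) == 1 and len(off_diagonal) == 1


belief = {"Grant": 0.5, "Investigate": 0.3, "Refuse": 0.2}
print(expected_costs(belief))
print(min_cost_decision(belief))
print(is_symmetric(PAYOFF))
```

With beliefs of 0.5, 0.3, and 0.2 for Grant, Investigate, and Refuse, the expected costs are 7.4, 2.0, and 4.5. The system therefore investigates even though Grant is the most likely true decision, because the penalty of 50 for granting a loan that should be refused dominates.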
With some knowledge sources, such as cases or historical data, the optimized form only explains the knowledge source rather than replicating it. The explanation property can be further relaxed to generate an optimized form that is more robust on unseen cases.

In value maximization models, the sequential decision model can trade off the value of decisions made by the system against input costs. Value maximization models are applicable when the value of decisions made by the system varies. In value maximization problems, the knowledge source does not directly provide an output decision. Instead, it provides information about the certainty of a decision given the current state of the system. The sequential decision model may use this certainty to trade off the best decision against the cost of observing inputs.

The consideration of decision value can be somewhat controversial. Most previous research on expert systems has assumed the expert's decisions to be the "gold standard" in the problem domain. A system that explicitly considers decision value may therefore make decisions that differ from the expert's, collect more or less information to solve a problem, or both. Cost minimization research is driven by the assumption that the expert's decisions and cost-benefit tradeoffs are optimal for all users. Hence a consistency requirement between the knowledge source and the concepts is enforced. Value maximization research, on the other hand, does not enforce a consistency requirement. The knowledge source is used to extract the probability of a class or a future outcome, conditioned on certain input observations. In value maximization problems, decision rules are typically constructed from the expert's beliefs (i.e., the probability information) combined with a representative payoff function for system users.
Value maximization problems are therefore more appropriate when the expert system could be consulted by a diverse population of users with different payoff functions.

2.4 Taxonomy of Sequential Decision Models

Researchers have proposed sequential decision models for a variety of economic objectives, knowledge sources, and applications. Table 3 classifies work by objective and knowledge source. There has been much research on the cost minimization objective using decision tables, diagnostic dictionaries (a specialized decision table), and training cases. Value maximization is not possible with a decision table because the decision table fixes the recommendations. Value maximization studies are less common because it is often more difficult to measure the value of decisions than the cost of inputs. Knowledge sources such as Bayesian belief networks are more difficult to optimize than decision tables because they are non-monotonic: the next input acquired can either increase or decrease the belief in a goal. To cope with this complexity, work on non-monotonic knowledge sources is limited to specialized structures. For example, the belief network structure in [HBR95] has a single problem-defining node. In [BH96], the belief network has a three-level structure in which cause nodes lead to issue nodes, which in turn lead to symptom nodes. In [MMG97], the belief network's structure is restricted and only goal-directed queries can be optimized.

Table 3: Taxonomy of Sequential Decision Models

| Knowledge Source | Cost Minimization | Value Maximization |
|---|---|---|
| Decision Table | [RS66], [GR73], [KJ73], [Schw74], [SS76], [HR76], [MM78] | |
| Diagnostic Dictionary | [PA90] | |
| Training cases | [Nune91], [MM97] | [MD93], [Mook96], [BFOS84], [Turn95] |
| Belief Network | [MMG97], [BH95], [HBR95] | [HB95] |
| Rules | [DM93], [MM96a] | |

Table 4 shows applications of sequential decision models by knowledge source and objective. We show representative references that have reported applications of sequential decision models to industrial problems.
Early work focused on computer program generation from a decision table. Expert system compilation differs from this earlier work in that an existing rule base must first be converted to a decision table before the sequential decision model optimizes the decision table. Troubleshooting has been the focus of much recent work. Key assumptions in many troubleshooting applications are that there is a single fault and that the fault can be identified through a combination of tests or a repair action. Some troubleshooting work has used a diagnostic dictionary in which each failure state is associated with a unique combination of test results. More recent troubleshooting work has used Bayesian belief networks. Medical diagnosis differs from troubleshooting in that the cost of medical tests is balanced against the payoff of a successful diagnosis. In addition, there may be no combination of tests or repair action that reveals a patient's problem. Display of time-critical information [HB95] is another recent application of sequential decision models. Here the benefits of a timely decision are balanced against the time human operators need to process the displayed information.
Table 4: Selected Applications of Sequential Decision Models

| Application | Knowledge Source | Objective | References |
|---|---|---|---|
| Program generation | Decision table | Minimize execution time | [SS76], [Jarv71] |
| Audit procedures | Decision table | Minimize cost of tests | [Shwa74], [SKA72] |
| Expert system compilation | Rule base | Minimize cost of inputs | [DM93], [MM96a] |
| Troubleshooting | Diagnostic dictionary | Minimize cost of tests | [PA90] |
| Troubleshooting | Bayesian belief network | Minimize expected cost of repair | [BS95], [HBR95] |
| Medical diagnosis | Bayesian belief network | Maximize value of diagnosis | [HHM92], [HN92], [HHN92], [Turn95] |
| Time critical display | Bayesian belief network | Maximize value of displayed information | [HB95] |
| Software help desk | Training cases | Minimize cost of inputs | [SM91] |

2.5 Formal Definitions

We now give a formal definition of the cost minimization and value maximization models presented informally in Sections 2.1 through 2.4. We begin by defining sequential decision problems, followed by the objectives and constraints of the models. The models are inspired by the seminal works of [MW86, 87]. Other related works that have contributed to sequential decision models include [BW91; JMW88; MRW90; MRW94].

A sequential decision problem consists of five components:

$$\langle X,\ D,\ Z: \mathcal{Y} \rightarrow \mathbb{R}^{+},\ C: (d_i, d_j) \rightarrow \mathbb{R},\ K \rangle$$

- $X$ is the set of inputs; $X_i$ is an input, $i = 1, 2, \ldots, n$.
- $D$ is the set of decisions; $d_k \in D$, $k = 1, 2, \ldots, m$.
- $\mathcal{Y}$ is the power set (set of all subsets) of $X$.
- $Z$ is an input cost function; $Z(Y)$ is the joint cost of acquiring the set of inputs $Y \subseteq X$.
- $C$ is a classification cost function; $C(d_i, d_j)$ is the classification cost of making decision $d_j$ when the correct decision is $d_i$.
- $K$ is a knowledge source.

Before defining the objective function and constraints of the models, some additional definitions are needed. $T$ is the optimized form resulting from solving the model. For example, $T$ may be a tree in which inputs label the non-leaf nodes, input states label the arcs, and decisions label the leaf nodes.
- $EIC(T)$ is the expected information acquisition cost of the optimized form $T$.
- $O$ is a problem that can be presented to the optimized form. A problem consists of a set of potentially observable input-value pairs and a "best" decision $O_d$, as recommended by the knowledge source.
- $\Theta$ is the set of all problems.
- $p(O)$ is the probability that problem $O$ will occur.
- $d_O^T$ is the decision recommended by the optimized form $T$ for problem $O$.
- $ECC(T)$ is the expected classification cost of $T$: $ECC(T) = \sum_{O \in \Theta} p(O)\, C(O_d, d_O^T)$.
- $TEC(T)$ is the total expected cost of $T$: $TEC(T) = EIC(T) + ECC(T)$.

Cost Minimization Model: Find $T^*$ such that $EIC(T^*) \le EIC(T)$ for all $T$ such that $O_d = d_O^T$ for all problems $O \in \Theta$.

Value Maximization Model: Find $T^*$ such that $TEC(T^*) \le TEC(T)$ for all $T$.

The cost minimization and value maximization models differ primarily in how further acquisition of inputs is terminated. For example, if the optimized form is a tree or path, cost minimization models use the knowledge source constraint to generate leaf nodes (decisions): the knowledge source tells the model when a path has enough information to make a decision. In contrast, value maximization models trade off decision quality against input costs rather than imposing a constraint on when a decision is reached. Input acquisition terminates only when the total expected cost cannot be reduced by acquiring further inputs.

Both models optimize an expected cost or payoff, as is traditional in decision theory. Measures other than an expectation are possible, for example: (1) a minimax criterion to handle unreliable cost estimates [Jame84], (2) a combined mean-risk objective [MM96b] to balance the mean and variation in performance, and (3) misclassification variance [BFOS84] to control for wide differences in the penalties of incorrect decisions.

Sequential decision problems are difficult to solve because of the problem size.
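The definitions of $EIC$, $ECC$, and $TEC$, and the different feasibility rules of the two models, can be checked on a toy problem. The Python sketch below assumes an additive input cost function (the joint cost of a set of inputs is the sum of individual costs, a special case of $Z$) and represents a tree as nested tuples; the inputs A and B, the decisions d1 and d2, all probabilities and costs, and the function names are hypothetical.

```python
def follow(tree, inputs):
    """Return the decision reached for one problem and the inputs acquired on the way."""
    acquired = []
    node = tree
    while not isinstance(node, str):
        name, branches = node
        acquired.append(name)
        node = branches[inputs[name]]
    return node, acquired


def evaluate_tree(tree, problems, input_cost, class_cost):
    """Return (EIC, ECC, TEC) of `tree` over `problems`.

    problems:   list of (p(O), input values of O, best decision O_d)
    input_cost: cost per input; the joint cost is assumed to be additive
    class_cost: dict (true decision d_i, chosen decision d_j) -> C(d_i, d_j)
    """
    eic = ecc = 0.0
    for p, inputs, best in problems:
        decision, acquired = follow(tree, inputs)
        eic += p * sum(input_cost[x] for x in acquired)
        ecc += p * class_cost[(best, decision)]
    return eic, ecc, eic + ecc


def meets_constraint(tree, problems):
    """Cost minimization constraint: O_d equals d_O^T for every problem O."""
    return all(follow(tree, inputs)[0] == best for _, inputs, best in problems)


PROBLEMS = [
    (0.5, {"A": 0, "B": 0}, "d1"),
    (0.3, {"A": 1, "B": 0}, "d1"),
    (0.2, {"A": 1, "B": 1}, "d2"),
]
INPUT_COST = {"A": 1.0, "B": 4.0}
CLASS_COST = {("d1", "d1"): 0.0, ("d2", "d2"): 0.0, ("d1", "d2"): 10.0, ("d2", "d1"): 10.0}

T1 = ("A", {0: "d1", 1: ("B", {0: "d1", 1: "d2"})})   # acquires B only when A = 1
T2 = "d1"                                             # decides without any input

for name, tree in [("T1", T1), ("T2", T2)]:
    print(name, evaluate_tree(tree, PROBLEMS, INPUT_COST, CLASS_COST),
          meets_constraint(tree, PROBLEMS))
```

Tree T1 satisfies the cost minimization constraint with an EIC and TEC of 3.0. Deciding d1 immediately (T2) violates the constraint but has a lower TEC of 2.0, so a value maximization model would prefer it under this payoff function.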
Optimal solutions have been reported only for some cost minimization models. In the above models, the number of candidate trees grows exponentially with the number of inputs. Hyafil and Rivest [HR76] proved that constructing an optimal binary decision tree from a decision table is NP-complete when each input can lead to the diagnosis of at most three decisions. The NP-completeness results in [HR76] were specialized in [PA90] to finding a decision tree with an expected cost less than a given value. Polynomial time results are described in [PA90] for two special cases: (1) each goal state is detected by one input, and (2) the sequential decision problem is equivalent to a noiseless coding problem. These special cases are not common, however.

Because optimal decision trees can be difficult to generate, other optimized forms, such as paths, input orders, and rule schedules, have been employed. Table 5 shows the optimized forms that have been produced for a variety of knowledge sources.

Table 5: Optimized Form and Knowledge Source

| Knowledge Source | Tree | Path | Order |
|---|---|---|---|
| Decision Table | [RS66], [GR73], [KJ73], [MM78], [Schw74], [SS76], [HR76] | | |
| Diagnostic Dictionary | [PA90] | | |
| Training cases | [Nune91], [MD93], [MM97], [SM91], [Turn95], [BFOS84] | | |
| Belief Network | [MMG97] | [BH95], [HBR95], [HB95] | [MMG97] |
| Rules | [DM93], [MM96a] | | [Davi80] |

The above cost minimization and value maximization models are rather abstract. They describe a large body of models, but they do not specify how the objectives and constraints are measured for a given problem. Precise definitions of objectives and constraints depend on the knowledge source. Sections 3 and 4 describe how these models are solved for specific knowledge sources. In addition, the above definitions do not cover recent extensions such as multi-period models, cost uncertainties, and adversarial relationships. Section 5 discusses extensions to the base models presented in this section.

3. Solution Methods: Cost Minimization

In this section, we survey methods that have been employed to solve the cost minimization model described in the previous section. We first describe solution methods that separate the tasks of concept formation and strategy formation, followed by those that perform these tasks jointly. Separating the tasks requires more work to process the knowledge source but can significantly reduce the search effort. Within methods that separate concept and strategy formation, we discuss methods used to form concepts from knowledge sources such as rule bases, belief networks, and cases. We next describe how concepts are converted into cost minimizing strategies to acquire information. The section ends with a discussion of joint concept and strategy formation.

3.1 Separate Concept and Strategy Formation

Solution methods that separate the tasks of concept and strategy formation differ in how strictly the concepts must agree with the knowledge source. The strictest requirement is that the concepts and the knowledge source be logically equivalent, that is, each must logically imply the other. For example, in cost optimizing a rule base, the knowledge source (rules) is first expressed as a compressed decision table (concepts) that is logically equivalent to the rule base. A less strict requirement is that the concepts agree with the knowledge source but may be more general than it. For example, when rules are induced from cases, the requirement is that the rules explain the cases, but the rules are typically more general than the cases. Another requirement is that the concepts provide a partial cover of the knowledge source [MMG97].

3.1.1 Concept Formation

Before a cost minimizing strategy can be generated, the knowledge source must be converted into concepts (a compressed form derived from the knowledge source) that are suitable for cost optimization.
Most of the work has focused on converting the knowledge source into an equivalent decision table. Depending on the knowledge source (rules, belief network, or training cases), there are several methods for this conversion. Methods that separate concept and strategy formation require the concept formation phase to convert the given knowledge source into a "compressed" decision table. A compressed decision table is one in which all rules are minimal and all minimal rules are represented.

There are many algorithms to remove redundancies in the rules of a given decision table [CB93]. However, these algorithms do not produce a unique decision table. From the standpoint of cost minimization, this raises the question: which non-redundant representation of the decision table should be used? To produce an optimal strategy, it is necessary to take the union of all non-redundant representations of the decision table. This union is the compressed form of the decision table. The compressed form guarantees that all minimal ways to reach a final conclusion are enumerated. One consequence of using the compressed form is that the decision table can contain overlapping rules. Hence any method that produces a decision tree from a compressed table must be able to handle rule overlap.

Rule Bases

The conversion of a rule base to a compressed decision table has been discussed in [DM93] and [MM96a]. There are two basic ideas behind this technique. First, intermediate conclusions in the rule base must be eliminated so that a mapping between pure inputs and final conclusions is obtained. A pure input is one obtained from an external source such as the user or a database. For example, in the credit granting rule base (Table 1), the pure inputs are Income, BachDegree, Employment, and References. Final conclusions are the system recommendations made at the end of a consultation.
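The first step, eliminating intermediate conclusions, can be sketched as recursive substitution: each intermediate fact in a rule body is replaced by every input-level condition set that concludes it. The Python sketch below encodes a subset of Table 1 (Rules 6 and 10 are left out to keep it short), names the employment input `Employment` with values "Y"/"N", and reads Rules 2 and 3 as testing the repayment-potential conclusions of Rules 7 to 9; these choices, and the function names, are assumptions. It also assumes the rule base is acyclic.

```python
from itertools import product

# A condition is either (input, value) on a pure input, or the name of an
# intermediate conclusion produced by another rule. Subset of Table 1.
RULES = [
    (["Sound-Financial-Status"], "Grant"),                                   # Rule 1
    (["Future-Repayment-Potential"], "Investigate"),                         # Rule 2
    (["Doubtful-Repayment-Potential"], "Investigate"),                       # Rule 3
    (["Poor-Financial-Status"], "Refuse"),                                   # Rule 4
    ([("Income", "H")], "Sound-Financial-Status"),                           # Rule 5
    ([("Income", "M"), ("Employment", "N"), ("BachDegree", "Y")],
     "Future-Repayment-Potential"),                                          # Rule 7
    ([("Income", "M"), ("Employment", "Y"), ("BachDegree", "N")],
     "Doubtful-Repayment-Potential"),                                        # Rule 8
    ([("Income", "L"), ("BachDegree", "N"), ("References", "G")],
     "Future-Repayment-Potential"),                                          # Rule 9
    ([("Income", "L"), ("References", "B")], "Poor-Financial-Status"),       # Rule 11
    ([("Income", "M"), ("Employment", "N"), ("BachDegree", "N")],
     "Poor-Financial-Status"),                                               # Rule 12
    ([("References", "G"), ("Employment", "Y"), ("BachDegree", "Y")],
     "Sound-Financial-Status"),                                              # Rule 13
]
FINAL = {"Grant", "Investigate", "Refuse"}


def expand(condition, rules):
    """All ways to satisfy one condition using pure inputs only (list of dicts)."""
    if isinstance(condition, tuple):                 # already a pure-input test
        name, value = condition
        return [{name: value}]
    ways = []
    for body, head in rules:                         # every rule concluding it
        if head == condition:
            ways.extend(expand_body(body, rules))
    return ways


def expand_body(body, rules):
    """Combine the expansions of all conditions in a rule body (a conjunction)."""
    results = [{}]
    for cond in body:
        combined = []
        for partial, way in product(results, expand(cond, rules)):
            # Drop combinations that need two different values for one input.
            if all(partial.get(k, v) == v for k, v in way.items()):
                combined.append({**partial, **way})
        results = combined
    return results


def compile_rules(rules, final):
    """Map pure-input conditions directly to final conclusions."""
    return [(conds, head)
            for body, head in rules if head in final
            for conds in expand_body(body, rules)]


COMPILED = compile_rules(RULES, FINAL)
for conditions, decision in COMPILED:
    print(conditions, "->", decision)
```

The result maps pure inputs directly to Grant, Investigate, or Refuse; for example, Rule 1 combined with Rule 5 yields the input-level rule Income = "H" implies Grant. Compressing this table would be the second step.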
In the credit granting rule base, the final conclusions are Grant, Investigate, and Refuse. After this mapping is obtained, the second step in concept formation is to convert the resulting decision table into compressed form. The compressed decision table for the credit granting rule base is shown in Table 6. We note that a compressed decision table is not the only representation of concepts suitable for cost optimization. The only requirement is that the representation provide a complete and minimal mapping of pure inputs to final conclusions.

Table 6: Rule Base Expressed as a Decision Table ("-" means the rule does not test the input)

| Inputs | R1 | R2 | R3 | R4 | R5 | R6 | R7 | R8 | R9 |
|---|---|---|---|---|---|---|---|---|---|
| Income (I1) | H | - | M | M | M | L | L | L | M |
| BachDegree (I2) | - | Y | N | Y | Y | N | - | - | N |
| Employment (I3) | - | Y | Y | N | Y | - | N | - | N |
| References (I4) | - | G | - | - | - | G | G | B | - |
| Decision | Grant | Grant | Investigate | Investigate | Grant | Investigate | Investigate | Refuse | Refuse |

Belief Networks

The conversion of a belief network to a compressed decision table is a more difficult problem because, unlike a rule base, a belief network is non-monotonic. The difficulty with cost optimizing a non-monotonic knowledge source is that special care must be taken to detect when no significant revision of results can occur. For belief networks, it is necessary to specify whether or not a particular belief revision is significant.

Belief intervals provide one approach to specifying significant belief revisions. Within a belief interval, the user is indifferent to changes in belief, but revisions that move the belief into a different interval are significant. For a given set of belief intervals, the network can be examined to determine whether it is possible to guarantee that a significant belief revision will not occur. A procedure for goal-directed queries and well-behaved networks is described in [MMG97]. In well-behaved networks, input nodes are discrete and must be leaf nodes, i.e., they have no child nodes. For any unobserved input, the states of the input can be ordered by their impact on the goal.
The state ordering for an input does not depend on the states of other inputs. By examining the state orderings of all unobserved inputs, it is possible to specify a belief range that will contain the belief in the goal if all unobserved inputs are acquired. If the computed belief range is contained within an indifference interval (called a capturing condition in [MMG97]), then additional inputs are not necessary. Using the notion of a capturing condition, a portion of the belief network can be converted to rules that satisfy a coverage requirement. An x-coverage requirement means that there is an x% probability that a random problem can be solved using the rules. If a problem can be solved by the rules, then, because of capturing, the belief network is guaranteed to produce a belief that lies in the belief interval produced by the rules. After an x-coverage set of rules is obtained, a standard decision table algorithm can be used to generate a compressed decision table.

Training Cases

An inductive approach to converting a set of cases into a set of rules can be found in the PRISM algorithm [Cend87]. Once the set of rules is obtained, a standard redundancy removal algorithm can be used to generate a compressed decision table. However, beyond the formation of rules, PRISM uses rule scheduling, rather than decision tree formation, to improve the efficiency of the inference process.

The concept representation used in case-based systems is a set of norms [Kolo91]. Norms are rules that allow partial matching. Because of partial matching, a set of norms can potentially generate a large number of rules with exact matching requirements. In principle, the approach used in [MMG97] to map a belief network to a decision table may be applicable to converting a set of norms to a decision table. However, no research to date has addressed this problem.
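Returning to the capturing condition for belief networks, the test can be sketched for one simple kind of well-behaved network. The Python sketch below assumes a naive Bayes structure: a single binary goal node whose children are the discrete inputs, so that each input state multiplies the odds of the goal by a fixed likelihood ratio, independently of the other inputs. Under that assumption the extreme beliefs are reached by setting every unobserved input to its lowest- or highest-ratio state. The likelihood ratios, the indifference intervals, and the function names are hypothetical, and this is not the [MMG97] procedure itself.

```python
def posterior(prior, likelihood_ratios):
    """Belief in the goal after multiplying the prior odds by each likelihood ratio.

    Assumes 0 < prior < 1.
    """
    odds = prior / (1.0 - prior)
    for lr in likelihood_ratios:
        odds *= lr
    return odds / (1.0 + odds)


def belief_range(prior, observed, unobserved):
    """Smallest and largest belief reachable if all unobserved inputs were acquired.

    observed:   likelihood ratios of the input states already observed
    unobserved: one dict per unobserved input, mapping state -> likelihood ratio
    Each input shifts the odds independently, so the extremes come from the
    lowest-ratio and highest-ratio state of every unobserved input.
    """
    low = posterior(prior, list(observed) + [min(s.values()) for s in unobserved])
    high = posterior(prior, list(observed) + [max(s.values()) for s in unobserved])
    return low, high


def capturing_interval(prior, observed, unobserved, intervals):
    """Return the indifference interval that contains the whole belief range, or None."""
    low, high = belief_range(prior, observed, unobserved)
    for a, b in intervals:
        if a <= low and high <= b:
            return (a, b)
    return None


INTERVALS = [(0.0, 0.2), (0.2, 0.8), (0.8, 1.0)]   # hypothetical indifference intervals

prior = 0.5
observed = [9.0]                                   # evidence already acquired
one_left = [{"yes": 2.0, "no": 0.5}]
two_left = one_left + [{"yes": 4.0, "no": 0.25}]

print(belief_range(prior, observed, one_left), capturing_interval(prior, observed, one_left, INTERVALS))
print(belief_range(prior, observed, two_left), capturing_interval(prior, observed, two_left, INTERVALS))
```

With one unobserved input, the belief range is about [0.82, 0.95] and lies inside [0.8, 1.0], so acquiring that input could not cause a significant revision. Adding a second, more informative unobserved input widens the range to about [0.53, 0.99], and capturing fails.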
3.1.2 Strategy Formation

Most of the methods discussed in this subsection convert a compressed decision table to a minimal cost decision tree. Decision table to tree methods are either optimal or approximate. Approximate search methods often use greedy heuristics to prune the search space. Optimal search methods can be divided according to whether they use knowledge of the search space. Informed search approaches such as AND/OR search use “optimistic” heuristics that enable the search space to be pruned effectively. Uninformed methods, such as dynamic programming, use implicit enumeration to find the optimal solution.

Optimal Methods Using Compressed Tables

AND/OR tree search is a well-studied approach to solving strategy problems where the search space can be represented as a space of decision trees. The search space can be represented as a tree rather than a graph because the compressed decision table contains all minimal rules. The rules in the decision table provide stopping conditions for the search. In contrast, when the decision table does not provide all minimal rules, the entire input space must be searched, which requires a graph representation of the search space. We will discuss AND/OR graph search in the context of joint concept and strategy formation.

A number of algorithms (AO* [MM73], HS [MM78], and CF [MB85]) are available to search AND/OR trees. Since the differences between these algorithms are not essential to this discussion, we will emphasize the most widely known variation, AO* [Pear84]. AO* is an “informed” optimal search algorithm guided by a heuristic function f that estimates the cost of the best solution at a given node. AO* is guaranteed to find an optimal solution [Pear84] as long as the heuristic f is optimistic. The heuristic f is optimistic (admissible) if it never overestimates the expected cost of the optimal solution. This optimistic property allows pruning.
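To make the roles of the AND/OR structure and of f concrete, the recursion below states the expected cost V(n) that an optimal strategy incurs from a search state n (the inputs acquired so far, with their observed values). The notation is ours rather than the survey's: U(n) is the set of inputs not yet acquired at n, c_i is the cost of input I_i, and S_i is its set of states. The minimization is the OR choice of the next input; the probability-weighted sum over states is the AND requirement that every outcome of that input be solved.

```latex
V(n) =
\begin{cases}
0 & \text{if a rule of the decision table is satisfied at } n,\\[4pt]
\min\limits_{i \in U(n)} \Bigl[\, c_i + \sum\limits_{s \in S_i} P(I_i = s \mid n)\; V\bigl(n \cup \{I_i = s\}\bigr) \Bigr] & \text{otherwise,}
\end{cases}
\qquad
0 \le f(n) \le V(n) \ \text{for every } n.
```

AO* evaluates this recursion on a partial tree, substituting f for V at unexpanded tip nodes. When the right-hand condition holds, the estimated cost of any partial solution never exceeds the cost of its best completion, which is what makes it safe to discard partial solutions whose estimate already exceeds the cost of a known solution.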
The more closely f approximates the optimal expected cost, the greater the pruning ability of AO*. If f exactly estimates the optimal remaining cost, only the optimal hyperpath will be expanded by AO*. Mannino and Mookerjee [MM96a] developed and analyzed several “optimistic” heuristics providing a range of choices for precision and computational effort. The expected rule heuristic calculates the minimum expectation using the remaining rules and a representative collection of cases: for each case, only the cost of the least expensive rule is included. The constrained expected rule heuristic calculates the minimum expectation constrained through one remaining input. Simulation experiments demonstrated that the expected rule and constrained expected rule heuristics are very precise, leading to excellent performance in terms of search effort. Optimal solutions could be determined on a personal computer for problems with 20 non-binary inputs (an average of 3.5 states per input).

The decision table to tree problem can also be solved using uninformed search, albeit with considerably more search effort. A dynamic programming formulation of this problem is described in [DM93], where only modest-sized problems (7 non-binary inputs) were solved. Because dynamic programming has exponential computational requirements [Gare81], it becomes important to prune the search space through the use of optimistic heuristics, as in the AO* algorithm.

Approximate Methods Using Compressed Tables

The problem of converting a compressed decision table to a minimal cost decision tree does not appear to be prone to deception, except in pathological cases [MM96a]. Deception occurs when a greedy choice results in a suboptimal solution. In [MM96a], greedy solutions using the constrained expected rule heuristic were typically within 0.1 percent of optimal. The expected rule heuristic also produced solutions close to optimal, with much less search effort than optimal search.
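The sketch below illustrates three ideas from this subsection on a made-up two-input table: exact optimization by memoized recursion over acquisition states (uninformed search in the spirit of dynamic programming, not the formulation of [DM93]), the expected rule heuristic as described above, and a greedy tree that chooses each input by one-step lookahead on that heuristic. The greedy rule is our own simple choice and may differ from the procedures evaluated in [MM96a]. The inputs, costs, rules, and cases are hypothetical, and the table is assumed complete so that every case satisfies some rule.

```python
from functools import lru_cache
from statistics import mean

# Hypothetical toy problem: input costs, a complete compressed decision table,
# and equally weighted representative cases. Search states are frozensets of
# (input, value) pairs acquired so far.
COSTS = {"A": 1.0, "B": 10.0}
RULES = [({"A": 1}, "grant"), ({"A": 0, "B": 1}, "investigate"), ({"A": 0, "B": 0}, "refuse")]
CASES = [{"A": 1, "B": 0}, {"A": 1, "B": 1}, {"A": 0, "B": 1}, {"A": 0, "B": 0}]


def matches(case, known):
    """True if a case agrees with every (input, value) pair acquired so far."""
    return all(case[i] == v for i, v in known)


def rule_fires(known):
    """Stopping condition: some rule has all of its conditions acquired and true."""
    values = dict(known)
    return any(all(values.get(i) == v for i, v in cond.items()) for cond, _ in RULES)


def outcomes(known, inp):
    """Empirical distribution of `inp` over matching cases: yields (probability, child state)."""
    subset = [c for c in CASES if matches(c, known)]
    for value in sorted({c[inp] for c in subset}):
        share = sum(c[inp] == value for c in subset) / len(subset)
        yield share, known | {(inp, value)}


def remaining(known):
    acquired = {i for i, _ in known}
    return sorted(COSTS.keys() - acquired)


@lru_cache(maxsize=None)
def optimal_cost(known=frozenset()):
    """Exact minimum expected input cost by exhaustive memoized recursion."""
    if rule_fires(known):
        return 0.0
    return min(
        (COSTS[i] + sum(p * optimal_cost(child) for p, child in outcomes(known, i))
         for i in remaining(known)),
        default=float("inf"),  # no inputs left and no rule fires: incomplete table
    )


def expected_rule_h(known=frozenset()):
    """Expected rule heuristic: per case, the unpaid cost of the cheapest rule it satisfies."""
    acquired = {i for i, _ in known}

    def cheapest_rule(case):
        # Only a rule the case satisfies can end the search for that case.
        return min(sum(COSTS[i] for i in cond if i not in acquired)
                   for cond, _ in RULES
                   if all(case[i] == v for i, v in cond.items()))

    return mean(cheapest_rule(c) for c in CASES if matches(c, known))


def greedy_cost(known=frozenset()):
    """Greedy tree: pick the input minimizing its cost plus the expected heuristic of its children."""
    if rule_fires(known):
        return 0.0

    def score(i):
        return COSTS[i] + sum(p * expected_rule_h(child) for p, child in outcomes(known, i))

    best = min(remaining(known), key=score)
    return COSTS[best] + sum(p * greedy_cost(child) for p, child in outcomes(known, best))


print(optimal_cost(), expected_rule_h(), greedy_cost())  # 6.0 6.0 6.0
```

Acquiring the cheap input A first and B only when A = 0 costs 1 + 0.5 × 10 = 6, against 11 for B first. Here the heuristic happens to be exact at the root; in general it is only a lower bound, which is what makes it usable inside AO*.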
In a separate simulation experiment, Mannino and Mookerjee [MM96a] deliberately created pathological rule bases to deceive greedy search. They reported solutions as much as 15% above optimal, although such rule bases appear unlikely to occur in practice. The loss criterion heuristic, proposed in [DM93], also produces near-optimal solutions. The loss criterion heuristic is similar to the expected rule heuristic; however, it is difficult to implement when the decision table has overlapping rules.

Other Methods

A decision tree is not the only form of strategy used to acquire inputs. Other strategies, such as rule scheduling and input ordering, can also achieve lower input costs. However, an optimal strategy (decision tree) always dominates an optimal rule schedule or an optimal input order, because these methods are special cases of a strategy.

Rule scheduling is a method of controlling the processing of knowledge in an expert system [Davi80, Cend87, Lian92]. Rule scheduling methods can only crudely control the strategy used to acquire information. Traditional backward and forward reasoning differ in the order in which rules are evaluated. However, a reasoning method only partially controls the order of rule execution: within any reasoning method, more than one rule may be available for execution. Criteria used to prioritize competing rules include specificity, recency of use, and rule length [Fu87]. A different approach to rule scheduling embeds scheduling knowledge directly into the rule base using meta rules [Davi80]. Meta rules contain knowledge that helps determine the selection of the next rule. Although meta rules resemble the way humans handle scheduling information, it is difficult to acquire meta rules from human experts.

The limitations of generating an ordering of inputs rather than a decision tree were demonstrated in [MM97].
In this work, two orderings were produced to minimize input costs for norms (rules with partial matching). First, an ordering of norms was generated. Then, within each norm, an order in which to test inputs was generated. Testing of a norm was terminated as early as possible. For example, if a norm required that any 3 out of 4 conditions be true, testing terminated after 3 conditions were proven true or 2 conditions were proven false. The orderings generated by two selection measures (input cost sensitive and information sensitive) were compared to a decision tree generated using a cost-sensitive selection measure. The input cost measure proved superior to the information measure, but both orderings performed much worse than the decision tree.

3.2 Joint Concept and Strategy Formation

In joint concept and strategy formation, the sequential decision model determines both the concepts and the decision tree. Joint approaches place fewer constraints on the knowledge source than separate approaches, but the search space is generally larger. As an example of the difference, consider a cost minimization problem where the knowledge source is a decision table. For illustration, let us use the simple decision table depicted in Table 7. A joint approach such as [MM78] can use this decision table to find the optimal solution. A separate approach requires that the decision table reveal all possible compressed rules. Table 7 is only partially compressed¹ because it is missing the compressed rule I1=F, I2=F, I3=- (dash). The search space is larger for partially compressed decision tables because missing or implied rules must be discovered during the search, but the knowledge source is easier to generate.

Table 7: Partially Compressed Decision Table

Inputs   R1  R2  R3  R4
I1       -   F   F   T
I2       F   F   T   -
I3       F   T   -   T
D1       *   *
D2               *
D3                   *

¹ The missing compressed rule can be discovered by expanding R1 and R2 into three complete rules. Two different compressions are possible for the three complete rules.
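The expansion step in the footnote can be sketched for binary inputs with Quine-McCluskey-style merging, a standard technique we substitute here for illustration rather than one the surveyed papers prescribe. Rules are tuples over (I1, I2, I3) with "-" as the dash. The sketch expands the D1 rules R1 and R2 of Table 7 into complete rules, repeatedly merges pairs that differ in exactly one position, and keeps every rule that cannot be merged further. The function names are hypothetical.

```python
from itertools import product

STATES = ("T", "F")  # assumption: every input is binary


def expand(rule):
    """All complete rules covered by a rule that may contain dashes ("-")."""
    choices = [STATES if v == "-" else (v,) for v in rule]
    return set(product(*choices))


def merge(a, b):
    """Merge two rules differing in exactly one position where neither has a dash."""
    diff = [k for k, (x, y) in enumerate(zip(a, b)) if x != y]
    if len(diff) == 1 and "-" not in (a[diff[0]], b[diff[0]]):
        k = diff[0]
        return a[:k] + ("-",) + a[k + 1:]
    return None


def minimal_rules(rules):
    """All minimal (maximally compressed) rules for one decision."""
    current = set().union(*(expand(r) for r in rules))
    minimal = set()
    while current:
        merged, used = set(), set()
        for a in current:
            for b in current:
                m = merge(a, b)
                if m is not None:
                    merged.add(m)
                    used.update((a, b))
        minimal |= current - used  # rules that could not be compressed further
        current = merged
    return minimal


# D1 rules of Table 7: R1 = (-, F, F) and R2 = (F, F, T)
print(sorted(minimal_rules([("-", "F", "F"), ("F", "F", "T")])))
# [('-', 'F', 'F'), ('F', 'F', '-')]
```

The result contains R1 itself and the missing rule I1=F, I2=F, I3=-. A table listing both is compressed in the sense a separate approach requires, while either minimal rule together with the remaining complete rule gives one of the two compressions mentioned in the footnote.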
Optimal Methods Using Uncompressed Tables

The methods discussed here convert a partially compressed decision table to a cost-minimizing decision tree. Informed optimal search algorithms with optimistic (lower bound) heuristics were developed by [MM78] and [PA90] for decision table to decision tree conversion. In [MM78], the decision table is partially compressed: dash entries are possible, but some minimal rules may be missing. In [PA90], the decision table is uncompressed, with no dash entries. In addition, [PA90] assumes that each decision is associated with exactly one combination of input values, a reasonable assumption in troubleshooting applications. In both [MM78] and [PA90], the search space is an AND/OR graph because the entire input space must be covered during the search. The sequential decision model discovers minimal concept representations and generates an optimal decision tree. The optimistic heuristic in [MM78] computes the maximum expected savings from incomplete paths (i.e., paths missing one input). The optimistic heuristics in [PA90] are analogous to noiseless coding [Huff52], in which faults correspond to messages, paths in a decision tree correspond to message codes, and a decision tree corresponds to a coding scheme. Four heuristics in [PA90] use input costs and constraints on input availability along with the noiseless coding analogy. AND/OR graph search appears to be the most suitable method for solving this class of problems. Other methods, such as branch and bound [RS66] and dynamic programming [SS76], have been restricted to problems of modest size: a branch and bound implementation was limited to 5 binary inputs and dynamic programming to 12 binary inputs.

Approximate Methods

Because of the effort required to find optimal solutions, a number of greedy heuristics have been developed to convert a knowledge source to a decision tree.
Knowledge sources that have been used for cost optimization include uncompressed decision tables, Bayesian belief networks, and sets of training cases. Several greedy heuristics have been applied to convert an uncompressed decision table to a minimum cost decision tree. These include: (1) maximizing information gain (entropy reduction [SW49]) per dollar ([John60], [Schw74], [GR73], and [DW87]), (2) minimizing dash (do not care) entries in the table ([PHH71] and [Verh72]), and (3) maximizing a distinguishability criterion (a product of conditional probabilities) [GG74]. In [VHD82], an upper bound based on information gain and a lower bound based on Huffman coding [Huff52] were derived. These bounds were used in a look-ahead heuristic that minimizes the upper bound at each step. An upper bound for the distinguishability heuristic was derived in [GG74], but the bound tends to be very loose in practice [PA90].

Bayesian belief network structures have been used as the knowledge source in [HBR95] and [BH96]. The application domain is troubleshooting, with the key assumption that device faults are either observable or non-observable. Non-observable faults can be repaired, and the device can then be tested to see whether the fault has been eliminated. This assumption leads to an input selection rule and a stopping condition. The input with the smallest expected cost is selected if its expected cost is less than the expected cost of repair given current knowledge; this heuristic is applied in a greedy search process. If the expected cost of repair is less than the expected cost of observing the best input, the stopping condition is triggered, and potential faults are tested or repaired, in an order determined by the network, until the fault is found. Simulation results and application to several pilot projects demonstrated significant improvements over other approaches.
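The repair ordering and the observe-or-repair comparison can be illustrated with a classic single-fault result, which we assume here rather than take from the survey: if exactly one component is faulty, with probabilities p_i, and repairing component i and then testing the device costs c_i, the expected cost of repair is minimized by repairing in decreasing order of p_i / c_i. The sketch then makes a myopic comparison in the spirit of the stopping condition above. The outcome probabilities and posteriors would come from the belief network and are simply supplied as numbers here; all names and values are hypothetical.

```python
def expected_cost_of_repair(probs, costs, order):
    """Expected cost of repairing components in `order` until the single fault is fixed."""
    still_broken = 1.0
    total = 0.0
    for comp in order:
        total += still_broken * costs[comp]  # paid only if the device still fails
        still_broken -= probs[comp]
    return total


def repair_order(probs, costs):
    """Decreasing probability-to-cost ratio (optimal under the single-fault assumption)."""
    return sorted(probs, key=lambda comp: probs[comp] / costs[comp], reverse=True)


def best_ecr(probs, costs):
    return expected_cost_of_repair(probs, costs, repair_order(probs, costs))


def should_observe(probs, costs, obs_cost, outcomes):
    """Myopic rule: observe only if its cost plus the expected repair cost afterwards is lower.

    `outcomes` is a list of (P(outcome), posterior fault probabilities) pairs.
    """
    after = obs_cost + sum(p * best_ecr(post, costs) for p, post in outcomes)
    return after < best_ecr(probs, costs)


# Hypothetical fault probabilities and repair-and-test costs.
probs = {"a": 0.5, "b": 0.3, "c": 0.2}
costs = {"a": 10.0, "b": 2.0, "c": 5.0}
print(repair_order(probs, costs))  # ['b', 'a', 'c']

# Hypothetical observation costing 1.0 that reveals whether "a" is the faulty component.
outcomes = [(0.5, {"a": 1.0, "b": 0.0, "c": 0.0}), (0.5, {"a": 0.0, "b": 0.6, "c": 0.4})]
print(should_observe(probs, costs, 1.0, outcomes))  # True
```

Repairing in the order b, a, c costs 10 in expectation versus 12 for a, b, c. The observation lowers the expected total to 1 + 0.5 × 10 + 0.5 × 4 = 8, so it is worth acquiring; at a cost of 4 it would not be.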
Greedy heuristics have been proposed for converting a set of training cases to a decision tree with the objective of minimizing input costs. Induction algorithms such as ID3 [Quin86,87] minimize the expected number of inputs needed to make a classification decision. Alternative measures have been proposed in [Nune91] and [MM97] to minimize expected input costs rather than the expected number of inputs. The EG2 algorithm [Nune91] uses input cost divided by information gain as the input selection measure, while the ID3c algorithm [MM97] uses information gain divided by input cost. As is typical in induction algorithms, these measures are applied in a greedy search procedure.

4. Solution Methods: Value Maximization

In this section, we review methods to solve value maximization problems for expert system optimization. The main difference between cost and value optimization problems is that in cost optimization problems, decisions are constrained to be consistent with the knowledge source, whereas in value maximization problems, decisions are unconstrained. The decisions are associated with a cost function, for example, C(i, j), representing the cost of recommending decision j when the correct decision is i. Solution methods take advantage of this cost function to incorporate cost objectives in some or all of the three elements of the sequential decision model, namely, input selection, stopping, and classification. For example, the designer can trade off decision quality against the cost of providing the decisions so that maximum system value is delivered. We next describe value maximization studies for a variety of applications and knowledge sources, including training cases and belief networks.

4.1. Training Cases

The Value Based (VB) algorithm [MD93] is an example of a value maximization technique applicable to knowledge sources that consist of a set of cases.
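The cost function C(i, j) introduced above, and the way the VB algorithm uses it for classification, input selection, and stopping (described in the next paragraph), can be sketched at a single node of a tree. This is a minimal illustration under our own assumptions, not the implementation of [MD93]: cases are dicts with a hypothetical "class" key, empirical class frequencies stand in for probabilities, and all costs are made-up dollar amounts.

```python
from collections import Counter


def classify(cases, C):
    """Decision j minimizing expected cost sum_i P(i) * C[i][j]; returns (j, expected cost)."""
    counts = Counter(case["class"] for case in cases)
    n = len(cases)
    decisions = sorted({j for row in C.values() for j in row})

    def expected(j):
        return sum(k / n * C[i][j] for i, k in counts.items())

    best = min(decisions, key=expected)
    return best, expected(best)


def benefit(cases, inp, C):
    """Reduction in expected classification cost from observing `inp`."""
    _, before = classify(cases, C)
    after = 0.0
    for value in {case[inp] for case in cases}:
        subset = [case for case in cases if case[inp] == value]
        after += len(subset) / len(cases) * classify(subset, C)[1]
    return before - after


def vb_next_input(cases, inputs, input_costs, C):
    """Input with the highest benefit-to-cost ratio, or None (stop) if every ratio is below one."""
    ratios = {inp: benefit(cases, inp, C) / input_costs[inp] for inp in inputs}
    if not ratios:
        return None
    best = max(sorted(ratios), key=ratios.get)
    return best if ratios[best] >= 1 else None


# C[true class][decision]: hypothetical costs of granting or refusing credit.
C = {"good": {"grant": 0, "refuse": 100}, "bad": {"grant": 500, "refuse": 0}}
cases = [
    {"ref": "G", "class": "good"}, {"ref": "G", "class": "good"},
    {"ref": "B", "class": "bad"}, {"ref": "B", "class": "good"},
]
print(classify(cases, C))                             # ('refuse', 75.0)
print(vb_next_input(cases, ["ref"], {"ref": 10}, C))  # ref   (ratio 50 / 10 = 5)
print(vb_next_input(cases, ["ref"], {"ref": 60}, C))  # None  (ratio below one: stop)
```

Without the reference check, refusing everyone is cheapest (75 per case). Observing the reference lowers the expected classification cost to 25, a benefit of 50, so the input is acquired only while it costs no more than 50.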
The VB algorithm uses cost considerations in the design of the input selection, stopping, and classification elements. Input selection is designed to maximize the rate of return provided by the next input, where the rate of return is the benefit to cost ratio. Benefit is measured by the reduction in expected classification cost resulting from observing an input, and cost is the input's information acquisition cost. Since the VB algorithm is greedy, at each stage it chooses the input with the highest benefit to cost ratio. The VB algorithm stops when the benefit to cost ratio is less than one for all remaining inputs. Classification is designed to minimize expected classification costs.

The CART algorithm can be viewed as an attempt to maximize system value [BFOS84]. However, system value is defined in terms of misclassification costs only: inputs are assumed to be free, and correct decisions are assigned zero cost. The approach taken in the CART algorithm is somewhat different from that of the VB algorithm. In the first phase of tree construction, CART does not use any stopping rule, so the decision tree is grown until pure partitions are achieved. A pure partition is one in which all cases have the same class. Once the initial decision tree is constructed, cross validation techniques are used to prune the tree. Unlike VB, CART uses nongreedy search because it searches the space of pruned trees using cross validation. ICET [Turn95] is another induction algorithm that uses nongreedy search to maximize system value. ICET employs a genetic algorithm to evolve a population of input costs that are used to construct decision trees. These trees are compared using a set of test cases, and the search converges to a cost assignment that generates the best tree.

4.2. Belief Networks

The Pathfinder project [HHN92, HN92] applies utility considerations in making information gathering recommendations to the system user.
Pathfinder is a Bayesian belief network developed to support diagnostic decisions for lymph node diseases. There are 60 disease varieties (including benign, Hodgkin's disease, and metastatic) and 30 features or inputs that the system can potentially acquire to diagnose the disease. Pathfinder associates a utility u(d_j, d_k) with diagnosing disease d_j as disease d_k. For low levels of risk (less than 0.001 probability of death), it is assumed that the user's utility for reducing the risk of death is linear. The term “micromort” denotes a one-in-a-million chance of painless death, and Pathfinder assumes that the user is willing to buy or sell micromorts at $20 per micromort. Pathfinder makes only information gathering recommendations, because these recommendations were empirically found to be much less sensitive to utilities than diagnostic recommendations. In terms of the sequential decision model, Pathfinder uses utility considerations in the design of the input selection element only. Because an exhaustive search of possible information recommendations can be time consuming, Pathfinder makes myopic computations to determine the next input. Non-myopic computations are possible for special cases where there is a single binary hypothesis node [HHM92].

Another value maximization technique applied to belief networks is described in [HB95]. The application domain is a system that supports time-critical monitoring applications at the NASA mission control center. A time-critical decision is one in which the benefits of spending time to review additional information must be weighed against the criticality of the situation. In these decisions, the utility of the outcome diminishes significantly with delays in taking appropriate action. Delays occur because valuable time is lost in reviewing and processing additional information.
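Both Pathfinder's myopic information recommendations and the delay tradeoff in [HB95] rest on a one-step value-of-information comparison. The sketch below assumes a discrete hypothesis variable, a single candidate observation with known likelihoods, and a utility table over (hypothesis, action) pairs; the names and numbers are hypothetical. The observation's cost must be in the same units as utility: with utilities in dollars, Pathfinder's stated rate of $20 per micromort converts a test's risk of death into a dollar cost, and in the time-critical setting the cost would be the utility lost to the delay.

```python
def expected_utility(belief, utility):
    """Best achievable expected utility: max over actions of sum_h P(h) * U(h, action)."""
    actions = next(iter(utility.values())).keys()
    return max(sum(belief[h] * utility[h][a] for h in belief) for a in actions)


def myopic_voi(prior, likelihood, utility):
    """Expected utility gain from one observation, acting optimally before and after it."""
    eu_after = 0.0
    for lik in likelihood.values():  # lik: {hypothesis: P(outcome | hypothesis)}
        p_outcome = sum(prior[h] * lik[h] for h in prior)
        if p_outcome == 0:
            continue
        posterior = {h: prior[h] * lik[h] / p_outcome for h in prior}
        eu_after += p_outcome * expected_utility(posterior, utility)
    return eu_after - expected_utility(prior, utility)


def recommend_observation(prior, likelihood, utility, cost):
    """Myopic rule: recommend the observation only if its value exceeds its cost."""
    return myopic_voi(prior, likelihood, utility) > cost


MICROMORT_PRICE = 20.0  # dollars per micromort, the rate assumed by Pathfinder

# Hypothetical two-disease, two-treatment problem with utilities in dollars.
prior = {"d1": 0.5, "d2": 0.5}
utility = {"d1": {"treat1": 1000.0, "treat2": 0.0}, "d2": {"treat1": 0.0, "treat2": 1000.0}}
test = {"pos": {"d1": 0.8, "d2": 0.2}, "neg": {"d1": 0.2, "d2": 0.8}}

print(myopic_voi(prior, test, utility))  # approximately 300.0
# A test carrying 10 micromorts of risk costs 10 * 20 = 200 dollars, so it is recommended.
print(recommend_observation(prior, test, utility, 10 * MICROMORT_PRICE))  # True
```

The computation is myopic because it values each observation as if it were the last one acquired; evaluating sequences of observations is what makes the non-myopic case expensive.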
Horvitz and Barry [HB95] model the user's knowledge about the problem domain in a belief network so that the user's action can be predicted for a given display of information. Using the belief network, they evaluate the change (if any) in the user's action resulting from the display of additional information. The delay incurred in reviewing and processing additional information is balanced against the likely improvement in expected utility from displaying the information.

In addition to this stream of research on optimizing belief network usage, other research has attempted to incorporate payoff information within the structure of a belief network. An influence diagram is a graphical structure for modeling uncertain variables and the value of decisions. Shachter [Shac86] develops optimal policies for evaluating regular influence diagrams to maximize the net value of decisions. A regular influence diagram has no cycles and has a directed path that contains all the decision nodes. Value nodes, if present, have no successors.

4.3. Other Studies

Although most research in expert system value maximization has focused on maximizing expected system value, other objectives have also been proposed. One variation is the MR (Mean Risk) induction algorithm, which is designed to optimize a combined mean-variance criterion [MM96b]. In most previous research, performance variation has been used mainly in the spirit of hypothesis testing for means [Lian92]: the designer considers variance only to ensure that the system design is statistically robust. However, system users may prefer a stable system to a highly variable one even if the mean performance of the stable system is worse. The user's aversion to variation has been modeled using a constant with units of dollars per dollar squared.
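The units quoted above suggest a criterion of the form "mean minus a risk-aversion constant times variance": with per-case system value in dollars, variance is in dollars squared, so a constant in dollars per dollar squared turns it back into dollars. The sketch below assumes that standard mean-variance form, which may not be the exact MR criterion, and compares the per-case values of two hypothetical systems.

```python
from statistics import mean, pvariance


def mean_variance_score(values, risk_aversion):
    """Mean value minus risk_aversion * variance (risk_aversion in dollars per dollar squared)."""
    return mean(values) - risk_aversion * pvariance(values)


# Hypothetical per-case system values, in dollars.
stable = [10.0, 10.0, 10.0, 10.0]    # mean 10, variance 0
variable = [0.0, 30.0, 0.0, 30.0]    # mean 15, variance 225

for lam in (0.01, 0.05):
    better = "variable" if mean_variance_score(variable, lam) > mean_variance_score(stable, lam) else "stable"
    print(lam, better)
# 0.01 variable   (15 - 2.25 = 12.75 > 10)
# 0.05 stable     (15 - 11.25 = 3.75 < 10)
```

A sufficiently risk-averse user prefers the stable system even though its mean is lower, which is the preference the paragraph above describes.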
Up to a certain point, significant mean-variance tradeoffs do not exist, because measures that improve mean performance also improve (that is, reduce) variation in performance. This is the pruning region [Ming89]. Beyond the pruning region, the input selection, stopping, and classification elements of the MR algorithm explicitly trade off expected performance against variation in performance.

We conclude this section with a discussion of bias handling in expert systems. Value maximization techniques implicitly assume that the expert and the system user have identical payoff functions, which may not always be the case. Mookerjee [Mook96] draws upon sequential decision making and agency theory to model the classification behavior of an expert who may or may not be self-serving. The set of cases used as the knowledge source is debiased before it is used as input to an induction algorithm. The debiasing procedure uses evolutionary search to reconstruct the cases that the expert would have provided in the absence of economic bias. For knowledge sources (cases) with a factual class variable, bias is not an issue [BFOS84, Nune91]. However, when the knowledge source is a human expert, it pays to examine whether the expert is self-serving. If so, debiasing the data generates more system value from the standpoint of the user.

5. Open Research Areas

There are many interesting opportunities for future research in the optimization of expert systems, and a second wave of research has already begun to address some of them. Researchers are currently investigating optimization models for expert systems over horizons longer than a single consulting session, cost modeling issues (including temporal issues, scale economies, adversarial relationships, and cost uncertainties), and noisy input measurement.
5.1 Single and Multi Period Models

The horizon over which the economic objectives are to be optimized is an important issue for an expert system. Consider, for example, an expert system that aids in diagnosing and treating patients in a medical clinic. Previous models have mainly been concerned with the actions the system takes to collect information (such as conducting clinical tests or asking questions). When treatments are part of the optimization scope, it is necessary to model the dynamics of treatment and the possible outcomes of these treatments [BH96]. For example, a treatment may change the condition of the patient, so the results of earlier clinical tests could change.

Designing an expert system to optimize an economic objective (such as cost or value) over a multi-session horizon is a difficult problem. The benefit of gathering information would depend not only upon its immediate value in aiding the diagnosis, but also upon its diagnostic value in future consulting sessions. Of course, the information may become stale and would have to be refreshed periodically. Saharia and Diehr [SD90] study the economics of refreshing information used for decision making; their model may be applicable to the expert system scenario. In addition, the rich literature on multi-period inventory modeling may be useful for modeling the multi-session optimization of expert systems.

5.2 Cost Modeling

Modeling input and classification costs also poses some interesting opportunities for further research. We discuss four broad issues here: (1) temporal issues, (2) adversarial relationships, (3) economies of scale, and (4) cost uncertainties. Temporal issues may arise if input costs decrease as time passes. For example, a market demand forecast may be more costly and less certain at the product development phase than after the product has been in the marketplace for 6 months. However, the value of the forecast is higher at the product development phase than at a later phase.
Temporal issues could also arise if a delay in making a decision significantly reduces the benefits of the decision. For example, in time-critical applications such as emergency patient care and space shuttle launches, the consequences may be disastrous if appropriate actions are not taken quickly. Hence, temporal issues concerning the quality of inputs and decisions may be important to consider to ensure optimal system design.

Adversarial relationships arise when the system user and the information provider have asymmetric utilities and/or information. The information provider (such as an applicant for a loan) tries to provide attractive values in order to be classified favorably. The decision maker needs to verify these values because opportunistic behavior is suspected. A related issue arises in the verification of inputs even when there is no deliberate attempt to conceal or reveal attractive information: the noise in an input can be reduced by verification or resampling, resulting in a cost-quality tradeoff for the information.

Economies of scale occur when the bulk acquisition of inputs reduces the total cost of acquiring these inputs. The bulk acquisition of different inputs may arise when there is some dependence in the cost structure of these inputs. For example, Mannino and Mookerjee [MM96a] study a cost optimization problem with fixed and incremental costs of acquiring inputs. Fixed costs occur when a set of inputs requires a common operation, such as drawing blood for medical tests or opening an engine to observe the state of different components [Turn95]. Incremental costs are the extra costs incurred in observing a particular input after performing the common operation. Another context in which economies of scale could arise is the bulk solving of cases. For example, in loan granting, the bank could outsource the credit checking step to an outside agency.
Economies of scale could arise if the cost per credit check depends on the total volume of checks performed by the credit agency in a given period. The tradeoff here is between the lower cost of a credit check and the expected cost of wasting the credit information for a particular case.

The modeling of uncertainties in costs has some interesting opportunities as well. One issue is the granularity of the class structure. Continuing with the loan granting story, it is reasonable to suppose that the cost of wrongly granting a loan would depend upon the amount of the loan granted. Hence, for classification costs, uncertainty may arise from differences among the cases being classified. Mookerjee and Mannino [MM96b] model the classification cost for a {true class, assigned class} pair as a mean value and a variance. In addition to uncertainties in classification costs, the cost of acquiring an input could vary across cases. For example, verifying credit may be easier for an applicant with a high income and a good history than for an applicant with a history of bankruptcy. Uncertainties in input and classification costs could make system performance vary over time. Performance variation has been studied as a criterion to evaluate inductive system performance in [MMG95, MM96b].

5.3. Noisy Input Measurement

Most previous research on the economic optimization of expert systems has assumed that the pure inputs (information that cannot be derived by the system) supplied to the system are certain: once a certain (pure) input is observed, its value is known with certainty. Unfortunately, some expert systems operate in environments where the user cannot supply the value of an input with certainty. For example, if an auditor is asked whether a particular firm is a “going concern,” the response may not be a certain yes or a certain no. In such situations, expert systems allow the response to be provided with a certain level of confidence.
This confidence is attached to the value of the input and propagated through the rules of the system to influence the confidence of the conclusions reached by the system. The problem with uncertainty in pure inputs is that a finite mapping between pure inputs and conclusions cannot easily be obtained. To derive such a mapping, one would have to consider all possible levels of uncertainty attached to all states of all pure inputs. Since uncertainty levels may be continuous (such as probability or certainty factor values), obtaining a finite mapping between pure inputs and outputs becomes problematic.

One approach to resolving this situation may be to convert a continuous uncertainty scale to a discrete one. For example, if uncertainty levels between 0.0 and 0.25 do not affect the output in any way, then the entire range could be mapped to a single uncertainty level. Detecting insensitive uncertainty ranges requires a careful analysis of the system's rules and of the calculus employed to handle uncertainty. Another approach would be to examine the raw data that the expert uses to provide the value of an input where uncertainty may exist. It would be necessary to examine all uncertain pure inputs and identify the raw (certain) data that yields these inputs. The fuzzy mapping between raw data and uncertain input variables would then be included as rules in the expert system. Other techniques, such as neural networks, may be useful for learning the fuzzy mapping. Although uncertainty in pure inputs is a real obstacle to the widespread deployment of sequential models, we know of no previous research that has addressed this “uncertainty inclusion” problem.

6. Summary

We surveyed sequential decision models as tools for improving the operation of expert systems. Sequential decision models should be considered in the optimization of expert systems when the cost of acquiring inputs is significant and not all inputs are available before the system operates.
We classified sequential decision models by economic objective and knowledge source. Cost minimization models are more prevalent than value maximization models because the value of decisions is often difficult to estimate. Most work is reported for deterministic representations such as traditional if-then rules, although there has recently been increased activity on uncertain representations such as belief networks. Many research opportunities remain to improve expert system operation with sequential decision models.

A key insight of this survey concerns separate versus joint concept and strategy formation. In cost minimization problems, strict consistency requirements between the knowledge source and the optimized form are often enforced. In such cases, it is useful to separate the steps of concept and strategy formation, because strategy formation can be greatly simplified if minimal and complete concepts can be generated. If the consistency requirement is less strict (for example, if training cases are used to induce the concepts), then concept and strategy formation should be optimized jointly; here, a concept formation technique that ignores costs will likely prove suboptimal. For value maximization problems, the best choice appears to be to perform concept and strategy formation in a single, joint step.

References

BFOS84 Breiman, L., Friedman, J., Olshen, R., and Stone, C. Classification and Regression Trees, Wadsworth Publishing, Belmont, CA, 1984.
BH95 Breese, J. and Heckerman, D. “Decision-Theoretic Case-Based Reasoning,” forthcoming in IEEE Transactions on Systems, Man, and Cybernetics, August 1995; also available as Microsoft Technical Report MSR-TR-95-03.
BH96 Breese, J. and Heckerman, D. “Decision Theoretic Troubleshooting: A Framework for Repair and Experiment,” in Proc. Twelfth Conference on Uncertainty in Artificial Intelligence, August 1996; also available as Microsoft Technical Report MSR-TR-96-06.
BM85 Bagchi, A. and Mahanti, A. “AND/OR Graphs Heuristic Search Methods,” Journal of the ACM 32, 1 (1985), 28-51.
BO89 Barker, V. and O’Connor, D. “Expert Systems for Configuration at Digital,” Communications of the ACM 32, 3 (March 1989), 298-318.
BW91 Balakrishnan, A. and Whinston, A. “Information Issues in Model Specification,” Information Systems Research 2, 4 (1991), 263-286.
CB93 Coenen, F. and Bench-Capon, T. Maintenance of Expert Systems, The A.P.I.C. Series, Number 40, Academic Press, San Diego, CA, 1993.
Cend87 Cendrowska, J. “PRISM: An Algorithm for Inducing Modular Rules,” International Journal of Man-Machine Studies 27 (1987), 349-370.
CGD88 Chakrabarti, S., Ghose, S., and DeSarkar, S. “Admissibility of AO* When Heuristics Overestimate,” Artificial Intelligence 34 (1988), North Holland, 97-113.
Davi80 Davis, R. “Content Reference: Reasoning About Rules,” Artificial Intelligence 15 (1980), 223-239.
DM93 Dos Santos, B. and Mookerjee, V. “Expert System Design: Minimizing Information Acquisition Costs,” Decision Support Systems 9 (1993), North Holland, 161-181.
DW87 de Kleer, J. and Williams, B. “Diagnosing Multiple Faults,” Artificial Intelligence 32 (1987), Elsevier, 97-130.
Fu87 Fu, K. “Artificial Intelligence,” in Handbook of Human Factors, G. Salvendy (Ed.), John Wiley & Sons, 1987.
Gare81 Garey, M. “Optimal Binary Identification Procedures,” SIAM Journal of Applied Math 23, 2 (1981), 173-186.
GG74 Garey, M. and Graham, R. “Performance Bounds on the Splitting Algorithm for Binary Testing,” Acta Informatica 3 (1974), 347-355.
GR73 Ganapathy, S. and Rajaraman, V. “Information Theory Applied to the Conversion of Decision Tables to Computer Programs,” Communications of the ACM 16, 9 (September 1973), 532-539.
HB95 Horvitz, E. and Barry, M. “Display of Information for Time-Critical Decision Making,” in Proc. Eleventh Conference on Uncertainty in Artificial Intelligence, Montreal, August 1995.
HBR95 Heckerman, D., Breese, J., and Rommelse, K. “Troubleshooting under Uncertainty,” Communications of the ACM (March 1995).
HHM92 Heckerman, D., Horvitz, E., and Middleton, B. “An Approximate, Nonmyopic Computation for Value of Information,” in Proc. Seventh Conference on Uncertainty in Artificial Intelligence, University of California, Los Angeles, July 1992, 135-141.
HHN92 Heckerman, D., Horvitz, E., and Nathwani, B. “Toward Normative Expert Systems: Part I. The PathFinder Project,” Methods of Information in Medicine 31 (1992), 90-115.
HN92 Heckerman, D. and Nathwani, B. “An Evaluation of the Diagnostic Accuracy of PathFinder,” Computers and Biomedical Research 25 (1992), 56-74.
HR76 Hyafil, L. and Rivest, R. “Constructing Optimal Binary Decision Trees is NP-Complete,” Information Processing Letters 5, 1 (May 1976), 15-17.
Huff52 Huffman, D. “A Method for the Construction of Minimum Redundancy Codes,” Proc. IRE 40, 10 (October 1952), 1098-1101.
ICHQ93 Irani, K., Cheng, J., Fayyad, U., and Qian, Z. “Applying Machine Learning to Semiconductor Manufacturing,” IEEE Expert 8, 1 (February 1993), 41-47.
Jame84 James, M. Classification Algorithms, John Wiley and Sons, 1985.
John60 Johnson, R. “An Information Theory Approach to Diagnosis,” in Proc. 6th Symposium on Reliability and Quality Control, 1960, 102-109.
JMW88 Jacob, V., Moore, J., and Whinston, A. “Artificial Intelligence and the Management Science Practitioner: Rational Choice and Artificial Intelligence,” Interfaces 18, 4 (1988), 24-35.
KJ73 King, P. and Johnson, R. “Some Comments on the Use of Ambiguous Decision Tables and Their Conversion to Computer Programs,” Communications of the ACM 16, 5 (May 1973), 287-290.
Kolo91 Kolodner, J. “Improving Human Decision Making through Case-Based Decision Making,” AI Magazine, American Association of Artificial Intelligence, Summer 1991, 52-67.
Lian92 Liang, T. “A Composite Approach to Inducing Knowledge for Expert System Design,” Management Science 38, 1 (1992), 1-17.
MB85 Mahanti, A. and Bagchi, A. “AND/OR Graph Heuristic Search Methods,” Journal of the ACM 32, 1 (1985), 28-51.
MD93 Mookerjee, V. and Dos Santos, B. “Inductive Expert System Design: Maximizing System Value,” Information Systems Research 4, 2 (June 1993), 111-140.
Ming89 Mingers, J. “An Empirical Comparison of Pruning Methods for Decision Tree Induction,” Machine Learning 4, 2 (1989), 227-243.
MM73 Martelli, A. and Montanari, U. “Additive And/Or Graphs,” in Proc. 3rd International Conference on Artificial Intelligence, Stanford, CA, August 1973, 1-11.
MM78 Martelli, A. and Montanari, U. “Optimizing Decision Trees Through Heuristically Guided Search,” Communications of the ACM 21, 12 (December 1978), 1025-1039.
MM97 Mookerjee, V. and Mannino, M. “Redesigning Case Retrieval Systems to Reduce Information Acquisition Costs,” Information Systems Research (in press), 1997. URL http://weber.u.washington.edu/~zmann
MM96a Mannino, M. and Mookerjee, V. “Redesigning Expert Systems: Heuristics for Efficiently Generating Low Cost Information Acquisition Strategies,” under revision at INFORMS Journal on Computing, 1996. URL http://weber.u.washington.edu/~zmann
MM96b Mookerjee, V. and Mannino, M. “Mean-Variance Tradeoffs in Inductive Expert System Construction,” submitted to Information Systems Research, 1996. URL http://weber.u.washington.edu/~zmann
MMG95 Mookerjee, V., Mannino, M., and Gilson, B. “Improving the Performance Stability of Inductive Expert Systems Under Input Noise,” Information Systems Research 6, 4 (December 1995).
MMG97 Mannino, M., Mookerjee, V., and Gilson, B. “Overcoming Non-Monotonicity in Bayesian Belief Networks,” submitted to IEEE Transactions on Knowledge and Data Engineering, 1997. URL http://weber.u.washington.edu/~zmann
Mook96 Mookerjee, V. “Debiasing Training Data for Inductive Expert System Construction,” submitted to INFORMS Journal on Computing, 1996.
More82 Moret, B. “Decision Trees and Diagrams,” ACM Computing Surveys 14, 4 (1982), 593-623.
MRW90 Moore, J., Richmond, W., and Whinston, A. “A Decision Theoretic Approach to Information Retrieval,” ACM Transactions on Database Systems 15, 3 (1990), 311-340.
MRW94 Moore, J., Rao, H., and Whinston, A. “Multi-Agent Resource Allocation: An Incomplete Information Perspective,” IEEE Transactions on Systems, Man, and Cybernetics 24, 8 (August 1994).
MW86 Moore, J. and Whinston, A. “A Model of Decision Making with Sequential Information Acquisition - Part I,” Decision Support Systems 2, 4 (1986), North Holland, 285-307.
MW87 Moore, J. and Whinston, A. “A Model of Decision Making with Sequential Information Acquisition - Part II,” Decision Support Systems 3, 1 (1987), North Holland, 47-72.
Myer72 Myers, H. “Compiling Optimized Code from Decision Tables,” IBM Journal of Research and Development 16, 5 (September 1972), 489-503.
Newq90 Newquist, H. “No Summer Returns,” AI Expert (October 1990).
Nune91 Nunez, M. “The Use of Background Knowledge in Decision Tree Induction,” Machine Learning 6 (1991), 231-250.
PA90 Pattipati, K. and Alexandridis, M. “Application of Heuristic Search and Information Theory to Sequential Fault Diagnosis,” IEEE Transactions on Systems, Man, and Cybernetics 20, 4 (July/August 1990), 872-887.
Pear84 Pearl, J. Heuristics: Intelligent Search Strategies for Computer Problem Solving, Addison-Wesley, 1984.
PHH71 Pollack, S., Hicks, H., and Harrison, W. Decision Tables: Theory and Practice, Wiley, New York, 1971.
Quin86 Quinlan, J. “Induction of Decision Trees,” Machine Learning 1 (1986), 81-106.
Quin87 Quinlan, J. “Simplifying Decision Trees,” International Journal of Man-Machine Studies 27 (1987), 221-234.
RS66 Reinwald, L. and Soland, R. “Conversion of Limited-Entry Decision Tables to Optimal Computer Programs,” Journal of the ACM 13, 3 (July 1966), 339-358.
Schw74 Schwayder, K. “Extending the Information Theory Approach to Converting Limited-Entry Decision Tables to Computer Programs,” Communications of the ACM 17, 9 (September 1974), 532-537.
SD90 Saharia, A. and Diehr, G. “A Refresh Scheme for Remote Snapshots,” Information Systems Research 1, 3 (1990).
Shac86 Shachter, R. “Evaluating Influence Diagrams,” Operations Research 34, 6 (November-December 1986), 871-882.
SKA72 Schwayder, K., Kenney, A., and Ainslie, R. “Decision Tables: A Tool for Tax Practitioners,” The Tax Advisor 3, 6 (June 1972), 336-345.
SM91 Simoudis, E. and Miller, J. “The Application of CBR to Help Desk Applications,” in Proc. Workshop on Case-Based Reasoning, 1991, pp. 25-36.
SS76 Schumacher, H. and Sevcik, K. “The Synthetic Approach to Decision Table Conversion,” Communications of the ACM 19, 6 (June 1976), 343-351.
SS91 Smith, R. and Scott, C. Innovative Applications of Artificial Intelligence, Volume 3, AAAI Press, Menlo Park, CA, 1991.
SW49 Shannon, C. and Weaver, W. The Mathematical Theory of Communication, University of Illinois Press, 1949 (published in 1964).
TK91 Tiong, R. and Koo, T. “Selecting Construction Formwork: An Expert System Adds Economy,” Expert Systems (Spring 1991).
Verh72 Verhelst, M. “The Conversion of Limited-Entry Decision Tables to Optimal and Near-Optimal Flowcharts: Two New Algorithms,” Communications of the ACM 15, 11 (November 1972), 974-980.
VHD82 Varshney, P., Hartman, P., and DeFaria, J. “Application of Information Theory to Sequential Fault Diagnosis,” IEEE Transactions on Computers 31, 2 (1982), 164-170.