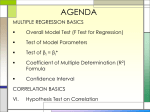

Chapter 14, part C

Goodness of Fit

III. Coefficient of Determination

We developed an equation, but we don't really know how well it fits the data. The coefficient of determination gives us a measure of the goodness of fit for an estimated regression equation. The difference between the actual value yi and its estimate ŷi is called a residual; it tells us how closely the estimate comes to the actual value.

A. Total Sum of Squares (SST)

If you had to estimate repair cost but had no knowledge of the car's age, what would be your best guess? Probably the mean repair cost, ȳ. If we subtract the mean from each yi, we calculate the error involved in using the mean to estimate cost. I hope our regression equation does a better job of estimating repair cost than just using the mean!

The calculation of SST:

$$\text{SST} = \sum_{i=1}^{n} (y_i - \bar{y})^2$$

For the 4th observation, this difference is 300 - 276 = 24. Do this for each observation, square each difference, sum the squares, and you get SST = 62,870.

B. Sum of Squares due to Error (SSE)

Every observation i has a residual. The method of least squares minimizes the sum of the squared residuals:

$$\text{SSE} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2$$

Some observations will be overestimated, some underestimated. A predicted value ŷi that is $20 too high is just as large a "miss" as one that is $20 too low, so squaring each residual gives equal weight to positive and negative residuals of equal magnitude.

Take the 4th observation. The estimated repair cost for a 4-year-old car is 75.5(4) + 49.5 = $351.50, but the actual value is y4 = $300. So the residual is 300 - 351.50 = -51.50, an overestimate of $51.50. Square the residual for every observation, sum the squares, and you get SSE = 5,867.50.

[Figure: Repair Cost and Age. Cost ($0 to $500) plotted against Age (years), showing the fitted line y = 75.5x + 49.5, the mean ȳ = 276, and the 51.50 gap between the line and the 4th observation.]

In the figure you can also see the variation around the mean, 276.

C. Sum of Squares due to Regression (SSR)

SSE measures how closely the observations are clustered around the regression line; SST measures how closely they are clustered around the mean. What's left over is called SSR.
$$\text{SST} = \text{SSE} + \text{SSR}, \quad \text{where} \quad \text{SSR} = \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2 = 57{,}002.5$$

Since our regression model is designed to minimize SSE, I would hope that SSR makes up the bulk of the total variation in y.

D. Coefficient of Determination (R²)

All of the variation in y is represented by SST. Since least squares is designed to minimize SSE, a very good model is one that explains most of the variation in y and thus has a very small SSE. Equivalently, you could think of a good model as having a large SSR relative to SSE. If so, SSR/SST is very close to 1. This ratio of SSR to SST is called R², the coefficient of determination.

$$R^2 = \frac{\text{SSR}}{\text{SST}}, \qquad 0 \le R^2 \le 1$$

A terrible model has a very large SSE and a very small SSR, so R² is very close to zero. An excellent model has an R² very close to 1.

Interpretation of R²

• In the repair cost example, R² = 57,002.5 / 62,870 = .9067. This means that 90.67% of the total sum of squares can be explained by using the estimated regression equation between age and repair cost.

Excel Output

I've highlighted the relevant information in the table of regression output. Can you pick out the important information that we have been discussing?

SUMMARY OUTPUT

| Regression Statistics | |
|---|---|
| Multiple R | 0.95219352 |
| R Square | 0.906672499 |
| Adjusted R Square | 0.875563332 |
| Standard Error | 44.2248045 |
| Observations | 5 |

ANOVA

| | df | SS | MS | F |
|---|---|---|---|---|
| Regression | 1 | 57002.5 | 57002.5 | 29.14487 |
| Residual | 3 | 5867.5 | 1955.833 | |
| Total | 4 | 62870 | | |

| | Coefficients | Standard Error | t Stat | P-value |
|---|---|---|---|---|
| Intercept | 49.5 | 46.38336627 | 1.067193 | 0.364141 |
| Age | 75.5 | 13.98511113 | 5.398599 | 0.012457 |
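
As a quick arithmetic check, here is a minimal Python sketch that recomputes several of the Excel figures from the two sums of squares reported in these notes (SSR = 57,002.5 and SSE = 5,867.5, with n = 5 observations). The function names `sums_of_squares` and `fit_summary` are illustrative and not part of the lecture. The formulas for Adjusted R Square, Standard Error, and F are the standard textbook ones for a regression with k predictors; they are assumed here rather than derived in this section. The raw repair cost data is not reproduced in this transcript, so the sketch works from the reported sums instead of from individual observations.

```python
import math


def sums_of_squares(y, y_hat):
    """Return (SST, SSE, SSR) for actual values y and fitted values y_hat."""
    y_bar = sum(y) / len(y)
    sst = sum((yi - y_bar) ** 2 for yi in y)               # variation around the mean
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # squared residuals
    ssr = sum((fi - y_bar) ** 2 for fi in y_hat)           # variation explained by the fit
    return sst, sse, ssr


def fit_summary(ssr, sse, n, k=1):
    """Goodness-of-fit figures from SSR and SSE (n observations, k predictors)."""
    sst = ssr + sse                 # SST = SSE + SSR
    r2 = ssr / sst                  # coefficient of determination
    mse = sse / (n - k - 1)         # mean square error (residual MS)
    return {
        "SST": sst,
        "R Square": r2,
        "Multiple R": math.sqrt(r2),
        "Adjusted R Square": 1 - (1 - r2) * (n - 1) / (n - k - 1),
        "Standard Error": math.sqrt(mse),
        "F": (ssr / k) / mse,
    }


# Sums of squares as reported in the notes for the repair cost example.
summary = fit_summary(ssr=57002.5, sse=5867.5, n=5)
for name, value in summary.items():
    print(f"{name}: {value:.6f}")

# The 4th observation: fitted value and residual from y = 75.5x + 49.5.
y_hat_4 = 49.5 + 75.5 * 4
residual_4 = 300 - y_hat_4
print(f"Fitted cost at age 4: {y_hat_4}, residual: {residual_4}")
```

If the individual observations are available, `sums_of_squares` can be applied to the actual and fitted costs to obtain SST, SSE, and SSR directly, and its output can then be passed to `fit_summary`.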