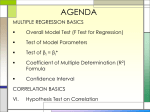

Multiple Regression

What is multiple regression?

Multiple regression predicts a score on Y from several predictors. Why is this important? Behavior is rarely a function of just one variable; it is usually influenced by many. The idea is that using several variables to predict the outcome should give a more accurate predicted score.

Simple Linear Regression

For simple linear regression, the function is

$$y = \beta_0 + \beta_1 x$$

where $\beta_0$ is the intercept and $\beta_1$ is the slope, that is, the coefficient associated with the predictor variable $x$.

The Model

Multiple linear regression refers to regression applications in which there are several independent variables, $x_1, x_2, \ldots, x_p$. A multiple linear regression model with $p$ independent variables has the equation

$$y = \beta_0 + \beta_1 x_1 + \cdots + \beta_p x_p + \varepsilon$$

where $\varepsilon$ is a random variable with mean 0 and variance $\sigma^2$.

The prediction equation

A prediction equation for this model fitted to data is

$$\hat{y} = b_0 + b_1 x_1 + \cdots + b_p x_p$$

where $\hat{y}$ denotes the "predicted" value computed from the equation, and $b_i$ denotes an estimate of $\beta_i$. These estimates are usually obtained by the method of least squares: among all possible values of the parameter estimates, choose the ones that minimize the sum of squared residuals.

A few concepts…

In multiple regression, a dependent variable (the criterion variable) is predicted from several independent variables (predictor variables) simultaneously. We form a linear combination of these variables that best predicts the outcome, and then assess the contribution that each predictor variable makes to the equation.

The correlation between the criterion variable (Y) and the set of predictor variables (Xs) is Multiple R. In multiple regression we write R instead of r. Multiple R squared is the proportion of the variation in Y that can be accounted for by the combination of variables.

Minitab, and other statistical software, will also show a value of adjusted R squared.
This is really a correction factor. Values of R squared tend to be larger in samples than they are in populations, and the adjusted R squared is an attempt to correct for this. The correction matters most when there are many variables and few participants, since that scenario gives an inflated value of R squared just by chance. The equation substitutes a common slope for the multiple variables and is not meaningfully interpreted but is used in the overall calculation.

Doing the calculations

Computing the estimates by hand is tedious. They are ordinarily obtained using a regression computer program. Standard errors are also usually part of the output from a regression program.

ANOVA

An analysis of variance for a multiple linear regression model with p independent variables fitted to a data set with n observations is:

| Source of Variation | DF | SS | MS |
|---|---|---|---|
| Model | p | SSR | MSR |
| Error | n - p - 1 | SSE | MSE |
| Total | n - 1 | SST | |

Sums of squares

The sums of squares SSR, SSE, and SST have the same definitions in relation to the model as in simple linear regression:

$$\mathrm{SSR} = \sum (\hat{y}_i - \bar{y})^2, \qquad \mathrm{SSE} = \sum (y_i - \hat{y}_i)^2, \qquad \mathrm{SST} = \sum (y_i - \bar{y})^2$$

$$\mathrm{SST} = \mathrm{SSR} + \mathrm{SSE}$$

The value of SST does not change with the model. It depends only on the values of the dependent variable y. SSE decreases (or at least never increases) as variables are added to a model, and SSR increases by the same amount. This increase in SSR is the amount of variation due to variables in the larger model that was not accounted for by variables in the smaller model.

This increase in regression sum of squares is sometimes denoted SSR(added variables | original variables), where "original variables" is the list of independent variables that were in the model before the new variables were added, and "added variables" is the list of variables that were added to obtain the new model.
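
The least-squares fit and the ANOVA quantities described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the procedure a package such as Minitab actually uses: it solves the normal equations directly with the standard library, the function names (`solve_linear_system`, `fit_least_squares`, `anova_table`) are hypothetical, and the small data set is made up for the example.

```python
def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [A | b]; copying keeps the caller's data untouched.
    M = [list(map(float, row)) + [float(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x


def fit_least_squares(X, y):
    """Return [b0, b1, ..., bp] minimizing the sum of squared residuals."""
    Z = [[1.0] + list(row) for row in X]  # leading 1 carries the intercept b0
    k = len(Z[0])
    ZtZ = [[sum(z[i] * z[j] for z in Z) for j in range(k)] for i in range(k)]
    Zty = [sum(z[i] * yi for z, yi in zip(Z, y)) for i in range(k)]
    return solve_linear_system(ZtZ, Zty)  # normal equations: (Z'Z) b = Z'y


def anova_table(X, y):
    """Sums of squares, degrees of freedom and mean squares for the fit."""
    coef = fit_least_squares(X, y)
    y_hat = [coef[0] + sum(bj * xj for bj, xj in zip(coef[1:], row)) for row in X]
    n, p = len(y), len(X[0])
    y_bar = sum(y) / n
    ssr = sum((yh - y_bar) ** 2 for yh in y_hat)
    sse = sum((yi - yh) ** 2 for yi, yh in zip(y, y_hat))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    return {
        "coef": coef,
        "df": (p, n - p - 1, n - 1),  # model, error, total
        "SSR": ssr, "SSE": sse, "SST": sst,
        "MSR": ssr / p, "MSE": sse / (n - p - 1),
    }


# Made-up data: n = 6 observations, p = 2 predictors.
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 6], [6, 5]]
y = [3.1, 3.9, 7.2, 7.8, 11.1, 12.0]

table = anova_table(X, y)
for name in ("SSR", "SSE", "SST", "MSR", "MSE"):
    print(name, round(table[name], 4))
print("SST - (SSR + SSE):", table["SST"] - (table["SSR"] + table["SSE"]))
```

The last printed value should be zero up to floating-point rounding, which is the identity SST = SSR + SSE. Solving the normal equations directly keeps every step visible, but for larger or nearly collinear data a regression program is the safer choice.
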
The overall SSR for the new model can be partitioned into the variation attributable to the original variables plus the variation due to the added variables that is not due to the original variables:

SSR(all variables) = SSR(original variables) + SSR(added variables | original variables).

R2

Generally speaking, larger values of the coefficient of determination $R^2 = \mathrm{SSR}/\mathrm{SST}$ indicate a better fitting model. The value of $R^2$ can never decrease when variables are added to the model, and in practice it almost always increases. However, this does not necessarily mean that the model has actually been improved. The increase in $R^2$ can be a mathematical artifact rather than a meaningful indication of an improved model. Sometimes an adjusted $R^2$ is used to overcome this shortcoming of the usual $R^2$.
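
The behavior of $R^2$ and adjusted $R^2$ when a weak variable is added can be illustrated with a short Python sketch. The sums of squares below are hypothetical numbers chosen for the example, not results from any data set, and the function names are illustrative. The adjusted $R^2$ formula used, $1 - \frac{\mathrm{SSE}/(n-p-1)}{\mathrm{SST}/(n-1)}$, is the commonly used definition; the text above does not state which formula it has in mind.

```python
def r_squared(ssr, sst):
    """Coefficient of determination, R^2 = SSR / SST."""
    return ssr / sst


def adjusted_r_squared(ssr, sst, n, p):
    """Commonly used adjusted R^2: 1 - (SSE / (n - p - 1)) / (SST / (n - 1))."""
    sse = sst - ssr  # from SST = SSR + SSE
    return 1.0 - (sse / (n - p - 1)) / (sst / (n - 1))


def extra_sum_of_squares(ssr_all, ssr_original):
    """SSR(added variables | original variables)."""
    return ssr_all - ssr_original


# Hypothetical values: n = 10 observations, SST is the same for both models.
n = 10
sst = 100.0
ssr_original = 60.0  # model with p = 1 predictor
ssr_all = 61.0       # model with p = 2 predictors (one weak variable added)

extra = extra_sum_of_squares(ssr_all, ssr_original)
r2_original = r_squared(ssr_original, sst)
r2_all = r_squared(ssr_all, sst)
adj_original = adjusted_r_squared(ssr_original, sst, n, p=1)
adj_all = adjusted_r_squared(ssr_all, sst, n, p=2)

print("SSR(added | original):", extra)
print("R^2:         ", r2_original, "->", r2_all)
print("adjusted R^2:", round(adj_original, 4), "->", round(adj_all, 4))
```

With these numbers $R^2$ rises when the second variable is added while adjusted $R^2$ falls, because the small gain in SSR does not make up for the degree of freedom spent on the extra coefficient.
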