* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download Likelihood approaches to sensory coding in auditory cortex

Neuroethology wikipedia , lookup

Neurocomputational speech processing wikipedia , lookup

Artificial neural network wikipedia , lookup

Sensory substitution wikipedia , lookup

Premovement neuronal activity wikipedia , lookup

Embodied cognitive science wikipedia , lookup

Stimulus (physiology) wikipedia , lookup

Central pattern generator wikipedia , lookup

Eyeblink conditioning wikipedia , lookup

Recurrent neural network wikipedia , lookup

Neural modeling fields wikipedia , lookup

Perception of infrasound wikipedia , lookup

Neural engineering wikipedia , lookup

Neuroeconomics wikipedia , lookup

Executive functions wikipedia , lookup

Neuroplasticity wikipedia , lookup

Optogenetics wikipedia , lookup

Time perception wikipedia , lookup

Convolutional neural network wikipedia , lookup

Neuropsychopharmacology wikipedia , lookup

Metastability in the brain wikipedia , lookup

Sensory cue wikipedia , lookup

Sound localization wikipedia , lookup

Neural correlates of consciousness wikipedia , lookup

Types of artificial neural networks wikipedia , lookup

Development of the nervous system wikipedia , lookup

Synaptic gating wikipedia , lookup

Psychophysics wikipedia , lookup

Nervous system network models wikipedia , lookup

Cortical cooling wikipedia , lookup

Cognitive neuroscience of music wikipedia , lookup

Neural coding wikipedia , lookup

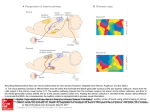

INSTITUTE OF PHYSICS PUBLISHING
Network: Computation in Neural Systems
Network: Comput. Neural Syst. 14 (2003) 83–102
PII: S0954-898X(03)57828-9

Likelihood approaches to sensory coding in auditory cortex

Rick L Jenison¹ and Richard A Reale

Departments of Psychology and Physiology, Waisman Center, University of Wisconsin-Madison, 1202 W Johnson Street, Madison, WI 53706, USA

E-mail: [email protected]

Received 19 March 2002, in final form 9 November 2002
Published 17 January 2003
Online at stacks.iop.org/Network/14/83

¹ Author to whom any correspondence should be addressed.

0954-898X/03/010083+20$30.00 © 2003 IOP Publishing Ltd. Printed in the UK.

Abstract

Likelihood methods began their evolution in the early 1920s with R A Fisher and have developed into a rich framework for inferential statistics. This framework offers tools for analysing the differential geometry of the full likelihood function based on observed data. We examine likelihood functions derived from inverse Gaussian (IG) probability density models of the responses of ensembles of single cortical units. Specifically, we investigate the problem of sound localization from the observation of an ensemble of neural responses recorded from the primary (AI) field of the auditory cortex. The problem is framed as a probabilistic inverse problem with multiple sources of ambiguity. Observed and expected Fisher information are defined for the IG cortical ensemble likelihood functions. Receptive field functions of multiple acoustic parameters are constructed and linked to the IG density. The impact of estimating multiple acoustic parameters related to the direction of a sound is discussed, and the implications of eliminating nuisance parameters are considered. We examine the degree of acuity afforded by a small ensemble of cortical neurons for locating sounds in space, and show the predicted patterns of estimation errors, which tend to follow psychophysical performance.

1. Introduction

The process by which neural responses are translated into a perceptual decision or estimate can be viewed as the solution to a probabilistic inverse problem: given a set of responses, what is the most likely state of the environment that evoked them? Historically, Bayesians were the first to frame such problems in inferential statistics, and central to the Bayesian approach is the use of axiomatic prior probabilities. One aim of statistical inference is to infer the value of an unknown parameter from observed data. Likelihood approaches, which address this aim, were introduced by Fisher (1925) within the framework of estimation theory. Fisher believed that objective inference could be based solely on the likelihood function (Efron 1998, Pawitan 2001). The likelihood function has since come to represent not just a method for generating point estimates but a more general way of reasoning objectively under uncertainty, a task the brain must continuously undertake.

However, the primary sensory cortices may well not be the appropriate stage at which to reduce responses to point estimates, such as maximum likelihood estimates, in order to make a decision or perceptual estimate. Gold and Shadlen (2001) have recently suggested that log-likelihoods may be the natural currency for combining sensory information from several sources, and may provide a bridge between sensory and motor processing. Transforming or conveying the entire likelihood function may therefore be a more appropriate way to think about how information is evaluated, since a point estimate rarely summarizes the likelihood function adequately.
Other ways of simplifying a multiparameter log-likelihood function may involve removing some parameters, leaving those for which particular areas of cortex may be specialized. Within the formal likelihood framework, nuisance parameters can be eliminated by profiling them out or by integrating them out, and the impact of this parameter reduction on information can be analysed. Under some conditions, the log-likelihood function can be well approximated by Taylor series expansions of increasing order; often a second-order (quadratic) expansion is sufficient. In these cases the log-likelihood function can be summarized by just the maximum likelihood estimate and the curvature about the maximum. This curvature (the negative second derivative of the log-likelihood at its maximum) defines the quantity referred to as the observed Fisher information. In this paper we describe the application of likelihood approaches to the problem of sound localization from the observation of an ensemble of neural responses recorded from the primary auditory (AI) cortical field. The problem is framed as a probabilistic inverse problem with multiple sources of ambiguity.

2. Auditory cortex

Understanding how the sensory cortices represent and convey information about the physical world is one of the central questions facing computational neuroscience. Our specific interest in recent years has been the functional evaluation of ensembles of neurons in auditory cortex, in order to characterize statistically the information available for estimating aspects of the spatial environment. Auditory cortex in the cat (figure 1) is located on the exposed ectosylvian gyri and extends into the suprasylvian, anterior ectosylvian and posterior ectosylvian sulci (Woolsey 1961, Merzenich et al 1975, Knight 1977, Reale and Imig 1980).
In figure 1, superimposed lines delimit four auditory fields (anterior A, primary AI, posterior P and ventroposterior VP), each distinguished by an orderly (tonotopic) and complete representation of the audible frequency spectrum. A core of tonotopically organized fields is a common finding in the auditory cortex of mammals. Surrounding the four core fields is a peripheral belt of auditory cortex in which complete tonotopic representations are generally not evident. However, the belt region can be partitioned into a number of areas (e.g. second AII, ventral V, temporal T and dorsoposterior DP) on the basis of cytoarchitectonics, chemoarchitectonics, connections and position relative to the core fields. Neurons in the belt areas of the cat, unlike those in the core fields, often respond poorly to pure tone stimuli and exhibit broad or complex patterns of spectral tuning, even for tones near their threshold intensity (Merzenich et al 1975, Reale and Imig 1980, Schreiner and Cynader 1984, Sutter and Schreiner 1991). For these reasons, it is generally considered unlikely that cells in the core fields and belt areas process auditory information in a similar manner. Whether in core fields or in belt areas, neurons that possess complex spectro-temporal sensitivities are often hypothesized to be candidates for specializations in function (Middlebrooks and Zook 1983, Sutter and Schreiner 1991, He et al 1997).

[Figure 1. (a) Cat brain showing idealized boundaries of auditory cortical fields: anterior A, primary AI, posterior P, ventroposterior VP, second AII, temporal T and dorsoposterior DP. Cat brain courtesy of Wally Welker, University of Wisconsin Comparative Mammalian Brain Collection.]
In this regard we are particularly interested in the proposition that such neurons may represent sound features along a few independent base dimensions, effectively reducing the number of parameters that determine the representation (Schreiner 1998).

The directional sensitivity of auditory cortical neurons in the cat to free-field sounds has been studied for nearly 30 years. Generally, because of physical limitations, only sensitivity to changes in sound-source azimuth was investigated in detail (Eisenman 1974, Middlebrooks and Pettigrew 1981, Middlebrooks et al 1994, Middlebrooks 1998, Imig et al 1990, Rajan et al 1990, Eggermont and Mossop 1998, Xu et al 1998). With this approach the majority of neurons can be classified as azimuth-sensitive. In this population, cells can have a contralateral (most prevalent), ipsilateral, central, multipeaked or omnidirectional response preference. The development of advanced signal processing techniques has permitted the use of virtual-space stimuli, rather than free-field stimuli, to characterize spatial receptive fields (Brugge et al 1994). The shapes of receptive fields derived in virtual space agree closely with those obtained in free-field studies. A major benefit of the virtual-space approach, however, is that receptive fields can be determined in both azimuth and elevation, and with high spatial resolution (<5°).

Our experimental approach depends on first knowing the relationship between spectral transformation and sound-source direction for the ear of the cat. The technique originally used for the free-field measurements has been described in detail in a previous paper from our laboratory (Musicant et al 1990). Simulating these transformed sounds as a function of sound-source direction yields a virtual acoustic spherical space, as shown in figure 2. The virtual sound source can be any waveform (e.g. wide- and narrow-band transients or steady-state noise, tones, speech, animal vocalizations) chosen to best suit the experimental protocol and the response requirements of the neuron under study. Spatial receptive fields are obtained by presenting sounds from random directions on the virtual sphere at various sound intensity levels.

We study the spatial receptive fields of auditory cortical neurons in cats under general anaesthesia, following well established protocols (Brugge et al 1994, Reale et al 1996, Jenison et al 1998, 2001a). Tone-burst stimuli delivered monaurally or binaurally are used to estimate the best frequency (BF) of neurons isolated along a given electrode penetration, as described previously (Brugge et al 1994). Prior to single-unit recording we always derive a partial BF map of the primary auditory (AI) cortical field. With such a map in hand, we are able to estimate the locations of adjacent belt areas and direct electrode penetrations into acoustically responsive areas.

[Figure 2. (a) Spherical auditory space synthesized using virtual-space signal processing. The cat's nose points to 0° azimuth, 0° elevation.]

2.1. Space–intensity receptive fields

The receptive field functions rf(θ, φ, η) described in this paper were constructed to depend on three acoustic parameters: azimuth θ, elevation φ and intensity η. Our prior work on the functional approximation of spatial receptive fields used spherical basis functions (Jenison et al 1998, 2001a) with free parameters $w_{ij}$, $\kappa_{ij}$, $\beta_{ij}$ and $\alpha_{ij}$ for fitting the basis functions. The free parameters serve only to approximate the receptive field mathematically: α and β specify the placement of each basis function on the sphere in radians, κ specifies its width and w its weight.
The current extension of this approximation adds an exponential dependence on the intensity level η of the sound source, together with a corresponding free fitting parameter ξ:

$$\mathrm{rf}_i(\theta, \phi, \eta) = \sum_{j=1}^{M} \left[ w_{ij} \exp\left\{ \log(\eta)\, \kappa_{ij} \left( \sin\phi \, \sin\beta_{ij} \cos(\theta - \alpha_{ij}) + \cos\phi \, \cos\beta_{ij} \right) \right\} + \exp\{\xi_{ij}\eta\} \right] \tag{1}$$

Figure 3 illustrates space–intensity receptive fields for three modelled units, using a quartic-authalic map projection of first-spike latency (colour coded). The empirically measured first-spike latency, averaged across all effective directions, and its standard deviation (full blue line) both increase with decreasing intensity level. These empirically measured standard deviations contain both the unsystematic and the systematic variance of the response. The modelled results (full red line) capture the increase in first-spike latency, averaged across directions, as intensity decreases.

[Figure 3. Auditory space–intensity receptive fields for three units recorded using virtual-space techniques in primary auditory cortex (panel labels: High Intensity to Low Intensity; colour scale from Short Latency to Long Latency; Measured and Modelled; axis: Latency (ms)). The spherical projections in the left panels reflect the change in spatial sensitivity as sound intensity decreases. The cat's nose points to the centre of the projection at (0, 0); the left edge of the map corresponds to −180° and the right edge to +180°. The panel to the right of the spherical projections shows the mean and standard deviation of first-spike latency, collapsed across all sound directions, for each intensity level (dBA). The blue line corresponds to the measured recordings and the red line to the modelled receptive fields. The standard deviation lines for the modelled conditions are shorter because they reflect only the systematic variability due to predicted direction selectivity at each intensity level. The measured standard deviations reflect both systematic and unsystematic (noise) variance.]

The nonlinear dependence on η was introduced to modulate the width parameter κ of the spherical basis functions, allowing the shape of the receptive field to depend on sound intensity. Similarly, the nonlinear dependence of mean latency on intensity is provided by the final term $\exp\{\xi_{ij}\eta\}$ in equation (1). Constrained optimization techniques were used to fit the parameters $w_{ij}$, $\kappa_{ij}$, $\beta_{ij}$, $\alpha_{ij}$ and $\xi_{ij}$ of the M basis functions defined by equation (1) to the neural response of interest, which in this case was response latency. Details of the approximation techniques can be found in Jenison et al (1998, 2001a). The linear combination of spherical basis functions effectively performs spatial low-pass filtering of the observed data, although the placement and width parameters allow for varying degrees of smoothing (Jenison et al 1998).

The reliability of the receptive field function (equation (1)) in estimating the systematic component of response variability is limited by the reliability of the fitting process itself. There is no true solution of the receptive field function for a particular neuron, given a finite input set consisting of measured response latencies together with their corresponding sound-source directions and intensity levels. A bootstrap of the receptive field function was therefore used to investigate the standard error of receptive field estimates for two typical AI neurons. The input sets for these neurons comprised 3900 and 3450 measured response latencies, respectively.
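As a rough sketch (not the authors' fitting code), equation (1) can be evaluated for a single unit as below. The function name `rf`, the tuple layout `(w, kappa, beta, alpha, xi)` and all parameter values are hypothetical. The angles enter exactly as written in equation (1), where φ and β appear in the form of polar angles, and η must be positive because log(η) is taken. Fitting the parameters by constrained optimization is not shown.

```python
import math


def rf(theta, phi, eta, basis):
    """Evaluate the receptive field function of equation (1) for one unit.

    theta, phi: direction in radians, used exactly as they appear in eq. (1);
    eta: intensity level (must be positive, because log(eta) is taken);
    basis: list of (w, kappa, beta, alpha, xi) tuples, one per basis function j.
    """
    total = 0.0
    for w, kappa, beta, alpha, xi in basis:
        # Cosine of the angle between direction (theta, phi) and the basis centre (alpha, beta).
        cos_angle = (math.sin(phi) * math.sin(beta) * math.cos(theta - alpha)
                     + math.cos(phi) * math.cos(beta))
        # log(eta) multiplies the width parameter kappa, so the kernel shape varies with intensity.
        spatial = w * math.exp(math.log(eta) * kappa * cos_angle)
        # The exp(xi * eta) term adds an intensity-dependent latency offset.
        total += spatial + math.exp(xi * eta)
    return total


# Hypothetical two-basis-function unit (parameter values invented for illustration only).
unit = [
    (8.0, -0.3, math.radians(90), math.radians(45), -0.02),
    (3.0, -0.2, math.radians(80), math.radians(-30), -0.03),
]

for eta in (20.0, 40.0, 60.0):
    row = [rf(math.radians(az), math.radians(90), eta, unit) for az in (-90, 0, 45, 90)]
    print(eta, [round(v, 2) for v in row])
```

Because intensity enters only through log(η)κ and exp(ξη), changing η in the loop alters both the spatial profile and the overall latency offset, which is the role the text assigns to these two terms.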
For each of these neurons, the resampling and receptive field estimation process was repeated 40 times, as prescribed by Efron and Tibshirani (1993). The results for the two neurons are shown in figure 4. The left-hand column maps the averaged bootstrapped receptive fields for two intensity levels; the right-hand column maps the bootstrapped standard errors at each direction. In general, the regions with the shortest response latencies are also the regions with the smallest standard errors. These findings suggest that the function approximations to the receptive fields shown in figure 3 were indeed valid estimates of the systematic variability in response latency due both to the direction of the sound source and to its intensity level.

Several observations can be made regarding the behaviour of the receptive field as a function of direction and intensity. First, the units become more broadly tuned as the intensity of the sound source increases. Second, the average latency, collapsed across directions, decreases as sound-source intensity increases, as does the variance of the first-spike latency. Finally, the receptive fields are broadly tuned in general, an observation consistent with previous investigations, but individual receptive fields are unique in their shape and in their evolution as a function of intensity. Given this degree of ambiguity, the question is: how well can an ensemble of these units decode sound direction using first-spike latency alone?

3. Decoding first-spike latency using likelihoods

3.1. First-spike latency

Response latency has recently been shown to carry a significant proportion of the information about the physical stimulus, in addition to that carried by spike count (Heller et al 1995, Wiener and Richmond 1999, Petersen et al 2001). A common issue with the use of latency as a neural code for sound direction is the apparent lack of a temporal marker for the onset of the stimulus.
This problem is not specific to directional coding in the auditory system; it also affects decoding strategies that have been proposed for visual cortex (Gawne et al 1996). The obvious resolution is an internally available global time marker, such as a collective oscillatory pattern of activity (Hopfield 1995). An alternative resolution is that the latencies of neurons in an ensemble could be decoded relative to one another rather than as absolute latencies (Heil 1997, Eggermont and Mossop 1998, Furukawa et al 2000, Jenison 2001). We have previously described a formal method for decoding sound location from a population of cortical neurons in field AI of the cat (figure 1), based on maximum likelihood estimation from absolute first-spike latencies (Jenison 1998). An extension of this approach is to replace the unknown stimulus onset by the first observed spike in an ensemble cortical population (Jenison 2001).

[Figure 4. Bootstrapped auditory space receptive field functions based on response latency from two AI neurons (A and B). The intensity in dBA is shown above each row (A: 40 and 60 dBA; B: 30 and 60 dBA). Left column: map projection of mean response latency obtained from 40 invocations of the spherical modelling process. Right column: map projection of the standard error of the modelling process. Colour bar annotations below each column denote response latency and standard error limits, respectively.]

[Figure 5. Schematic of the responses (first-spike latencies, on a 10–14 ms axis following stimulus onset; example rows labelled Neuron #23, #3 and #15) from a population of neurons to a sound in space. γ is the common latency across the ensemble of neurons.]
Under this scenario, the observer measures the subsequent evoked first spikes within the ensemble relative to the first poststimulus neural event in the ensemble (figure 5 sketches the general idea). Although still based on parametric timing, this approach is related to the statistically nonparametric rank-ordered decoding described by Gautrais and Thorpe (1998) and reviewed by Thorpe et al (2001). Using a relative rather than an absolute referent implies that all of the ensemble's neural events are shifted in time by some unknown common value relative to the physical onset of the stimulus. The first-spike latency $x_i$ of the $i$th neuron in the ensemble is measured relative to the first poststimulus event evoked by any neuron in the ensemble. Any member of the ensemble could produce this first spike; by definition, the neuron with the shortest absolute latency is assigned the value 0. The common latency γ across the ensemble is the parameter that 'binds' the ensemble responses to the stimulus onset. The statistical model of the relative latency $x_i$ can be expressed in terms of the $i$th receptive field function of a parameter vector Θ, the common latency γ and an error term $\varepsilon_i$:

$$x_i = \mathrm{rf}_i(\Theta) - \gamma + \varepsilon_i,$$

where the latency noise term $\varepsilon_i$ is assumed to follow some parametric probability density. Jenison (2001) showed that γ could be effectively eliminated from the likelihood function by replacing it with its maximum likelihood estimate at fixed values of Θ. This approach is referred to as profiling out a nuisance parameter, and the resulting likelihood function is commonly called the profile likelihood (see Murphy and Van der Vaart 2000 for a review).
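The profiling step can be illustrated with a simplified model. The sketch below assumes independent Gaussian noise with a known standard deviation (in the spirit of the Gaussian binding model of Jenison (2001), not the IG model introduced next), eight hypothetical cosine latency tuning curves and noise-free data; `toy_rf`, `PREFS` and `SIGMA` are invented for illustration. Under this assumption the maximum likelihood estimate of γ at fixed Θ is simply the mean of $\mathrm{rf}_i(\Theta) - x_i$, so γ can be replaced by that value before searching over azimuth.

```python
import math

# Hypothetical preferred azimuths (degrees) for an 8-unit toy ensemble.
PREFS = [-135, -90, -45, 0, 45, 90, 135, 180]
SIGMA = 0.5  # assumed known noise standard deviation (ms)


def toy_rf(theta_deg, pref_deg):
    """Hypothetical latency tuning curve (ms): shortest latency at the preferred azimuth."""
    return 12.0 - 1.5 * math.cos(math.radians(theta_deg - pref_deg))


def relative_latencies(absolute):
    """Re-reference latencies to the first spike in the ensemble (earliest becomes 0)."""
    first = min(absolute)
    return [t - first for t in absolute]


def profile_loglik(theta_deg, x, sigma=SIGMA):
    """Gaussian log-likelihood of theta with the common latency gamma profiled out.

    Model: x_i = rf_i(theta) - gamma + eps_i with iid eps_i ~ N(0, sigma^2).
    At fixed theta the MLE of gamma is the mean of rf_i(theta) - x_i.
    Returns (profile log-likelihood, gamma_hat).
    """
    preds = [toy_rf(theta_deg, p) for p in PREFS]
    gamma_hat = sum(r - xi for r, xi in zip(preds, x)) / len(x)
    sse = sum((xi - (r - gamma_hat)) ** 2 for r, xi in zip(preds, x))
    n = len(x)
    loglik = -0.5 * n * math.log(2.0 * math.pi * sigma**2) - sse / (2.0 * sigma**2)
    return loglik, gamma_hat


true_theta = 40
absolute = [toy_rf(true_theta, p) for p in PREFS]  # noise-free absolute latencies
x = relative_latencies(absolute)

scores = {th: profile_loglik(th, x)[0] for th in range(-179, 181)}
theta_hat = max(scores, key=scores.get)
_, gamma_hat = profile_loglik(theta_hat, x)
print(theta_hat, round(gamma_hat, 3), round(min(absolute), 3))
```

In this noise-free toy case the profiled estimate of γ equals the earliest absolute latency, which is exactly the offset removed when latencies are re-referenced to the first spike in the ensemble.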
This illustrates how formal likelihood methods can suggest new decoding strategies that might not have been obvious without these analytical tools.

3.2. Defining the proper probability density

Generalized linear models (GLIM) have served to unify the various classical inferential statistical procedures since their introduction by Nelder and Wedderburn (1972). The GLIM approach has also spurred the development of alternatives to the multivariate normal distribution. One example, the so-called dispersion model developed by Jorgensen and colleagues (Jorgensen 1983, 1987), represents a class of probability densities that has implications for spike train models. Specifically, we consider a subset known as reproductive exponential dispersion models or, as Jorgensen called them, the Tweedie densities (Tweedie 1947, 1957). Tweedie densities are characterized by an index p, where $E[x] = \mu$ and $\mathrm{var}[x] = \mu^p/\lambda$. The indices p = 0, 1, 2 and 3 correspond to the normal, Poisson, gamma and inverse Gaussian (IG) densities, respectively (Jorgensen 1987, 1999). Jorgensen's original paper defined the power variance function for multivariate densities only under the assumption of independent dimensions. The normal and Poisson densities are well known in the neuroscience community; the Poisson and its variants have been used extensively as point process and rate models. Less familiar Tweedie densities are the IG and gamma, which have been employed to model inter-spike intervals (Tuckwell 1988, Levine 1991, Iyengar and Liao 1997). Most recently, Barbieri et al (2001) have modelled spike trains using these densities to address deficiencies in their earlier Poisson models (Brown et al 1998). The IG distribution has a history dating back to 1915, when Schrödinger presented derivations of the first-passage-time density of Brownian motion with drift (Chhikara and Folks 1989, Seshadri 1999).
Tweedie (1941) coined the term 'inverse Gaussian' based on his observation that the cumulant generating function of the IG is the inverse of the cumulant generating function of the Gaussian. The IG density, with the linked receptive field function, is

$$p_{IG}(x_i \mid \theta, \phi, \eta) = \sqrt{\frac{\lambda}{2\pi [x_i + \gamma]^3}} \, \exp\left\{ \frac{-\lambda [x_i + \gamma - \mathrm{rf}_i(\theta, \phi, \eta)]^2}{2 [x_i + \gamma] \, \mathrm{rf}_i(\theta, \phi, \eta)^2} \right\}. \tag{2}$$

The mean corresponds to the receptive field $\mathrm{rf}_i(\theta, \phi, \eta)$ and the variance is $\mathrm{rf}_i(\theta, \phi, \eta)^3/\lambda$. The parameter λ is a free parameter of the density function, and the variance depends on λ. If λ can reasonably be fixed over an ensemble of neurons, then the variance depends only on the mean. The parameter γ has typically been used as an additional degree of freedom for improving the fit of univariate models. However, it can also serve as a common ensemble latency, similar to the manner in which it was employed as a binding parameter for the multivariate Gaussian in Jenison (2001).

Figure 6 shows examples of first-spike latency histograms (50 trials) for two different sound directions from a single unit in field AI. These single-direction examples were obtained from the receptive field data shown in figure 3. Overlaid on each histogram is the corresponding IG density using the predicted mean, with γ set to zero and λ set to 15 000, a value chosen as a reasonable approximation for the entire ensemble data set.

[Figure 6. IG approximations to first-spike latency from 50 trials for two different spatial locations (63° and −72°). The histogram represents the probability of observing a particular latency. The full curve corresponds to the IG probability density with fixed λ = 15 000, such that the variance depends only on the mean rf(θ). The predicted values (means) as a function of direction are rf(63°) = 11.2 and rf(−72°) = 12.8.]
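Equation (2) and the variance relation var = rf³/λ can be checked numerically. The sketch below is a minimal, standard-library version: `ig_logpdf` evaluates the logarithm of equation (2), and `ig_sample` draws IG variates with the transformation method of Michael, Schucany and Haas (1976), a sampler not discussed in the text. The mean of 11.2 and λ = 15 000 are taken from the figure 6 example; everything else is illustrative.

```python
import math
import random


def ig_logpdf(x, mean, lam, gamma=0.0):
    """Log of equation (2): the IG density of x + gamma with mean `mean` and shape `lam`."""
    t = x + gamma
    if t <= 0.0:
        return float("-inf")
    return (0.5 * math.log(lam / (2.0 * math.pi * t**3))
            - lam * (t - mean) ** 2 / (2.0 * t * mean**2))


def ig_sample(mean, lam, rng):
    """One IG draw via the transformation method of Michael, Schucany and Haas (1976)."""
    y = rng.gauss(0.0, 1.0) ** 2
    x = (mean + mean**2 * y / (2.0 * lam)
         - mean / (2.0 * lam) * math.sqrt(4.0 * mean * lam * y + mean**2 * y**2))
    # Choose between the two roots with probability mean / (mean + x).
    return x if rng.random() <= mean / (mean + x) else mean**2 / x


rng = random.Random(1)
mean, lam = 11.2, 15000.0  # rf(63 deg) and the ensemble lambda from the figure 6 example
draws = [ig_sample(mean, lam, rng) for _ in range(20000)]
m = sum(draws) / len(draws)
v = sum((d - m) ** 2 for d in draws) / (len(draws) - 1)
print(f"sample mean {m:.3f} (model {mean}), "
      f"sample variance {v:.4f} (model mean^3/lam = {mean**3 / lam:.4f})")

# Trapezoidal check that the density integrates to about 1 over +/-10 standard deviations.
sd = math.sqrt(mean**3 / lam)
grid = [mean - 10 * sd + k * (20 * sd / 4000) for k in range(4001)]
dens = [math.exp(ig_logpdf(g, mean, lam)) for g in grid]
area = sum((dens[k] + dens[k + 1]) / 2 * (grid[k + 1] - grid[k]) for k in range(4000))
print(f"integral over +/-10 sd: {area:.6f}")
```

With λ this large the IG is close to symmetric, but its variance still grows with the cube of the mean, which is why a single ensemble-wide λ ties first-spike variability to mean latency.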
The IG density appears to capture well the dependence of first-spike latency variance on mean latency. Further goodness-of-fit analyses are certainly necessary to assess whether other members of the Tweedie family might afford better models.

3.3. Log-likelihood functions

Likelihood functions have been employed in point process analyses by Ogata and Akaike (1982) and Brillinger (1988), and most recently by Kass and Ventura (2001) and Brown et al (2001). Martignon et al (2000) have also examined ensemble interaction structure using both likelihood and Bayesian techniques. A parametric likelihood function can be constructed straightforwardly from the marginal probability density function of each neuron in the population, under the assumption that the unsystematic variability (noise) is independent:

$$L(\theta, \phi, \eta) = p(\mathbf{x} \mid \theta, \phi, \eta) = \prod_{i=1}^{N} p(x_i \mid \theta, \phi, \eta). \tag{3}$$

The log of the likelihood function based on the IG density (equation (2)) is

$$\log L(\theta, \phi, \eta) = \frac{N}{2} \log\frac{\lambda}{2\pi} - \frac{3}{2} \sum_{i=1}^{N} \log[x_i + \gamma] - \lambda \sum_{i=1}^{N} \frac{[x_i + \gamma - \mathrm{rf}_i(\theta, \phi, \eta)]^2}{2 [x_i + \gamma] \, \mathrm{rf}_i(\theta, \phi, \eta)^2}. \tag{4}$$

An example of the log-likelihood surface defined by equation (4), with an ensemble size N = 26 and fixed elevation φ, is shown in figure 7. The full log-likelihood function provides more than just the opportunity to estimate the true values of the parameters: the differential geometry of the surface is of interest as well.

[Figure 7. Log-likelihood functions (azimuth in degrees by intensity in dBA, colour scale from low to high likelihood) for a sound source at 45° azimuth, 0° elevation at two intensities: (A) 35 dBA and (B) 15 dBA. Black arrows denote maximum likelihood estimates given the population of first-spike latencies (ms) shown in the insets.]
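A brute-force version of a figure 7 style analysis is sketched below under stated assumptions: eight hypothetical units with invented latency functions `toy_rf` (latency shortens with intensity, and tuning depth shrinks as intensity rises), a fixed λ, γ = 0, elevation held fixed, and noise-free "observed" latencies. The sketch evaluates the ensemble log-likelihood as a sum of per-unit IG log-densities (equation (3) on the log scale), checks that sum against the closed form of equation (4), and locates the maximum on an azimuth-by-intensity grid.

```python
import math

LAM = 15000.0
PREFS = [-120, -60, -30, 0, 20, 45, 70, 100]  # hypothetical preferred azimuths (deg)


def toy_rf(i, theta_deg, eta_db):
    """Hypothetical space-intensity latency function (ms) for unit i."""
    depth = 3.0 * math.exp(-0.02 * eta_db)  # tuning depth shrinks as intensity rises
    return (10.0 + 15.0 * math.exp(-0.05 * eta_db)
            - depth * math.cos(math.radians(theta_deg - PREFS[i])))


def ig_logpdf(x, mean, lam, gamma=0.0):
    """Log of the IG density of equation (2)."""
    t = x + gamma
    return (0.5 * math.log(lam / (2.0 * math.pi * t**3))
            - lam * (t - mean) ** 2 / (2.0 * t * mean**2))


def loglik_sum(x, theta_deg, eta_db, lam=LAM, gamma=0.0):
    """Equation (3) on the log scale: sum of independent per-unit IG log-densities."""
    return sum(ig_logpdf(xi, toy_rf(i, theta_deg, eta_db), lam, gamma)
               for i, xi in enumerate(x))


def loglik_eq4(x, theta_deg, eta_db, lam=LAM, gamma=0.0):
    """Closed form of equation (4); should equal loglik_sum."""
    n = len(x)
    t = [xi + gamma for xi in x]
    r = [toy_rf(i, theta_deg, eta_db) for i in range(n)]
    return (0.5 * n * math.log(lam / (2.0 * math.pi))
            - 1.5 * sum(math.log(ti) for ti in t)
            - lam * sum((ti - ri) ** 2 / (2.0 * ti * ri**2) for ti, ri in zip(t, r)))


# Noise-free "observed" latencies for a source at 45 deg azimuth and 35 dB (gamma = 0).
x_obs = [toy_rf(i, 45, 35) for i in range(len(PREFS))]

# Brute-force search over an azimuth x intensity grid.
best = max(((th, eta) for th in range(-179, 181) for eta in range(10, 81)),
           key=lambda p: loglik_sum(x_obs, *p))
print("grid MLE (azimuth, intensity):", best)
print("eq. (3) vs eq. (4):", loglik_sum(x_obs, 30, 50), loglik_eq4(x_obs, 30, 50))
```

With real, noisy latencies the maximum would shift away from the true source, and the shape of the surface around it, rather than the grid maximum alone, carries the information discussed next.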
In this example the mode of the log-likelihood surface corresponds to the maximum likelihood estimate of θ and η (azimuth and intensity). Figure 7(A) shows the resulting log-likelihood surface for a sound source at 45° azimuth, 0° elevation, presented at 35 dB down from a reference intensity level (dBA). The first spikes from an ensemble of 26 AI units² following the onset of the sound stimulus (in the form shown in figure 5) are shown in the inset panel to the left of the log-likelihood surface. This surface represents the log-likelihood for sound direction (azimuth) and intensity given the ensemble response³. The maximum of the log-likelihood function is easily identified in this example and signals the maximum likelihood estimates [47°, 38 dBA]. The curvature at the maximum of the log-likelihood surface reflects the observed Fisher information in the ensemble for estimating the acoustic parameters of this particular sound source. For the more intense sound source (figure 7(B)), the curvature about the maximum has decreased somewhat, reflecting a decrease in observed Fisher information. In this case the maximum likelihood estimates are [49°, 14 dBA]. The existence of a clear maximum, and the well-structured shape of the log-likelihood surface, suggest that information about both parameters is conveyed by the population of first-spike latencies. The stochastic pattern of first-spike latencies shown in each inset therefore informs the observer about the direction of the sound source and, potentially of equal interest, its intensity.

4. Fisher information

We, as well as others, have investigated the consequences of broad receptive fields for population coding using Fisher information and the Cramér–Rao lower bound (CRLB), under the assumption of independent residual noise (Paradiso 1988, Seung and Sompolinsky 1993, Jenison 1998) and of correlated residual noise (Abbott and Dayan 1999, Gruner and Johnson 1999, Jenison 2000, Sompolinsky et al 2001).
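The link between curvature and observed Fisher information can be seen in a one-parameter sketch. Assuming, for illustration only, a single unit whose first-spike latencies follow an IG density with known λ and unknown mean μ, the observed information is minus the second derivative of the log-likelihood at its maximum. The latency values and the function names below are hypothetical. For this model the maximum likelihood estimate of μ is the sample mean, and the curvature there works out to Nλ/μ̂³, which gives a check on the finite-difference estimate; the last lines compare the exact log-likelihood with its second-order (quadratic) approximation.

```python
import math


def ig_loglik(mu, xs, lam):
    """IG log-likelihood of the mean mu for latencies xs, with the shape lam treated as known."""
    return sum(0.5 * math.log(lam / (2.0 * math.pi * x**3))
               - lam * (x - mu) ** 2 / (2.0 * x * mu**2) for x in xs)


def observed_information(loglik, mle, h=1e-3):
    """Observed Fisher information: minus the second derivative of loglik at the MLE,
    estimated with a central finite difference."""
    return -(loglik(mle + h) - 2.0 * loglik(mle) + loglik(mle - h)) / h**2


# Hypothetical first-spike latencies (ms) from repeated presentations of one sound.
latencies = [11.0, 11.4, 10.9, 11.6, 11.2, 11.3, 10.8, 11.5]
lam = 15000.0

mu_hat = sum(latencies) / len(latencies)  # with lam known, the IG MLE of the mean is the sample mean
info = observed_information(lambda m: ig_loglik(m, latencies, lam), mu_hat)
print(f"mu_hat = {mu_hat:.4f} ms, observed information = {info:.2f}, "
      f"N*lam/mu_hat**3 = {len(latencies) * lam / mu_hat**3:.2f}")
print(f"approximate standard error of mu_hat = {1.0 / math.sqrt(info):.3f} ms")


def quadratic_approx(mu):
    """Second-order Taylor approximation of the log-likelihood about its maximum."""
    return ig_loglik(mu_hat, latencies, lam) - 0.5 * info * (mu - mu_hat) ** 2


for mu in (mu_hat - 0.05, mu_hat + 0.05):
    print(f"mu = {mu:.4f}: exact {ig_loglik(mu, latencies, lam):.5f}, "
          f"quadratic {quadratic_approx(mu):.5f}")
```

The closer the quadratic tracks the exact curve, the more completely the pair (maximum likelihood estimate, curvature) summarizes the likelihood function.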
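Our prior work has shown that, under the assumption of independence, even very broad and nonuniform spatial receptive fields in auditory cortex can support the localization acuity observed psychophysically with as few as ten cells in the population (Jenison 1998). The CRLB is a lower bound on the variance (and hence the standard error) of any unbiased estimator, and is derived from the Fisher information with respect to a family of parametric probability distributions. For a single parameter, the CRLB is simply the reciprocal of the Fisher information, which matches intuition: as Fisher information increases, we expect the estimation standard error to diminish. Although there may not exist an algorithm that achieves the CRLB, a maximum likelihood estimator approaches it asymptotically.

² The ensemble is constructed from 13 unique AI receptive field functions (predictions), with a mirrored set of functions reflected about the midline, to produce a total of 26. The residual noise is not reflected and is therefore considered here to be independent. The symmetry is forced in order to approximate the contributions from the contralateral hemisphere.

³ The full log-likelihood function is actually a function of three acoustic parameters: azimuth, elevation and intensity. For purposes of visualization, we assume for this example that elevation is known (0°).

The Fisher information matrix for an ensemble of space–intensity receptive fields and parameter vector Θ = {θ, φ, η} is defined as

$$J = \begin{pmatrix}
E\left[\left(\frac{\partial \ell}{\partial \theta}\right)^2\right] & E\left[\frac{\partial \ell}{\partial \theta}\frac{\partial \ell}{\partial \phi}\right] & E\left[\frac{\partial \ell}{\partial \theta}\frac{\partial \ell}{\partial \eta}\right] \\
E\left[\frac{\partial \ell}{\partial \phi}\frac{\partial \ell}{\partial \theta}\right] & E\left[\left(\frac{\partial \ell}{\partial \phi}\right)^2\right] & E\left[\frac{\partial \ell}{\partial \phi}\frac{\partial \ell}{\partial \eta}\right] \\
E\left[\frac{\partial \ell}{\partial \eta}\frac{\partial \ell}{\partial \theta}\right] & E\left[\frac{\partial \ell}{\partial \eta}\frac{\partial \ell}{\partial \phi}\right] & E\left[\left(\frac{\partial \ell}{\partial \eta}\right)^2\right]
\end{pmatrix} \tag{5}$$

where the log-likelihood is denoted by ℓ = log L(θ, φ, η), and

$$K = \begin{pmatrix}
\sigma_{\hat\theta}^2 & \rho_{\hat\theta,\hat\phi}\,\sigma_{\hat\theta}\sigma_{\hat\phi} & \rho_{\hat\theta,\hat\eta}\,\sigma_{\hat\theta}\sigma_{\hat\eta} \\
\rho_{\hat\theta,\hat\phi}\,\sigma_{\hat\theta}\sigma_{\hat\phi} & \sigma_{\hat\phi}^2 & \rho_{\hat\phi,\hat\eta}\,\sigma_{\hat\phi}\sigma_{\hat\eta} \\
\rho_{\hat\theta,\hat\eta}\,\sigma_{\hat\theta}\sigma_{\hat\eta} & \rho_{\hat\phi,\hat\eta}\,\sigma_{\hat\phi}\sigma_{\hat\eta} & \sigma_{\hat\eta}^2
\end{pmatrix} \tag{6}$$

is the estimation covariance matrix for the azimuth, elevation and intensity estimates $\hat\theta$, $\hat\phi$ and $\hat\eta$.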
The matrix inequality

$$K \succeq J^{-1}$$

defines the relationship between the estimation covariance matrix and the inverse of the Fisher information matrix. The inequality means that the matrix $K - J^{-1}$ is nonnegative definite; it follows that the diagonal of $J^{-1}$ gives the Cramér–Rao lower bounds on the variability of $\hat\theta$, $\hat\phi$ and $\hat\eta$ (Blahut 1987, Cover and Thomas 1991). Under some regularity conditions, the maximum likelihood estimate of the parameters is asymptotically distributed as a multivariate normal with mean vector Θ and covariance matrix $J^{-1}$ (Lehmann and Casella 1998). Under the assumption of the multivariate IG probability density with independent noise, the expected value of the azimuth element of the Fisher information matrix is

$$E\left[\left(\frac{\partial \log L(\theta, \phi, \eta)}{\partial \theta}\right)^2\right] = \lambda \sum_{i=1}^{N} \frac{[\partial \mathrm{rf}_i(\theta, \phi, \eta)/\partial\theta]^2}{\mathrm{rf}_i(\theta, \phi, \eta)^3} \tag{7}$$

where in the present case N = 26 units in the ensemble (see the appendix). Figure 8 illustrates only two of the nine cells of the K matrix for a maximum likelihood estimator: the square root of the upper left cell, $\sigma_{\hat\theta}$, and the correlation coefficient portion of the upper right cell, $\rho_{\hat\theta,\hat\eta}$, as functions of azimuth and intensity. The quantity $\sigma_{\hat\theta}$ is the lower bound on the standard error of the azimuth estimate given the ensemble of cortical responses, and can therefore be interpreted as an analogue of a lower bound on acuity. There are several interesting aspects of this error surface. First, there are several bands of elevated standard error, particularly around azimuths⁴ of +50° and −50°. These azimuths correspond to regions of maximum sound pressure amplification by the horn-like cat pinnae (Musicant et al 1990), where the majority of neurons tend to exhibit their shortest first-spike latencies at near-threshold intensities (Brugge et al 1996). However, these regions of minimal response latency are also regions with near-zero response gradients $\partial \mathrm{rf}_i(\theta, \phi, \eta)/\partial\theta$.
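The calculation behind figure 8 can be sketched for two parameters. The sketch assumes that elevation is known (as for figure 7), independent IG noise with a fixed λ, and eight hypothetical units with irregularly spaced preferred azimuths; `toy_rf`, `PREFS` and all numbers are invented. Under these assumptions each entry of the Fisher information matrix is λ Σ (∂rf_i/∂a)(∂rf_i/∂b)/rf_i³, and the (θ, θ) entry is equation (7). Inverting the 2×2 matrix gives the bounds on $\sigma_{\hat\theta}$ and $\sigma_{\hat\eta}$ and the correlation $\rho_{\hat\theta,\hat\eta}$. Gradients are taken by central differences with azimuth in degrees, so the azimuth bound is in degrees.

```python
import math

LAM = 15000.0
PREFS = [-120, -60, -30, 0, 20, 45, 70, 100]  # hypothetical preferred azimuths (deg)


def toy_rf(i, theta_deg, eta_db):
    """Hypothetical space-intensity latency function (ms) for unit i."""
    depth = 3.0 * math.exp(-0.02 * eta_db)  # tuning depth shrinks as intensity rises
    return (10.0 + 15.0 * math.exp(-0.05 * eta_db)
            - depth * math.cos(math.radians(theta_deg - PREFS[i])))


def gradient(i, theta_deg, eta_db, h=1e-4):
    """Central-difference gradient of rf_i with respect to (azimuth in deg, intensity in dB)."""
    d_theta = (toy_rf(i, theta_deg + h, eta_db) - toy_rf(i, theta_deg - h, eta_db)) / (2 * h)
    d_eta = (toy_rf(i, theta_deg, eta_db + h) - toy_rf(i, theta_deg, eta_db - h)) / (2 * h)
    return (d_theta, d_eta)


def fisher_matrix(theta_deg, eta_db, lam=LAM):
    """Expected Fisher information for (theta, eta) with independent IG noise and fixed lam.

    J[a][b] = lam * sum_i (d rf_i / d a)(d rf_i / d b) / rf_i**3; the (theta, theta)
    entry is equation (7).
    """
    j = [[0.0, 0.0], [0.0, 0.0]]
    for i in range(len(PREFS)):
        g = gradient(i, theta_deg, eta_db)
        weight = lam / toy_rf(i, theta_deg, eta_db) ** 3
        for a in range(2):
            for b in range(2):
                j[a][b] += weight * g[a] * g[b]
    return j


def crlb(j):
    """Invert the 2x2 information matrix; return (sigma_theta, sigma_eta, rho_theta_eta)."""
    det = j[0][0] * j[1][1] - j[0][1] * j[1][0]
    k = [[j[1][1] / det, -j[0][1] / det],
         [-j[1][0] / det, j[0][0] / det]]
    s_theta, s_eta = math.sqrt(k[0][0]), math.sqrt(k[1][1])
    return s_theta, s_eta, k[0][1] / (s_theta * s_eta)


for az in (-80, -50, -20, 10, 40, 70):
    s_theta, s_eta, rho = crlb(fisher_matrix(az, 35))
    print(f"azimuth {az:4d} deg: sigma_theta >= {s_theta:.2f} deg, "
          f"sigma_eta >= {s_eta:.2f} dB, rho = {rho:+.2f}")
```

Because the azimuth bound uses the full inverse, any cross-information between azimuth and intensity inflates it relative to $1/\sqrt{J_{\theta\theta}}$, the bound that would apply if intensity were known.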
Consequently, at these directions the expected Fisher information deflates, with a corresponding increase in the standard error. Second, there is a general trend for regions of minimal standard error to occur at moderate intensity levels; by comparison, the standard error is increased at higher and lower intensities. Figure 8(B) illustrates this non-monotonic pattern with cross-sections across intensity at three azimuthal directions. The explanation for the decrease in acuity at lower intensities is that, as intensity decreases, first-spike latency becomes less precise. The opposite is true at higher intensities; however, the magnitude of the gradients, $\partial rf_i(\theta,\phi,\eta)/\partial\theta$, decreases at high intensities, resulting in a relative deflation of expected Fisher information and a corresponding increase in the standard error. The reason is revealed in figure 3, where tuning is shown to broaden with increasing intensity, along with a corresponding decrease in receptive field gradient at particular spatial directions. In summary, there is a trade-off between the lower slopes of first-spike latency gradients at high intensity and the higher variability of first-spike latency at low intensity, both of which are reflected in the Fisher information matrix.

⁴ The symmetry is the result of mirroring the receptive field functions as described previously.

Figure 8. (A) Standard errors of the estimates $\sigma_{\hat\theta}$ (deg) as a function of sound direction (azimuth, deg) and intensity (dBA), from low to high intensity. Dotted vertical line: cross-section shown in (B). (B) Standard errors of the estimates $\sigma_{\hat\theta}$ as a function of intensity (dBA) at three azimuths: full line −48°, broken line −44°, dotted line −40°. (C) Correlation coefficients of the estimates $\rho_{\hat\theta,\hat\eta}$.
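As a rough numerical illustration of equations (5)–(7), the Python sketch below builds the expected Fisher information matrix for a toy ensemble and inverts it to obtain the bound on $\sigma_{\hat\theta}$ and the implied correlation $\rho_{\hat\theta,\hat\eta}$. Everything model-specific is an assumption made for illustration, not the authors' fitted AI receptive fields: the latency function `rf`, the preferred azimuths in `CENTRES` (13 values mirrored to 26 units, loosely echoing footnote 2), and the shared IG shape parameter `LAM`. Elevation is held fixed, as in the visualization example of footnote 3, so J here is 2 × 2 in azimuth and intensity. The off-diagonal cells follow from applying the appendix argument to each pair of parameters, J_jk = λ Σ_i (∂rf_i/∂p_j)(∂rf_i/∂p_k)/rf_i³; gradients are taken by central finite differences so that any smooth receptive field model could be substituted.

```python
import numpy as np

# Hypothetical ensemble: 13 preferred azimuths mirrored about the midline -> 26 units.
CENTRES = np.deg2rad(np.linspace(10.0, 130.0, 13))
CENTRES = np.concatenate([CENTRES, -CENTRES])
LAM = 500.0  # assumed IG shape parameter lambda (ms), shared by all units


def rf(theta, eta):
    """Hypothetical mean first-spike latency (ms) of every unit.

    theta: azimuth in radians; eta: intensity in dB. Latency is shortest at a
    unit's preferred azimuth and decreases as intensity increases.
    """
    return 10.0 + 30.0 * np.exp(-eta / 20.0) * (2.0 - np.cos(theta - CENTRES))


def fisher_matrix(theta, eta, h=1e-5):
    """Expected Fisher information for (azimuth, intensity), independent IG noise.

    J[j, k] = LAM * sum_i (d rf_i/d p_j) * (d rf_i/d p_k) / rf_i**3,
    with the gradients taken by central finite differences.
    """
    mu = rf(theta, eta)
    d_theta = (rf(theta + h, eta) - rf(theta - h, eta)) / (2.0 * h)
    d_eta = (rf(theta, eta + h) - rf(theta, eta - h)) / (2.0 * h)
    grads = np.stack([d_theta, d_eta])          # shape (2, N)
    return LAM * (grads / mu**3) @ grads.T      # shape (2, 2)


def crlb(theta, eta):
    """Bound on sigma_theta (deg) and the correlation rho_theta,eta read off J^-1."""
    j_inv = np.linalg.inv(fisher_matrix(theta, eta))  # K >= J^-1, so J^-1 is the bound
    sigma_theta = np.sqrt(j_inv[0, 0])
    rho = j_inv[0, 1] / np.sqrt(j_inv[0, 0] * j_inv[1, 1])
    return np.rad2deg(sigma_theta), rho


for az in (-48.0, 0.0, 45.0):
    for level in (20.0, 40.0, 60.0):
        s, r = crlb(np.deg2rad(az), level)
        print(f"azimuth {az:+.0f} deg, {level:.0f} dB: "
              f"sigma_theta >= {s:.2f} deg, rho = {r:+.2f}")
```

Because the toy receptive fields are arbitrary, the printed values should not be expected to reproduce figure 8; the sketch only shows how the bound and the correlation are read off $J^{-1}$. With the mirrored centres, the cross-information term vanishes at 0° azimuth, so ρ is zero there.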
The correlation coefficient of the estimates, $\rho_{\hat\theta,\hat\eta}$ (figure 8(C)), characterizes the degree of linear dependence expected to occur when $\hat\theta$ and $\hat\eta$ are jointly estimated by a maximum likelihood estimator. Through the matrix inverse, the corresponding cell in the K matrix depends on the degree of cross-information evident in the off-diagonal cells of the Fisher information matrix. If the off-diagonal cells of the Fisher information matrix were all zero, the K matrix would necessarily be diagonal. This condition, known as an orthogonal Fisher information matrix, would also imply that the parameters could simply be factored and treated as independent; that has not been the case in any of the analyses we have performed on our recorded AI units.

5. Parameters of interest

5.1. Profile likelihood

The profile likelihood function for parameters of interest is obtained by substituting the conditional maximum likelihood estimate of the so-called nuisance parameters into the constructed likelihood function (Murphy and Van der Vaart 2000). The standard profile likelihood function can be expressed simply as

$$ L_p(\theta,\phi) = L(\theta,\phi,\hat\eta_{\theta,\phi}) = \max_{\eta} L(\theta,\phi,\eta). $$

For example, we may be interested in the profile likelihood constructed when the sound intensity parameter η is eliminated. Although the profile likelihood is widely used for inference on the parameters of interest, it is well known that it can provide less than satisfactory inference and yield biased and/or inconsistent results. Adjustments to the profile likelihood, aimed at improved statistical inference on the parameters of interest, have generated substantial interest.
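A minimal sketch of the profile likelihood, again under hypothetical assumptions: a toy latency model `rf`, mirrored preferred azimuths `CENTRES`, an assumed IG shape `LAM`, independent IG noise, and elevation held fixed. One trial of latencies is simulated with NumPy's `Generator.wald`, which draws from the inverse Gaussian with the given mean and shape (its `scale` argument); the simulated values play the role of the shifted latencies [x_i + γ]. The nuisance parameter η is profiled out by maximizing the log-likelihood over a grid of intensities at each azimuth, which is a grid-search version of $L_p(\theta,\phi) = \max_\eta L(\theta,\phi,\eta)$.

```python
import numpy as np

rng = np.random.default_rng(0)
CENTRES = np.deg2rad(np.linspace(10.0, 130.0, 13))
CENTRES = np.concatenate([CENTRES, -CENTRES])   # hypothetical 26-unit ensemble
LAM = 500.0                                     # assumed IG shape parameter (ms)


def rf(theta, eta):
    """Hypothetical mean latency (ms); broadcasts over leading axes of theta/eta."""
    return 10.0 + 30.0 * np.exp(-eta / 20.0) * (2.0 - np.cos(theta - CENTRES))


def log_lik(x, theta, eta):
    """Independent-IG log-likelihood of latencies x (last axis = units)."""
    mu = rf(theta, eta)
    return np.sum(0.5 * np.log(LAM / (2.0 * np.pi * x**3))
                  - LAM * (x - mu) ** 2 / (2.0 * mu**2 * x), axis=-1)


# One simulated trial; Generator.wald(mean, scale) samples IG(mean, shape=scale).
x = rng.wald(rf(np.deg2rad(45.0), 35.0), LAM)

thetas = np.deg2rad(np.arange(-180.0, 181.0, 1.0))   # parameter of interest
etas = np.arange(10.0, 60.25, 0.5)                    # nuisance grid (dB)
surface = log_lik(x, thetas[:, None, None], etas[None, :, None])  # (theta, eta)

# Profile log-likelihood: maximise over the nuisance parameter at each azimuth.
profile = surface.max(axis=1)
eta_hat_given_theta = etas[surface.argmax(axis=1)]

best = profile.argmax()
print(f"profile MLE: azimuth {np.rad2deg(thetas[best]):.0f} deg, "
      f"intensity {eta_hat_given_theta[best]:.1f} dB")
```

A grid search is used only for transparency; a one-dimensional optimizer over η at each azimuth would serve equally well. Note that the profile keeps only the height of the likelihood at $\hat\eta_{\theta,\phi}$ and ignores how sharply it peaks in η, which is the shortcoming taken up by the integrated likelihood.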
The work of Cox and Reid (1987, 1992, 1993), McCullagh and Tibshirani (1990), Diciccio and Stern (1993, 1994), Barndorff-Nielsen (1994), Stern (1997) and Mukerjee and Reid (1999) on adjustments to the profile likelihood has led to significant progress in the unbiased removal of nuisance parameters from likelihood function constructions.

5.2. Integrated likelihood

The integrated likelihood addresses some of the issues raised by the profile likelihood approach, specifically where profile likelihood functions fail to capture the uncertainty about the maximum likelihood estimate of the nuisance parameter being eliminated. Profiling out a nuisance parameter by maximization can be misleading if the region of nuisance parameter maximization is atypical in particular regions of the whole likelihood surface (Berger et al 1999). Integrating out the nuisance parameter is reminiscent of Bayesian approaches (see Tierney et al 1989a, 1989b, Kass et al 1989, Diciccio et al 1997) and can be defined using a uniform prior $p(\eta)$ over a reasonable range of intensities (10–60 dBA):

$$ L_U(\theta,\phi) = \int L(\theta,\phi,\eta)\,p(\eta)\,d\eta. $$

Although this is a reasonable first choice, Berger et al (1999) have raised potential caveats regarding the use of uniform priors that remain to be investigated.

Figure 9. (A) The log-likelihood function shown in figure 7(A), expanded as a second-order Taylor series across intensity (axes: azimuth (deg) and intensity (dBA), shaded from low to high likelihood). (B) Integrated log-likelihood function after removing the intensity parameter, plotted against azimuth (deg). The maximum likelihood estimate is shown.

One analytical approach to approximating this integral is the Laplace approximation, which is
based on a second-order Taylor series expansion of the log-likelihood function about the maximum likelihood estimate $\hat\eta$:

$$
\int L(\theta,\phi,\eta)\,p(\eta)\,d\eta \approx L(\theta,\phi,\hat\eta)\,p(\hat\eta)\sqrt{\frac{2\pi}{-\partial^2 \log L(\theta,\phi,\hat\eta)/\partial\eta^2}}.
\tag{8}
$$

The quantity $-\partial^2 \log L(\theta,\phi,\hat\eta)/\partial\eta^2$ in the denominator is the observed Fisher information $I(\hat\eta_{\theta,\phi})$. This approximation is useful so long as a unique maximum exists, $I(\hat\eta_{\theta,\phi}) \neq 0$, and the log-likelihood function is approximately quadratic about the maximum (Pawitan 2001). Although the integral is implicitly taken over the whole real line, the approximation may be sufficiently accurate as long as the mass of the approximating function lies within the limits of integration (Goutis and Casella 1999). This result can be shown to be roughly equivalent to the adjusted profile likelihood described by Cox and Reid (1987). The importance of these analytical approaches is that they may offer new theoretical ways of thinking about perceptual invariance within the framework of cortical ensemble likelihood functions. For example, a population of neurons whose responses remain invariant for a particular parameter over different levels of intensity may reflect this process of intensity integration. The log-likelihood function for direction (azimuth) and intensity, shown in figure 7, can be expanded as a second-order Taylor series across intensity, conditioned on direction (figure 9(A)). The resulting surface reflects the fitting of a quadratic to the maximum of the log-likelihood function of intensity at each direction. This allows the intensity dimension to be integrated out using equation (8), yielding the so-called integrated log-likelihood function shown in figure 9(B). Following this transformation, the maximum of the integrated log-likelihood can be determined, and the point estimate used to locate the sound source. Furthermore, the curvature at the maximum conveys the amount of available observed information, which should more accurately account for the uncertainty in the intensity that has been marginalized.
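The sketch below compares the integrated log-likelihood computed by brute-force quadrature with the Laplace approximation of equation (8). It reuses a hypothetical toy ensemble (`rf`, `CENTRES` and `LAM` are all assumptions, not recorded AI units), assumes the uniform prior p(η) on 10–60 dB mentioned in the text, locates $\hat\eta$ by grid search at each azimuth, and estimates the observed information $I(\hat\eta_{\theta,\phi})$ with a central second difference. Where that estimate is not positive (for example when $\hat\eta$ sits on the edge of the grid), the Laplace value is left as NaN, since the approximation does not apply there.

```python
import numpy as np

rng = np.random.default_rng(0)
CENTRES = np.deg2rad(np.linspace(10.0, 130.0, 13))
CENTRES = np.concatenate([CENTRES, -CENTRES])   # hypothetical 26-unit ensemble
LAM = 500.0                                     # assumed IG shape parameter (ms)
ETA_LO, ETA_HI, STEP = 10.0, 60.0, 0.5          # uniform prior support and grid (dB)


def rf(theta, eta):
    """Hypothetical mean latency (ms); broadcasts over leading axes of theta/eta."""
    return 10.0 + 30.0 * np.exp(-eta / 20.0) * (2.0 - np.cos(theta - CENTRES))


def log_lik(x, theta, eta):
    """Independent-IG log-likelihood of latencies x (last axis = units)."""
    mu = rf(theta, eta)
    return np.sum(0.5 * np.log(LAM / (2.0 * np.pi * x**3))
                  - LAM * (x - mu) ** 2 / (2.0 * mu**2 * x), axis=-1)


x = rng.wald(rf(np.deg2rad(45.0), 35.0), LAM)     # one simulated trial
thetas = np.deg2rad(np.arange(-180.0, 181.0, 1.0))
etas = np.arange(ETA_LO, ETA_HI + STEP / 2, STEP)
log_prior = -np.log(ETA_HI - ETA_LO)              # log of the uniform density

# Reference: trapezoid-rule integral over eta, done in the log domain
# (subtracting each row's maximum) so that exp() cannot underflow.
surface = log_lik(x, thetas[:, None, None], etas[None, :, None])
shift = surface.max(axis=1)
weights = np.full(etas.size, STEP)
weights[[0, -1]] = STEP / 2
integrated = np.log(np.exp(surface - shift[:, None]) @ weights) + shift + log_prior


def ll_at(eta_per_theta):
    """Log-likelihood at every grid azimuth, each paired with its own intensity."""
    return log_lik(x, thetas[:, None], eta_per_theta[:, None])


# Laplace approximation, equation (8): conditional MLE of eta, then the
# observed information I = -d2 log L / d eta2 by a central second difference.
eta_hat = etas[surface.argmax(axis=1)]
h = 0.05
obs_info = -(ll_at(eta_hat + h) - 2.0 * ll_at(eta_hat) + ll_at(eta_hat - h)) / h**2
obs_info = np.where(obs_info > 0, obs_info, np.nan)   # approximation needs I > 0
laplace = ll_at(eta_hat) + log_prior + 0.5 * np.log(2.0 * np.pi / obs_info)

best = integrated.argmax()
print(f"integrated-likelihood MLE: azimuth {np.rad2deg(thetas[best]):.0f} deg")
```

Near the simulated source azimuth, where the log-likelihood in η is close to quadratic and its mass lies well inside 10–60 dB, the two curves should agree closely; discrepancies are expected at azimuths where $\hat\eta$ is pushed to a boundary of the prior, which is the situation the caveat about the limits of integration describes.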
Removal of one dimension may seem counter to the premise that perceptual decisions based on the likelihood function should be deferred as long as possible. However, there are advantages to the statistical removal of dimensions at downstream stages of information processing. We are agnostic, at this point, as to which dimensions would be eliminated; in this example it could be either intensity or direction. From this perspective, note that although a unique global maximum exists, multiple modes can appear in the log-likelihood function. Given an inaccurate estimate of intensity, a maximum could be chosen corresponding to a direction near 90° rather than near the veridical direction of 45°. Integrating out the intensity dimension reveals a single maximum near the true direction. The integrated likelihood incorporates the added uncertainty introduced by the estimation of the eliminated parameter, so that the resulting curvature reflects the deflation of information attributable to the intensity parameter. The use of the profile or integrated likelihood is aligned with psychophysical judgment scenarios in which, for example, the observer is asked to estimate sound direction at different sound intensity levels. This is typically how behavioural localization studies are performed: direction estimation accuracy is of interest, not the accuracy of estimating the sound level. We are not suggesting that the intensity information is eliminated, only that different cortical regions may evaluate the likelihood functions differently, depending on the particular role of the cortical area.

6. Implications for cortical field processing

Profile likelihood or integrated likelihood methods can be used to analytically eliminate parameters that are hypothesized to be redundant, and thus allow us to make specific predictions of reduced likelihood functions.
Profile likelihood methods represent the simplest approach to the removal of parameters, as an approximation to marginal likelihood functions. Both approaches require a maximum likelihood estimate, which is often intractable or non-unique. Although there are numerical approaches to the derivation of estimators, analytical solutions can offer deeper insight into the consequences of multiplexing several parameter sources of information and of reductions in dimensionality. There is recent evidence for separate populations at the level of primary auditory cortex that segregate the coding of time-varying sounds into synchronous versus rate-based codes (Liu et al 2001), and that appear to respond invariantly across ranges of intensity, suggesting a form of profiled or integrated likelihood. Convergent horizontal connections between AI subregions have recently been revealed, suggesting the existence of intrinsic modularity within cortical fields (Schreiner et al 2000, Read et al 2001). These anatomically and functionally segregated subregions may reflect a form of integrated likelihood in which specific dimensions have been eliminated. Fisher information, and particularly its inverse, can serve as a link between neural population decoding performance and psychophysical performance. Recent psychophysical evidence is consistent with our results, which predict that direction estimation acuity declines at low intensities (Zu and Recanzone 2001), improves at moderate intensities and declines again at higher intensities (MacPherson and Middlebrooks 2000). The likelihood analyses presented here assumed the residual noise to be independent.
Our recent findings, and those of others, challenge the common interpretation that correlated noise between information channels always compromises ideal performance (Snippe and Koenderink 1992, Dan et al 1998, Oram et al 1998, Abbott and Dayan 1999, Gruner and Johnson 1999, Panzeri et al 1999a, 1999b, Jenison 2000), suggesting a need for further inquiry into the dependence structure of the noise within the population. The need is further driven by recent evidence that the structure of the ensemble covariance matrix is related to auditory cortical receptive field properties (Eggermont 1994, Eggermont and Smith 1995, Brosch and Schreiner 1999). Therefore, an important extension of the likelihood analyses developed here using non-Gaussian densities is to introduce a flexible covariance structure to describe the noise dependence. However, this extension is nontrivial, particularly if the resulting marginal distributions are to remain the alternative (non-Gaussian) densities. We are currently pursuing ways to couple the Tweedie densities in order to construct flexible multivariate densities and their likelihood functions.

Acknowledgment

This work was supported by National Institutes of Health Grants DC-03554, DC-00116, and HD-03352.

Appendix

There are two ways to evaluate expected Fisher information. One approach, the more general one, is to derive the expected value directly using integration by parts. The alternative approach is to use the score function, which for θ is defined as

$$ S(\theta,\phi,\eta) = \frac{\partial}{\partial\theta}\log L(\theta,\phi,\eta). \tag{9} $$

Under the regularity conditions met by the exponential family, the expected value of the score function is 0; therefore

$$ \operatorname{var} S(\theta,\phi,\eta) = E\left[S(\theta,\phi,\eta)^2\right] = E\left[\left(\frac{\partial}{\partial\theta}\log L(\theta,\phi,\eta)\right)^2\right]. \tag{10} $$

So the variance of the score function is equal to the expected Fisher information.
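The identity in equation (10) can be checked by simulation for the IG case treated in this appendix. The sketch draws IG latencies for a hypothetical 26-unit ensemble (toy receptive fields and an assumed shape parameter `LAM`, not recorded data), evaluates the score of equation (11) at the true parameters, and compares its sample mean and variance with the closed form of equation (14). The simulated values stand in for [x_i + γ]; the offset γ itself is not modelled. For a single IG unit with mean μ and shape λ, ∂ log f/∂μ = λ(x − μ)/μ³ and var(x) = μ³/λ, which is where the terms in equations (11)–(13) come from.

```python
import numpy as np

rng = np.random.default_rng(1)
CENTRES = np.deg2rad(np.linspace(10.0, 130.0, 13))
CENTRES = np.concatenate([CENTRES, -CENTRES])   # hypothetical 26-unit ensemble
LAM = 500.0                                     # assumed IG shape parameter (ms)
theta, eta = np.deg2rad(30.0), 40.0             # true azimuth (rad), intensity (dB)

# Hypothetical receptive fields rf_i and their analytic azimuth gradients.
gain = 30.0 * np.exp(-eta / 20.0)
mu = 10.0 + gain * (2.0 - np.cos(theta - CENTRES))
grad = gain * np.sin(theta - CENTRES)            # d rf_i / d theta

# Simulated latencies, standing in for [x_i + gamma]; rows are trials.
n_trials = 100_000
x = rng.wald(mu, LAM, size=(n_trials, mu.size))

# Score of equation (11), evaluated at the true parameters on every trial.
score = LAM * np.sum((x - mu) / mu**3 * grad, axis=1)

# Expected Fisher information in closed form, equation (14).
fisher = LAM * np.sum(grad**2 / mu**3)

print(f"mean score {score.mean():+.4f}, var score {score.var():.4f}, "
      f"equation (14) {fisher:.4f}")
```

With 100 000 trials the sample variance of the score should land within a few per cent of equation (14), and the sample mean should be close to zero relative to its standard error, in line with the zero-mean score assumed in equation (10).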
The score function for the multivariate IG assuming independence (equation (4)) and linked to the space–intensity receptive fields is

$$
S(\theta,\phi,\eta) = \lambda \sum_{i=1}^{N} \frac{[x_i+\gamma] - rf_i(\theta,\phi,\eta)}{rf_i(\theta,\phi,\eta)^3}\,\frac{\partial}{\partial\theta} rf_i(\theta,\phi,\eta).
\tag{11}
$$

In our application $E\{[x_i+\gamma]\} = rf_i(\theta,\phi,\eta)$. The expectation can be distributed and, under the assumption of independence, the cross-terms removed to yield

$$
E\left[S(\theta,\phi,\eta)^2\right] = \lambda^2 \sum_{i=1}^{N} \left(\frac{\frac{\partial}{\partial\theta} rf_i(\theta,\phi,\eta)}{rf_i(\theta,\phi,\eta)^3}\right)^2 E_{[x_i+\gamma]}\left[\left([x_i+\gamma] - rf_i(\theta,\phi,\eta)\right)^2\right].
\tag{12}
$$

The final term is just the variance of $[x_i+\gamma]$, which for the IG density is $rf_i(\theta,\phi,\eta)^3/\lambda$, so

$$
E\left[S(\theta,\phi,\eta)^2\right] = \lambda^2 \sum_{i=1}^{N} \left(\frac{\frac{\partial}{\partial\theta} rf_i(\theta,\phi,\eta)}{rf_i(\theta,\phi,\eta)^3}\right)^2 \frac{rf_i(\theta,\phi,\eta)^3}{\lambda}
\tag{13}
$$

and then

$$
E\left[\left(\frac{\partial}{\partial\theta}\log L(\theta,\phi,\eta)\right)^2\right] = \lambda \sum_{i=1}^{N} \frac{\left[\partial rf_i(\theta,\phi,\eta)/\partial\theta\right]^2}{rf_i(\theta,\phi,\eta)^3}.
\tag{14}
$$

References

Abbott L F and Dayan P 1999 The effect of correlated variability on the accuracy of a population code Neural Comput. 11 91–4
Barbieri R, Quirk M C, Frank L M, Wilson M A and Brown E N 2001 Construction and analysis of non-Poisson stimulus-response models of neural spiking activity J. Neurosci. Methods 105 25–37
Barndorff-Nielsen O E 1994 Adjusted versions of profile likelihood and directed likelihood, and extended likelihood J. R. Stat. Soc. Ser. B 56 125–40
Berger J O, Liseo B and Wolpert R L 1999 Integrated likelihood methods for eliminating nuisance parameters Stat. Sci. 14 1–22
Blahut R E 1987 Principles and Practice of Information Theory (Reading, MA: Addison-Wesley)
Brillinger D R 1988 Maximum likelihood analysis of spike trains of interacting nerve cells Biol. Cybern. 59 189–200
Brosch M and Schreiner C E 1999 Correlations between neural discharges are related to receptive field properties in cat primary auditory cortex Eur. J. Neurosci. 11 3517–30
Brown E N, Frank L M, Tang D D, Quirk M C and Wilson M A 1998 A statistical paradigm for neural spike train decoding applied to position prediction from ensemble firing patterns of rat hippocampal place cells J. Neurosci. 18 7411–25
Brown E N, Nguyen D P, Frank L M, Wilson M A and Solo V 2001 An analysis of neural receptive field plasticity by point process adaptive filtering Proc. Natl Acad. Sci. USA 98 12261–6
Brugge J F, Reale R A and Hind J E 1996 The structure of spatial receptive fields of neurons in primary auditory cortex of the cat J. Neurosci. 16 4420–37
Brugge J F, Reale R A, Hind J E, Chan J C, Musicant A D and Poon P W 1994 Simulation of free-field sound sources and its application to studies of cortical mechanisms of sound localization in the cat Hear. Res. 73 67–84
Chhikara R S and Folks J L 1989 The Inverse Gaussian Distribution: Theory, Methodology, and Applications (New York: Marcel Dekker)
Cover T M and Thomas J A 1991 Elements of Information Theory (New York: Wiley-Interscience)
Cox D R and Reid N 1987 Parameter orthogonality and approximate conditional inference J. R. Stat. Soc. Ser. B 49 1–39
Cox D R and Reid N 1992 A note on the difference between profile and modified profile likelihood Biometrika 79 408–11
Cox D R and Reid N 1993 A note on the calculation of adjusted profile likelihood J. R. Stat. Soc. Ser. B 55 467–71
Dan Y, Alonso J M, Usrey W M and Reid R C 1998 Coding of visual information by precisely correlated spikes in the lateral geniculate nucleus Nat. Neurosci. 1 501–7
Diciccio T J, Kass R E, Raftery A and Wasserman L 1997 Computing Bayes factors by combining simulation and asymptotic approximations J. Am. Stat. Assoc. 92 903–15
Diciccio T J and Stern S E 1993 On Bartlett adjustments for approximate Bayesian inference Biometrika 80 731–40
Diciccio T J and Stern S E 1994 Frequentist and Bayesian Bartlett correction of test statistics based on adjusted profile likelihoods J. R. Stat. Soc. Ser. B 56 397–408
Efron B 1998 R A Fisher in the 21st century: invited paper presented at the 1996 R A Fisher lecture Stat. Sci. 13 95–114
Efron B and Tibshirani R J 1993 An Introduction to the Bootstrap (New York: Chapman and Hall)
Eggermont J J 1994 Neural interaction in cat primary auditory cortex: 2. Effects of sound stimulation J. Neurophysiol. 71 246–70
Eggermont J J and Mossop J E 1998 Azimuth coding in primary auditory cortex of the cat. I. Spike synchrony versus spike count representations J. Neurophysiol. 80 2133–50
Eggermont J J and Smith G M 1995 Rate covariance dominates spontaneous cortical unit-pair correlograms Neuroreport 6 2125–8
Eisenman L M 1974 Neural encoding of sound location: an electrophysiological study in auditory cortex (AI) of the cat using free field stimuli Brain Res. 75 203–14
Fisher R A 1925 Theory of statistical estimation Proc. Camb. Phil. Soc. 22 700–25
Furukawa S, Xu L and Middlebrooks J C 2000 Coding of sound-source location by ensembles of cortical neurons J. Neurosci. 20 1216–28
Gautrais J and Thorpe S 1998 Rate coding versus temporal order coding: a theoretical approach Biosystems 48 57–65
Gawne T J, Kjaer T W and Richmond B J 1996 Latency: another potential code for feature binding in striate cortex J. Neurophysiol. 76 1356–60
Gold J I and Shadlen M N 2001 Neural computations that underlie decisions about sensory stimuli Trends Cogn. Sci. 5 10–16
Goutis C and Casella G 1999 Explaining the saddlepoint approximation Am. Stat. 53 216–24
Gruner C M and Johnson D H 1999 Correlation and neural information coding fidelity and efficiency Neurocomputing 26/27 163–8
He J, Hashikawa T, Ojima H and Kinouchi Y 1997 Temporal integration and duration tuning in the dorsal zone of cat auditory cortex J. Neurosci. 17 2615–25
Heil P 1997 Auditory cortical onset responses revisited. I. First-spike timing J. Neurophysiol. 77 2616–41
Heller J, Hertz J A, Kjaer T W and Richmond B J 1995 Information flow and temporal coding in primate pattern vision J. Comput. Neurosci. 2 175–93
Hopfield J J 1995 Pattern recognition computation using action potential timing for stimulus representation Nature 376 33–6
Imig T J, Irons W A and Samson F K 1990 Single-unit selectivity to azimuthal direction and sound pressure level of noise bursts in cat high-frequency primary auditory cortex J. Neurophysiol. 63 1448–66
Iyengar S and Liao Q M 1997 Modelling neural activity using the generalized inverse Gaussian distribution Biol. Cybern. 77 289–95
Jenison R L 1998 Models of direction estimation with spherical-function approximated cortical receptive fields Central Auditory Processing and Neural Modelling ed P W Poon and J F Brugge (New York: Plenum) pp 161–74
Jenison R L 2000 Correlated cortical populations can enhance sound localization performance J. Acoust. Soc. Am. 107 414–21
Jenison R L 2001 Decoding first-spike latency: a likelihood approach Neural Comput. 38 239–48
Jenison R L, Reale R A and Brugge J F 2001a Integrated likelihood estimation of sound-source direction under different intensity levels by ensembles of AI cortical neurons Soc. Neurosci. Abs. 30
Jenison R L, Reale R A and Brugge J F 2001b Profile likelihood estimation of sound-source direction under different intensity levels by ensembles of AI cortical neurons Assoc. Res. Otolaryngol. Abs. 213
Jenison R L, Reale R A, Hind J E and Brugge J F 1998 Modelling of auditory spatial receptive fields with spherical approximation functions J. Neurophysiol. 80 2645–56
Jorgensen B 1983 Maximum-likelihood estimation and large-sample inference for generalized linear and non-linear regression models Biometrika 70 19–28
Jorgensen B 1987 Exponential dispersion models J. R. Stat. Soc. Ser. B 49 127–62
Jorgensen B 1999 Dispersion models Encyclopedia of Statistical Sciences ed S Kotz, C B Read and D L Banks (New York: Wiley) pp 172–84
Jorgensen B, Lundbye-Christensen S, Song P X and Sun L 1997 State space models for multivariate longitudinal data of mixed types Can. J. Stat. 25 425
Kass R E, Tierney L and Kadane J B 1989 Approximate methods for assessing influence and sensitivity in Bayesian analysis Biometrika 76 663–74
Kass R E and Ventura V 2001 A spike-train probability model Neural Comput. 13 1713–20
Knight P L 1977 Representation of the cochlea within the anterior auditory field (AAF) of the cat Brain Res. 130 447–67
Lehmann E L and Casella G 1998 Theory of Point Estimation 2nd edn (New York: Springer)
Levine M W 1991 The distribution of the intervals between neural impulses in the maintained discharges of retinal ganglion cells Biol. Cybern. 65 459–67
Liu T, Liang L and Wang X 2001 Temporal and rate representations of time-varying signals in the auditory cortex of awake primates Nature Neurosci. 4 1131–8
MacPherson E A and Middlebrooks J C 2000 Localization of brief sounds: effects of level and background sounds J. Acoust. Soc. Am. 108 1834–49
Martignon L, Deco G, Laskey K, Diamond M, Freiwald W and Vaadia E 2000 Neural coding: higher-order temporal patterns in the neurostatistics of cell assemblies Neural Comput. 12 2621–53
McCullagh P and Tibshirani R 1990 A simple method for the adjustment of profile likelihoods J. R. Stat. Soc. Ser. B 52 325–44
Merzenich M M, Knight P L and Roth G I 1975 Representation of cochlea within primary auditory cortex in the cat J. Neurophysiol. 38 231–49
Middlebrooks J C 1998 Location coding by auditory cortical neurons Central Auditory Processing and Neural Modelling ed P W Poon and J F Brugge (New York: Plenum) pp 139–48
Middlebrooks J C, Clock A E, Xu L and Green D M 1994 A panoramic code for sound location by cortical neurons Science 264 842–4
Middlebrooks J C and Pettigrew J D 1981 Functional classes of neurons in primary auditory cortex of the cat distinguished by sensitivity to sound location J. Neurosci. 1 107–20
Middlebrooks J C and Zook J M 1983 Intrinsic organization of the cat's medial geniculate body identified by projections to binaural response-specific bands in the primary auditory cortex J. Neurosci. 3 203–24
Mukerjee R and Reid N 1999 On a property of probability matching priors: matching the alternative coverage probabilities Biometrika 86 333–40
Murphy S A and Van der Vaart A W 2000 On profile likelihood J. Am. Stat. Assoc. 95 449–65
Musicant A D, Chan J C and Hind J E 1990 Direction-dependent spectral properties of cat external ear: new data and cross-species comparisons J. Acoust. Soc. Am. 87 757–81
Nelder J A and Wedderburn R W 1972 Generalized linear models J. R. Stat. Soc. Ser. A 135 370–84
Ogata Y and Akaike H 1982 On linear intensity models for mixed doubly stochastic Poisson and self-exciting point processes J. R. Stat. Soc. Ser. B 44 102–7
Oram M W, Foldiak P, Perrett D I and Sengpiel F 1998 The ‘Ideal Homunculus’: decoding neural population signals TINS 21 259–65
Panzeri S, Schultz S R, Treves A and Rolls E T 1999a Correlations and the encoding of information in the nervous system Proc. R. Soc. B 266 1001–12
Panzeri S, Treves A, Schultz S and Rolls E T 1999b On decoding the responses of a population of neurons from short time windows Neural Comput. 11 1553–77
Paradiso M A 1988 A theory for the use of visual orientation information which exploits the columnar structure of striate cortex Biol. Cybern. 58 35–49
Pawitan Y 2001 In All Likelihood: Statistical Modelling and Inference Using Likelihood (Oxford: Clarendon)
Petersen R S, Panzeri S and Diamond M E 2001 Population coding of stimulus location in rat somatosensory cortex Neuron 32 503–14
Rajan R, Aitkin L M and Irvine D R 1990 Azimuthal sensitivity of neurons in primary auditory cortex of cats. II. Organization along frequency-band strips J. Neurophysiol. 64 888–902
Read H L, Winer J A and Schreiner C E 2001 Modular organization of intrinsic connections associated with spectral tuning in cat auditory cortex Proc. Natl Acad. Sci. USA 98 8042–7
Reale R A, Chen J, Hind J E and Brugge J F 1996 An implementation of virtual acoustic space for neurophysiological studies of directional hearing Virtual Auditory Space: Generation and Applications ed S Carlile (Austin, TX: RG Landes Co.) pp 153–84
Reale R A and Imig T J 1980 Tonotopic organization in auditory cortex of the cat J. Comp. Neurol. 192 265–91
Schreiner C E 1998 Spatial distribution of responses to simple and complex sounds in the primary auditory cortex Audiol. Neurootol. 3 104–22
Schreiner C E and Cynader M S 1984 Basic functional organization of second auditory cortical field (AII) of the cat J. Neurophysiol. 51 1284–305
Schreiner C E, Read H L and Sutter M L 2000 Modular organization of frequency integration in primary auditory cortex Annu. Rev. Neurosci. 23 501–29
Seshadri V 1999 The Inverse Gaussian Distribution: Statistical Theory and Applications (New York: Springer)
Seung H S and Sompolinsky H 1993 Simple models for reading neuronal population codes Proc. Natl Acad. Sci. USA 90 10749–53
Snippe H P and Koenderink J J 1992 Information in channel-coded systems: correlated receivers Biol. Cybern. 67 183–90
Sompolinsky H, Yoon H, Kang K J and Shamir M 2001 Population coding in neuronal systems with correlated noise Phys. Rev. E 64 051904
Stern S E 1997 A second-order adjustment to the profile likelihood in the case of a multidimensional parameter of interest J. R. Stat. Soc. Ser. B 59 653–65
Sutter M L and Schreiner C E 1991 Physiology and topography of neurons with multipeaked tuning curves in cat primary auditory cortex J. Neurophysiol. 65 1207–26
Thorpe S, Delorme A and Van Rullen R 2001 Spike-based strategies for rapid processing Neural Networks 14 715–25
Tierney L, Kass R E and Kadane J B 1989a Approximate marginal densities of nonlinear functions Biometrika 76 425–33
Tierney L, Kass R E and Kadane J B 1989b Fully exponential Laplace approximations to expectations and variances of nonpositive functions J. Am. Stat. Assoc. 84 710–16
Tuckwell H 1988 Introduction to Theoretical Neurobiology (Cambridge: Cambridge University Press)
Tweedie M C K 1941 A mathematical investigation of some electrophoretic measurements of colloids MS Thesis University of Reading
Tweedie M C 1947 Functions of a statistical variate with given means, with special reference to Laplacian distributions Proc. Camb. Phil. Soc. 49 41–9
Tweedie M C 1957 Statistical properties of the inverse Gaussian distributions Ann. Math. Stat. 28 362–77
Wiener M C and Richmond B J 1999 Using response models to estimate channel capacity for neuronal classification of stationary visual stimuli using temporal coding J. Neurophysiol. 82 2861–75
Woolsey C N 1961 Organization of cortical auditory system Sensory Communication ed W A Rosenblith (Cambridge, MA: MIT Press) pp 235–57
Xu L, Furukawa S and Middlebrooks J C 1998 Sensitivity to sound-source elevation in nontonotopic auditory cortex J. Neurophysiol. 80 882–94
Zu T K and Recanzone G H 2001 Differential effect of near-threshold stimulus intensities on sound localization performance in azimuth and elevation of human subjects J. Assoc. Res. Otolaryn. 2 246–56