* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Compiler Design Chapter1-Week3-02-11

Regular expression wikipedia , lookup

C Sharp (programming language) wikipedia , lookup

C Sharp syntax wikipedia , lookup

Name mangling wikipedia , lookup

String literal wikipedia , lookup

Reserved word wikipedia , lookup

Gene expression programming wikipedia , lookup

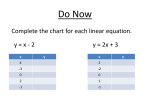

A Simple Syntax-Directed Translator 1 • • • • • • • 2.2 Syntax-Directed Translation 2.3 Parsing 2.4 A Translator for Simple Expressions 2.5 Lexical Analysis 2.6 Symbol Tables 2.7 Intermediate Code Generation 2.8 Summary of Chapter 2 2 Syntax-Directed Translation • Syntax-directed translation is done by attaching rules or program fragments to productions in a grammar. • For example, consider an expression expr generated by the production: • We can translate expr by exploiting its structure, as in the following pseudo-code: 3 Concepts of syntax-directed translation • Attributes. – An attribute is any quantity associated with a programming construct. Examples of attributes are data types of expressions, the number of instructions in the generated code, or the location of the first instruction in the generated code for a construct , among many other possibilities. • (Syntax- directed) translation schemes. – A translation scheme is a notation for attaching program fragments to the productions of a grammar. The program fragments are executed when the production is used during syntax analysis. 4 Postfix Notation • The postfix notation for an expression E can be defined inductively as follows: 5 Postfix Notation • Example 2.8 : The postfix notation for (9-5)+2 • What about?? 9- (5+2) • Evaluate?? 6 Postfix Notation • How to evaluate?? – Repeatedly scan the postfix string from the left , until you find an operator. – Then, look to the left for the proper number of operands, – group this operator with its operands. – Evaluate the operator on the operands, and replace them by the result. – repeat the process, continuing to the right and searching for another operator. 7 Postfix Notation • Example 2.9 : evaluate the following postfix notation: 952+-3* 8 Synthesized Attributes • We associate attributes with nonterminals and terminals. Then, we attach rules to the productions of the grammar; these rules describe how the attributes are computed at those nodes of the parse tree where the production in question is used to relate a node to its children. 9 10 11 Tree Traversals • Tree traversals will be used for describing attribute evaluation and for specifying the execution of code fragments in a translation scheme. – A traversal of a tree starts at the root and visits each node of the tree in some order. – A depth-first traversal starts at the root and recursively visits the children of each node in any order, not necessarily from left to right . It is called "depth first“ because it visits an unvisited child of a node whenever it can, so it visits nodes as far away from the root (as "deep" ) as quickly as it can. 12 13 Translation Schemes • Preorder and Postorder Traversals 14 15 16 Semantic actions 17 Example Actions for postfix translating 9-5+2 into 95-2+ 18 2.4 Parsing • Parsing is the process of determining how a string of terminals can be generated by a grammar. – Top-Down Parsing 19 steps 20 21 Use ϶-Productions • Predictive Parsing (self study) • When to Use ϶-Productions – predictive parser uses an ϶ -production as a default when no other production can be used 22 23 2.5 A Translator for Simple Expressions • Using the techniques of the last three sections, we now construct a syntax directed translator – A syntax-directed translation scheme often serves as the specification for a translator. – The scheme in Fig. 2.21 (repeated from Fig. 2.15) defines the translation to be performed here 24 Abstract and Concrete Syntax • A useful starting point for designing a translator is a data structure called an abstract syntax tree. • In an abstract syntax tree for an expression, each interior node represents an operator; the children of the node represent the operands of the operator. 25 Example 26 27 syntax tree vs. parse tree • in the syntax tree, interior nodes represent programming constructs while in the parse tree, the interior nodes represent nonterminals. 28 29 2.6 Lexical Analysis • A lexical analyzer reads characters from the input and groups them into "token objects.“ • The lexical analyzer in this section allows numbers, identifiers, and "white space" (blanks, tabs, and newlines) to appear within expressions. 30 Reading Ahead • A lexical analyzer may need to read ahead some characters before it can decide on the token to be returned to the parser. • For example, a lexical analyzer for C or Java must read ahead after it sees the character >. – If the next character is =, then > is part of the character sequence >=, the lexeme for the token for the "greater than or equal to" operator. – Otherwise > itself forms the "greater than" operator, and the lexical analyzer has read one character too many. 31 Constants 32 Recognizing Keywords and Identifiers • Most languages use fixed character strings such as for, do, and if , as punctuation marks or to identify constructs. • Such character strings are called keywords. • Character strings are also used as identifiers to name variables, arrays, functions, and the like. • Grammars routinely treat identifiers as terminals to simplify the parser, which can then expect the same terminal, say id, each time any identifier appears in the input. For example, on input 33 Recognizing Keywords and Identifiers • the parser works with the terminal stream id = id + id. The token for id has an attribute that holds the lexeme. • Writing tokens as tuples, we see that the tuples for the input stream (2.6) are • Keywords generally satisfy the rules for forming identifiers, so a mechanism is needed for deciding when a lexeme forms a keyword and when it forms an identifier. • The problem is easier to resolve if keywords are reserved; i.e., if they cannot be used as identifiers. Then, a character string forms an identifier only if it is not a keyword. 34 2.7 Symbol table • Symbol tables are data structures that are used by compilers to hold information about source-program constructs. • The information is collected incrementally by the analysis phases of a compiler and used by the synthesis phases to generate the target code. • Entries in the symbol table contain information about an identifier such as its character string (or lexeme) , its type, its position in storage, and any other relevant information. Symbol tables typically need to support multiple declarations of the same identifier within a program. 35 Scope • Symbol Table Per Scope: The term "scope of identifier x' really refers to the scope of a particular declaration of x. • Scopes are important, because the same identifier can be declared for different purposes in different parts of a program. • If blocks can be nested, several declarations of the same identifier can appear within a single block. 36 37 2.8 Intermediate Code Generation • Two Kinds of Intermediate Representations 38 Construction of Syntax Trees 39 L-values and R-values • There is a distinction between the meaning of identifiers on the left and right sides of an assignment. In each of the assignments the right side specifies an integer value, while the left side specifies where the value is to be stored. 40 Type Checking 41 Three-Address Code 42 Example 43 2.9 Summary o f Chapter 2 44