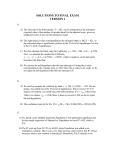

Download p - HKUST Business School

Data assimilation

German tank problem

Choice modelling

Confidence interval

Least squares

Linear regression

Regression analysis