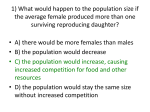

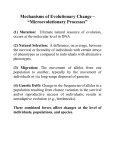

Lecture 5 – Categorical Data and Survival Analyses

OUTLINE
• Definition
• Common CDA
  – Descriptive summaries
  – Tests of association
  – Modeling
• Extensions
• Other examples in CDA

What is Categorical Data Analysis?
• Statistical analysis of data that are categorical (cannot be summarized with mean +/- SD)
• Includes dichotomous, ordinal, and nominal outcomes
• Examples: disease prevalence, discharge location, treatment adherence (yes/no)

Examples of Studies with CDA
• MI after CABG
• Diagnostic studies looking at the sensitivity and specificity of a new test/procedure
• Discharge location after a new surgical intervention

How to analyze words?
• Order vs. no order
• Break down mean +/- SD for two groups
• Do the same: break down outcome %’s for two groups
• Comparing length of stay after CABG:
  – New Trt = 19.2 +/- 2.7
  – SOC = 21.3 +/- 3.3
• Comparing prevalence of MI:
  – New Trt = 16%
  – SOC = 24%
• Are these differences statistically significant? Clinically significant?

Choice of End Point
• Some designs have a binary response variable
  – MI after 3 years
  – Overall Survival
  – Time to CVD
  – Time to recurrent MI
• Can dichotomize as a 1-year rate (Yes/No)

What is Categorical Data Analysis?
• paper example

Common CDA
• Descriptive summaries
• Tests for association
• Modeling

Descriptive summaries

Let’s Talk Data… Descriptive Summaries in CDA
• Nominal – categorical data measured in unordered categories (summarize with %’s)
• Ordinal – categorical data measured in ordered categories (summarize with %’s)
• Continuous – quantitative data measured on a continuum (summarize with many measures)

Types of Data
• Nominal – categorical data measured in unordered categories
  – Race
  – Blood type
  – Gender
• Ordinal – categorical data measured in ordered categories
  – Likert (unlikely, neutral, likely)
  – Cancer stages
  – Socio-economic status (low, medium, high)
• Continuous – quantitative data measured on a continuum
  – Serum creatinine
  – Height/Weight/BMI
  – Diastolic blood pressure
  – Tumor measurements

Compare Categorical Outcomes between groups
• How to assess if a predictor is associated with a categorical outcome?
• Intuitive approach: get the %’s of the outcome prevalence within each predictor group.
• Example: New Trt and MI.
  – New Trt response rate = 16%
  – SOC response rate = 24%

Contingency Tables

General layout:

| Group | MI: Yes | MI: No | Total |
|-------|---------|--------|-------|
| New   | a       | b      | a+b   |
| Old   | c       | d      | c+d   |
| Total | a+c     | b+d    | n=a+b+c+d |

Example:

| Group | No MI | MI | Total |
|-------|-------|----|-------|
| New   | 12    | 8  | 20    |
| Old   | 4     | 16 | 20    |
| Total | 16    | 24 | N=40  |

CDA Summary with Contingency Table
• Research question: Is there a relationship between Group and Attacked Heart (MI)?
• Better to convert the table into percentages (easier to see)

What the data might look like…

Step 1. Break down the frequencies

|         | No MI | MI |
|---------|-------|----|
| New TRT | 12    | 8  |
| Old TRT | 4     | 16 |

Step 2. Get the different %’s. Each cell shows count (cell %), row %, col %.

|         | No MI                        | MI                            | Total |
|---------|------------------------------|-------------------------------|-------|
| New TRT | 12 (30%), row 60%, col 75%   | 8 (20%), row 40%, col 33%     | 20    |
| Old TRT | 4 (10%), row 20%, col 25%    | 16 (40%), row 80%, col 67%    | 20    |
| Total   | 16                           | 24                            | 40    |

Row vs. Column %’s: It’s your choice
• Row %’s:
  – 40% of New trt patients had MI vs. 80% of Old trt patients had MI
• Col %’s:
  – 75% of No MI were in the New trt group vs. 33% of MI were in the New trt group
• P-value for test of association is the same!

Tests for Association

CDA tests for Association
• Is there a significant association between Group and MI?
• What is a good way to test for an association between the two?

Test for significant differences
• The most common tests are the Chi-square test and Fisher’s Exact test.
• Research question: Is there an association between treatment group and MI?
• To answer this: compare what you would expect if there was no association to what you observed

Expect if no relationship? (Only the margins are known.)

|         | No MI | MI | Total |
|---------|-------|----|-------|
| New TRT |       |    | 20    |
| Old TRT |       |    | 20    |
| Total   | 16    | 24 | 40    |

Same % with MI by Group. Each cell shows count (cell %), row %, col %.

|         | No MI                       | MI                           | Total |
|---------|-----------------------------|------------------------------|-------|
| New TRT | 8 (20%), row 40%, col 50%   | 12 (30%), row 60%, col 50%   | 20    |
| Old TRT | 8 (20%), row 40%, col 50%   | 12 (30%), row 60%, col 50%   | 20    |
| Total   | 16                          | 24                           | 40    |

Test for significant differences
• Having the exact same response % in both groups would favor “no association”
• There is another general way to calculate what you “expect”
• Use row totals, column totals, and the grand total to calculate “Expected” frequencies

Observed vs. Expected Frequencies
• Observed frequencies = actual counts
• “Expected” frequencies = Row total x Column total / Grand total (why?)
  – Under no association, the chance of landing in a given cell is (Row total / Grand total) x (Column total / Grand total); multiplying by the Grand total gives the expected count.

What you actually observed in Study

| Actual  | No MI | MI | Total |
|---------|-------|----|-------|
| New TRT | 12    | 8  | 20    |
| Old TRT | 4     | 16 | 20    |
| Total   | 16    | 24 | 40    |

“Expected” frequencies

|         | No MI (Actual) | No MI (Expected) | MI (Actual) | MI (Expected) | Total |
|---------|----------------|------------------|-------------|---------------|-------|
| New TRT | 12             | 8                | 8           | 12            | 20    |
| Old TRT | 4              | 8                | 16          | 12            | 20    |
| Total   | 16             |                  | 24          |               | 40    |

Chi-square test
• Quantify whether the actual frequencies are far enough away from the Expected (assuming no association)
• We can quantify this using the Chi-square test statistic
• We can get the p-value to determine if there is a significant association.
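The expected-frequency rule and the observed-versus-expected comparison can be sketched in a few lines of Python. This is a minimal illustration using the 2x2 counts from the slides (New TRT: 12 No MI, 8 MI; Old TRT: 4 No MI, 16 MI). The function names are made up for this example, and the p-value helper assumes 1 degree of freedom (a chi-square variable with 1 df is the square of a standard normal), so it applies to 2x2 tables only.

```python
import math

def expected_counts(observed):
    """Expected cell counts under no association: row total * column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]

def chi_square_statistic(observed):
    """Pearson chi-square: sum over cells of (observed - expected)^2 / expected."""
    expected = expected_counts(observed)
    return sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(observed, expected)
        for o, e in zip(obs_row, exp_row)
    )

def p_value_df1(statistic):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(statistic / 2))

# Rows: New TRT, Old TRT; columns: No MI, MI (counts from the slides).
observed = [[12, 8], [4, 16]]
expected = expected_counts(observed)
statistic = chi_square_statistic(observed)
p_value = p_value_df1(statistic)
print(expected)                                  # [[8.0, 12.0], [8.0, 12.0]]
print(round(statistic, 2), round(p_value, 4))    # 6.67 0.0098
```

For tables larger than 2x2, or when any expected count falls below 5, a statistics library would be the safer route than this hand-rolled sketch.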
Chi-square test for association in RxC table
• H0: There is no association between rows and columns

$$\text{Expected}_{ij} = \frac{\text{Total}_{\text{ROW}\,i} \times \text{Total}_{\text{COL}\,j}}{\text{Total}_{\text{OVERALL}}}$$

• The classic Pearson’s chi-squared test of independence:

$$\chi^2 = \sum_{i=1}^{2}\sum_{j=1}^{2} \frac{(\text{Observed}_{ij} - \text{Expected}_{ij})^2}{\text{Expected}_{ij}} \sim \chi^2_1 \text{ dist}$$

• For a 2x2 table, df = (2-1) x (2-1) = 1
• Conservatively, we require Expected ≥ 5 for all i, j

Chi-square Test

$$TS = \sum_{i=1}^{2}\sum_{j=1}^{2} \frac{(\text{Observed}_{ij} - \text{Expected}_{ij})^2}{\text{Expected}_{ij}} = \frac{(12-8)^2}{8} + \frac{(8-12)^2}{12} + \frac{(4-8)^2}{8} + \frac{(16-12)^2}{12} = 6.67$$

• The associated P-value for this Chi-square value is p=0.0098. Thus, we conclude group and MI are significantly associated (given α = 0.05).

Fisher’s Exact Test
• Fisher’s Exact test will test similar hypotheses as the Chi-square test.
• Use Fisher’s Exact test when the assumptions of the Chi-square test are not satisfied.
• That is, when you have Expected < 5 (basically, when the cell sample size is small).

Confidence Intervals for %’s

Confidence Interval for %’s
• You conduct your follow-up after CABG study and accrue 40 patients.
• After 3 years, 20 out of all 40 patients have had a MI.
• Q1. What is your best guess at the true (population) MI rate at 3 years?
  A. Based on your sample, 20/40 = 50%

[Diagram: Sampling variability and inference. One sample gives MI = 50%, another sample gives MI = 44%; in both cases the population MI rate at 3 yrs is unknown.]

Confidence Interval for %’s
• A good way to make inference about the range of plausible values of the population % is to calculate a Confidence Interval (CI).
• Q2. How much precision do you have in terms of estimating the MI rate at 3 yrs. in the population based on your sample?

95% Confidence Intervals
• 95% Confidence Interval for Mean:

$$\bar{X} \pm 2\,\frac{sd}{\sqrt{n}}$$

• 95% Confidence Interval for Proportion (Standard “Wald” CI):

$$\hat{p} \pm 2\sqrt{\frac{\hat{p}(1-\hat{p})}{n}}$$

Confidence Interval for %’s
• Q2. How much precision do you have in terms of estimating the MI rate in the population based on your sample?
(Remember, 20 of 40 total had MI)
  A. A 95% Wilson CI for the population MI rate is (35.2%, 64.8%). Thus, if we repeated our study over and over again, each time drawing a sample of 40 patients and computing a CI this way, approximately 95% of those intervals would contain the true population MI rate at 3 yrs.

Confidence Interval for %’s
• What’s interesting is that there are “lucky” and “unlucky” combinations of p (response rate) and N (sample size)
• That is, for a given sample size:
  * for some p you may have higher ability to make inference
  * for some p you may have less ability!
• Not to scald the Wald, but not all CI’s are created equal
• Paper

Modeling in CDA
• Modeling is done with variations of Logistic Regression:
  – Dichotomous
  – Ordinal (Proportional odds)
  – Nominal (Generalized logit)
  – Conditional (Matched-pairs)
  – Exact (small sample size/rare outcome)
  – Longitudinal (GEE, GLMM)
• Simple (1 predictor) vs. Multivariable (>1 predictor/adjusted)

Why use adjusted analysis?
• Do you think patient demographics or clinical characteristics at baseline would affect MI?
• What if half of the patients are all <30 yrs. old and half are all >80 yrs. old?
• What are some possible confounders of response? Effect modifiers?
• These are testable in adjusted analyses.

You may not need an adjusted analysis.
• Trials typically have well-defined, specific patient populations of interest.
• Thus, inclusion/exclusion criteria might have removed variability from potential confounders
• A well designed, well executed trial usually does not require intensive and complex analysis.

What is Logistic Regression?
• In a nutshell: a statistical method used to model dichotomous or binary outcomes (but not limited to these) using predictor variables. Used when the research question is focused on whether or not an event occurred, rather than when it occurred (time course information is not used).

What is Logistic Regression?
• What is the “Logistic” component?
Instead of modeling the outcome, Y, directly, the method models Pr(Y) using the logistic function.

What is Logistic Regression?
• What is the “Regression” component?
Methods used to quantify association between an outcome and predictor variables. Could be used to build predictive models as a function of predictors.

What can we use Logistic Regression for?
• To estimate adjusted prevalence rates, adjusted for potential confounders (sociodemographic or clinical characteristics)
• To estimate the effect of a treatment on a dichotomous outcome, adjusted for other covariates
• To explore how well characteristics predict a categorical outcome

Fig 1. Logistic regression curves for the three drug combinations. The dashed reference line represents the probability of DLT of .33. The estimated MTD can be obtained as the value on the horizontal axis that coincides with a vertical line drawn through the point where the dashed line intersects the logistic curve.
Taken from “Parallel Phase I Studies of Daunorubicin Given With Cytarabine and Etoposide With or Without the Multidrug Resistance Modulator PSC-833 in Previously Untreated Patients 60 Years of Age or Older With Acute Myeloid Leukemia: Results of Cancer and Leukemia Group B Study 9420” Journal of Clinical Oncology, Vol 17, Issue 9 (September), 1999: 283.
http://www.jco.org/cgi/content/full/17/9/2831

Logistic Regression quantifies “effects” using Odds Ratios
• Logistic regression does not model the outcome directly; modeling the outcome directly (as in linear regression) leads to effect estimates quantified by means (i.e., differences in means)
• Estimates of effect are instead quantified by “Odds Ratios”

Logistic Regression & Odds Ratio (OR)
• The odds ratio is equally valid for retrospective, prospective, or cross-sectional sampling designs
• That is, regardless of the design it estimates the same population parameter (not true for Relative Risk)

Relationship between Odds & Probability

$$\text{Odds}(\text{event}) = \frac{\text{Probability}(\text{event})}{1 - \text{Probability}(\text{event})} \qquad \text{Probability}(\text{event}) = \frac{\text{Odds}(\text{event})}{1 + \text{Odds}(\text{event})}$$

The Odds Ratio
Definition of Odds Ratio: ratio of two odds estimates.
Example: Suppose 16 out of 40 people in the trt group had a MI and only 5 out of 25 in the placebo group had a MI.

$$\Pr(\text{MI} \mid \text{trt group}) = \frac{16}{40} = 0.40 \qquad \Pr(\text{MI} \mid \text{placebo group}) = \frac{5}{25} = 0.20$$

The Odds Ratio
Example cont’d: So, if Pr(MI | trt) = 0.40 and Pr(MI | placebo) = 0.20, then:

$$\text{Odds}(\text{MI} \mid \text{trt group}) = \frac{0.40}{1 - 0.40} = 0.667 \qquad \text{Odds}(\text{MI} \mid \text{placebo group}) = \frac{0.20}{1 - 0.20} = 0.25$$

$$\text{OR}_{\text{Trt vs. Placebo}} = \frac{0.667}{0.25} = 2.67$$

Interpretation of the Odds Ratio
• Example cont’d: Outcome = MI, OR (Trt vs. Plb) = 2.67
Then, the odds of a MI in the treatment group were estimated to be 2.67 times the odds of having a MI in the placebo group.
Alternatively, the odds of having a MI were 167% higher in the treatment group than in the placebo group.

Odds Ratio vs. Relative Risk
• An Odds Ratio of 2.67 for trt. vs. placebo does NOT mean that MI is 2.67 times as LIKELY to occur.
• It DOES mean that the ODDS of MI are 2.67 times as high for trt. vs. placebo.

Odds Ratio vs.
Relative Risk
• The Odds Ratio is NOT mathematically equivalent to the Relative Risk (Risk Ratio)
• However, for “rare” events, the Odds Ratio can approximate the Relative Risk (RR):

$$\text{OR} = \text{RR} \times \frac{1 - P(\text{MI} \mid \text{plb})}{1 - P(\text{MI} \mid \text{trt})}$$

The Logistic Regression Model

Logistic Regression:

$$\ln\left(\frac{P(Y)}{1 - P(Y)}\right) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_K X_K$$

Linear Regression:

$$Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_K X_K$$

In the logistic regression model:
• Y is the dichotomous outcome
• X_1, …, X_K are the predictor variables
• β_0 is the intercept and β_1, …, β_K are the model coefficients
• ln(P(Y) / (1 − P(Y))) is the log(odds) of the outcome

The Logistic Regression Model

$$\ln\left(\frac{P(Y)}{1 - P(Y)}\right) = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_K X_K$$

$$P(Y) = \frac{\exp(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_K X_K)}{1 + \exp(\beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_K X_K)}$$

In this latter form, the logistic regression model directly relates the probability of Y to the predictor variables.

Application of Logistic Regression:
• paper example

Extensions of Logistic Regression
• Outcomes with more than 2 categories (polytomous or polychotomous)
• Cumulative logit model
  – Proportional odds model for ordinal outcomes (ordered categories)
• Generalized logit model for nominal outcomes or nonproportional odds models (unordered categories)

Extensions of Logistic Regression
• Ordinal Logistic Regression model:
  – Fits a logistic regression model with g-1 intercepts for a g category outcome and one model coefficient for each predictor
  – Models cumulative probability of being in a “higher” category

Discharge Location as Ordinal (Died, Assisted, Home)
• There is no law that says you can’t model all categories of Discharge Location
• Ordinal logistic regression example:

Ordinal Outcome (Died, Assisted, Home)

| Predictor       | OR   | P-value |
|-----------------|------|---------|
| Trt vs. Control | 1.24 | 0.036   |
| M vs. F         | 0.87 | 0.163   |

OR(Trt vs C) = 1.24 means there was 24% higher odds of being in a higher DL category for Treatment vs. Control (adjusting for gender).
OR(M vs F) = 0.87 means there was 13% lower odds of being in a higher DL category for Males vs. Females (adjusting for Trt group).

Extensions of Logistic Regression
• Nominal Logistic Regression Model:
  – Fits a logistic regression model with g-1 intercepts and g-1 model coefficients for a g category outcome
  – Model captures the multinomial probability of being in a particular category using generalized logits

Nominal Logistic Regression
• Doesn’t make the “Proportional odds” assumption
• Separate OR’s for C-1 categories of a C category outcome (get an OR for every group except the Referent)
• Example:

| Predictor: Trt vs. Control | OR   | P-value (Overall = 0.048) |
|----------------------------|------|---------------------------|
| Home vs. Died              | 1.22 | 0.236                     |
| Assisted vs. Died          | 1.56 | 0.034                     |

• Thus, there was 56% higher odds of being discharged to Assisted Living compared to Dying for Trt. vs. Control.

Extensions of Logistic Regression
• Longitudinal data / repeated measures data / clustered data with binary outcomes
• Multilevel models (nested data structures)
  – GEE (Generalized Estimating Equations)
  – GLMM (Generalized Linear Mixed Models)

Repeated Measures / Longitudinal data
• Longitudinal data = data on subjects over time
• Repeated measures need to be taken into account when testing for differences
• Need to investigate correlation of repeated measures

Extensions to Logistic Regression
• Exact Logistic Regression
  – Small sample size
  – Adequate sample size but rare event (sparse data)

Questions?

Part II. Analysis of Time-to-event Data (A.K.A., Survival Analysis)

What do we mean by Time?
• Length of follow-up till the event of interest occurs
• Follow-up can start at (for example)
  1. Randomization into a clinical trial
  2. Time of employment
  3. First contact on record in retrospective cohort
• Age of the individual at the time of the event

What is Survival Analysis?
• Survival analysis is a collection of statistical analysis techniques where the outcome is time to an event.
• Survival or time-to-event outcomes are defined by the pair of random variables (ti, δi) that give the observation time and an indicator of whether or not the event occurred

What do we mean by Event?
• Usually we mean death, thus the name “survival” analysis
• Other examples:
  – Cancer relapse or recurrence
  – Disease incidence
• Can also be a positive outcome:
  – Discharge from psychiatric counseling
  – Normalization of WBC count
  (in these examples, death would be a censored outcome)

Censoring
• In the pair of random variables (ti, δi) that constitute survival outcomes:
  – ti is an observed variable representing time (e.g., actual time until death or time until last follow-up)
  – δi is a Bernoulli random variable (0,1) or indicator of whether the observation is censored or not
  – δi = 1 if we observed a failure, 0 if we have a censored observation

Censoring Occurs
• When we have incomplete information about the exact survival time due to a random factor
  – Non-informative censoring: whether an observation is censored or not is independent of the value of the observation.
  – Informative censoring: whether an observation is censored or not is dependent on the value of the observation
  – E.g., we use dates seen in clinic to provide censoring times (without attempted phone contact to verify vital status)
• We will require non-informative censoring mechanisms. If censoring is informative, then these methods will generate biased results.
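As a small illustration of the (ti, δi) pair, the sketch below builds it for a subject who is followed until either the event or the last follow-up, whichever comes first. The function name `observe` and the example times are hypothetical and are not taken from the lecture.

```python
def observe(event_time, last_follow_up):
    """Return (t, delta) for one subject.

    t     = observed time: the event time if the event happened during
            follow-up, otherwise the time of last follow-up.
    delta = 1 if the event (failure) was observed, 0 if censored.
    """
    if event_time is not None and event_time <= last_follow_up:
        return event_time, 1
    return last_follow_up, 0

# Hypothetical subjects: (true event time in months, or None if no event; last follow-up).
subjects = [(14.0, 36.0), (50.0, 36.0), (None, 20.5)]
pairs = [observe(event, follow_up) for event, follow_up in subjects]
print(pairs)  # [(14.0, 1), (36.0, 0), (20.5, 0)]
```

The second subject shows why censoring matters: the event does eventually happen, but all the analyst can record is that the survival time exceeds 36 months.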
Types of censoring
• Right censoring – true survival time is greater than what we observed
• Left censoring – true survival time is less than what we observed (less common)
• Interval censoring – subjects are not observed continuously and we only know the event happened between time A and time B (e.g., annual testing of partner of an HIV+ individual)

Three common reasons for right censoring
• Person does not experience the event before the study ends
• Person is lost to follow-up during the study period
• Person withdraws from the study because of death (if death is not the outcome of interest) or some other reason (e.g., adverse drug reaction)

What do the data look like?
[Figure: follow-up timelines for subjects 1 to 5 on a time axis from 0 to 20, with markers for end of study, drop out, and event occurred.]

Example: Survival of Patients With Renal Resistive Index ≥ 0.8
• 86 hypertensive patients with open or percutaneous repair of RVD
• RA resistive index (RI) defined as 1-EDV/PSV in the D-segment
• RI dichotomized: <0.8 or ≥0.8
• Outcome of interest is time to death (any cause)

Example: Survival of Patients With Renal Resistive Index ≥ 0.8

| Hosp UNITNO | Preop RI (<0.8 or ≥ 0.8) | Time to Death | Event Indicator |
|-------------|--------------------------|---------------|-----------------|
| 006-52-90   | 1                        | 41.0          | 1               |
| 013-39-46   | 1                        | 77.1          | 0               |
| 014-57-80   | 0                        | 112.3         | 0               |
| 022-39-81   | 0                        | 90.3          | 0               |
| 023-80-78   | 0                        | 88.3          | 0               |
| 026-66-88   | 0                        | 104.3         | 0               |
| 028-75-46   | 0                        | 57.8          | 0               |
| 030-10-56   | 0                        | 26.8          | 0               |
| 033-83-68   | 0                        | 38.1          | 1               |
| 034-30-42   | 1                        | 90.9          | 0               |

RI = 1 if ≥ 0.8, RI = 0 if < 0.8; δi = 1 if death, δi = 0 if censored.

Survival Distribution
• Distribution of times to event, called “survival times” even when the “event” is not “death”
• Let T = survival time (T ≥ 0) and t = specified value for T
• Survival times follow a continuous distribution with times ranging from zero to infinity
• Ordinary methods for estimating and comparing continuous distributions cannot be used with survival data due to the presence of censoring

Probability Density Function f(t)

$$f(t) = \lim_{\Delta t \to 0} \frac{1}{\Delta t}\, P[t \le T < t + \Delta t]$$

Difficult to estimate the density directly because of censoring; a histogram is not a direct estimate of f(t).

Cumulative Distribution
Function F(t)

$$F(t) = P[T \le t] = \int_0^t f(s)\,ds$$

Defined in the same way we would define any CDF.

Survival Function S(t)

$$S(t) = \Pr[T > t] = \int_t^\infty f(u)\,du = 1 - \int_0^t f(u)\,du = 1 - F(t)$$

• Monotone non-increasing function
• S(0) = 1
• S(+∞) = 0

Hazard Function λ(t)

$$\lambda(t) = \lim_{\Delta t \to 0} \frac{\text{Prob}[\text{event in } (t, t + \Delta t) \text{ given survived to } t]}{\Delta t} = \lim_{\Delta t \to 0} \frac{\Pr(t \le T < t + \Delta t \mid T \ge t)}{\Delta t}$$

Instantaneous death rate at time t, given alive at time t.

Hazard Function λ(t)
• So, you survived to time t: what is the probability that you experience the event within another increment of time Δt?
• Standardize this conditional probability to a per-unit-of-time rate.
• As the unit of time gets very small (i.e., goes to 0), this conditional probability becomes an instantaneous rate.
• Some simple features of λ(t):
  – λ(t) takes on values in the interval (0, ∞)
  – λ(t) could be instantaneously increasing, decreasing, or constant

Survival Distribution
• Any one of these four functions is enough to specify the survival distribution. There exists an equivalence relationship between them.
• Survival analysis techniques focus on the survival distribution S(t) and hazard rate λ(t)
  – When λ(t) is high, S(t) decreases faster.
  – When λ(t) is low, S(t) decreases slower.

How do censored cases affect survival estimation?
• Censored patients do not make the survival curve drop in steps
• Censored cases do reduce the number of patients left who are contributing to the survival curve
• Thus every event after that censored case will result in a “larger” step down than it would have been without the censored case
• The reduction in sample size due to the number of censored cases present will result in reduced reliability of the estimates of survival.
• That is, a larger number of censored cases makes the CI’s about survival estimates wider.
• End of the survival curve is most affected yet is of great interest

Survival Estimation: The Kaplan-Meier (K-M) Method
• Also known as the “Product-limit” Method
• Most popular method of estimating survival / time-to-event
• Good statistical properties: estimates converge to the true survival distribution as sample size grows
• Nonparametric: does not require knowledge of the underlying distribution

K-M Estimation: How it Works
• Order death/censoring times from smallest to largest
• Update the survival estimate at each distinct failure time

$$\hat{S}(t_{(j)}) = \prod_{i=1}^{j} \hat{P}[T > t_{(i)} \mid T \ge t_{(i)}] = \hat{S}(t_{(j-1)}) \times \hat{P}(T > t_{(j)} \mid T \ge t_{(j)})$$

K-M Estimation: RI Example

Product-Limit Survival Estimates

| Time (months) | Censored (*) | Survival | Survival SE | Number Failed | Number Left |
|---------------|--------------|----------|-------------|---------------|-------------|
| 0.000         |              | 1.0000   | 0           | 0             | 27          |
| 2.628         | *            | .        | .           | 0             | 26          |
| 7.392         |              | 0.9615   | 0.0377      | 1             | 25          |
| 13.470        |              | 0.9231   | 0.0523      | 2             | 24          |
| 14.587        | *            | .        | .           | 2             | 23          |
| 16.164        |              | 0.8829   | 0.0636      | 3             | 22          |
| 16.296        |              | 0.8428   | 0.0722      | 4             | 21          |

Ŝ(t) = (prop. alive after this death) × (surv. estimate at prior death). For the last row: 0.8428 = (21/22) × 0.8829.

[Figure: Plot of K-M survival estimate for the group with RI ≥ 0.8. Y-axis: Proportion Alive; x-axis: Months Post-surgery. The survival estimate is updated at each death time; open diamonds mark censoring times.]

Assumptions of Kaplan-Meier
• Non-informative censoring: the probability of being censored does not depend upon a patient’s prognosis for the event.
• Deaths of patients in a sample occur independently of each other
• Does not make assumptions about the distribution of survival times

Before Kaplan-Meier
• Life-table (“actuarial”) method of estimating time to death
• Break follow-up time into pre-defined intervals
• Number of subjects alive at beginning of interval
• Number of subjects dying during interval
• Estimate survival in similar fashion to Kaplan-Meier

Testing for difference between two survival curves: Log-rank test
• Are two survivor curves the same?
• Use the times of events: t1, t2, ...
(do not include censoring times)
• Treat each event and its “set of persons still at risk” (i.e., risk set) at each time tj as an independent table
• Make a 2×2 table at each tj (i.e., each distinct death time)

|         | Event | No Event   | Total |
|---------|-------|------------|-------|
| Group A | aj    | njA − aj   | njA   |
| Group B | cj    | njB − cj   | njB   |
| Total   | dj    | nj − dj    | nj    |

Log-rank test for comparing survivor curves
• At each event time tj, under the assumption of equal survival (i.e., SA(t) = SB(t)), the expected number of events in Group A out of the total events (dj = aj + cj) is proportional to the number at risk in Group A relative to the total at risk at time tj: E(aj) = dj x njA / nj
• Differences between aj and E(aj) represent evidence against the null hypothesis of equal survival in the two groups
• Use the Cochran-Mantel-Haenszel idea of pooling over events j to get the log-rank chi-squared statistic with one degree of freedom:

$$\chi^2 = \frac{\left[\sum_j \left(a_j - E(a_j)\right)\right]^2}{\sum_j \widehat{\mathrm{Var}}(a_j)} \sim \chi^2_1$$

Log-rank test for comparing survivor curves
• Idea summary:
  – Create a 2x2 table at each uncensored failure time
  – The construction of each 2x2 table is based on the corresponding risk set
  – Combine information from all the tables
• The null hypothesis is SA(t) = SB(t) for all times t (i.e., tests for differences across the entire distribution)

Resistive Index example
[Figure: K-M survival curves for RI < 0.8 vs. RI ≥ 0.8. Y-axis: Proportion Alive (0.0 to 1.0); x-axis: Months Post-surgery (0 to 120); numbers at risk shown along the axis: N=84, 76, 63, 48, 37, 30, 15, 8, 3. Log-rank Χ2 = 15.2, p-value < 0.0001.]

Other Tests to Compare Survival Curves You May Encounter
• Wilcoxon (a.k.a., Peto) Test
  – Weights analysis by the number of subjects at risk at each distinct death time
  – More sensitive than log-rank to early differences in survival curves; log-rank is more sensitive to late differences in curves
• Likelihood Ratio Test
  – Assumes exponential distribution
  – Optimal if survival is, in fact, exponentially distributed

From Stratification to Modeling
• Goal: extend
survival analysis to an approach that allows for multiple covariates of mixed forms (i.e., continuous, ordinal and nominal categorical)
• We have two options for our expansion
  – Model the survival function or time
  – Model the hazard function (between 0 and ∞)
• We will model the hazard function

What are Proportional Hazards?

$$\frac{\lambda(t \mid x_1)}{\lambda(t \mid x_2)} = C \quad\Longrightarrow\quad S(t \mid x_1) = S(t \mid x_2)^{C}$$

• The constant C does not depend on time
• The model is multiplicative

Cox Proportional Hazards Model
• D.R. Cox assumed proportionality was constant across time and proposed the following model:

$$\lambda(t; X) = \lambda_0(t)\,\exp\left(\sum_{i=1}^{p} \beta_i x_i\right)$$

  where λ0(t) is the baseline hazard and involves t but not X
• exp(Σ βi xi) is the exponential function; it involves the X’s but not t (as long as the X’s are time independent)

Cox Proportional Hazards Model
• The regression model for the hazard function as a function of p explanatory (X) variables is specified as follows:

$$\text{log hazard:}\quad \log \lambda(t; X) = \log \lambda_0(t) + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p$$

$$\text{hazard:}\quad \lambda(t; X) = \lambda_0(t)\, e^{\beta_1 X_1} e^{\beta_2 X_2} \cdots e^{\beta_p X_p}$$

Cox Proportional Hazards Model
• Interpretation of $e^{\beta_1}$
  – The relative hazard (i.e., hazard ratio) associated with a 1 unit change in X1 (i.e., X1+1 vs. X1), holding other Xs constant, independent of time
  – The relative (instantaneous) risk for X1+1 vs.
X1, holding other Xs constant, independent of time
• Other β’s have similar interpretations

Cox Proportional Hazards Model
• $e^{\beta_1}$ “multiplies” the baseline hazard λ0(t) by the same amount regardless of the time t; thus we have a “proportional hazards” model: the effect of any (fixed) X is the same at any time during follow-up
• β is the focus whereas λ0(t) is a nuisance parameter
• Cox (1972) showed how to estimate β without having to assume a model for λ0(t) (e.g., estimating λ0(t) with a step function)
• Let the number of steps get large: the partial likelihood for β depends on β, not λ0(t)

Partial likelihood
• The likelihood function used in Cox PH models is called a partial likelihood
• We use only the part of the likelihood function that contains the β’s
• It depends only on the ranks of the data and not the actual time values.

Partial likelihood
• Let the survival times (times to failure) be: t1 < t2 < ... < tk
• And let the “risk sets” corresponding to these times be: R1, R2, ..., Rk, where Ri = list of persons at risk just before ti
• The “partial likelihood” for β is

$$L(\beta) = \prod_{i=1}^{k} \frac{e^{\beta_1 X_{1i} + \beta_2 X_{2i} + \cdots + \beta_p X_{pi}}}{\sum_{j \in R_i} e^{\beta_1 X_{1j} + \beta_2 X_{2j} + \cdots + \beta_p X_{pj}}}$$

  (Assumes no ties in event times)
• To estimate β, find the values of the β’s that maximize L(β) above.

Partial likelihood
• Why does the partial likelihood make sense?

$$\frac{e^{\beta_1 X_{1i} + \cdots + \beta_p X_{pi}}}{\sum_{j \in R_i} e^{\beta_1 X_{1j} + \cdots + \beta_p X_{pj}}} = \frac{\lambda_0(t_i)\, e^{\beta_1 X_{1i} + \cdots + \beta_p X_{pi}}}{\sum_{j \in R_i} \lambda_0(t_i)\, e^{\beta_1 X_{1j} + \cdots + \beta_p X_{pj}}} = \frac{\text{hazard of failed person}}{\sum \text{hazards of ones who could have failed at } t_i}$$

• Choose β so that the one who failed at each time was most likely, relative to others who might have failed!
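The partial likelihood above can be evaluated directly for a tiny data set. The sketch below handles a single covariate and assumes no tied event times, as the slide does. The data values, the function name, and the crude grid search are all hypothetical choices made for illustration; real software maximizes the same quantity with Newton-type iterations.

```python
import math

def log_partial_likelihood(beta, times, events, x):
    """Cox log partial likelihood for one covariate, assuming no tied event times.

    times  : observed times t_i
    events : 1 if a failure was observed, 0 if censored
    x      : covariate value for each subject
    """
    total = 0.0
    for i, (t_i, d_i) in enumerate(zip(times, events)):
        if d_i == 0:
            continue  # censored subjects contribute only through risk sets
        # Risk set R_i: everyone still under observation just before t_i.
        risk_set = [j for j, t_j in enumerate(times) if t_j >= t_i]
        denominator = sum(math.exp(beta * x[j]) for j in risk_set)
        total += beta * x[i] - math.log(denominator)
    return total

# Hypothetical data: four subjects, one censored at time 3.
times = [2.0, 3.0, 5.0, 7.0]
events = [1, 0, 1, 1]
x = [1, 0, 0, 1]

# Crude grid search over beta in [-3, 3] for the maximizer.
grid = [b / 100 for b in range(-300, 301)]
beta_hat = max(grid, key=lambda b: log_partial_likelihood(b, times, events, x))
print(log_partial_likelihood(0.0, times, events, x), beta_hat)
```

Note that only the ordering of the times enters the calculation: replacing the times by 1, 2, 3, 4 leaves every risk set, and therefore the likelihood, unchanged.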
Some General Comments/Thoughts
• Similar to logistic regression, a simple function of the β has a particularly nice interpretation
• $e^{\hat\beta}$ can be interpreted as a relative risk (risk ratio) for a one unit change in the predictor
  – $\hat\beta = -0.60 \Rightarrow e^{-0.60} = 0.55$ (protective effect)
  – $\hat\beta = 0.60 \Rightarrow e^{0.60} = 1.82$ (increased risk)

Some General Comments/Thoughts
• Estimates of the βs are asymptotically normal (i.e., approximately normally distributed in large samples)
• Two important implications of asymptotic normality
  – We can use the likelihood ratio, score, and Wald tests to make inference about our data
  – Wald test: “thing/SE(thing)”
  – We can use the usual method to construct a 95% confidence interval: $e^{\hat\beta \pm 1.96\, SE(\hat\beta)}$

Resistive Index: Univariable PH Regression

Summary of the Number of Event and Censored Values

| Total | Event | Censored | Percent Censored |
|-------|-------|----------|------------------|
| 86    | 22    | 64       | 74.42            |

Testing Global Null Hypothesis: BETA=0

| Test             | Chi-Square | DF | Pr > ChiSq |
|------------------|------------|----|------------|
| Likelihood Ratio | 12.6328    | 1  | 0.0004     |
| Score            | 15.2341    | 1  | <.0001     |
| Wald             | 12.6195    | 1  | 0.0004     |

The “Score” test is equivalent to the log-rank test.

Analysis of Maximum Likelihood Estimates

| Parameter | DF | Parameter Estimate | Standard Error | Chi-Square | Pr > ChiSq | Hazard Ratio | 95% Hazard Ratio Confidence Limits |
|-----------|----|--------------------|----------------|------------|------------|--------------|------------------------------------|
| PRERIGEP8 | 1  | 1.56144            | 0.43954        | 12.6195    | 0.0004     | 4.766        | 2.014, 11.279                      |

Here the Parameter Estimate is $\hat\beta$, the Hazard Ratio is $e^{\hat\beta}$, and the confidence limits are $e^{\hat\beta \pm 1.96\, SE(\hat\beta)}$.

Resistive Index: Multivariable PH Regression

Analysis of Maximum Likelihood Estimates

| Parameter                   | DF | Parameter Estimate | Standard Error | Chi-Square | Pr > ChiSq | Hazard Ratio | 95% Hazard Ratio Confidence Limits |
|-----------------------------|----|--------------------|----------------|------------|------------|--------------|------------------------------------|
| RI >=0.8                    | 1  | 1.89910            | 0.48164        | 15.5471    | <.0001     | 6.680        | 2.60, 17.17                        |
| History of Coronary Disease | 1  | 1.65099            | 0.75558        | 4.7745     | 0.0289     | 5.212        | 1.19, 22.92                        |
| History of PVD              | 1  | 0.78338            | 0.44869        | 3.0483     | 0.0808     | 2.189        | 0.91, 5.27                         |
| Preop - Postop Num Meds     | 1  | -0.46046           | 0.20628        | 4.9830     | 0.0256     | 0.631        | 0.42, 0.94                         |
| Failed BP Response          | 1  | 1.43046            | 0.57694        | 6.1474     | 0.0132     | 4.181        | 1.35, 12.95                        |

• Each effect is estimated controlling for the other effects in the model
• Note: increase in hazard ratio for RI in the multivariable model
• Proportional hazards assumption should be verified
  – Examine survival plots by
covariate values
  – Plot –log(–log(S(t))) vs. time; the curves should be parallel if hazards are proportional

Resistive Index example
[Figure: K-M survival curves for RI < 0.8 vs. RI ≥ 0.8. Y-axis: Proportion Alive; x-axis: Months Post-surgery (0 to 120). Log-rank Χ2 = 15.2, p-value < 0.0001.]

Example of proportional hazards violation
[Figure: Unadjusted all-cause mortality survival curve, by annual hospital volume of Medicare breast cancer cases: United States, 1994–1996.]

Remedial Measures for Non-proportionality
• Stratified analysis
  – Uses stratification to control for the nonproportional factor
  – Removes the factor as a covariate (i.e., get no effect estimate)
• Add time dependent covariate
  – Time dependent covariates change value over time
  – Can use an indicator to fit a new effect after a change point (e.g., at 40 months in the previous plot)
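One way to read the change-point idea is as an extra term that switches on after the change point. The following is a sketch only: the binary factor $X$, the change point $t^*$ (40 months in the example above), and the coefficients $\beta_1$ and $\beta_2$ are notation assumed here for illustration, not taken from the slides.

```latex
\lambda(t; X) = \lambda_0(t)\,\exp\bigl(\beta_1 X + \beta_2\, X \cdot I(t > t^*)\bigr)

\mathrm{HR}(X = 1 \text{ vs. } X = 0) =
\begin{cases}
e^{\beta_1}, & t \le t^* \\
e^{\beta_1 + \beta_2}, & t > t^*
\end{cases}
```

Under this form the hazard ratio is constant within each period but is allowed to differ between them, and a test of $\beta_2 = 0$ asks whether the effect of the factor changes after $t^*$.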