
Classical Tests

Assumptions and related tests

What we will cover

• Assumptions behind the statistical tests we have examined already

• How to check normality in R

• How to check population variances

• How to carry out some of the classical statistical tests quickly in R

• T-tests

• Chi-squared Goodness of fit

• Analysis of variance

• What to do when the assumptions are broken?

• Introducing non-parametric tests.

Classical Tests

• There is absolutely no point in carrying out an analysis that is more complicated than it needs to be.

• Occam’s razor applies to the choice of statistical model just as strongly as to anything else: simplest is best.

• The so-called classical tests deal with some of the most frequently used kinds of analysis for single-sample and two-sample problems.

• In addition, we have tests for more than two samples.

• But before we apply the tests we have looked at previously, we need to check whether we have satisfied their assumptions.

Single Samples

• Suppose we have a single sample. The questions we might want to answer are these:

• What is the mean value?

• Is the mean value significantly different from current expectation or theory?

• What is the level of uncertainty associated with our estimate of the mean value?

• In order to be reasonably confident that our inferences are correct, we need to establish some facts about the distribution of the data:

• Are the values normally distributed or not?

• Are there outliers in the data?

• If data were collected over a period of time, is there evidence for serial correlation?

Be careful

• Non-normality, outliers and serial correlation can all invalidate inferences made by standard parametric tests like Student’s t test.

• REMEMBER: none of this is of any concern to the functions in R.

• We can run a t-test on any data that we choose, and as long as it is numeric R will return a result. But the result could be completely meaningless.

• Getting a p-value at the end may give the impression of precision and that we can make inferences, but don’t be fooled by this impression.

Data Inspection

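> das <- read.table("C:/das.txt", header = TRUE)
> par(mfrow = c(2, 2))        # 2 x 2 grid of plots, filled row by row
> plot(das$y)                 # index plot (top left)
> boxplot(das$y)              # boxplot (top right)
> hist(das$y, main = "")      # histogram (bottom left)
> y2 <- das$y                 # copy the data...
> y2[52] <- 21.75             # ...and insert a deliberate typing error
> plot(y2)                    # index plot of the corrupted copy (bottom right)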

Data Inspection

We inserted a mistaken entry in ‘y2’. The index plot (bottom right) is particularly valuable for drawing attention to mistakes in the dataframe. Suppose that the 52nd value had been entered as 21.75 instead of 2.175: the mistake stands out like a sore thumb in the plot (bottom right).
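The same kind of mistake can also be flagged in code rather than by eye. A minimal sketch: since das.txt is not reproduced here, `y_sim` is hypothetical simulated data (the seed, the 100 values and their mean and sd are all assumptions), with the same typing error inserted at position 52.

```r
# Hypothetical stand-in for das$y (das.txt itself is not available here)
set.seed(1)
y_sim <- rnorm(100, mean = 2.4, sd = 0.2)

# Insert the same kind of typing error as on the slide
y_sim[52] <- 21.75

# boxplot.stats()$out holds the values drawn beyond the whiskers;
# with real data this may also include a few genuine extreme values
suspects <- which(y_sim %in% boxplot.stats(y_sim)$out)
suspects

# The value furthest from the median is usually the prime suspect
worst <- which.max(abs(y_sim - median(y_sim)))
worst
```

Using the median rather than the mean as the reference point keeps the single wild value from dragging the centre towards itself.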

Data Inspection

> summary( das$y)

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.904 2.241 2.414 2.419 2.568 2.984

• This gives us six pieces of information about the vector das$y. The smallest value is 1.904 (labelled Min. for minimum) and the largest value is 2.984 (labelled Max. for maximum).

• There are two measures of central tendency: the median is 2.414 and the arithmetic mean is 2.419. The remaining two values are the first and third quartiles (2.241 and 2.568).

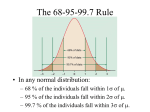

Normality

• Parametric statistical tests often assume the sample under test is from a population with a normal distribution.

• By making this assumption about the data, parametric tests are more powerful than their non-parametric counterparts: they can detect differences with smaller sample sizes, or detect smaller differences with the same sample size.

• When to check sample distribution

• It’s vital you ensure the assumptions of a parametric test are met before use.

• If you’re unsure of the underlying distribution of the sample, you should check it.

• Only when you know the sample under test comes from a population with a normal distribution (meaning the sample will also have a normal distribution) should you consider skipping the normality check.

• Many variables in nature approximately follow the normal distribution, for example biological variables such as blood pressure, serum cholesterol, height and weight. You could choose to skip the normality check in these cases, though it’s always wise to check the sample distribution.

Normality

• Tests for normality: we have actually already done this when we examined the residuals for the regression models which we have built!

• The simplest test of normality (and in many ways the best) is the ‘quantile–quantile plot’.

• This plots the ranked samples from our distribution against a similar number of ranked quantiles taken from a normal distribution.

• If our sample is normally distributed, then the points will lie close to a straight line. Departures from normality show up as various sorts of non-linearity (e.g. S shapes or banana shapes).

• The functions you need are qqnorm and qqline (quantile–quantile plot against a normal distribution):

quantile–quantile plot

> qqnorm( das$y )

> qqline( das$y, lty=2)

• This shows a slight S-shape, but there is no compelling evidence of non-normality (our distribution is somewhat skewed to the left; see the histogram above).

• It’s relatively easy to judge data at either extreme (points falling along the line or far from it), but there are no clear rules for borderline cases.

• Different people will have different opinions.

• Therefore, there are a number of formal tests that can be used to test normality.

• R comes with widely used tests that allow us to test normality.
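To make the description of the Q–Q plot concrete, here is a sketch that recomputes the coordinates `qqnorm()` uses and the line `qqline()` draws by default (through the first and third quartiles of the sample and of the standard normal). The sample `y` is hypothetical simulated data.

```r
set.seed(42)
y <- rnorm(25)                  # hypothetical sample
n <- length(y)

# Normal quantiles at n evenly spaced probabilities, matched to the ranks of y
theoretical <- qnorm(ppoints(n))[order(order(y))]

# qqnorm() returns the same coordinates; plot.it = FALSE skips drawing
qq <- qqnorm(y, plot.it = FALSE)
all.equal(qq$x, theoretical)

# qqline(): straight line through the quartiles of the sample and of N(0, 1)
probs     <- c(0.25, 0.75)
sample_q  <- quantile(y, probs, names = FALSE)
normal_q  <- qnorm(probs)
slope     <- diff(sample_q) / diff(normal_q)
intercept <- sample_q[1] - slope * normal_q[1]
c(intercept = intercept, slope = slope)
```

Because the line is fitted through the quartiles rather than by least squares, a few extreme points in the tails do not pull it off course, which is what makes tail departures easy to see.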

Test for normality

• One test we might use is the Shapiro-Wilk normality test (shapiro.test in R) for testing whether the data in a vector come from a normal distribution.

• Let’s generate some data that are lognormally distributed:

> dasX <-exp(rnorm(30))

• What does this look like?

> hist( dasX )

• Does the plot look normal?

> qqnorm( dasX )

> qqline( dasX, lty=2)

Shapiro-Wilk Test

• Let’s try the Shapiro-Wilk normality test. Remember that we expect our non-normal data to fail the normality test:

> shapiro.test( dasX )

Shapiro-Wilk normality test

data: dasX

W = 0.7485, p-value = 8.614e-06

• Hang on, this is a very low p-value. Is this distribution normal or not? The p-value is the probability of getting a W statistic this small or smaller if the sample really came from a normal distribution. The lower this value, the stronger the evidence against normality. Statisticians typically use a value of 0.05 as a cutoff, so when the p-value is lower than 0.05 you can conclude that the sample deviates from normality.
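The p-value can also be pulled out of the test result and compared with the cutoff in code. Because the logarithm of lognormal data is normal, log-transforming `dasX` is one common way to bring such data back towards normality. This sketch fixes a seed (an assumption) so it is reproducible; your numbers will differ from the slide.

```r
set.seed(123)
z    <- rnorm(30)
dasX <- exp(z)                       # lognormally distributed, as above

res_raw <- shapiro.test(dasX)
res_log <- shapiro.test(log(dasX))   # log(dasX) recovers the normal draws z

alpha <- 0.05
# TRUE means "reject normality at the 5% level"
c(raw = res_raw$p.value < alpha, logged = res_log$p.value < alpha)
```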

Shapiro-Wilk Test

• Do you want to check the test with a ‘real’ normal distribution? Try this (you won’t get exactly the same results as shown here, because the data are random):

> checkShapiroData <- rnorm(n=10)

> shapiro.test( checkShapiroData)

Shapiro-Wilk normality test

data: checkShapiroData

W = 0.9594, p-value = 0.7787

> checkShapiroData <- rnorm(n=50)

> checkShapiroData <- rnorm(n=100)

> checkShapiroData <- rnorm(n=250)

> shapiro.test( checkShapiroData)

Shapiro-Wilk normality test

data: checkShapiroData

W = 0.9941, p-value = 0.4408
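Note that the assignments above overwrite `checkShapiroData`, so only the n = 250 sample is actually tested. A compact sketch that tests a fresh normal sample at each size (the seed is an assumption; p-values will vary from run to run):

```r
set.seed(2024)
sizes <- c(10, 50, 100, 250)

# Draw a new normal sample of each size and keep only the p-value
p_values <- sapply(sizes, function(n) shapiro.test(rnorm(n))$p.value)
names(p_values) <- sizes
round(p_values, 4)
```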

Interpretation

• The null hypothesis of the Shapiro-Wilk test is that the population is normally distributed. Thus if the p-value is less than the chosen alpha level, the null hypothesis is rejected and there is evidence that the data tested are not from a normally distributed population. In other words, the data are not normal.

• Conversely, if the p-value is greater than the chosen alpha level, then the null hypothesis that the data came from a normally distributed population cannot be rejected. E.g. for an alpha level of 0.05, a data set with a p-value of 0.32 does not result in rejection of the hypothesis that the data are from a normally distributed population.

• However, the power of the test grows with sample size, so in large samples even small departures from normality may come out as statistically significant.

• Thus a Q–Q plot should be used alongside the test for verification.

Classic two-sample tests

• The classical tests for two samples include:

• comparing two sample means with normal errors (Student’s t test…, t.test)

• correlating two variables (Pearson’s correlation…, cor.test)

• testing for independence of two variables in a contingency table (chi-squared, chisq.test, …).

• and (which we haven’t covered…)

• comparing two means with non-normal errors (Wilcoxon’s rank-sum test, wilcox.test)

• comparing two proportions (the binomial test, prop.test)

• We will cover some of these additional tests when we look at nonparametric tests.

• However, we will now look at the Fisher F test…

Fisher F-test

• If you remember anything about the t-test, you know that there are two forms of the independent-groups test, depending on whether the population variances are assumed to be equal or not.

• We will stop assuming and start testing!

• Comparing two variances

• Before we can carry out a test to compare two sample means, we need to test whether the sample variances are significantly different.

• The test could not be simpler!

• It is called Fisher’s F test after the famous statistician and geneticist R.A. Fisher.

• To compare two variances, all you do is divide the larger variance by the smaller variance. Obviously, if the variances are the same, the ratio will be 1.

Fisher F-test

• In order to be significantly different, the ratio will need to be significantly bigger than 1 (because the larger variance goes on top, in the numerator).

• How will we tell a significant value of the variance ratio from a non-significant one? The answer, as always, is to look up the critical value of the variance ratio or to get the p-value.

> f.test.data <- read.table( "C:/f.test.data.txt", header=T)

> var( f.test.data$gardenB )

[1] 1.333333

> var( f.test.data$gardenC )

[1] 14.22222

> F.ratio<-var(f.test.data$gardenC)/var(f.test.data$gardenB)

> F.ratio

[1] 10.66667

• There is a way to work out the two-tailed p-value for this F value using R (each garden has 10 observations, giving 9 and 9 degrees of freedom):

> 2*(1-pf(F.ratio,9,9))

[1] 0.001624199
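The same calculation can be wrapped in a small helper that puts the larger variance on top and doubles the upper-tail probability, then checked against `var.test()`. This is a sketch: `f_test_by_hand` is a hypothetical helper, `gardenB` reuses the values printed from t.test.data later in this section, and `other` is made-up data.

```r
f_test_by_hand <- function(x, y) {
  vx <- var(x)
  vy <- var(y)
  # Larger variance in the numerator, as described above
  if (vx >= vy) {
    ratio <- vx / vy; df1 <- length(x) - 1; df2 <- length(y) - 1
  } else {
    ratio <- vy / vx; df1 <- length(y) - 1; df2 <- length(x) - 1
  }
  # Two-tailed p-value: twice the upper-tail probability
  p <- 2 * (1 - pf(ratio, df1, df2))
  c(ratio = ratio, df1 = df1, df2 = df2, p.value = p)
}

gardenB <- c(5, 5, 6, 7, 4, 4, 3, 5, 6, 5)    # from t.test.data
other   <- c(2, 9, 4, 12, 1, 7, 3, 10, 5, 8)  # hypothetical values

by_hand <- f_test_by_hand(other, gardenB)
by_hand
var.test(other, gardenB)$p.value   # should match by_hand["p.value"]
```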

The fast way

• However, there is an even faster way!

> var.test( f.test.data$gardenC, f.test.data$gardenB)

F test to compare two variances

data: f.test.data$gardenC and f.test.data$gardenB

F = 10.6667, num df = 9, denom df = 9, p-value = 0.001624

alternative hypothesis: true ratio of variances is not equal to 1

95 percent confidence interval:

2.649449 42.943938

sample estimates:
ratio of variances
          10.66667

The variance in garden C is more than 10 times as big as the variance in garden B.

We have calculated the p-value here. The null hypothesis was that the two variances are equal (a ratio of 1). Since p = 0.0016 is well below 0.05, we reject it and accept the alternative hypothesis that the two variances are significantly different.

var.test then t.test

• Okay, so we can check the variances; now let’s do a t-test.

> t.test.data<-read.table("c:/t.test.data.txt",header=T)

> str( t.test.data )

'data.frame': 10 obs. of 2 variables:

$ gardenA: int 3 4 4 3 2 3 1 3 5 2

$ gardenB: int 5 5 6 7 4 4 3 5 6 5

> var.test( t.test.data$gardenA, t.test.data$gardenB)

…

> t.test( t.test.data$gardenA, t.test.data$gardenB, var.equal=T)

Two Sample t-test

data: t.test.data$gardenA and t.test.data$gardenB

t = -3.873, df = 18, p-value = 0.001115

alternative hypothesis: true difference in means is not equal to 0

95 percent confidence interval:

-3.0849115 -0.9150885

sample estimates:

mean of x mean of y
        3         5
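For intuition, the pooled-variance t statistic that `var.equal=T` requests can be reproduced by hand from the gardenA and gardenB values listed above. A minimal sketch; it should agree with the t = -3.873, df = 18 and p = 0.001115 printed by t.test().

```r
gardenA <- c(3, 4, 4, 3, 2, 3, 1, 3, 5, 2)
gardenB <- c(5, 5, 6, 7, 4, 4, 3, 5, 6, 5)
nA <- length(gardenA)
nB <- length(gardenB)

# Pooled variance: sample variances weighted by their degrees of freedom
s2_pooled <- ((nA - 1) * var(gardenA) + (nB - 1) * var(gardenB)) / (nA + nB - 2)
se_diff   <- sqrt(s2_pooled * (1 / nA + 1 / nB))

t_by_hand <- (mean(gardenA) - mean(gardenB)) / se_diff
dof       <- nA + nB - 2
p_by_hand <- 2 * pt(-abs(t_by_hand), dof)   # two-tailed

c(t = t_by_hand, df = dof, p = p_by_hand)
```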

Chi-squared

• As you may have guessed by now, there is also a quick way to calculate a chi-squared goodness of fit. The real work here is actually in creating the probability data we pass to the function.

• What is the chi-squared goodness of fit test? Let’s recap…

• It is a measure of the discrepancy between the observed results and some hypothetically expected results.

• How much deviation from expected values will you accept before you start to question the accuracy of your underlying assumptions or the reliability of your sampling (testing) technique?

Chi-square Formula

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

where O = the observed value

and E = the expected value

Steps…

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

1. For each class of observations, calculate the deviation of the observed value from the expected value.

2. The deviation is then squared and divided by the expected value.

3. Then all of the values are summed.
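The three steps translate directly into vectorised R. A sketch using the pea counts and the 9:3:3:1 ratio from the example that follows; `chisq.test()` should report the same statistic.

```r
observed <- c(315, 108, 101, 32)
ratio    <- c(9, 3, 3, 1)
expected <- sum(observed) * ratio / sum(ratio)   # 312.75 104.25 104.25 34.75

deviation    <- observed - expected         # step 1: O - E
contribution <- deviation^2 / expected      # step 2: (O - E)^2 / E
chi_sq       <- sum(contribution)           # step 3: sum over classes
chi_sq                                      # about 0.470

# Built-in equivalent
chisq.test(observed, p = ratio / sum(ratio))$statistic
```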

Example

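| | Round yellow | Round green | Wrinkled yellow | Wrinkled green | Total |
|---|---|---|---|---|---|
| Observed | 315 | 108 | 101 | 32 | 556 |
| Expected (9:3:3:1, rounded) | 313 | 104 | 104 | 35 | 556 |
| Deviation (O − E) | 2 | 4 | −3 | −3 | 0 |
| Deviation squared (O − E)² | 4 | 16 | 9 | 9 | 38 |
| (O − E)² / E | 0.013 | 0.154 | 0.086 | 0.257 | χ² = 0.51 |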

χ² = 0.51… What does this mean?

• Chi-square distribution (tables)

• Critical value

• α = 0.05

Degrees of Freedom

• Remember the “minus one” rule

• DF = number of categories − 1 = 4 − 1 = 3


Find Critical Value

• α = 0.05

• DF = 3

• χ² = 0.51

• Reject H0?
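Instead of a printed chi-squared table, R’s `qchisq()` gives the critical value and `pchisq()` the p-value. A sketch using the numbers above:

```r
alpha  <- 0.05
dof    <- 3
chi_sq <- 0.51

critical <- qchisq(1 - alpha, dof)                   # about 7.81
p_value  <- pchisq(chi_sq, dof, lower.tail = FALSE)  # upper-tail probability

chi_sq > critical   # FALSE: the statistic is well below the critical value
```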

Your Turn… using R

• Random sample of 150 voters. Each voter favours candidate 1, 2 or 3.

• Results of poll: 61, 53 and 36 voters favour candidates 1, 2 and 3 respectively.

• Test

• H0: p1 = p2 = p3 = 1/3 (no preference)

• Ha: At least one of the proportions exceeds 1/3 (a preference exists)

> chisq.test(c(61,53,36), p=c(1/3,1/3,1/3))

Chi-squared test for given probabilities

data: c(61, 53, 36)

X-squared = 6.52, df = 2, p-value = 0.03839

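Result: p = 0.038 < 0.05, so we reject H0 at the 5% level; there is evidence that voters prefer at least one candidate.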
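The X-squared and p-value above can be reproduced by hand. Under H0 each candidate expects 150/3 = 50 votes; a minimal sketch:

```r
votes    <- c(61, 53, 36)
expected <- rep(sum(votes) / 3, 3)     # 50 votes each under H0

chi_sq  <- sum((votes - expected)^2 / expected)   # (121 + 9 + 196) / 50
dof     <- length(votes) - 1
p_value <- pchisq(chi_sq, dof, lower.tail = FALSE)

c(X_squared = chi_sq, df = dof, p = p_value)      # 6.52, 2, about 0.0384
```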

Another example

> chisq.test(c(315,108,101,32), p=c(9/16,3/16,3/16,1/16))

Chi-squared test for given probabilities

data: c(315, 108, 101, 32)

X-squared = 0.47, df = 3, p-value = 0.9254

• This example is interesting because you can think of it as checking a predicted distribution of values: a theory states what the expected values should be, and if the observed values are close to them we get a very high p-value, which in this case is good news. (Strictly, a high p-value means the data are consistent with the theory, not that the theory is proven.)

| | Round yellow | Round green | Wrinkled yellow | Wrinkled green | Total |
|---|---|---|---|---|---|
| Observed | 315 | 108 | 101 | 32 | 556 |
| Expected (9:3:3:1) | 312.75 | 104.25 | 104.25 | 34.75 | 556 |
| Deviation (O − E) | 2.25 | 3.75 | −3.25 | −2.75 | |
| Deviation squared (O − E)² | 5.0625 | 14.0625 | 10.5625 | 7.5625 | |
| (O − E)² / E | 0.01618705 | 0.13489209 | 0.101318945 | 0.217625899 | 0.470023981 |

Assumptions

• Simple random sample – The sample data are a random sample from a fixed distribution or population, where every collection of members of the population of the given sample size has an equal probability of selection.

• Variants of the test have been developed for complex samples, such as where the data are weighted. Other forms, such as purposive sampling, can also be used.

• Sample size (whole table) – A sufficiently large sample size is assumed.

• If a chi-squared test is conducted on a smaller sample, it will yield inaccurate inferences. A researcher who uses the chi-squared test on small samples might end up committing a Type II error.

• Expected cell count – Adequate expected cell counts are needed. Some authors require 5 or more, others 10 or more. A common rule is 5 or more in all cells of a 2-by-2 table, and 5 or more in 80% of cells in larger tables, with no cell having a zero expected count. When this assumption is not met in a 2-by-2 table, Yates’s correction for continuity is often applied.

• Independence – The observations are always assumed to be independent of each other. This means the chi-squared test cannot be used to test correlated data (such as matched pairs or panel data).
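In R, the expected counts are stored in the object returned by `chisq.test()`, so the expected-cell-count rule can be checked directly, and R warns when any expected count is below 5. A sketch: the tiny vector `small` is hypothetical, and `simulate.p.value = TRUE` (a Monte Carlo p-value) is shown as one alternative to the large-sample approximation, not the only remedy.

```r
res <- chisq.test(c(315, 108, 101, 32), p = c(9, 3, 3, 1) / 16)
res$expected                 # 312.75 104.25 104.25 34.75
all(res$expected >= 5)       # rule-of-thumb check

small <- c(3, 1, 1)          # hypothetical tiny sample: expected counts 5/3
msg <- tryCatch(chisq.test(small), warning = function(w) conditionMessage(w))
msg                          # "Chi-squared approximation may be incorrect"

set.seed(1)
chisq.test(small, simulate.p.value = TRUE, B = 2000)
```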

Assumptions: t-test

• For each type of t-test, the assumptions follow from the population being studied and from the way in which the data are sampled. For example, in the t-test comparing the means of two independent samples, the following assumptions should be met:

• Each of the two populations being compared should follow a normal distribution. This can be tested using a normality test such as the Shapiro–Wilk test, or assessed graphically using a normal quantile plot (or both!).

• If using Student’s original definition of the t-test, the two populations being compared should have the same variance (what do we use to check this?!). If the sample sizes in the two groups being compared are equal, Student’s original t-test is highly robust to unequal variances. Welch’s t-test is insensitive to equality of the variances regardless of whether the sample sizes are similar.

• The data used to carry out the test should be sampled independently from the two populations being compared. This is in general not testable from the data, but if the data are known to be dependently sampled (e.g. sampled in clusters), then the classical t-tests discussed here may give misleading results.
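Welch’s version is what R’s `t.test()` runs by default (`var.equal = FALSE`). A sketch of its statistic and its fractional Welch–Satterthwaite degrees of freedom, computed by hand; gardenA is from t.test.data above and `other` is hypothetical data with a larger spread and a different sample size.

```r
gardenA <- c(3, 4, 4, 3, 2, 3, 1, 3, 5, 2)              # from t.test.data
other   <- c(2, 9, 4, 12, 1, 7, 3, 10, 5, 8, 6, 11)     # hypothetical

# Squared standard errors of each mean (no pooling of variances)
seA2 <- var(gardenA) / length(gardenA)
seO2 <- var(other) / length(other)

welch_t  <- (mean(gardenA) - mean(other)) / sqrt(seA2 + seO2)
# Welch-Satterthwaite approximation: usually not a whole number
welch_df <- (seA2 + seO2)^2 /
  (seA2^2 / (length(gardenA) - 1) + seO2^2 / (length(other) - 1))

res <- t.test(gardenA, other)   # Welch test (R's default)
c(by_hand_t = welch_t, by_hand_df = welch_df)
res$statistic
res$parameter
```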

ANOVA

> data( InsectSprays )

> str( InsectSprays )

'data.frame': 72 obs. of 2 variables:

$ count: num 10 7 20 14 14 12 10 23 17 20 ...

$ spray: Factor w/ 6 levels "A","B","C","D",..: 1 1 1 1 1 1 1 1 1 1 ...

• 6 different insect sprays (1 independent variable with 6 levels) were tested to see if there was a difference in the number of insects found in the field after each spraying (dependent variable).

> sprayData <- InsectSprays

> boxplot( sprayData$count ~ sprayData$spray)

ANOVA

By default, equal variances (i.e. homogeneity of variance) are not assumed, i.e. Welch’s correction is applied. This explains why the denominator df, which would normally be k × (n − 1) = 6 × 11 = 66 here, is not a whole number in the output.

> oneway.test( sprayData$count ~ sprayData$spray)

One-way analysis of means (not assuming equal variances)

data: sprayData$count and sprayData$spray

F = 36.0654, num df = 5.000, denom df = 30.043, p-value = 7.999e-12

aov( )

• This function provides more information:

> aov.out <- aov(count ~ spray, data = sprayData)

> summary( aov.out )

            Df Sum Sq Mean Sq F value Pr(>F)
spray        5   2669   533.8    34.7 <2e-16 ***
Residuals   66   1015    15.4
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

• Df=(5,66), F-value=34.7… there is a lot of information under the hood too…

> str( aov.out )

> hist( aov.out$residuals )
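The residuals stored in aov.out are what we check for normality, and the equal-variance assumption can be checked across the six sprays. A sketch using Bartlett’s test (`bartlett.test()` in base R), which is one option among several and is itself sensitive to non-normality:

```r
aov.out <- aov(count ~ spray, data = InsectSprays)
res <- residuals(aov.out)

# Normality of residuals: Q-Q plot plus a formal test
qqnorm(res)
qqline(res, lty = 2)
sw <- shapiro.test(res)
sw

# Equal variances across the six spray groups (H0: all variances equal)
bt <- bartlett.test(count ~ spray, data = InsectSprays)
bt
```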

Post-hoc test

• Can you remember what to look for?
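After a significant ANOVA, a post-hoc test tells us which pairs of groups differ. Assuming Tukey’s honest significant difference is the test intended here (the slide does not name one), base R provides `TukeyHSD()`:

```r
aov.out <- aov(count ~ spray, data = InsectSprays)
tukey <- TukeyHSD(aov.out, conf.level = 0.95)

# One row per pair of sprays: 6 levels give 15 comparisons
comparisons <- tukey$spray
# Keep the pairs whose family-wise adjusted p-value is below 0.05
comparisons[comparisons[, "p adj"] < 0.05, ]
```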

Other Assumptions

• We know about normality and checking population variances.

• Actually, there may be other problems:

• The sample values should be independent of each other. For example, time-series data are often serially correlated.

• Remember that the mean and sample variance are sensitive to outliers (i.e. not resistant).

• Values may not be identically distributed because of the presence of outliers.

• Outliers are anomalous values in the data.

• Outliers tend to inflate the estimate of the sample variance, thus decreasing the calculated t statistic and lowering the chance of rejecting the null hypothesis.

Other assumptions

• Outliers may be due to recording errors, which may be correctable, or they may be due to the sample not being entirely from the same population.

• Apparent outliers may also be due to the values being from the same, but non-normal, population.

• The boxplot and normal probability plot (normal Q-Q plot) may suggest the presence of outliers in the data.

• An implicit factor may also separate the data into different distributions, each of which may be normal, but which together produce a non-normal composite distribution.

• For example, measurements for females may follow a normal distribution, and measurements for males may also follow a normal distribution, but the measurements for the entire population of both males and females may not follow a normal distribution.

• Separating the data into different subsamples based on the value of the implicit factor may reveal that, conditional on the value of the implicit factor (e.g., gender), the data are sampled from a normal distribution, even if it is a different distribution for each value of the implicit factor.

Effect of sample size on normality tests

• If the sample size is small, it may be difficult to detect assumption violations: with small samples, non-normality is hard to detect even when it is present.

• Even if none of the test’s own assumptions are violated, a normality test on a small sample may not have sufficient power to detect a real departure from normality.

• For very large sample sizes, a hypothesis test may become so powerful that it detects departures from normality that are statistically significant but not of practical importance.

• With large sample sizes, small departures from normality will not compromise some statistical tests that assume normality (such as the t test, but not the F test for variances).

• If a normality test on a very large sample rejects normality, but the boxplot, histogram and normal probability plot do not point to any clear signs of non-normality (such as outliers or skewness), then the normality test may be detecting a departure from normality that has no practical importance.
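A small simulation makes the sample-size effect visible. This sketch assumes data drawn from a t distribution with 5 degrees of freedom (a mildly heavy-tailed, non-normal shape) and records how often `shapiro.test()` rejects normality at the 5% level for n = 20 and n = 2000; the seed and the 200 repetitions are arbitrary choices.

```r
set.seed(99)
reps <- 200

# Share of simulated samples in which shapiro.test rejects normality
reject_rate <- function(n, alpha = 0.05) {
  mean(replicate(reps, shapiro.test(rt(n, df = 5))$p.value < alpha))
}

rate_small <- reject_rate(20)
rate_large <- reject_rate(2000)
c(n20 = rate_small, n2000 = rate_large)
```

The same underlying distribution is rejected far more often at n = 2000, which is exactly why a significant result on a large sample should be weighed against the plots.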

Independence

• Independence is important to check if you are using true time-series data. We haven’t covered this. What is the big deal with ‘time series data’ as opposed to other types of data?

• Remember we did look at moving averages etc.

• The key point about time series data is that the ordering of the time points matters.

• For many sets of data (for example, the heights of a set of school children) it does not really matter in which order the data are obtained or listed. One order is as good as another.

• For time series data, the ordering is crucial. The ordering of the data imposes a certain structure on the data.

• Think about it – why do moving averages work?

• In terms of what we have covered, this is more of a problem for regression: the residuals should be independent.

Autocorrelation

• Autocorrelation, also known as serial correlation, is the cross-correlation of a signal with itself. Informally, it is the similarity between observations as a function of the time lag between them.

…randomness is ascertained by computing autocorrelations for data values at varying time lags. If random, such autocorrelations should be near zero for any and all time-lag separations. If non-random, then one or more of the autocorrelations will be significantly non-zero.
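In R, `acf()` computes these autocorrelations, and its plot marks approximate 95% bounds of about ±1.96/√n for a random series. A sketch that checks the lag-1 value against its definition, using a hypothetical autocorrelated series simulated with `arima.sim()`; `Box.test()` (Ljung–Box) is shown as one formal test of “no autocorrelation up to a given lag”.

```r
set.seed(7)
n <- 200
# Hypothetical AR(1) series: each value depends on the previous one
x <- as.numeric(arima.sim(model = list(ar = 0.6), n = n))

# Lag-1 autocorrelation from the definition
xc <- x - mean(x)
r1 <- sum(xc[-1] * xc[-n]) / sum(xc^2)

# acf() returns lags 0, 1, 2, ...; element 2 is lag 1
r1_acf <- acf(x, lag.max = 10, plot = FALSE)$acf[2]

# Approximate 95% bounds for a purely random series
bound <- qnorm(0.975) / sqrt(n)
c(r1 = r1, acf_lag1 = r1_acf, bound = bound)

# Ljung-Box test; H0: no autocorrelation up to lag 10
Box.test(x, lag = 10, type = "Ljung-Box")
```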

What’s next?

• What if our data doesn’t satisfy the normality constraint?

• Alternative tests exist:

• Non-Parametric tests

• You may be justified in assuming the sampling distribution (of the mean) is normal with a large enough sample size.

• Some tests may be more ‘robust’ to breaking the normality constraint.

• Beyond what is written in a standard textbook, there are extensive studies and simulations that look at what happens with data that don’t quite fit the assumptions.

• …but it’s best to be conservative. And some non-parametric tests are more effective if the normality constraint is broken.

• Bootstrapping, jackknife and beyond.