8:1 MLR Assumptions. - UC Davis Plant Sciences

Survey

Document related concepts

Transcript

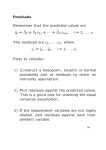

Lecture outline AGR 206 769834562 Revised: 5/6/2017

Chapter 8. Regression Diagnostics

8:1 MLR Assumptions.

1. Normality of errors.
2. Homogeneity of variance of errors.
3. Independence of errors.
4. Linearity.

Although they are not really assumptions, two additional problem areas are listed here because they are usually diagnosed and dealt with at the same stage as the assumptions.

5. Outliers.
6. Collinearity.

We present this topic using the Spartina dataset as an example. Only five X variables are considered in the example: salinity, pH, K, Na, and Zn. This example is presented in detail in the RP book, pp. 377-396.

In MLR most of the diagnostics are based on the residuals. Although we have many predictors, we still have only one residual on the Y dimension. Therefore, all the methods used for simple linear regression apply to MLR without change, except that now there are more X’s against which to plot the residuals, and we need to consider the concept of partial regression plots. Partial regression plots show the relationship between Y and each X after correcting for the effects of all other X’s.

Most of the assumptions that need to be checked refer to the errors. The first step is to obtain the studentized residuals and inspect them. Residuals are plotted against the predicted Y and each predictor to look for patterns that might suggest non-linearity or outliers.

8:1.1 Obtaining diagnostic statistics.

Open the Spartina JMP table and regress biomass on salinity, pH, K, Na, and Zn using the Fit Model platform (Figure 1). Then save the studentized residuals, hats, and Cook’s D using the Save Columns command in the drop-down menu from the red triangle in the upper left corner of the output window. Using the same drop-down menu, select Row Diagnostics, and then Durbin-Watson test and PRESS. The first output window shows the model with all variables (Figure 2).
The only estimated regression coefficient that is significantly different from zero after all other variables are considered is the one for pH. The rest of the diagnostic statistics can be obtained from this window. Right-click on the Parameter Estimates to add the VIF column.

8:1.2 Normality.

Normality is checked in exactly the same manner as for simple linear regression. Save the residuals, then use the Analyze, Distribution platform, select Fit Distribution Normal and, finally, select Goodness of Fit. A significant value of the Shapiro-Wilk statistic indicates that the residuals are not normally distributed. In the Spartina example normality is rejected, because the distribution shows a concentration of values on the left side and a few very large values. This will require a remedial measure such as a transformation. Before deciding on a remedial action, the rest of the diagnostics should be checked. Frequently, a transformation or other remedial measure will correct more than one problem at once.

8:1.3 Homogeneity of variance.

Homogeneity of variance should be assessed visually by plotting residuals against the predicted values and each of the predictors. This can be done in one step by using the Fit Y by X platform as shown in Figure 4. No obvious trend in the spread of the errors is seen, except that there are five observations that have very high biomass and pH. One of these observations has a very low residual. These observations will be flagged when the hats and the studentized residuals are considered. Observations in the scatter plot can be easily identified by moving the cursor over them. When the cursor is over an observation, its row number is displayed.
It is also possible to label the observations by selecting Row Label and Column Label, or one can simply click on the observation on the plot and it will become selected in the data table. Several observations can be selected at once by Shift-clicking.

Because the data set has predetermined groups, homogeneity of variance among groups can be studied by plotting the standard deviation vs. the average biomass for each group. Homogeneity of variance among groups can also be tested with the Fit Y by X platform. In order to build the plot of standard deviation against average biomass for each of the 9 location by type groups, use the Table Summary command (Figure 5). Select typ and loc as the grouping variables and in the lower box enter Mean(bmss) and Std Dev(bmss). A new table is created that depends on the original table and contains the summary by groups. While the new summary table is the active window, select Fit Y by X, then plot and regress standard deviation vs. average biomass (Figure 6). The figure shows a clear increase in variance as biomass increases, typical of experimental data. This lack of homogeneity of variance is corroborated by the tests performed on the location and type groups (Figure 7). The first choice of remedial action is to apply a transformation to the response variable. A logarithmic transformation is suggested by the shape of the distribution and by the increasing variance with increasing Yhat.

The Levene test for homogeneity of variance is based on the F value obtained for the model or groups when the variable Zij=|Yij-Yhat.j| is analyzed by ANOVA with group as the effect. Here Yhat.j is the mean of group j, so Zij is the absolute value of the deviation of each observation from the mean of its group.

8:1.4 Independence of errors.
Independence of errors has to be tested if there is information on the spatial or temporal order of samples. The Durbin-Watson test requested earlier reports the first-order autocorrelation among errors, assuming that samples are ordered by time and that the intervals between observations are constant. The first-order autocorrelation is the correlation between the error of row i and the error of row i-1. When errors are independent, this correlation should not differ from zero. Using the red triangle to the left of the Durbin-Watson title, one can obtain the probability level for the correlation. If the Durbin-Watson statistic is significant, the errors are correlated and remedial action is required. The first action is to try to identify a new X variable that is causing the autocorrelation and include it in the model. Alternatively, time series analysis should be performed. Usually, the consequence of ignoring a correlation among errors is that the apparent precision of the model is inflated, giving the impression that the model fits better than it actually does.

8:1.5 Linearity.

In order to assess linearity, JMP automatically performs a Lack of Fit test if there are replicate observations for the same values of the X’s. Replicates are usually not available for multiple linear regression of observational data. When replicates are not available, in simple linear regression the relationship between Y and X can be explored visually by plotting Y vs. X and errors vs. X. MLR requires that we consider the partial regression plots for the same purpose. The term partial refers to the fact that the plots show the relationship between Y and each X after the effects of all other X’s have been removed. Analogously, partial correlation is the linear correlation between two variables controlling (statistically) for the effects of all other variables.
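As an illustration of what "after the effects of all other X’s have been removed" means numerically, the following is a minimal sketch in plain Python. The data are made up (they are not the Spartina data), and the helper names `solve` and `ols` are hypothetical, introduced only for this example. It regresses the response and one predictor on the remaining predictor, then regresses one set of residuals on the other; the resulting slope should match the coefficient of that predictor in the full model.

```python
# Sketch of a partial regression computation in plain Python.
# The data and helper names are illustrative assumptions, not from the lecture.

def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def ols(y, columns):
    """Least-squares fit of y on an intercept plus the given predictor columns.
    Returns (coefficients, residuals); coefficients[0] is the intercept."""
    X = [[1.0] + [col[i] for col in columns] for i in range(len(y))]
    k = len(X[0])
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(k)] for a in range(k)]
    Xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(k)]
    beta = solve(XtX, Xty)
    resid = [yi - sum(bj * xj for bj, xj in zip(beta, row)) for row, yi in zip(X, y)]
    return beta, resid

# Hypothetical toy data: a response and two predictors.
y = [3.1, 4.9, 7.2, 8.8, 11.1, 12.7, 15.2, 16.9]
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 7.0]

full_beta, _ = ols(y, [x1, x2])      # full model: y on x1 and x2
_, e_y = ols(y, [x2])                # y with the effect of x2 removed
_, e_x1 = ols(x1, [x2])              # x1 with the effect of x2 removed
partial_beta, _ = ols(e_y, [e_x1])   # residuals regressed on residuals

# The two slopes for x1 should agree up to rounding error.
print(round(full_beta[1], 6), round(partial_beta[1], 6))
```

Plotting `e_y` against `e_x1` would give the partial regression plot for `x1`; the normal-equations solver is used here only to keep the sketch free of external libraries.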
The partial regression plot between any X (say pH) and bmss is obtained as follows:

1. Regress bmss on all variables except pH. Save the residuals and call them eY|other X’s.
2. Regress pH on all other X’s (sal, Na, K, Zn). Save the residuals and call them epH|other X’s.
3. Use Fit Y by X to plot eY|other X’s vs. epH|other X’s.

Figure 8 shows the partial regression plot for pH. The scatter plot is inspected to look for non-linearity, outliers, etc. In this case, the relationship appears to be linear, and there are no major problems with the scatter, except perhaps that the distribution of the deviations around the line seems to be asymmetric. This will probably be taken care of by a transformation. Inspection of the rest of the partial plots is left as an exercise. They should not show any grave lack of linearity, although the plots will show some problematic observations, which will be fully explored as outliers.

8:1.6 Outliers.

There are two types of outliers, just as in simple regression: outliers in the Y dimension and outliers in the X dimension. In MLR the X dimension actually encompasses several dimensions, but the concept is the same. Outliers in the Y dimension are identified by studying the residuals. Outliers in the X dimension are identified by studying the hats. Observations can be outliers of either or both types, but they may not be influential. Non-influential outliers are usually not a concern. To determine if an outlier is influential we study the Cook’s D statistic for each observation.

In MLR we have only one set of random variables that are assumed to have identical properties, the errors. Therefore, it is not necessary to study multivariate outliers in the strict sense. When we study true multivariate statistics, such as in MANOVA, it will be necessary to use the Mahalanobis test as described in Lecture 4.

8:1.6.1 Outliers in the Y dimension.
Using the Table … Sort command (Figure 9), sort the table in decreasing order of studentized residuals (str). The sorting only makes outliers easier to locate; it is not necessary. The str’s should follow a t distribution. We can calculate the P-value for each str by creating a new column with the following formula:

T Distribution[Studentized Resid bmss, 39]

This formula (Figures 10 and 11) gives the cumulative probability of a value less than or equal to the observed residual, using a t distribution with 39 degrees of freedom, which is the dfe for the model with all variables. There are 5 observations with probability values greater than 0.975 or smaller than 0.025. The number expected by chance is 45*0.05=2.25, so there are more outliers than expected by chance. However, using a set probability of 0.001, no outliers are found. In any case, after all the observations with an extreme probability are checked for correctness, no specific problems of coding or other errors are found, so there is no reason to eliminate any observations.

Further analysis of the influence of each suspect observation can be done by inspecting the Cook’s D statistic for each observation. Cook’s D is a combined measure of the impact that each observation has on all regression coefficients. An observation may be overly influential if its D>F(0.5, p, n-p), where p is the number of parameters and n is the number of observations. In the Spartina dataset with the 5 variables considered, the critical value is F(0.5, 6, 39)=0.907. On this basis, there are no influential observations, because Cook’s D is smaller than the critical value for all observations.

8:1.7 Collinearity.

The first thing to check is the VIF. The VIF was obtained by right-clicking on the parameter estimates table and choosing Columns… VIF.
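For reference, the VIF of a predictor is conventionally defined from the coefficient of determination obtained when that predictor is regressed on all the other predictors. The symbol $R_j^2$ is notation introduced here for that quantity; it does not appear in the lecture.

```latex
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}
```

Under this definition, a VIF of 10 corresponds to $R_j^2 = 0.9$, that is, 90% of the variation in $X_j$ is explained by the other predictors.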
A VIF greater than 10 is interpreted as an indicator of severe collinearity and excessive inflation of the variance of the estimated betas. In this Spartina example all values are below 10, so no serious problem is present (Figure 12). As seen in the example of variable selection, out of the five variables considered, a good choice of model would include only pH and Na. If that model were used, collinearity would practically disappear (Figure 13). Whether one chooses to reduce collinearity beyond that present in the model with all 5 variables depends on the goals of the regression. If good, low-variance estimates of the regression coefficients are necessary, one can use a model with 2 or 3 variables, or perform biased regression using Principal Components Regression or Ridge Regression.

8:1.7.1 Outliers in the X dimension.

In order to detect outliers in the X dimension, we explore the hats. These values come from the main diagonal of the hat matrix and depend only on the values of the predictor or X variables. Values that are greater than 2p/n (where p is the number of parameters and n is the number of observations) are considered as having a large leverage and can potentially affect the model negatively. For example, an observation with an extreme hat value can dramatically and artificially reduce the error of the estimates and predictions. In the Spartina example, the critical value for the hats is 2*6/45=0.27. There are two observations that exceed this value, and only one is much larger. Cross-checking its Cook’s D value, we see that the observation does not have much influence, so no further action is required.

8:1.8 Summary of diagnostic and remedial measures.

The complete diagnostics resulted in the identification of non-normality of the errors and lack of homogeneity of variance. In addition, we found a few observations that are borderline outliers, and a little collinearity.
The first action is to apply a transformation that we hope will fix both problems at once and will reduce the outliers. Based on the distribution of the residuals, we guess that a square root or a logarithmic transformation may work. The residuals after performing each transformation are presented in Figure 14.

Neither transformation fixed the non-normality, so we try additional transformations. The strength of the transformation can be regulated by rescaling and translating the range of the data according to a linear formula Y’=f(a+bY), where a and b are arbitrary coefficients selected to achieve normality, and f is either the square root or the log transformation. It turns out that Y’=sqrt(bmss-200) has residuals that are not different from normal. The regression of Y’ on the predictors gives a similar overall R2 and has no outliers. At this point, the process of checking the rest of the assumptions should be repeated, to make sure that the new variable meets all assumptions.

If some assumptions cannot be met, the method is robust and can still be applied. An alternative is to use bootstrapping to generate confidence intervals and to test hypotheses based on the model. Bootstrapping will be considered in detail in a future lecture. Here, it is sufficient to understand that it is a method to make inferences that has a minimum of assumptions.

8:2 Model Validation.

After all transformations and remedial measures have been applied, and after the final variables to keep in the model have been selected, the model has to be validated. Validation is necessary because in the course of building the model and applying remedial measures, the probability levels provided by the analysis lose their meaning. In general, after all the manipulations the model obtained will look much better than it actually is.
Model validation provides a means to check how good the model actually is, and to correct the probability levels. There are three ways to validate and assess models:

1. Collect new data and apply the model to the new data.
2. Compare the model to previous empirical results, theories, or simulation results.
3. Check validity with part of the data, using the model developed independently with another part of the data.

In options 1 and 3 the original model can be assessed by calculating the Mean Squared Prediction Residual (MSPR):

$$\mathrm{MSPR} = \frac{\sum_{i=1}^{n^*} (Y_i - \hat{Y}_i)^2}{n^*}$$

where $n^*$ is the number of observations in the validation data set, $Y_i$ is the observed value for the ith observation in the validation data set, and $\hat{Y}_i$ is the predicted value for the same observation, but based on the model derived with the model-building or “training” data set. If the MSPR is not too much larger than the original MSE, then the model is valid. If the MSPR is much larger, the model should be improved. Meanwhile, the MSPR and not the MSE should be used as a true estimate of the variance for predictions.

The third option can be performed as data splitting, with double cross-validation, if the number of observations is large relative to the number of variables (more than 20 observations per variable). The data are randomly split into two halves and each half is used to test the validity of the model developed with the other half. The PRESS statistic, the MSPR and direct comparisons between estimated coefficients can be used to check if the models are similar. See page 229 of RP book.

Test for significance of bias.

This is another test that can be used to determine if the model is validated or not.
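The MSPR calculation described in this section can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `mspr` and all numbers are hypothetical, and the predictions are assumed to come from a model fitted to a separate training data set.

```python
# Sketch of the MSPR calculation; the function name and data are hypothetical.

def mspr(observed, predicted):
    """Mean squared prediction residual: sum((Y_i - Yhat_i)^2) / n*."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((y - yhat) ** 2 for y, yhat in zip(observed, predicted)) / len(observed)

# Hypothetical validation set: observed Y and predictions from the training model.
y_validation = [10.0, 12.0, 9.0, 15.0]
yhat_validation = [11.0, 11.0, 10.0, 13.0]

value = mspr(y_validation, yhat_validation)
print(value)  # (1 + 1 + 1 + 4) / 4 = 1.75
```

The resulting value would then be compared with the MSE of the model fitted to the training data.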