

Transcript

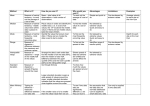

Data Analysis and Presentation
By Temtim Assefa

Quantitative Data Analysis
• Data analysis refers to converting raw data into meaningful information by reducing the size of the data.
• There are two common types of analysis:
1. Descriptive statistics: describe, summarize, or explain a given set of data.
2. Inferential statistics: use statistics computed from a sample to infer about the population.
– Inferential statistics is concerned with making inferences from samples about the populations from which they were drawn.

Common data analysis techniques
1. Frequency distribution
2. Measures of central tendency
3. Measures of dispersion
4. Correlation
5. Regression
6. And more

Frequency distribution
• A frequency distribution is simply a table in which the data are grouped into classes and the number of cases falling in each class is recorded.
• It shows the frequency of occurrence of the different values of a single phenomenon.
• Main purposes:
1. To facilitate the analysis of data.
2. To estimate frequencies of the unknown population distribution from the distribution of sample data.
3. To facilitate the computation of various statistical measures.

Example: Frequency Distribution
• In a survey of 30 organizations, the number of computers registered in each organization is given in the following table.
• This data has no meaning unless it is summarized in some form.

Example
• The following table shows the frequency distribution of the number of computers.
[Table: frequency distribution of the number of computers]

Example …
• The above table can give us meaningful information, such as:
– How many computers do most organizations have?
– How many organizations do not have computers?
– How many organizations have more than five computers?
– Why is the computer distribution not the same in all organizations?
– And other questions

Continuous frequency distribution
• A continuous frequency distribution is constructed when the variable does not take discrete values such as the number of computers.
• Examples are age and salary, which take continuous values.

Constructing a frequency table
• The number of classes should preferably be between 5 and 20, although this is not a rigid rule.
• Preferably, class intervals should be five or a multiple of five, such as 10, 20, 25, or 100.
• The starting point, i.e. the lower limit of the first class, should be zero, five, or a multiple of five.
• To ensure continuity and to get correct class intervals, adopt the “exclusive” method, in which the upper limit of a class is not counted in that class.
• Wherever possible, use class intervals of equal size.

Constructing …
• You can create a frequency table with two variables. This is called a bivariate frequency table.
[Table: number of IT staff (<10, 10-50, >50) by type of organization (Private, Government, Non-government); cell values as extracted: 15, 0, 0, 5, 10, 30, 0, 50, 5]

Graphs
• You can plot your frequency distribution using a bar graph, pie chart, frequency polygon, or other types of chart.
• Computer import in Ethiopia in 2010:

| Country of origin | Computer import |
|---|---|
| China | 62 |
| Japan | 47 |
| Germany | 35 |
| India | 16 |
| USA | 6 |

[Bar graph: computer import by country of origin]

Measures of central tendency
• The mode is the value that occurs most frequently.
• For nominal (categorical) data, it is the only measure of central tendency that can be interpreted sensibly.
• The median identifies the midpoint of the data.

Central Tendency …
• The mean is a measure of central tendency.
• It includes all data values in its calculation.
Mean = sum of observations / total number of observations
• The mean for grouped data is obtained from the following formula:
$$\bar{x} = \frac{\sum f x}{N}$$
• where $x$ = the mid-point of an individual class
• $f$ = the frequency of an individual class
• $N$ = the sum of the frequencies, or total frequency.

Mean
• Comparing the means of two groups is one way to assess the association between two variables.
• Assume an organization (X) that uses a web-based sales service and another organization (Y) that uses a traditional rented sales office.
• X's average monthly sales are 10,000 birr, while Y's average monthly sales are 7,000 birr.
• If the difference between the means is significant, we may conclude that the use of the web-based sales service is associated with higher sales performance for organization X.
• You can apply a t-test to check the statistical significance of the difference.

Exercise
• Do the following exercise for the IT staff data of 13 organizations, named O1 to O13:
• 25, 18, 20, 10, 8, 30, 42, 20, 53, 25, 10, 20, 42
• What is the mode?
• What is the median?
• What is the mean?
• Convert the data into a frequency table.
• Plot the data on a bar graph and a pie chart.
• What do you interpret from the data?

Measures of Dispersion
• Measures of central tendency serve to locate the center of the distribution.
• They do not measure how the items are spread out on either side of the center.
• This characteristic of a frequency distribution is commonly referred to as dispersion.
• Small dispersion indicates high uniformity of the items.
• Large dispersion indicates less uniformity.
• Low variation (high uniformity) is a desirable characteristic for variables such as income distribution and computer access by households.

Type of measure of dispersion
• There are two types:
1. Absolute measures of dispersion
2. Relative measures of dispersion
• An absolute measure of dispersion indicates the amount of variation in a set of values in terms of the units of the observations. For example, if computers are measured by number, it shows dispersion as a number of computers.
• Relative measures of dispersion are free from the units of measurement of the observations.
They may be expressed, for example, as a percentage.
• The range is an absolute measure, while the coefficient of variation is a relative measure.

Dispersion …
• There are different types of dispersion measures.
• We look at the standard deviation and the coefficient of variation.
• Karl Pearson introduced the concept of standard deviation in 1893.
• The standard deviation is the most frequently used measure.
• It is the square root of the mean of the squared deviations from the mean.
• The square of the standard deviation is called the variance.

Standard Deviation
• It is given by the formula
$$s = \sqrt{\frac{\sum (x - \bar{x})^2}{n - 1}}$$
• Calculate the standard deviation from the following data.
• 14, 22, 9, 15, 20, 17, 12, 11
• The mean is 15 and the sum of squared deviations is 140. Dividing by $n = 8$ gives the answer 4.18; dividing by $n - 1 = 7$, as in the formula above, gives 4.47.

Interpretation
• We expect about two-thirds of the scores in a sample to lie within one standard deviation of the mean.
• Generally, most of the scores in a normal distribution cluster fairly close to the mean.
• There are fewer and fewer scores as you move away from the mean in either direction.
• In a normal distribution, 68.27% of the scores fall within one standard deviation of the mean,
• 95.45% fall within two standard deviations, and
• 99.73% fall within three standard deviations.

Advantage of SD
• Assume the mean is 10.0 and the standard deviation is 3.36.
• One standard deviation above the mean is 13.36 and one standard deviation below the mean is 6.64.
• The standard deviation takes account of all of the scores and provides a sensitive measure of dispersion.
• It also has the advantage that it describes the spread of scores in a normal distribution with great precision.
• The most obvious disadvantage of the standard deviation is that it is much harder to work out than other measures of dispersion, such as the range and percentiles.

Coefficient of Variation
• The standard deviation is an absolute measure of dispersion.
• However, it may not always be applicable.
• The standard deviation of the number of computers cannot be compared with the standard deviation of students' computer use, because the two are expressed in different units.
• For the purpose of comparison, the standard deviation must be converted into a relative measure of dispersion: the coefficient of variation.
• It is obtained by dividing the standard deviation by the mean and multiplying by 100:
$$\text{coefficient of variation} = \frac{s}{\bar{x}} \times 100$$

Skewness
• Skewness means ‘lack of symmetry’.
• We study skewness to get an idea of the shape of the curve that can be drawn from the given data.
• If mean = median = mode in a distribution, that distribution is known as a symmetrical distribution.
• In a symmetrical distribution, the spread of the frequencies is the same on both sides of the center point of the curve.

Symmetrical distribution
• Mean = Median = Mode
[Figures: positively skewed distribution; negatively skewed distribution]

Measures of Skewness
1. Karl Pearson's coefficient of skewness
2. Bowley's coefficient of skewness
3. Measure of skewness based on moments
We look at Karl Pearson's measure; read about the others in the textbook.
• Karl Pearson's absolute measure of skewness = mean − mode.
• It is not suitable for comparing data in different units of measurement.
• Instead, use the relative measure, Karl Pearson's coefficient of skewness:
$$\text{skewness} = \frac{\text{mean} - \text{mode}}{\text{standard deviation}}$$
• When the mode is ill-defined, we use
$$\text{skewness} = \frac{3(\text{mean} - \text{median})}{\text{standard deviation}}$$

Kurtosis
• Frequency curves show different degrees of flatness or peakedness, called kurtosis.
• A measure of kurtosis tells us the extent to which a distribution is more peaked or more flat-topped than the normal curve.
• The normal curve, which is symmetrical and bell-shaped, is designated mesokurtic.
• If a curve is relatively narrower and more peaked at the top, it is designated leptokurtic.
• If the frequency curve is flatter than the normal curve, it is designated platykurtic.
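
The dispersion and skewness formulas above can be checked with a few lines of Python. The following is a minimal sketch, not part of the original slides: it assumes the eight values from the standard deviation example (14, 22, 9, 15, 20, 17, 12, 11) and uses only the standard-library `statistics` module. The variable names are illustrative. Because every value in this data set occurs once, the mode is ill-defined, so the sketch uses the median-based form of Pearson's coefficient.

```python
import statistics

# Data from the standard deviation example in the slides.
scores = [14, 22, 9, 15, 20, 17, 12, 11]

mean = statistics.mean(scores)      # arithmetic mean: 15
median = statistics.median(scores)  # middle of the sorted data: 14.5

# Sample standard deviation divides the squared deviations by n - 1.
sample_sd = statistics.stdev(scores)       # about 4.47
# Population standard deviation divides by n.
population_sd = statistics.pstdev(scores)  # about 4.18

# Coefficient of variation: a unit-free (relative) measure, in percent.
cv = sample_sd / mean * 100

# Pearson's coefficient of skewness, median-based form,
# used here because the mode is ill-defined for this data.
skewness = 3 * (mean - median) / sample_sd

print(f"mean={mean}, median={median}")
print(f"sample SD={sample_sd:.2f}, population SD={population_sd:.2f}")
print(f"coefficient of variation={cv:.1f}%")
print(f"Pearson skewness={skewness:.3f}")
```

The two standard deviations differ only in the divisor, which is why the same data can yield either 4.47 or 4.18. A small positive skewness indicates a slightly longer tail toward the higher values.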
Interpretation
• Many real-world quantities approximately follow a normal distribution, which has a bell shape.

Normal dist…
• This implies that about 68% of the population lies within $1\sigma$ of the mean,
• about 95% of the population lies within $2\sigma$, and
• about 99.7% of the population lies within $3\sigma$.
• So you need to select a confidence limit in order to say whether your result is statistically significant or not.
• For example, with a 95% confidence limit, a difference between two groups is treated as statistically significant only if it lies in the outer 5% of the distribution, that is, more than about 2 standard deviations from the mean.

Correlation
• Correlation is used to measure the linear association between two variables.
• For example, assume X is IT skill and Y is IT use. Is there an association between these two variables?
$$r = \frac{\sum (x - \bar{x})(y - \bar{y})}{\sqrt{\sum (x - \bar{x})^2 \sum (y - \bar{y})^2}}$$

Correlation …
• Correlation expresses the inter-dependence of two sets of variables upon each other.
• One variable may be called the independent variable (IV) and the other the dependent variable (DV).
• A change in the IV has an influence on the value of the DV.
• For example, IT use will increase organizational productivity because staff have better access to information and improve their skills and knowledge.

Correlation Lines
[Figures: correlation lines showing perfect correlation and no correlation]

Type of Correlation
1. Simple correlation
2. Multiple correlation
3. Partial correlation
• In simple correlation, we study only two variables, for example the number of computers and organizational efficiency.
• In multiple correlation, we study more than two variables simultaneously, for example usefulness, ease of use, and IT adoption.
• Partial correlation refers to the study of two variables while excluding the effect of some other variable.

Karl Pearson's coefficient of correlation
• Karl Pearson, a great biometrician and statistician, suggested a mathematical method for measuring the magnitude of the linear relationship between two variables.
• Karl Pearson's coefficient of correlation is the most widely used method of correlation:
$$r = \frac{\sum XY}{n\,\sigma_x \sigma_y} = \frac{\sum XY}{\sqrt{\sum X^2 \cdot \sum Y^2}}$$
where $X = x - \bar{x}$, $Y = y - \bar{y}$, and $\sigma_x$, $\sigma_y$ are the standard deviations of $x$ and $y$ computed with divisor $n$.

Spearman Rank Correlation
• Developed by Charles Edward Spearman in 1904.
• It is used when no assumption is made about the parameters of the population.
• This method is based on ranks.
• It is useful for studying qualitative attributes such as honesty, colour, beauty, intelligence, character, and morality.
• The individuals in the group can be arranged in order, giving each individual a number that shows his or her rank in the group.

Formula
$$r = 1 - \frac{6 \sum D^2}{n^3 - n}$$
• where $\sum D^2$ = the sum of squares of the differences between the pairs of ranks
• $n$ = the number of pairs of observations.
• The value of $r$ lies between −1 and +1. If $r = +1$, there is complete agreement in the order of ranks and the ranks run in the same direction. If $r = -1$, there is complete disagreement in the order of ranks and they run in opposite directions.

Exercise
Calculate the correlation for the following data.

| Rank of X | X | Y | Rank of Y | D | D² |
|---|---|---|---|---|---|
| 7 | 56 | 34 | 8 | -1 | 1 |
| 4 | 78 | 65 | 6 | -2 | 4 |
| 6 | 65 | 67 | 5 | 1 | 1 |
| 2 | 89 | 90 | 1 | 1 | 1 |
| 1 | 93 | 86 | 2 | -1 | 1 |
| 9 | 24 | 30 | 9 | 0 | 0 |
| 3 | 87 | 80 | 3 | 0 | 0 |
| 8 | 44 | 50 | 7 | 1 | 1 |
| 5 | 74 | 70 | 4 | 1 | 1 |

Here $\sum D^2 = 10$ and $n = 9$, so $r = 1 - \frac{6 \times 10}{9^3 - 9} = 1 - \frac{60}{720} \approx 0.92$.

Advantage of Correlation
• It is a simple and attractive method of finding the nature of the correlation between two variables.
• It is a non-mathematical method of studying correlation, and it is easy to understand.
• It is not affected by extreme items.
• It is the first step in finding out the relation between the two variables.
• We can get a rough idea at a glance of whether the correlation is positive or negative.
• But we cannot get the exact degree of correlation between the two variables.

The Pearson Chi-square
• It is the most common coefficient of association, calculated to assess the significance of the relationship between categorical variables.
• It is used to test the null hypothesis that observations are independent of each other.
• It is computed from the differences between the observed frequencies shown in the cells of the cross-tabulation and the expected frequencies that would be obtained if the variables were truly independent.

Chi-square …
$$\chi^2 = \sum \frac{(O - E)^2}{E}$$
where $O$ is the observed value, $E$ is the expected value, and $\chi^2$ measures the association.
• The $\chi^2$ value and its significance level depend on the total number of observations and the number of cells in the table.

| Day | Observed | Expected | Difference |
|---|---|---|---|
| M | 3 | 6 | -3 |
| T | 5 | 6 | -1 |
| W | 7 | 6 | 1 |
| Th | 6 | 6 | 0 |
| F | 9 | 6 | 3 |
| Total | 30 | 30 | 0 |

• Applying the formula to the five rows gives $\chi^2 = (9 + 1 + 1 + 0 + 9)/6 = 20/6 \approx 3.33$; the value $\chi^2 = 0.17$ shown on the slide does not match these frequencies.
• The degrees of freedom for a single row of categories like this are the number of categories minus one (here $5 - 1 = 4$); for a cross-tabulation they are (rows − 1) × (columns − 1).

Assumptions
• Ensure that every observation is independent of every other observation; in other words, each individual should be counted once and in only one category.
• Make sure that each observation is included in the appropriate category; it is not permitted to omit some of the observations (e.g. those from individuals with intermediate levels of cholesterol).
• The total sample should exceed 20; otherwise, the chi-squared test as described here is not applicable. More precisely, the minimum expected frequency should be at least 5 in every cell.
• The significance level of a chi-squared test is assessed by consulting the one-tailed values in the Appendix table if a specific form of association has been predicted and that form was obtained. However, the two-tailed values should always be consulted if there are more than two categories on either dimension.
• Remember that showing that there is an association is not the same as showing that there is a causal effect; for example, the association between a healthy diet and low cholesterol does not demonstrate that a healthy diet causes low cholesterol.

Parametric Tests
There are many tests; some examples are:
• Pearson correlation coefficient
• t-test (between two populations)
• ANOVA
• Linear regression

ANOVA
• Analysis of variance (ANOVA) is one of the statistical tools developed by Professor R.A. Fisher.
• ANOVA is used to test the homogeneity of several population means.
• Suppose five different software systems were used by five business organizations to increase profit, for instance by increasing customer satisfaction, sales, and the like.
• In such a situation, we are interested in finding out whether the effects of these software systems on profit are significantly different or not.

F Statistic
• Like any other test, the ANOVA test has its own test statistic.
• The statistic for ANOVA is called the F statistic, which we get from the F test.
• The F statistic takes into consideration:
– the number of samples taken, or groups ($I$)
– the total number of observations across all groups ($N$)
– the sample size of each sample ($n_1, n_2, \dots, n_I$)
– the means of the samples ($\bar{x}_1, \bar{x}_2, \dots, \bar{x}_I$)
– the standard deviations of each sample ($s_1, s_2, \dots, s_I$)

F Statistic Equation
Rewritten as a formula, the F statistic looks like this:
$$F = \frac{\left[ n_1(\bar{x}_1 - \bar{x})^2 + n_2(\bar{x}_2 - \bar{x})^2 + \dots + n_I(\bar{x}_I - \bar{x})^2 \right] / (I - 1)}{\left[ (n_1 - 1)s_1^2 + (n_2 - 1)s_2^2 + \dots + (n_I - 1)s_I^2 \right] / (N - I)}$$
The numerator weighs the (squared) deviations of the sample means from the overall mean $\bar{x}$; the denominator weighs the (squared) standard deviations.

Calculate ANOVA for the following groups
The data for IT use in three organizations is:

| Level | X1 | X2 | X3 |
|---|---|---|---|
| n | 28 | 26 | 8 |
| Mean | 27.1 hrs | 20.4 hrs | 23.1 hrs |
| StDev | 2.6 hrs | 2.9 hrs | 2.5 hrs |

Regression
• Regression is used to estimate (predict) the value of one variable given the value of another.
• The variable predicted on the basis of other variables is called the ‘dependent’ or ‘explained’ variable, and the other the ‘independent’ or ‘predicting’ variable.
• The prediction is based on the average relationship derived statistically by regression analysis.
• For example, if we know that advertising and sales are correlated, we may find the expected amount of sales for a given advertising expenditure, or the amount of expenditure required to attain a given amount of sales.

Regression
• Regression is the measure of the average relationship between two or more variables in terms of the original units of the data.
• Types of regression:
1. Simple and Multiple
2. Linear and Non-linear
3. Total and Partial

Linear and Non-linear
• Linear relationships are based on a straight-line trend, the equation of which has no power higher than one. Remember that a linear relationship can be either simple or multiple.
• Normally a linear relationship is used because, besides its simplicity, it has good predictive value: a linear trend can easily be projected into the future.
• In the case of a non-linear relationship, curved trend lines are derived. Their equations are of higher degree, for example parabolic.

Regression analysis
• The goal of regression analysis is to develop a regression equation from which we can predict one score on the basis of one or more other scores.
• For example, it can be used to predict a job applicant's potential job performance on the basis of test scores and other factors.

Linear regression equation
• The linear regression equation of Y on X is $Y = a + bX$ … (1)
• and of X on Y is $X = a + bY$ … (2)
where $a$ and $b$ are constants.
• In a regression equation, $Y$ is the dependent variable, also called the criterion or outcome variable, which we would like to predict.
• $X$ is the variable we use to predict $Y$; it is called the predictor variable.
• $a$ is called the regression constant (or beta-zero), and is the y-intercept of the line that best fits the data in the scatter plot.
• $b$ is the regression coefficient: the slope of the line that best represents the relationship between the predictor variable ($X$) and the criterion variable ($Y$).
• You can also use multiple regression: $Y = a + b_1 x_1 + b_2 x_2 + \dots + b_n x_n$

Example
• Find the regression equation for the following data.

| X | Y |
|---|---|
| 6 | 9 |
| 2 | 11 |
| 10 | 5 |
| 4 | 8 |
| 8 | 7 |

Solution
• The regression equation of Y on X is $Y = a + bX$, and the normal equations are
$$\sum Y = na + b \sum X$$
$$\sum XY = a \sum X + b \sum X^2$$
• where $n = 5$, $\sum Y = 40$, $\sum X = 30$, $\sum XY = 214$, $\sum X^2 = 220$

Regression
• Substituting the values, we get
• $40 = 5a + 30b$ … (equation 1)
• $214 = 30a + 220b$ … (equation 2)
• Multiplying equation 1 by 6:
• $240 = 30a + 180b$ … (equation 3)
• Subtracting equation 3 from equation 2:
• $-26 = 40b$, so $b = -26/40 = -0.65$
• Now, substituting the value of $b$ in equation 1:
• $40 = 5a - 19.5$
• $5a = 59.5$
• $a = 59.5/5 = 11.9$

Regression
• Hence, the required regression line of Y on X is
• $Y = 11.9 - 0.65X$
• This implies that:
• 11.9 is the constant, or intercept. When X is zero, the value of Y is 11.9.
• −0.65 is the slope. A one-unit increase in X decreases the predicted value of Y by 0.65.
• Likewise, a two-unit increase in X decreases the predicted value of Y by 1.30.
• The result is generalized to the population by checking its statistical significance.

Statistical significance
• What happens after we have chosen a statistical test, analysed our data, and want to interpret our findings? We use the results of the test to choose between the following:
1. Alternative (experimental) hypothesis (e.g. loud noise disrupts learning).
2. Null hypothesis, which asserts that there is no difference between conditions (e.g. loud noise has no effect on learning).
• If the statistical test indicates that there is only a small probability of the difference between two conditions (e.g. loud noise vs. no noise) having occurred if the null hypothesis were true, then we reject the null hypothesis in favor of the experimental hypothesis.

Statistical …
• Psychologists generally use the 5% (0.05) level of statistical significance. What this means is that the null hypothesis is rejected (and the experimental hypothesis is accepted) if the probability that the results were due to chance alone is 5% or less.
This is often expressed as $p \le 0.05$.
• It is possible to use other levels of statistical significance. With a stricter level such as 1% ($p \le 0.01$), the null hypothesis can be rejected with greater confidence; with a looser level such as 10%, it is rejected with less confidence.
• The choice of level leads to Type I and Type II errors:
– Type I error: we may reject the null hypothesis in favour of the experimental hypothesis even though the findings are actually due to chance; the probability of this happening is given by the level of statistical significance that is selected.
– Type II error: we may retain the null hypothesis even though the experimental hypothesis is actually correct.

Statistical significance …
• Researchers are interested not only in the correlation between two variables, but also in whether the value of r they obtain is statistically significant.
• Statistical significance exists when a correlation coefficient calculated on a sample has a very low probability of being zero in the population.
• Assume we get a correlation of 0.4 between X and Y in our sample.
• How do we know that this r would not be zero (r = 0.0) if we took a census of the entire population?
• If the probability that our correlation is truly zero in the population is sufficiently low (usually less than .05),
• we refer to the correlation as statistically significant.

Techniques
• There are two methods of making generalizations about the population:
1. Mean method: by confidence interval
2. Statistical significance method: using different inferential statistics such as chi-square, ANOVA, regression, etc.

Confidence interval Method
• When you compute a confidence interval on the mean, you compute the mean of a sample in order to estimate the mean of the population.
• Clearly, if you already knew the population mean, there would be no need for a confidence interval.
• Assume that the weights of 10-year-old children are normally distributed with a mean of 90 and a standard deviation of 36.
• What is the sampling distribution of the mean for a sample size of 9?
• The formula for the standard error is given by
$$\sigma_M = \frac{\sigma}{\sqrt{N}}$$
• The sampling distribution of the mean has a mean of 90 and a standard deviation of $36/\sqrt{9} = 36/3 = 12$.
• Note that the standard deviation of a sampling distribution is its standard error.

Confidence Interval
• The shaded area represents the middle 95% of the distribution and stretches from 66.48 to 113.52. These confidence limits were computed by adding and subtracting 1.96 standard deviations to/from the mean of 90 as follows:
90 − (1.96)(12) = 66.48
90 + (1.96)(12) = 113.52
• The value of 1.96 is based on the fact that 95% of the area of a normal distribution is within 1.96 standard deviations of the mean; 12 is the standard error of the mean.

Statistical Significance
• Formulas and tables for testing the statistical significance of correlation coefficients can be found in many statistics books.
• Imagine that you obtained a value of r = .32 based on a sample of 100 participants.
• Looking down the left-hand column of such a table, find the number of participants (100).
• Looking at the other column, we see that the minimum value of r that is significant with 100 participants is .16.
• Because our correlation coefficient (.32) exceeds .16, we conclude that the population correlation is very unlikely to be zero (in fact, there is less than a 5% chance that the population correlation is zero).

Statistical …
• Keep in mind that, with large samples, even very small correlations are statistically significant.
• Thus, finding that a particular r is significant tells us only that it is very unlikely to be .00 in the population; it does not tell us whether the relationship between the two variables is a strong or an important one.
• The strength of a correlation is assessed only by its magnitude, not by whether it is statistically significant.
• As a rule of thumb, behavioral researchers tend to regard correlations at or below about .10 as weak in magnitude (they account for only 1% of the variance), correlations around .30 as moderate in magnitude, and correlations over .50 as strong in magnitude.

Exercise on Data Analysis
The following data show the number of hours students spent studying on the Internet (X) and their scores on a test out of 10 (Y).
1. Calculate the correlation between the two variables.
2. Compute the regression equation and estimate the value of Y for each value of X given in Table 2.
3. Calculate the mean of the student test scores.
4. Calculate the standard deviation.
5. Calculate the confidence interval for the mean score of the total student population with 95% confidence.
6. Do you think that Internet use has an impact on student performance?

Table 1

| X | Y |
|---|---|
| 4 | 5 |
| 6 | 8 |
| 3 | 4 |
| 10 | 7 |
| 8 | 9 |

Table 2

| X |
|---|
| 8 |
| 10 |
| 7 |
| 20 |
| 15 |

References
• http://onlinestatbook.com/2/normal_distribution/normal_distribution.html
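
Worked check of the regression example. The sketch below is not part of the original slides. It re-derives the line Y = 11.9 − 0.65X from the worked regression example (X = 6, 2, 10, 4, 8 and Y = 9, 11, 5, 8, 7) by solving the two normal equations directly, and also computes Pearson's r with the deviation formula given in the Correlation section. The helper names `fit_line` and `pearson_r` are illustrative assumptions, and only the Python standard library is used.

```python
from math import sqrt


def fit_line(xs, ys):
    """Solve the normal equations for Y = a + bX and return (a, b)."""
    n = len(xs)
    sum_x, sum_y = sum(xs), sum(ys)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_x2 = sum(x * x for x in xs)
    # Eliminating a from the two normal equations gives the slope b.
    b = (n * sum_xy - sum_x * sum_y) / (n * sum_x2 - sum_x ** 2)
    # The first normal equation, sum(Y) = n*a + b*sum(X), then gives a.
    a = (sum_y - b * sum_x) / n
    return a, b


def pearson_r(xs, ys):
    """Pearson's r from deviations about the means."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dev_x = [x - mean_x for x in xs]
    dev_y = [y - mean_y for y in ys]
    numerator = sum(dx * dy for dx, dy in zip(dev_x, dev_y))
    denominator = sqrt(sum(dx * dx for dx in dev_x) * sum(dy * dy for dy in dev_y))
    return numerator / denominator


# Data from the worked regression example in the slides.
xs = [6, 2, 10, 4, 8]
ys = [9, 11, 5, 8, 7]

a, b = fit_line(xs, ys)
r = pearson_r(xs, ys)
print(f"Y = {a:.2f} + ({b:.2f})X")  # intercept 11.90, slope -0.65
print(f"r = {r:.3f}")               # strong negative correlation

# Predicted Y for a new X value (hypothetical input, for illustration only).
print(f"predicted Y at X = 5: {a + b * 5:.2f}")
```

The same two helpers could be applied to the exercise data in Table 1, with the resulting line then used to estimate Y for the X values in Table 2.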