* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Radial Basis Function Networks

Nervous system network models wikipedia , lookup

Central pattern generator wikipedia , lookup

Neural modeling fields wikipedia , lookup

Metastability in the brain wikipedia , lookup

Catastrophic interference wikipedia , lookup

Artificial neural network wikipedia , lookup

Pattern recognition wikipedia , lookup

Gene expression programming wikipedia , lookup

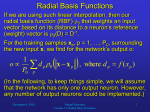

Convolutional neural network wikipedia , lookup

In the name of God Institute for advanced studies in basic sciences Radial Basis Function Networks Yousef Akhlaghi This seminar is an introduction to radial basis function networks as linear neural networks . Definition : Radial basis function (RBF) networks are a special class of single hidden-layer feed forward neural networks for application to problems of supervised learning. Reference: Radial Basis Function Networks, Mark J. Orre, Linear models have been studied in statistics for about 200 years and the theory is applicable to RBF networks which are just one particular type of linear model. However the fashion for neural networks which started in the mid-80’s has given rise to new names for concepts already familiar to statisticians. Equivalent terms in statistics and neural networks: Supervised Learning: Problem to be solved: Estimate a function from some example input-output pairs with little or no knowledge of the form of the function. DIFFERENT NAMES OF THIS PROBLEM: Nonparametric regression Function approximation System identification Inductive learning IN NEURAL NETWORK : Supervised learning The function is learned from the examples or training set contains elements which consist of paired values of the independent input variable and the dependent output variable. Two main regression problems in statistics: - parametric regression - nonparametric regression Parametric Regression: In parametric regression the form of the functional relationship between the dependent and independent variables is known but may contain parameters whose values are unknown and capable of being estimated from the training set. For example fitting a straight line: parameters to a bunch of points Important point: The free parameters as well as the dependent and independent variables have meaningful interpretations like initial concentration or rate. Nonparametric regression: There is no or very little a priori knowledge about the form of the true function which is being estimated. The function is still modeled using an equation containing free parameters but Typically this involves using many free parameters which have no physical meaning in relation to the problem. In parametric regression there is typically a small number of parameters and often they have physical interpretations. In neural networks including radial basis function networks: - Models are nonparametric and their weights and other parameters have no particular meaning in relation to the problems to which they are applied. -The primary goal is to estimate the underlying function or at least to estimate its output at certain desired values of the input Linear Models: A linear model for a function f (x) takes the form: The model ‘f ’ is expressed as a linear combination of a set of ‘m’ fixed functions often called basis functions by analogy with the concept of a vector being composed of a linear combination of basis vectors. Nonlinear Models: if the basis functions can change during the learning process then the model is nonlinear. An example Almost the simplest polynomial is the straight line: which is a linear model whose two basis functions are: and whose parameters (weights) are: The two main advantages of the linear character of RBF networks: 1- Keeping the mathematics simple it is just linear algebra (the linearly weighted structure of RBF networks) 2- There is no optimization by general purpose gradient descent algorithms (without involving nonlinear optimization). Radial Functions: Their characteristic feature is that their response decreases (or increases) monotonically with distance from a central point. The centre, the distance scale, and the precise shape of the radial function, are parameters of the model, all fixed if it is linear. A typical radial function is the Gaussian: Its parameters are c : centre r : width (spread) Local modelling with radial basis function networks B. Walczak , D.L. Massart Chemometrics and Intelligent Laboratory Systems 50 (2000) 179–198 From exact fitting to RBFNs RBF methods were originally developed for exact interpolation of a set of data points in a multidimensional space. The aim of exact interpolation is to project every input vector , onto the corresponding target , to find a function such that: According to the radial basis function approach, exact mapping can be performed using a set of m basis functions (one for each data point) with the form , where is some nonlinear function, and denotes distance between and , usually Euclidean distance. Then the output of the mapping can be presented as linear combinations of these basis functions: where denotes weights, basis function, respectively. and denote input object and the center of In matrix notation the above equation is: For a large class of functions, the matrix Φ is non-singular, and eq. 3 can be solved: The basis functions can have different forms. The most popular among them is the Gaussian function: controlling the smoothness properties of the interpolating function. || xi – xj || Euclidean Distance of center j from object i = [(xi1 - x1j)2 + (xi2–x2j)2 + …+ (xin-xnj)2]0.5 object center Distance (x) φ w x2 e x p ( 2) 2σ × x2 e x p ( 2) 2σ × x2 e x p ( 2) 2σ × # Hidd. nodes = # objects Exact Fitting Σ output In practice, we do not want exact modeling of the training data, as the constructed model would have a very poor predictive ability, due to fact that all details noise, outliers are modeled. To have a smooth interpolating function in which the number of basis functions is determined by the fundamental complexity of the data structure, some modifications to the exact interpolation method are required. 1) The number K of basis functions need not equal the number m of data points, and is typically much less than m. 2) Bias parameters are included in the linear sum. 3) The determination of suitable centers becomes part of the training process. 4) Instead of having a common width parameters, each basis function is given its own width σj whose value is also determined during training. By introducing at least the first two modifications to the exact interpolation method we obtain RBFN. Architecture of RBFN RBFN can be presented as a three-layer feedforward structure. • The input layer serves only as input distributor to the hidden layer. • Each node in the hidden layer is a radial function, its dimensionality being the same as the dimensionality of the input data. • The output is calculated by a linear combination . i.e. a weighted sum of the radial basis functions plus the bias, according to: In matrix notation: Nod1 object1 Nod2 Nodk bias Tr aining algor ithms RBF network parameters – The centers of the RBF activation functions – The spreads of the Gaussian RBF activation functions – The weights from the hidden to the output layer subset selection: Different subsets of basis functions can be drawn from the same fixed set of candidates. This is called subset selection in statistics. • forward selection – starts with an empty subset – added one basis function at a time (the one that most reduces the sum-squared-error) – until some chosen criterion stops • backward elimination – starts with the full subset – removed one basis function at a time ( the one that least increases the sum-squarederror) – until the chosen criterion stops decreasing Orthonormalization of basis functions: The Gram–Schmidt orthogonalization procedure is used to replace the k basis functions by the set of K orthogonal vectors describing the same space. Initially, the basis functions are centered on data objects. : , can The set of vectors ui , which are a linear combination of the functions be used directly to model y. To model y, the consecutive orthonormal vectors ui (i =1,2, . . . m) are introduced until the network performance reaches the desired level of approximation error. Nonlinear training algorithm: Apply the gradient descent method for finding centers, spread and weights, by minimizing the cost function (in most cases squared error). Back-propagation adapts iteratively the network parameters considering the derivatives of the cost function with respect to those parameters. Nonlinear neural network Drawback: Back-propagation algorithm may require several iterations and can get stuck into a local minima of the cost function Radial Basis Function Neural Net in MATLAB nnet-Toolbox function [net, tr] = newrb (p, t, goal, spread, mn, df) NEWRB adds neurons to the hidden layer of a radial basis network until it meets the specified mean squared error goal. P T GOAL SPREAD MN DF - RxQ matrix of Q input vectors. - SxQ matrix of Q target class vectors. - Mean squared error goal, default = 0.0. - Spread of radial basis functions, default = 1.0. - Maximum number of neurons, default is Q. - Number of neurons to add between displays, default = 25. The following steps are repeated until the network's mean squared error falls below GOAL or the maximum number of neurons are reached: 1) The network is simulated with random weight 2) The input vector with the greatest error is found 3) A neuron (basis function) is added with weights equal to that vector. 4) The output layer weights are redesigned to minimize error. THANKS